HairCLIP: Design Your Hair by Text and Reference Image (CVPR2022)

This repository hosts the official PyTorch implementation of the paper: “HairCLIP: Design Your Hair by Text and Reference Image”.

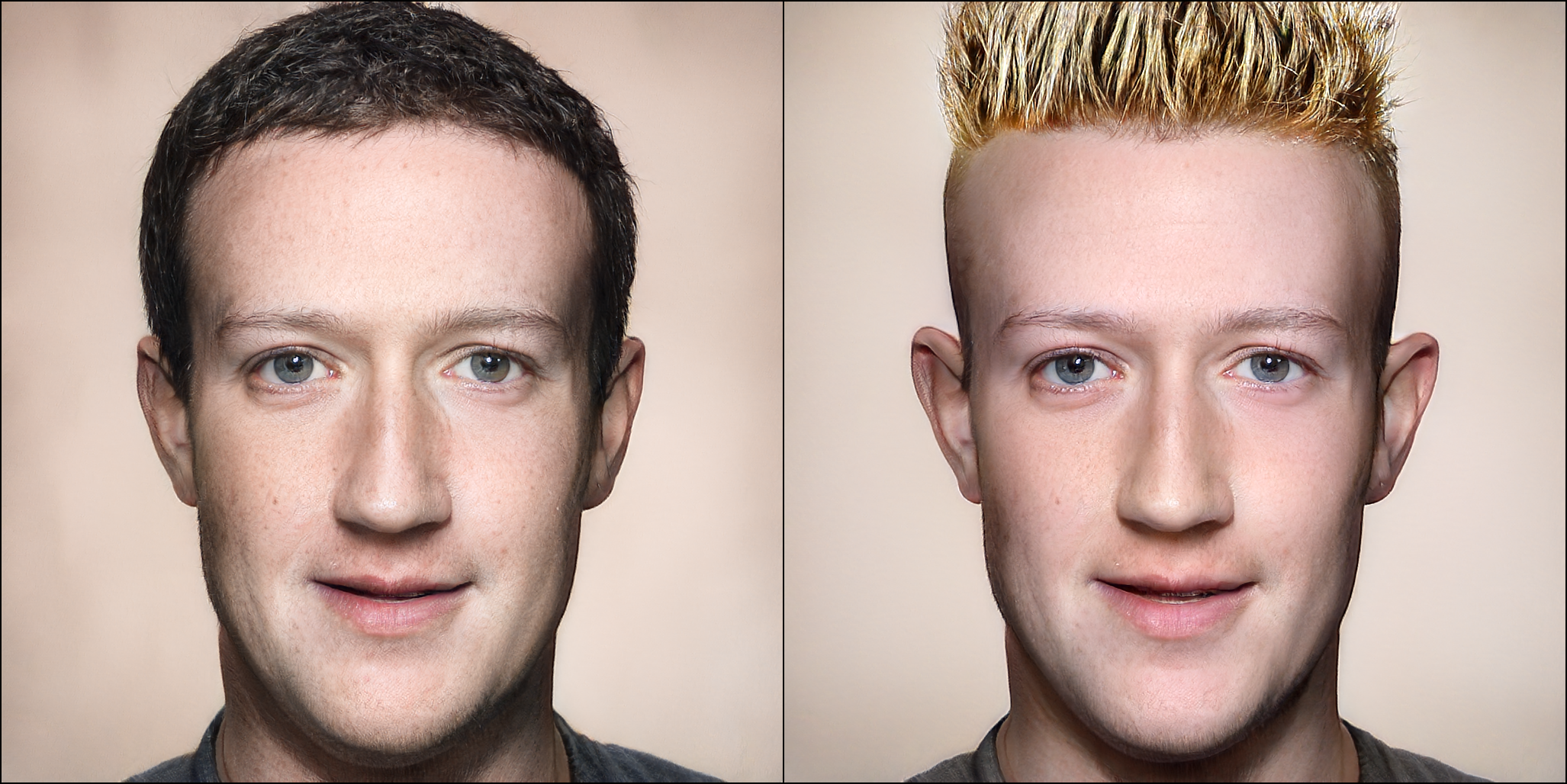

Our single framework supports hairstyle and hair color editing, either individually or jointly, and the conditional inputs can come from either the image domain or the text domain.

Acknowledgements

This code is based on StyleCLIP.

Citation

If you find our work useful for your research, please consider citing the following paper :)

@article{wei2022hairclip,
  title={Hairclip: Design your hair by text and reference image},
  author={Wei, Tianyi and Chen, Dongdong and Zhou, Wenbo and Liao, Jing and Tan, Zhentao and Yuan, Lu and Zhang, Weiming and Yu, Nenghai},
  journal={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year={2022}
}