Readme

This model doesn't have a readme.

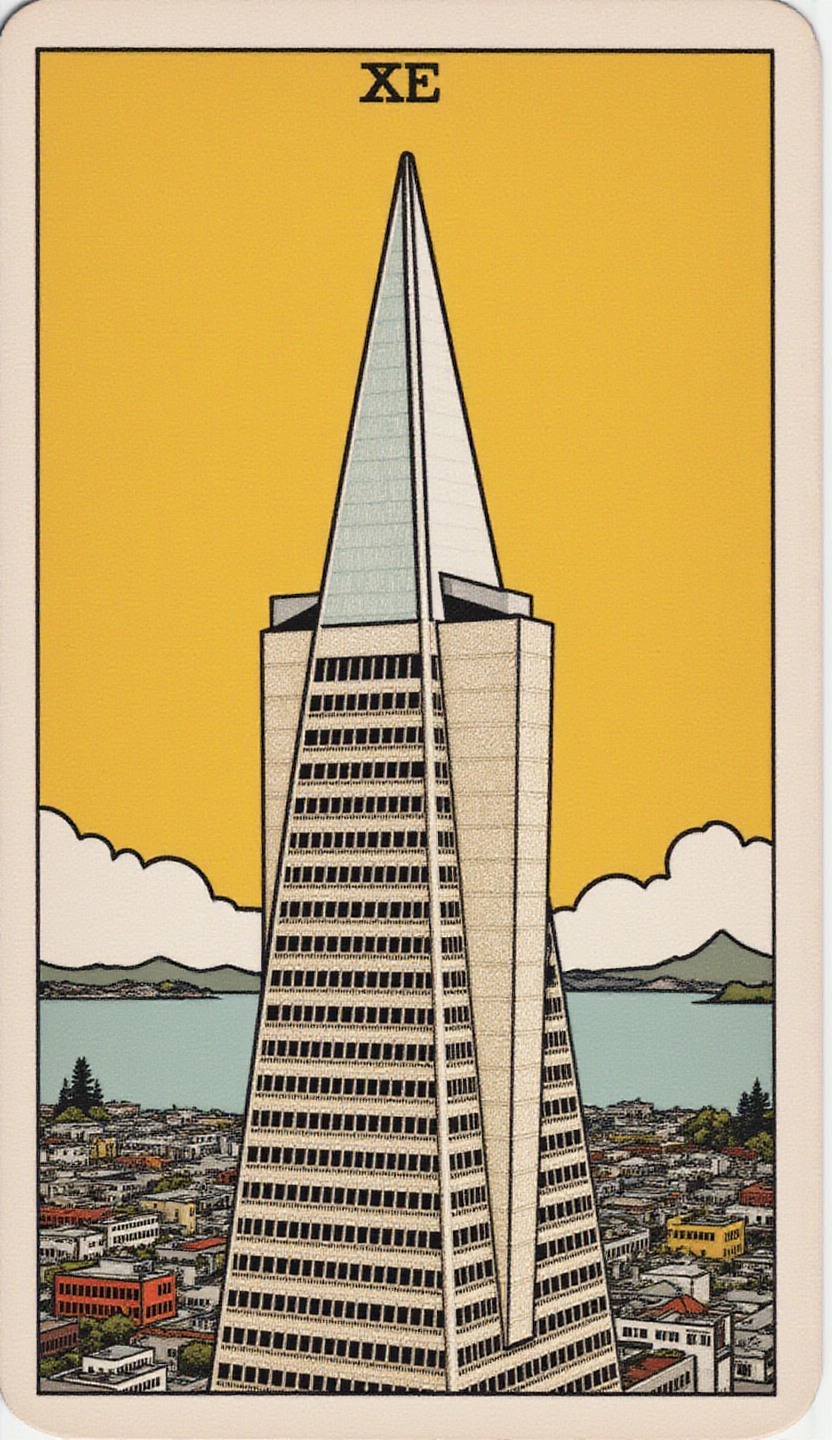

A Flux fine-tune of the Transamerica Pyramid building in San Francisco.

This model runs on NVIDIA H100 GPU hardware. There are not yet enough runs of this model to provide performance information.
