Readme

Model Description

MasaCtrl: Tuning-free Mutual Self-Attention Control for Consistent Image Synthesis and Editing by Tencent ARC Lab and University of Tokyo. This model uses xyn-ai/anything-v4.0 as the base model and can simultaneously perform image synthesis and editing.

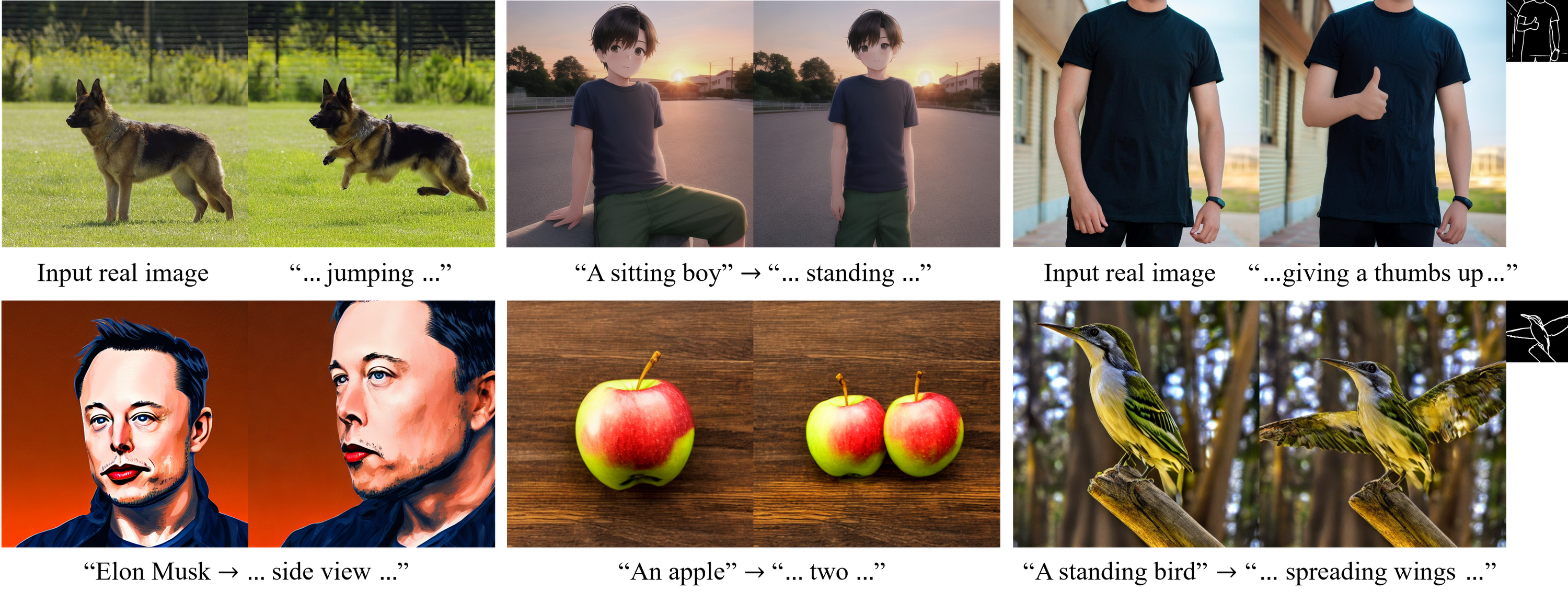

Abstract: Despite the success in large-scale text-to-image generation and text-conditioned image editing, existing methods still struggle to produce consistent generation and editing results. For example, generation approaches usually fail to synthesize multiple images of the same objects/characters but with different views or poses. Meanwhile, existing editing methods either fail to achieve effective complex non-rigid editing while maintaining the overall textures and identity, or require time-consuming fine-tuning to capture the image-specific appearance. In this paper, we develop MasaCtrl, a tuning-free method to achieve consistent image generation and complex non-rigid image editing simultaneously. Specifically, MasaCtrl converts existing self-attention in diffusion models into mutual self-attention, so that it can query correlated local contents and textures from source images for consistency. To further alleviate the query confusion between foreground and background, we propose a mask-guided mutual self-attention strategy, where the mask can be easily extracted from the cross-attention maps. Extensive experiments show that the proposed MasaCtrl can produce impressive results in both consistent image generation and complex non-rigid real image editing.

See the paper, official repository and project page for more information.

Usage

The model has two modes: (1) simultaneous image synthesis and editing and (2) editing of real or generated images. To use the model in mode (1), simply enter the source_prompt for the initial image and target_prompt for the final / edited image. To use the model in mode (2), upload your source_image and enter your target_prompt to edit the image.

Other MasaCtrl Models

- MasaCtrl with CompVis/stable-diffusion-v1-4 as the base model

Citation

@InProceedings{cao_2023_masactrl,

author = {Cao, Mingdeng and Wang, Xintao and Qi, Zhongang and Shan, Ying and Qie, Xiaohu and Zheng, Yinqiang},

title = {MasaCtrl: Tuning-Free Mutual Self-Attention Control for Consistent Image Synthesis and Editing},

booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

month = {October},

year = {2023},

pages = {22560-22570}

}