
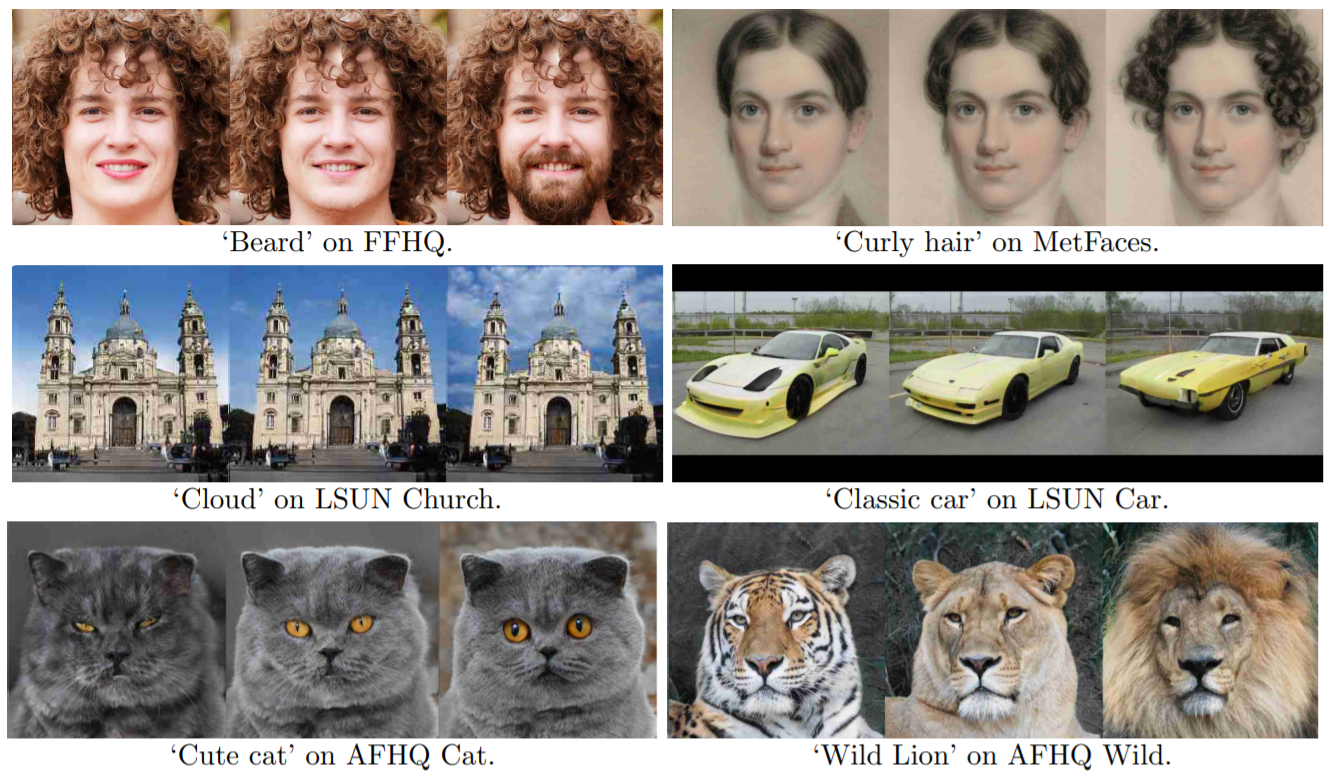

StyleMC: Multi-Channel Based Fast Text-Guided Image Generation and Manipulation

Paper: https://arxiv.org/abs/2112.08493

Video: https://www.youtube.com/watch?v=ILm_5tvtzPI

Using the API

The input arguments to StyleMC are as follows:

- image: input face image. For best results, use a front-facing, close-up photo.

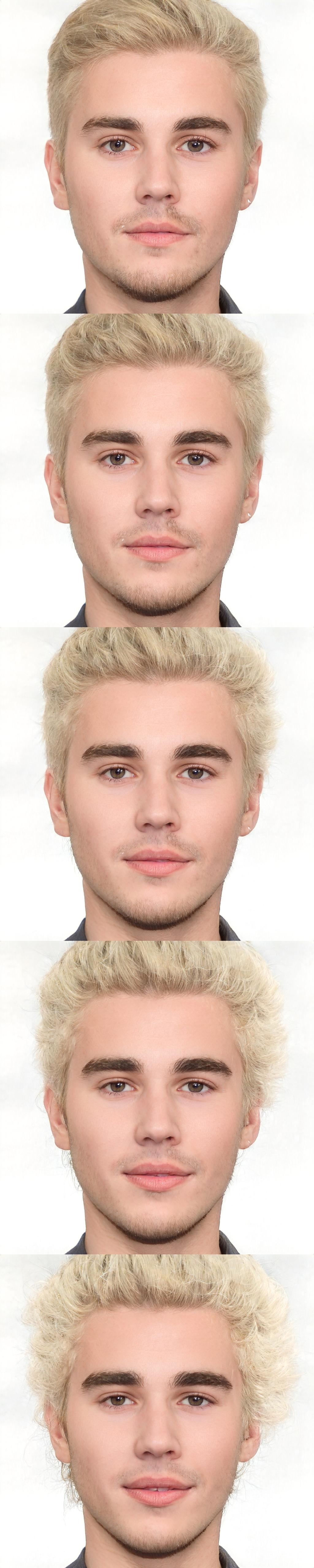

- prompt: one of a set of precomputed style directions, each encoding a concept such as “happy”, “blonde”, “curly hair” or “beard”.

- custom_prompt: free-text prompt from which a new style direction is computed and applied. If provided, prompt is ignored.

- change_alpha: strength with which the style direction is applied to the input image. Negative values yield changes in the opposite direction; for example, applying “curly hair” with a negative coefficient produces straight hair.

- id_loss_coeff: identity loss coefficient. Higher values preserve the subject's identity more closely.
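The two rules above that are easiest to get wrong, custom_prompt overriding prompt and the sign of change_alpha, can be sketched in plain Python. This is not StyleMC's code: the function names resolve_direction_key and apply_direction are hypothetical, and the sketch assumes a direction is applied linearly to a StyleGAN2 style vector as s' = s + change_alpha * d, which is the usual form of style-space editing. The short lists stand in for real style vectors.

```python
from typing import List, Optional, Sequence


def resolve_direction_key(prompt: Optional[str], custom_prompt: Optional[str]) -> str:
    """Hypothetical helper: a non-empty custom_prompt takes precedence over prompt."""
    if custom_prompt:
        return custom_prompt
    if prompt:
        return prompt
    raise ValueError("either prompt or custom_prompt must be given")


def apply_direction(style: Sequence[float], direction: Sequence[float],
                    change_alpha: float) -> List[float]:
    """Hypothetical helper: move a style vector along a direction, s' = s + alpha * d.

    A negative change_alpha moves the vector the opposite way along the same direction.
    """
    if len(style) != len(direction):
        raise ValueError("style and direction must have the same length")
    return [s + change_alpha * d for s, d in zip(style, direction)]


style = [0.5, -0.2, 1.0]       # toy stand-in for a style vector
direction = [1.0, 0.0, -0.5]   # toy stand-in for a style direction

print(resolve_direction_key("curly hair", None))   # curly hair
print(apply_direction(style, direction, 2.0))      # [2.5, -0.2, 0.0]
print(apply_direction(style, direction, -2.0))     # [-1.5, -0.2, 2.0]
```

Flipping the sign of change_alpha mirrors the edit around the original style vector, which is why a negative coefficient on “curly hair” pushes towards straight hair.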

References

This repo is built on the official StyleGAN2 repo. Please refer to NVIDIA’s repo for further details.

If you use this code for your research, please cite our paper:

@inproceedings{kocasari2022stylemc,
  title = {StyleMC: Multi-Channel Based Fast Text-Guided Image Generation and Manipulation},
  author = {Umut Kocasari and Alara Dirik and Mert Tiftikci and Pinar Yanardag},
  booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},
  year = {2022}
}