Readme

Model Description

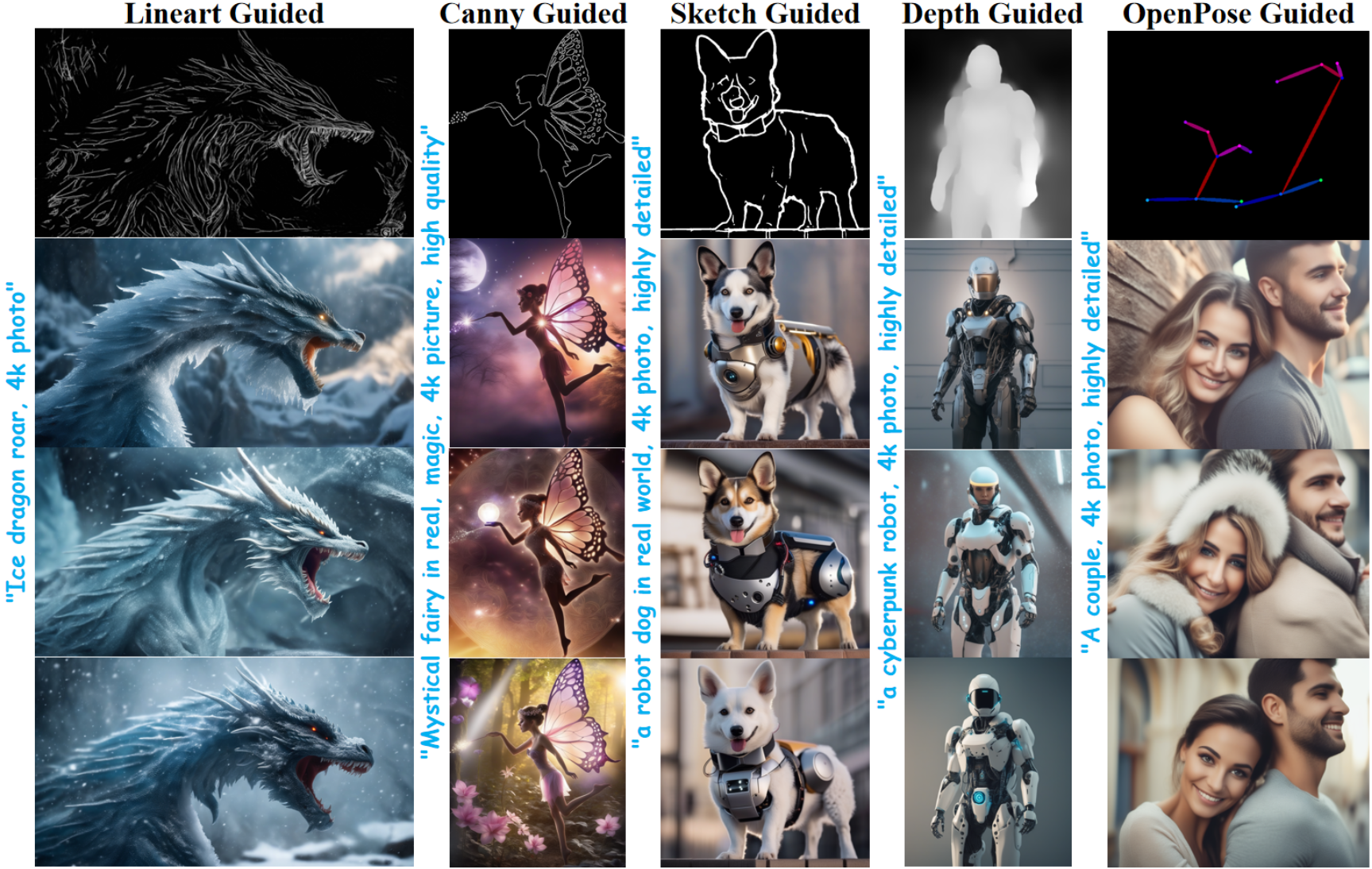

T2I-Adapter based on Stable Diffusion-XL by Tencent ARC Lab and Peking University VILLA. Cog-wrapper is adapted from the [official repository.]( (https://github.com/TencentARC/T2I-Adapter). T2I-Adapter performs image editing using text prompts combined with depth map, human body pose, line art, canny edge and sketch conditions.

Abstract: We propose T2I-Adapter, a simple and small (~70M parameters, ~300M storage space) network that can provide extra guidance to pre-trained text-to-image models while freezing the original large text-to-image models.

T2I-Adapter aligns internal knowledge in T2I models with external control signals. We can train various adapters according to different conditions, and achieve rich control and editing effects.

See the paper, official repository and Hugging Face model page and demo for more information.

Usage

To start, upload an image you would like to modify and prompt the model to generate an image as you would for Stable Diffusion. The model generating the image will use your input image as a template and internally detect the body pose to guide image generation.

Other T2I-Adapter Models

There are many different ways to use a T2I-Adapter to modify the output of Stable Diffusion XL and Stable Diffusion. Here are a few different options, all of which use an input image in addition to a prompt to generate an output. The methods process the input in different ways; try them out to see which works best for a given application.

T2I-Adapter for generating images from sketches

https://replicate.com/alaradirik/t2i-adapter-sdxl-sketch

T2I-Adapter for preserving general qualities about an input image

https://replicate.com/alaradirik/t2i-adapter-sdxl-canny

https://replicate.com/alaradirik/t2i-adapter-sdxl-lineart

https://replicate.com/alaradirik/t2i-adapter-sdxl-depth-midas

T2I-Adapter SD

https://replicate.com/cjwbw/t2i-adapter

Citation

@article{mou2023t2i,

title={T2i-adapter: Learning adapters to dig out more controllable ability for text-to-image diffusion models},

author={Mou, Chong and Wang, Xintao and Xie, Liangbin and Wu, Yanze and Zhang, Jian and Qi, Zhongang and Shan, Ying and Qie, Xiaohu},

journal={arXiv preprint arXiv:2302.08453},

year={2023}

}