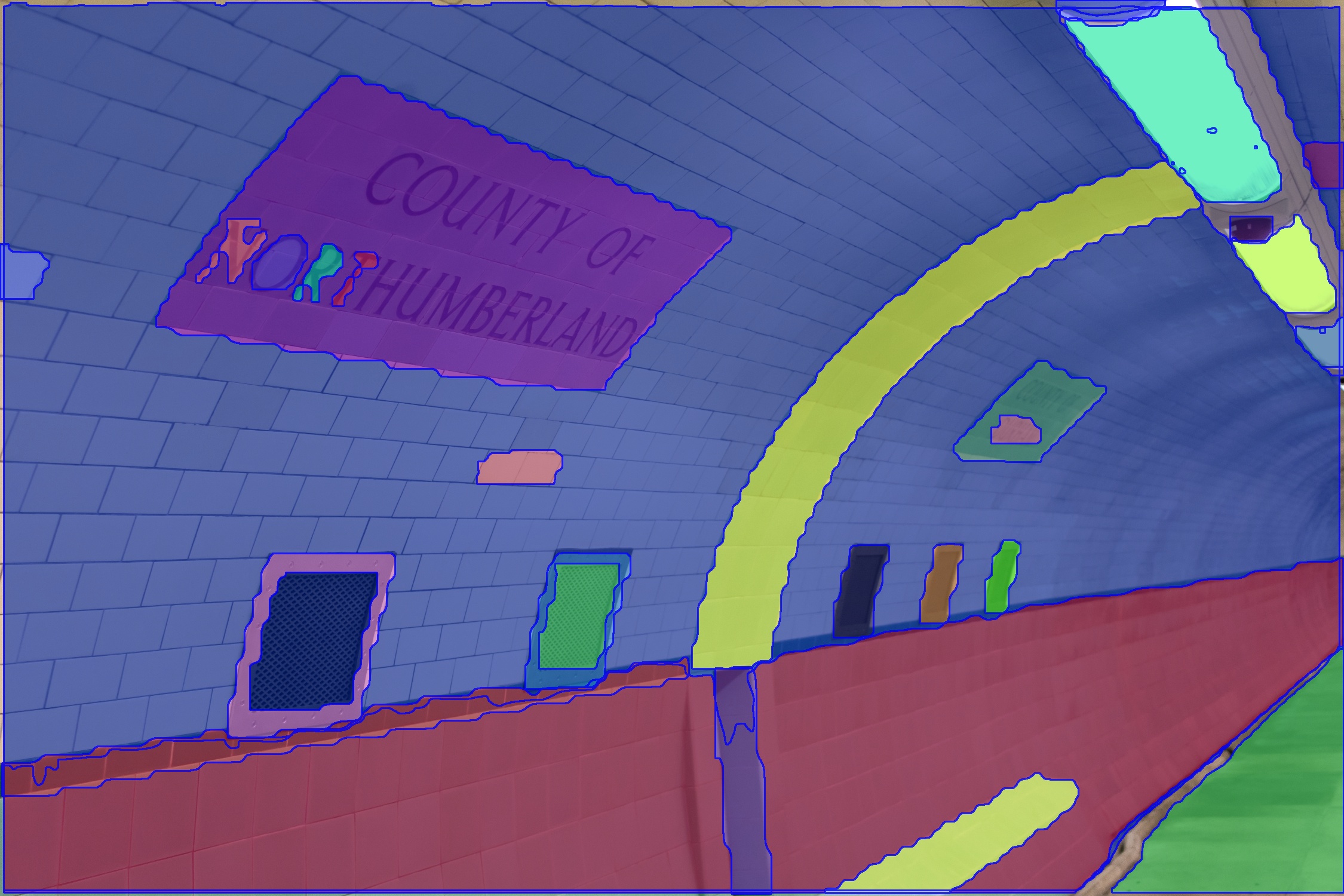

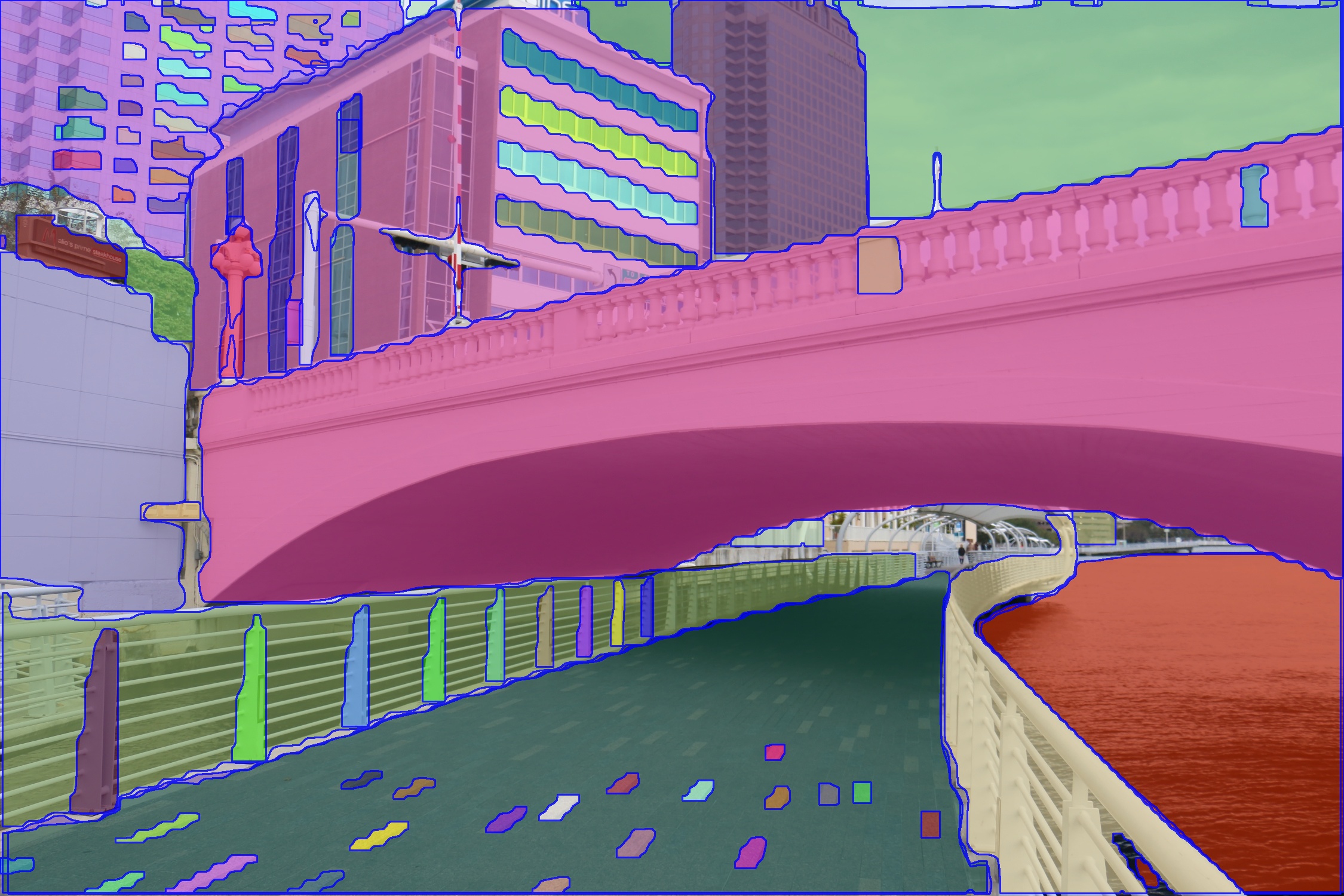

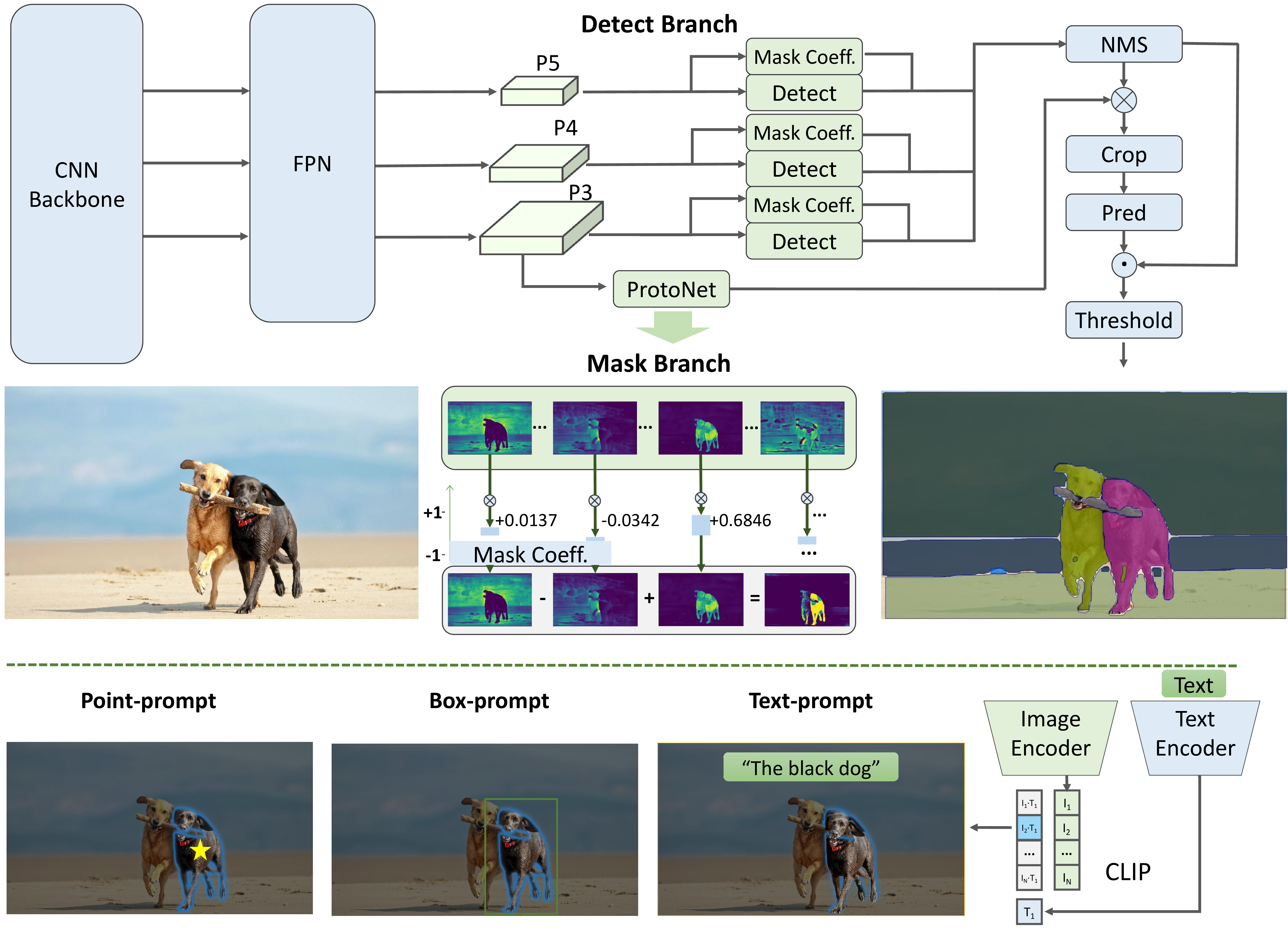

Fast Segment Anything

The Fast Segment Anything Model (FastSAM) is a CNN-based Segment Anything Model trained on only 2% of the SA-1B dataset published by the SAM authors. FastSAM achieves performance comparable to the SAM method at 50× higher run-time speed.
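
To put those two figures in concrete terms, here is a minimal arithmetic sketch. It assumes the SA-1B size of roughly 11 million images reported by the SAM authors. The 1000 ms baseline latency is a hypothetical placeholder, not a measured number, and the helper names are illustrative only.

```python
# SA-1B contains roughly 11 million images (figure reported by the SAM authors).
SA1B_IMAGES = 11_000_000
TRAIN_PERCENT = 2   # FastSAM is trained on 2% of SA-1B
SPEEDUP = 50        # stated run-time speedup over SAM


def training_subset_size(total_images: int, percent: int) -> int:
    """Number of images in a `percent`% subset of the dataset."""
    return total_images * percent // 100


def latency_after_speedup(baseline_ms: float, speedup: float) -> float:
    """Latency implied by a given speedup over a baseline latency."""
    return baseline_ms / speedup


subset = training_subset_size(SA1B_IMAGES, TRAIN_PERCENT)

# Hypothetical baseline of 1000 ms per image, for illustration only.
example_fast_ms = latency_after_speedup(1000.0, SPEEDUP)

print(f"Training subset: about {subset:,} images")
print(f"A 1000 ms baseline would drop to {example_fast_ms:.0f} ms at {SPEEDUP}x")
```

Under these assumptions, the training set is on the order of 220,000 images, and any baseline latency shrinks by a factor of 50.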

Citing FastSAM

If you find this project useful for your research, please consider citing the following BibTeX entry.

@misc{zhao2023fast,
      title={Fast Segment Anything},
      author={Xu Zhao and Wenchao Ding and Yongqi An and Yinglong Du and Tao Yu and Min Li and Ming Tang and Jinqiao Wang},
      year={2023},
      eprint={2306.12156},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}