Readme

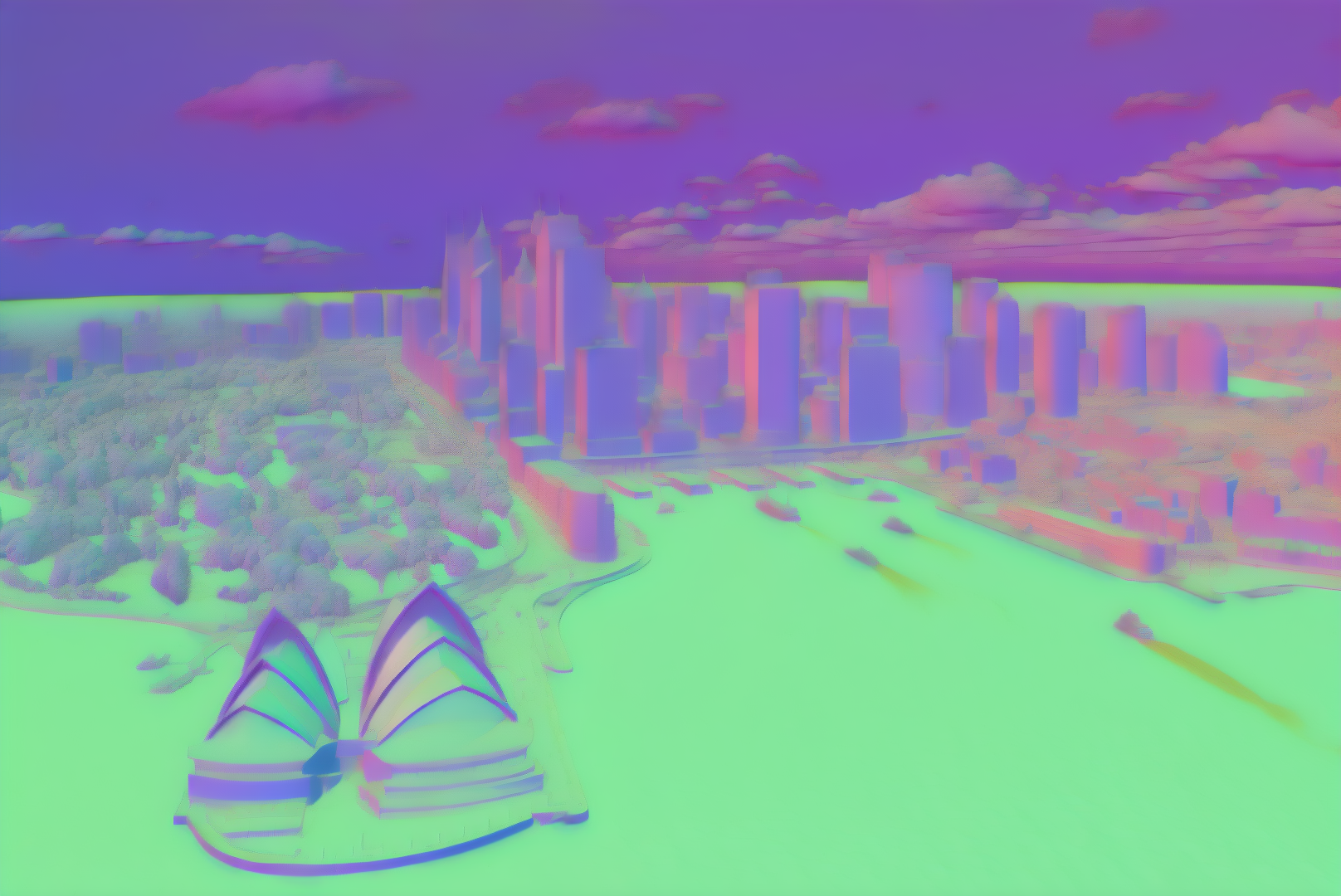

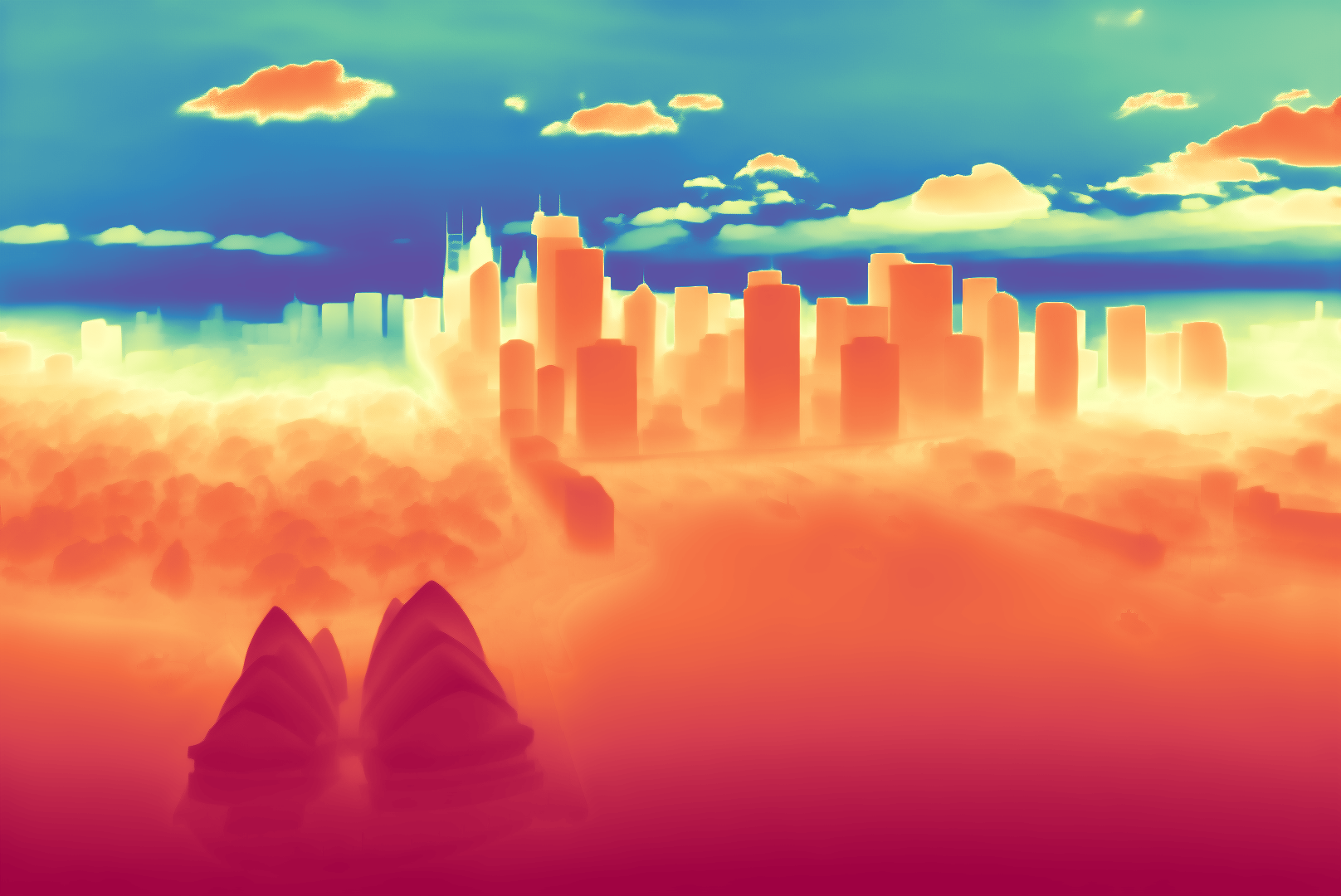

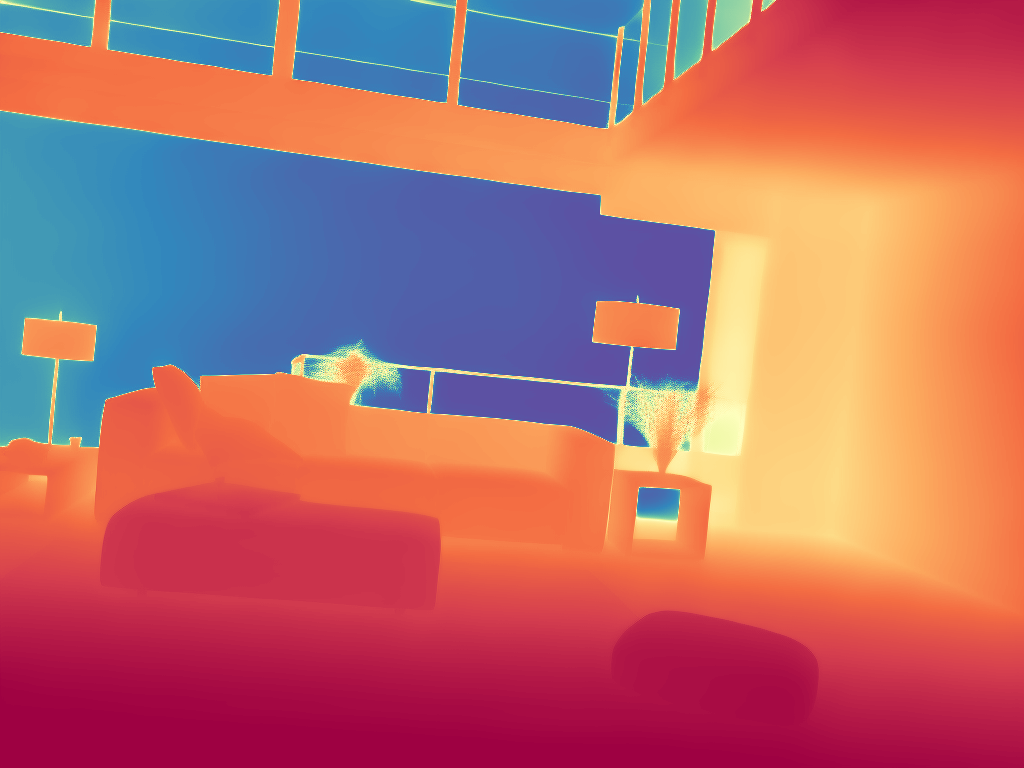

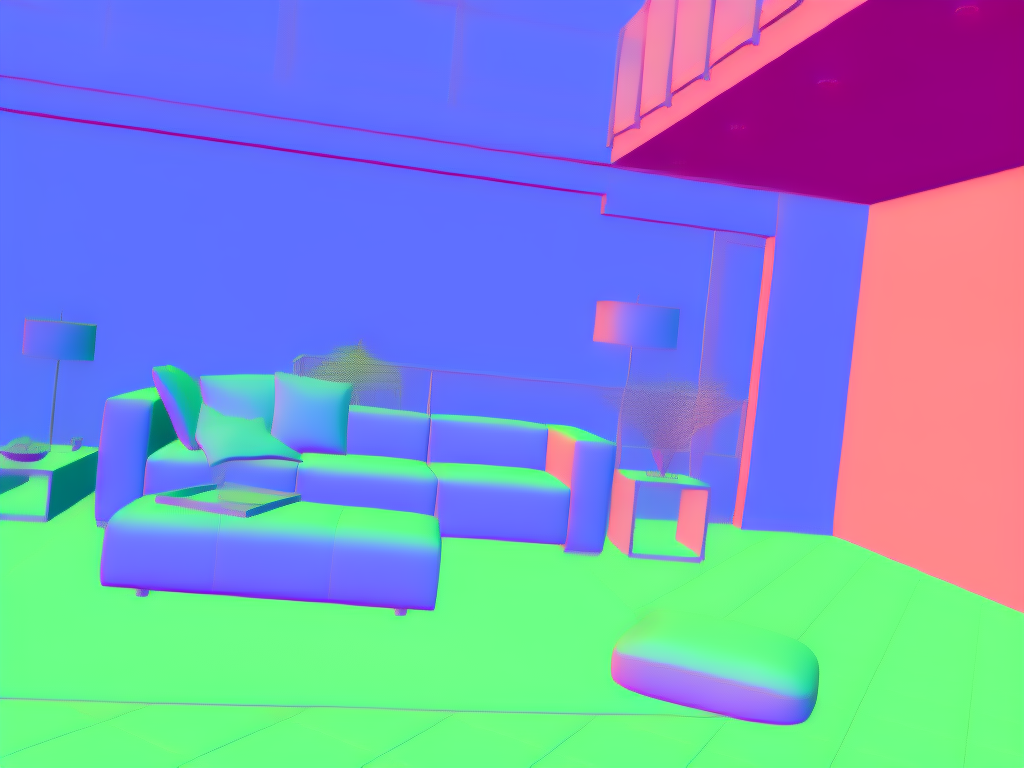

Lotus: Diffusion-based Visual Foundation Model for High-quality Dense Prediction

Lotus: Diffusion-based Visual Foundation Model for High-quality Dense Prediction

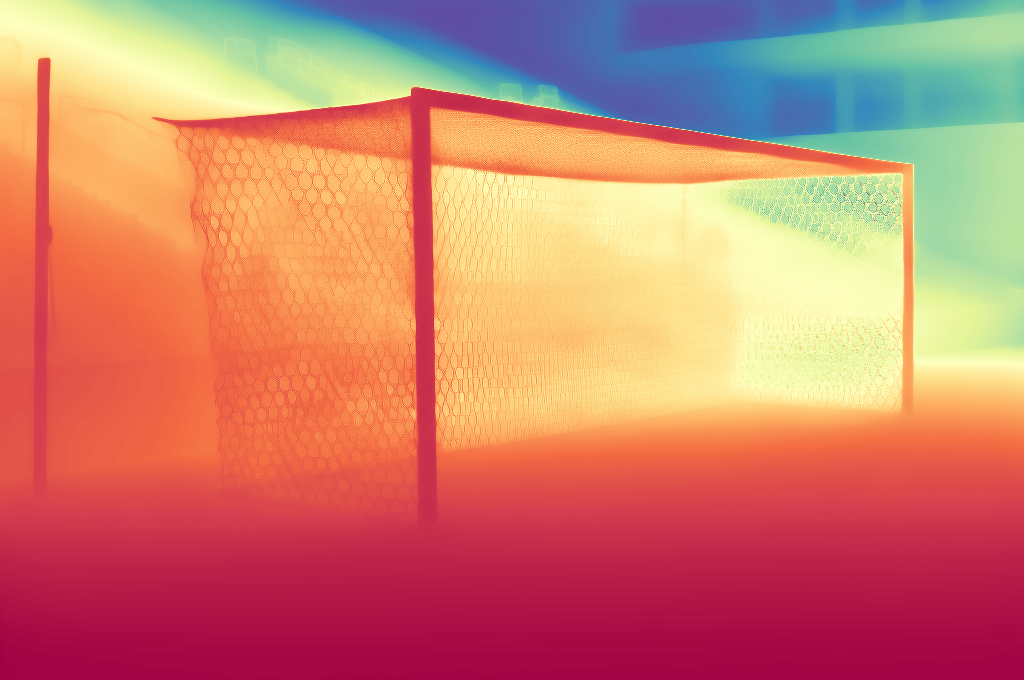

We present Lotus, a diffusion-based visual foundation model for dense geometry prediction. With minimal training data, Lotus achieves SoTA performance in two key geometry perception tasks, i.e., zero-shot depth and normal estimation. “Avg. Rank” indicates the average ranking across all metrics, where lower values are better. Bar length represents the amount of training data used.

🎓 Citation

If you find our work useful in your research, please consider citing our paper:

@article{he2024lotus,

title={Lotus: Diffusion-based Visual Foundation Model for High-quality Dense Prediction},

author={He, Jing and Li, Haodong and Yin, Wei and Liang, Yixun and Li, Leheng and Zhou, Kaiqiang and Liu, Hongbo and Liu, Bingbing and Chen, Ying-Cong},

journal={arXiv preprint arXiv:2409.18124},

year={2024}

}