Depth Pro: Sharp Monocular Metric Depth in Less Than a Second

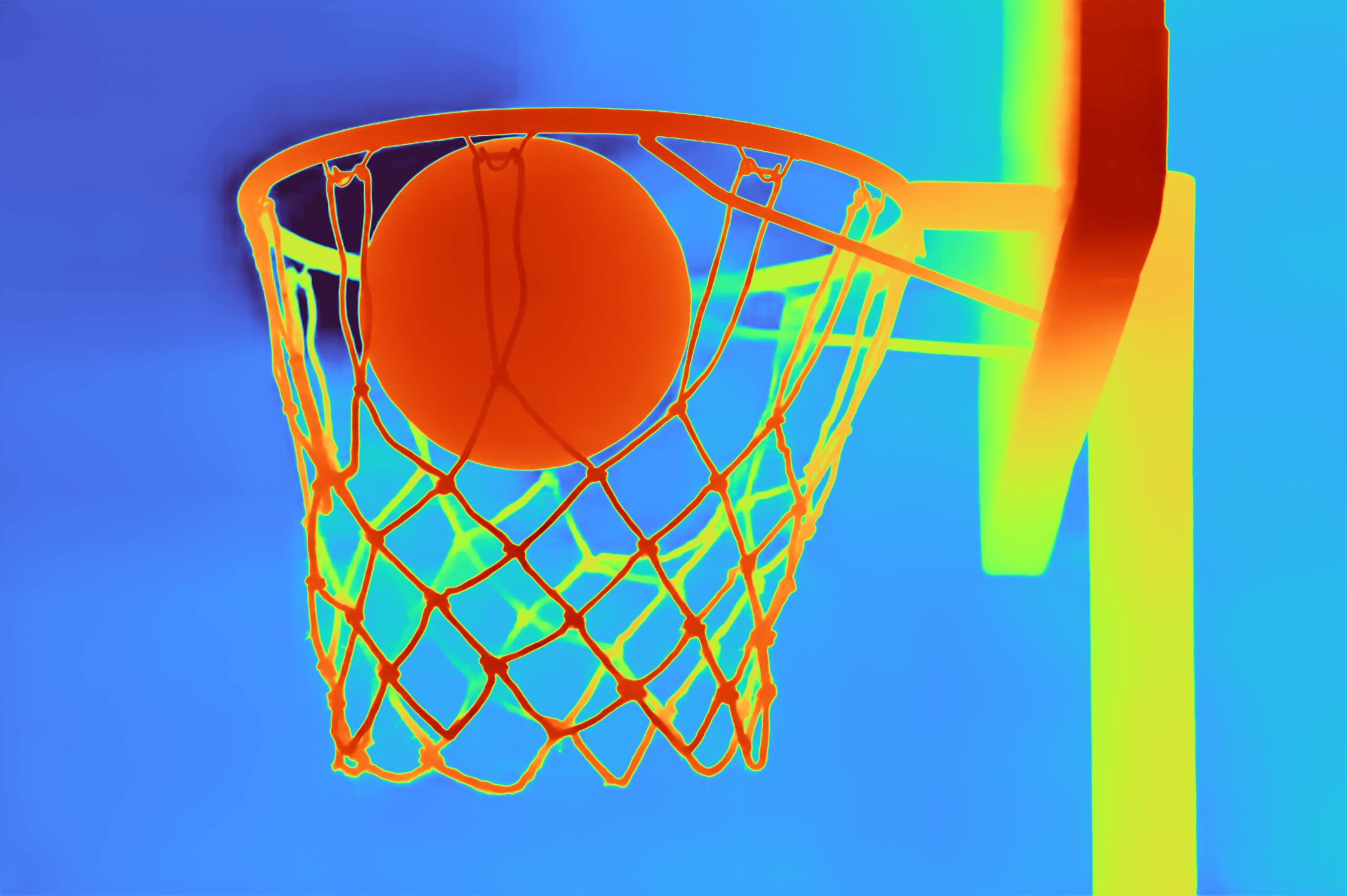

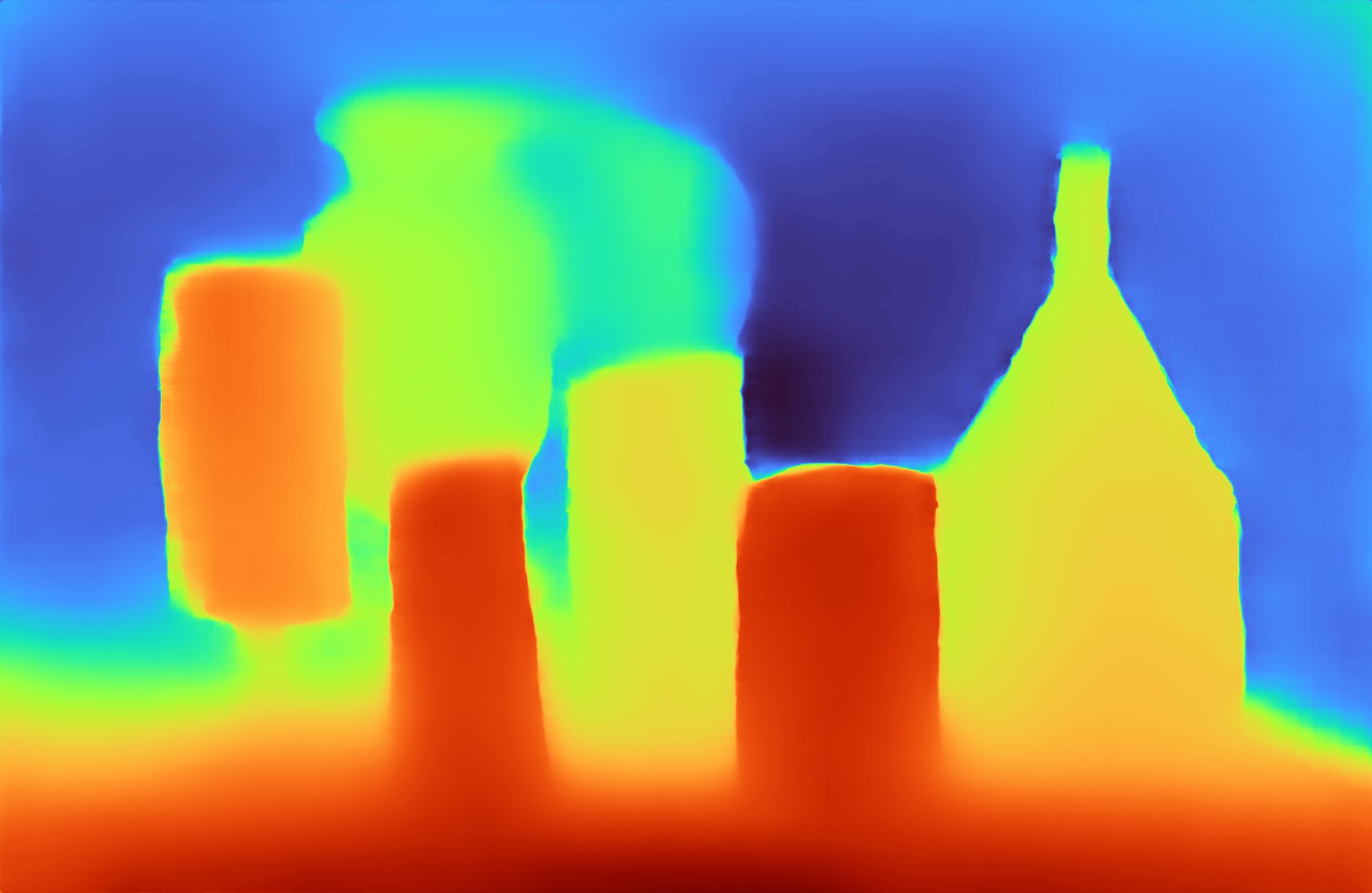

We present a foundation model for zero-shot metric monocular depth estimation. Our model, Depth Pro, synthesizes high-resolution depth maps with unparalleled sharpness and high-frequency details. The predictions are metric, with absolute scale, without relying on the availability of metadata such as camera intrinsics. And the model is fast, producing a 2.25-megapixel depth map in 0.3 seconds on a standard GPU. These characteristics are enabled by a number of technical contributions, including an efficient multi-scale vision transformer for dense prediction, a training protocol that combines real and synthetic datasets to achieve high metric accuracy alongside fine boundary tracing, dedicated evaluation metrics for boundary accuracy in estimated depth maps, and state-of-the-art focal length estimation from a single image.
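Depth Pro's two key outputs are a metric depth map and a focal length estimated from the image itself. The sketch below illustrates why the pair is useful: together they are enough to lift every pixel into 3D camera-space points in meters. It is not Depth Pro code. The function names `fpx_from_hfov` and `backproject_depth` are hypothetical. The sketch assumes a standard pinhole camera with square pixels and a principal point at the image center, and it uses only NumPy.

```python
import numpy as np


def fpx_from_hfov(hfov_rad: float, width: int) -> float:
    """Focal length in pixels from a horizontal field of view (pinhole model).

    Hypothetical helper: f_px = (W / 2) / tan(hfov / 2).
    """
    return 0.5 * width / np.tan(0.5 * hfov_rad)


def backproject_depth(depth: np.ndarray, f_px: float) -> np.ndarray:
    """Lift an (H, W) metric depth map to (H, W, 3) camera-space XYZ points.

    Assumptions (illustrative, not taken from Depth Pro): square pixels,
    focal length f_px in pixels, principal point at the image center,
    pixel centers at integer coordinates.
    """
    h, w = depth.shape
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0
    # v indexes rows (image y), u indexes columns (image x); both are (H, W).
    v, u = np.meshgrid(
        np.arange(h, dtype=np.float64),
        np.arange(w, dtype=np.float64),
        indexing="ij",
    )
    # Pinhole back-projection: X = (u - cx) * Z / f, Y = (v - cy) * Z / f.
    x = (u - cx) * depth / f_px
    y = (v - cy) * depth / f_px
    return np.stack([x, y, depth], axis=-1)


# Usage on synthetic data: a flat wall 2 m away, seen by a 90-degree camera.
depth = np.full((4, 6), 2.0)
f_px = fpx_from_hfov(np.deg2rad(90.0), width=6)
points = backproject_depth(depth, f_px)
print(points.shape)
```

Because the depth is metric, distances between the resulting points come out in meters. Without a focal length the X and Y coordinates would be known only up to an unknown scale, which is why estimating it from a single image matters when intrinsics are unavailable.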

Citation

If you find our work useful, please cite the following paper:

@article{Bochkovskii2024:arxiv,
  author = {Aleksei Bochkovskii and Ama\"{e}l Delaunoy and Hugo Germain and Marcel Santos and
    Yichao Zhou and Stephan R. Richter and Vladlen Koltun},
  title = {Depth Pro: Sharp Monocular Metric Depth in Less Than a Second},
  journal = {arXiv},
  year = {2024},
  url = {https://arxiv.org/abs/2410.02073},
}

License

This sample code is released under the LICENSE terms.

The model weights are released under the LICENSE terms.

Acknowledgements

Our codebase is built using multiple open-source contributions; please see Acknowledgements for more details.

Please check the paper for a complete list of references and datasets used in this work.