Readme

BLIP-Diffusion: Pre-trained Subject Representation for Controllable Text-to-Image Generation and Editing

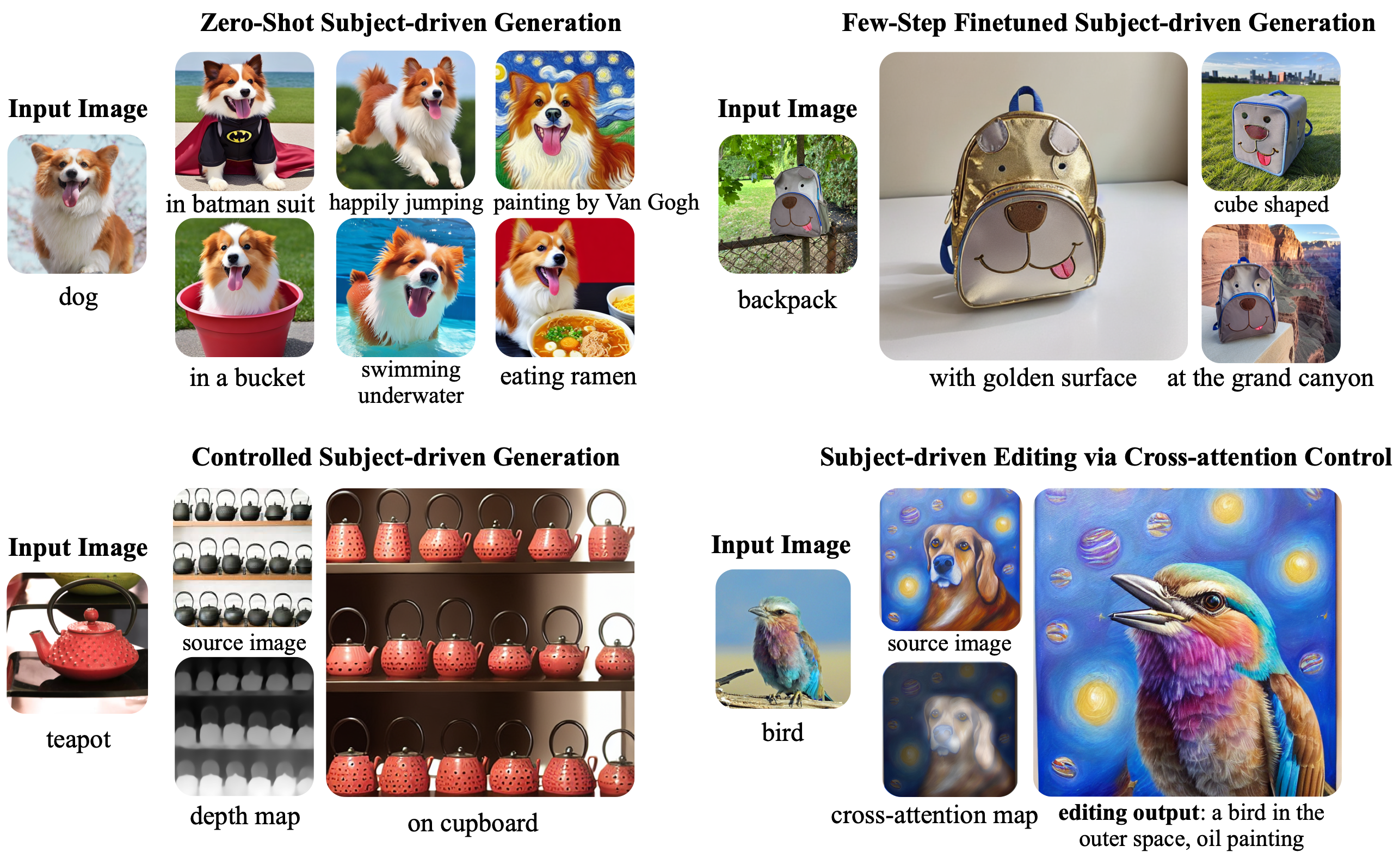

This repo hosts the official implementation of BLIP-Diffusion, a text-to-image diffusion model with built-in support for multimodal subject-and-text condition. BLIP-Diffusion enables zero-shot subject-driven generation, and efficient fine-tuning for customized subjects with up to 20x speedup. In addition, BLIP-Diffusion can be flexibly combiend with ControlNet and prompt-to-prompt to enable novel subject-driven generation and editing applications.

Cite BLIP-Diffusion

If you find our work helpful, please consider citing:

@article{li2023blip,

title={BLIP-Diffusion: Pre-trained Subject Representation for Controllable Text-to-Image Generation and Editing},

author={Li, Dongxu and Li, Junnan and Hoi, Steven CH},

journal={arXiv preprint arXiv:2305.14720},

year={2023}

}

@inproceedings{li2023lavis,

title={LAVIS: A One-stop Library for Language-Vision Intelligence},

author={Li, Dongxu and Li, Junnan and Le, Hung and Wang, Guangsen and Savarese, Silvio and Hoi, Steven CH},

booktitle={Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 3: System Demonstrations)},

pages={31--41},

year={2023}

}