Readme

ControlVideo

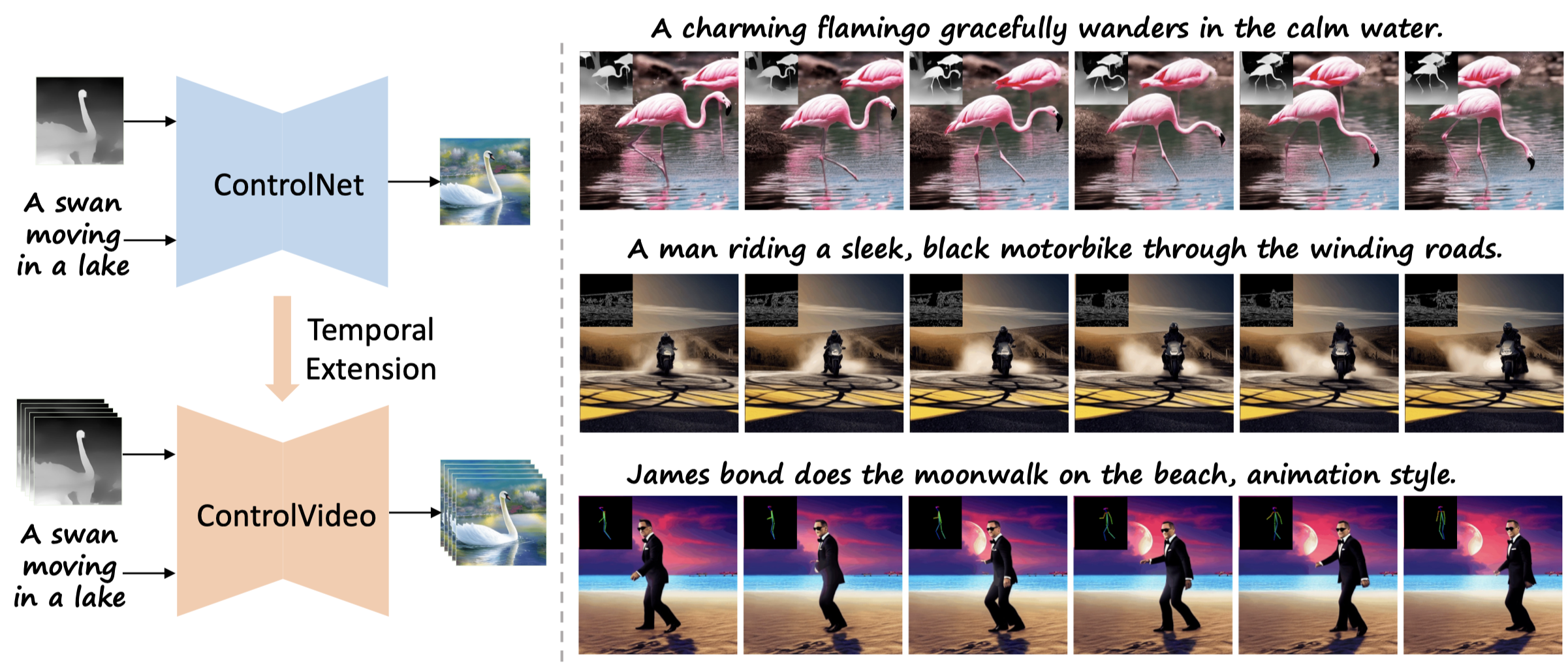

Official PyTorch implementation of “ControlVideo: Training-free Controllable Text-to-Video Generation”

ControlVideo adapts ControlNet to the video counterpart without any finetuning, aiming to directly inherit its high-quality and consistent generation

Citation

If you make use of our work, please cite our paper.

@article{zhang2023controlvideo,

title={ControlVideo: Training-free Controllable Text-to-Video Generation},

author={Zhang, Yabo and Wei, Yuxiang and Jiang, Dongsheng and Zhang, Xiaopeng and Zuo, Wangmeng and Tian, Qi},

journal={arXiv preprint arXiv:2305.13077},

year={2023}

}

Acknowledgement

This work repository borrows heavily from Diffusers, ControlNet, Tune-A-Video, and RIFE.

There are also many interesting works on video generation: Tune-A-Video, Text2Video-Zero, Follow-Your-Pose, Control-A-Video, et al.