Depth Anything: Unleashing the Power of Large-Scale Unlabeled Data

This work presents Depth Anything, a highly practical solution for robust monocular depth estimation, trained on a combination of 1.5M labeled images and 62M+ unlabeled images.

Features of Depth Anything

-

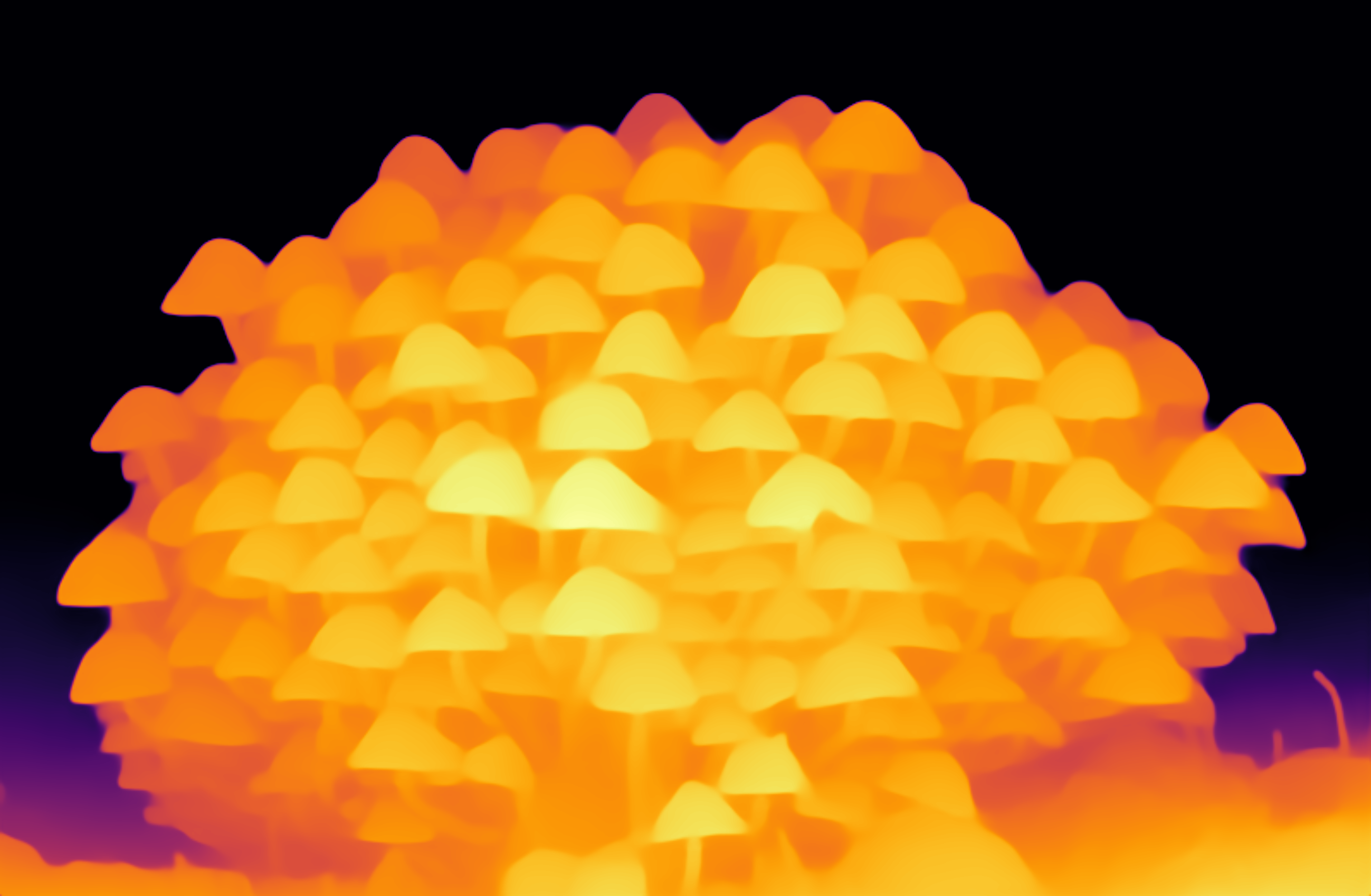

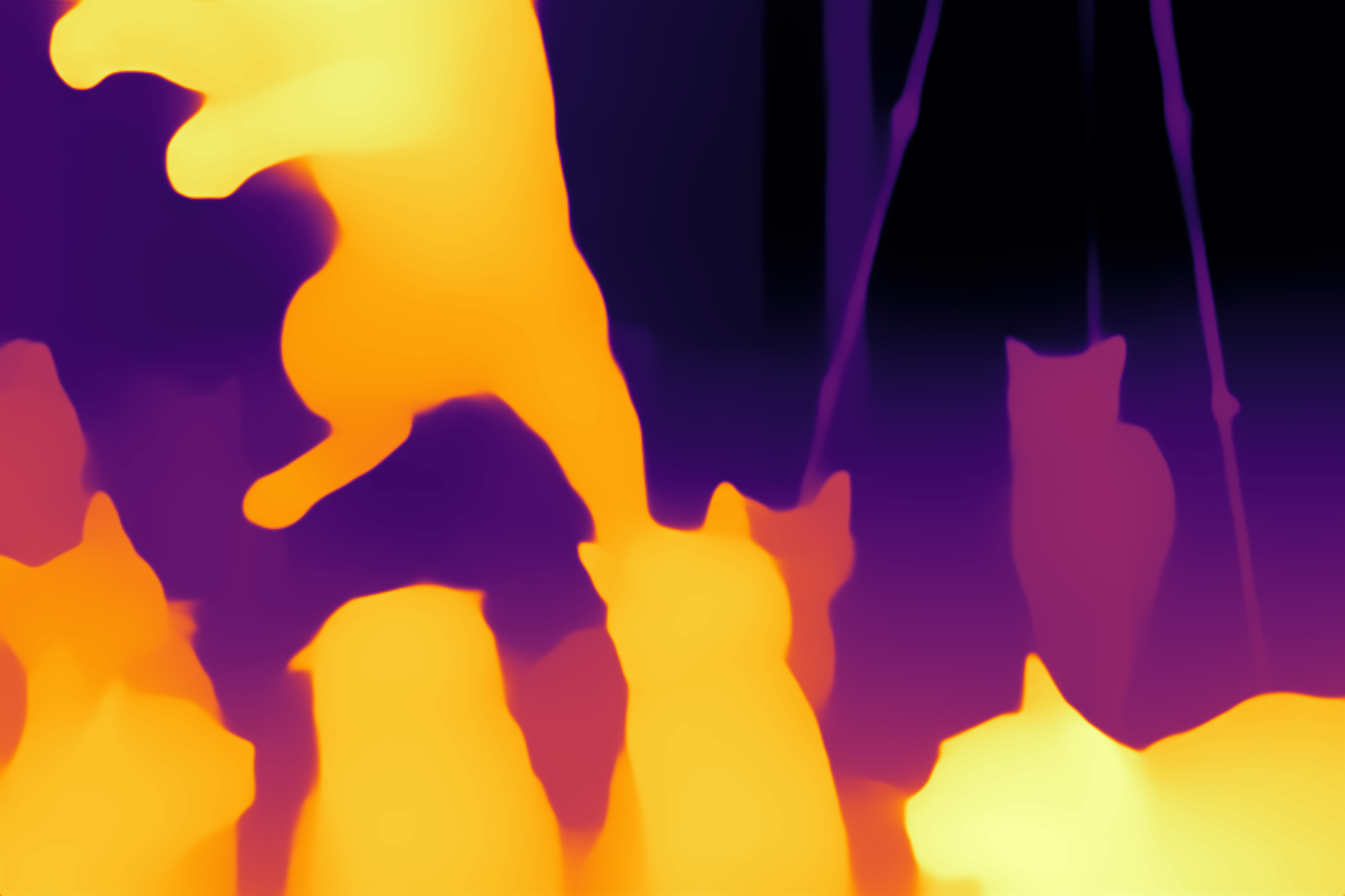

Relative depth estimation:

Our foundation models listed here can provide relative depth estimation for any given image robustly.

-

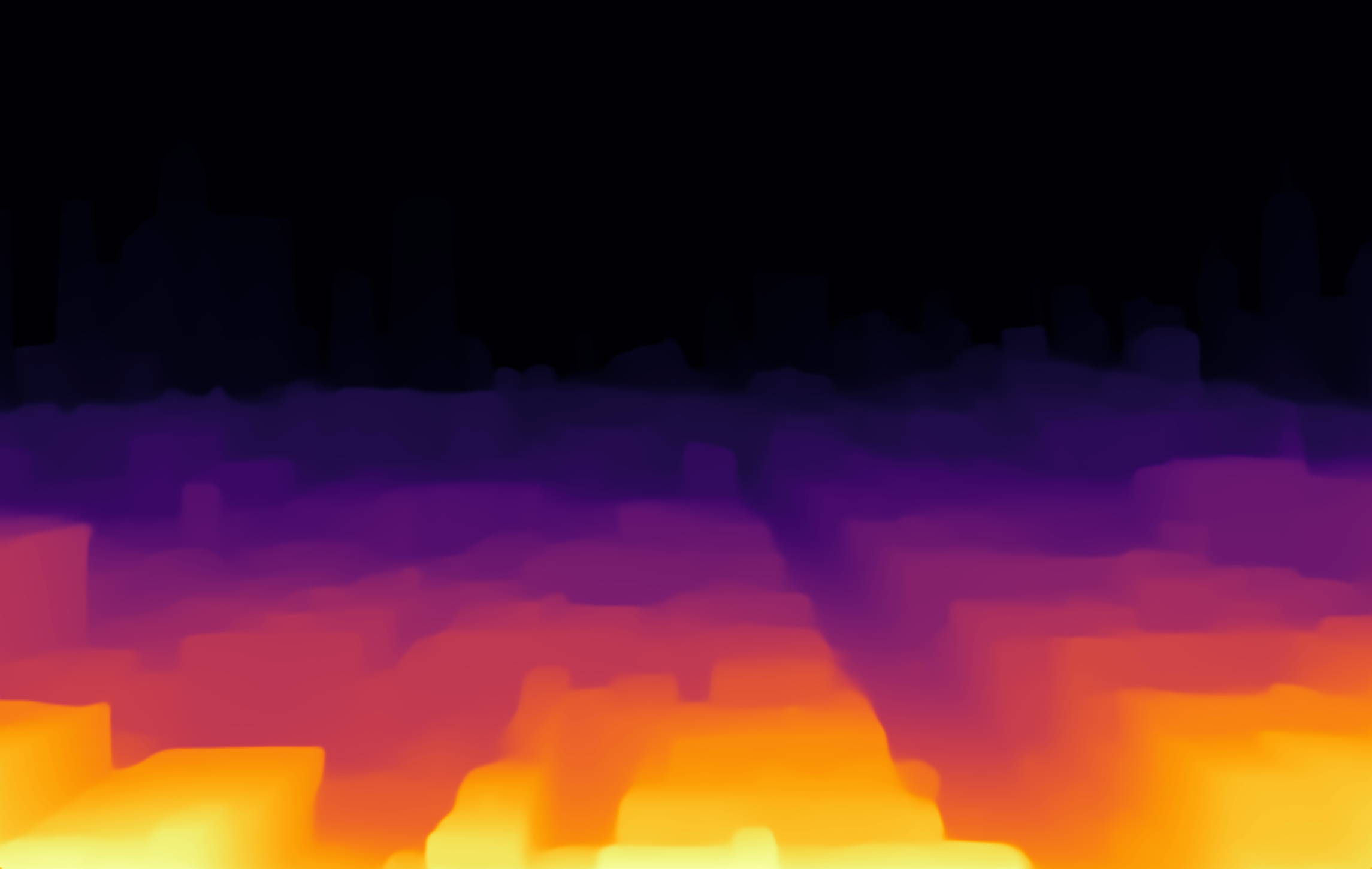

Metric depth estimation

We fine-tune our Depth Anything model with metric depth information from NYUv2 or KITTI. It offers strong capabilities of both in-domain and zero-shot metric depth estimation.

- Better depth-conditioned ControlNet: We re-train a better depth-conditioned ControlNet based on Depth Anything. It offers more precise synthesis than the previous MiDaS-based ControlNet.

- Downstream high-level scene understanding: The Depth Anything encoder can be fine-tuned for downstream high-level perception tasks. On semantic segmentation, for example, it reaches 86.2 mIoU on Cityscapes and 59.4 mIoU on ADE20K.
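The difference between the relative and metric depth features above can be illustrated with a small sketch. It assumes the common convention that a relative depth map is correct only up to an unknown scale and shift, so comparing it with metric ground truth first requires a least-squares fit of those two numbers. The function names and sample values below are hypothetical and are not part of the Depth Anything code; the values are treated as plain depth for simplicity, whereas a real model may output inverse depth instead.

```python
def fit_scale_shift(relative, metric):
    """Least-squares fit of metric ~= scale * relative + shift (hypothetical helper)."""
    n = len(relative)
    if n != len(metric) or n < 2:
        raise ValueError("need two equally sized sequences with at least 2 values")
    mean_r = sum(relative) / n
    mean_m = sum(metric) / n
    var_r = sum((r - mean_r) ** 2 for r in relative)
    if var_r == 0:
        raise ValueError("relative depth is constant; scale is undetermined")
    cov = sum((r - mean_r) * (m - mean_m) for r, m in zip(relative, metric))
    scale = cov / var_r
    shift = mean_m - scale * mean_r
    return scale, shift


def align(relative, scale, shift):
    """Map relative depth values into metric units using the fitted parameters."""
    return [scale * r + shift for r in relative]


# Made-up sample values: a relative prediction and metric ground truth in meters.
relative = [0.0, 0.25, 0.5, 1.0]
metric = [1.0, 2.0, 3.0, 5.0]

scale, shift = fit_scale_shift(relative, metric)
aligned = align(relative, scale, shift)
```

A metric model needs no such alignment step, because its output is already in physical units. That is what fine-tuning on datasets with metric ground truth, such as NYUv2 or KITTI, is meant to provide.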

Citation

If you find this project useful, please consider citing:

@article{depthanything,
  title={Depth Anything: Unleashing the Power of Large-Scale Unlabeled Data},
  author={Yang, Lihe and Kang, Bingyi and Huang, Zilong and Xu, Xiaogang and Feng, Jiashi and Zhao, Hengshuang},
  journal={arXiv:2401.10891},
  year={2024}
}