Readme

This is a Cog implementation of GTR (https://github.com/xingyizhou/GTR).

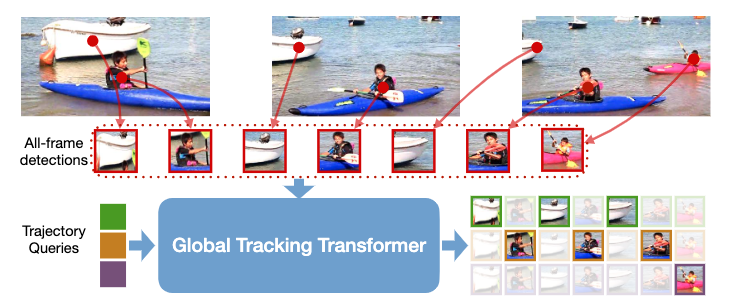

Global Tracking Transformers

Global Tracking Transformers, Xingyi Zhou, Tianwei Yin, Vladlen Koltun, Philipp Krähenbühl, CVPR 2022 (arXiv 2203.13250)

Features

- Object association within a long temporal window (32 frames).
- Classification after tracking for long-tail recognition.
- “Detector” of global trajectories.

License

The majority of GTR is licensed under the Apache 2.0 license; however, portions of the project are available under separate license terms: trackeval in gtr/tracking/trackeval/ is licensed under the MIT license, and FairMOT in gtr/tracking/local_tracker is also under the MIT license. Please see NOTICE for license details.

The demo video is from the TAO dataset and originally comes from the YFCC100M dataset. Please be aware of the original dataset license.

Citation

If you find this project useful for your research, please use the following BibTeX entry.

@inproceedings{zhou2022global,
  title={Global Tracking Transformers},
  author={Zhou, Xingyi and Yin, Tianwei and Koltun, Vladlen and Kr{\"a}henb{\"u}hl, Philipp},
  booktitle={CVPR},
  year={2022}
}