
BRIA Background Removal v1.4 Model Card

RMBG v1.4 is our state-of-the-art background removal model, designed to effectively separate foreground from background across a range of categories and image types. The model was trained on a carefully selected dataset that includes general stock images, e-commerce, gaming, and advertising content, making it suitable for commercial use cases that power enterprise content creation at scale. Its accuracy, efficiency, and versatility currently rival leading open-source models. It is ideal where content safety, legally licensed datasets, and bias mitigation are paramount.

Developed by BRIA AI, RMBG v1.4 is available as an open-source model for non-commercial use.

Model Description

- Developed by: BRIA AI

- Model type: Background Removal

- License: bria-rmbg-1.4

  - The model is released under an open-source license for non-commercial use.

  - Commercial use is subject to a commercial agreement with BRIA. Contact Us for more information.

- Model Description: BRIA RMBG 1.4 is a saliency segmentation model trained exclusively on a professional-grade dataset.

- BRIA: Resources for more information: BRIA AI
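To illustrate how a saliency segmentation mask turns into a background-removed image, here is a minimal sketch using only NumPy. It does not load or run RMBG v1.4. The function name `apply_saliency_mask` and the toy arrays are hypothetical, and the sketch assumes the mask is a per-pixel foreground score in the range 0 to 1 with the same height and width as the image.

```python
import numpy as np


def apply_saliency_mask(image_rgb: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Attach a saliency mask to an RGB image as an alpha channel.

    image_rgb: uint8 array of shape (H, W, 3).
    mask: float array of shape (H, W); assumed to hold foreground
          scores in [0, 1], where 1 means fully foreground.
    Returns a uint8 RGBA array of shape (H, W, 4).
    """
    if image_rgb.shape[:2] != mask.shape:
        raise ValueError("image and mask must have the same height and width")
    # Scale the scores to 0..255 so they can be stored as an 8-bit alpha channel.
    alpha = np.rint(np.clip(mask, 0.0, 1.0) * 255).astype(np.uint8)
    # Stack alpha behind the three color channels: background pixels become transparent.
    return np.dstack([image_rgb, alpha])


# Toy data standing in for a real photo and a real model output (hypothetical values).
image = np.full((2, 2, 3), 200, dtype=np.uint8)
mask = np.array([[1.0, 0.0], [0.5, 1.0]])
cutout = apply_saliency_mask(image, mask)
```

Keeping the mask as a soft alpha channel, rather than thresholding it to 0 or 1, preserves partial transparency around fine edges such as hair; whether that suits a given use case is a design choice left to the caller.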

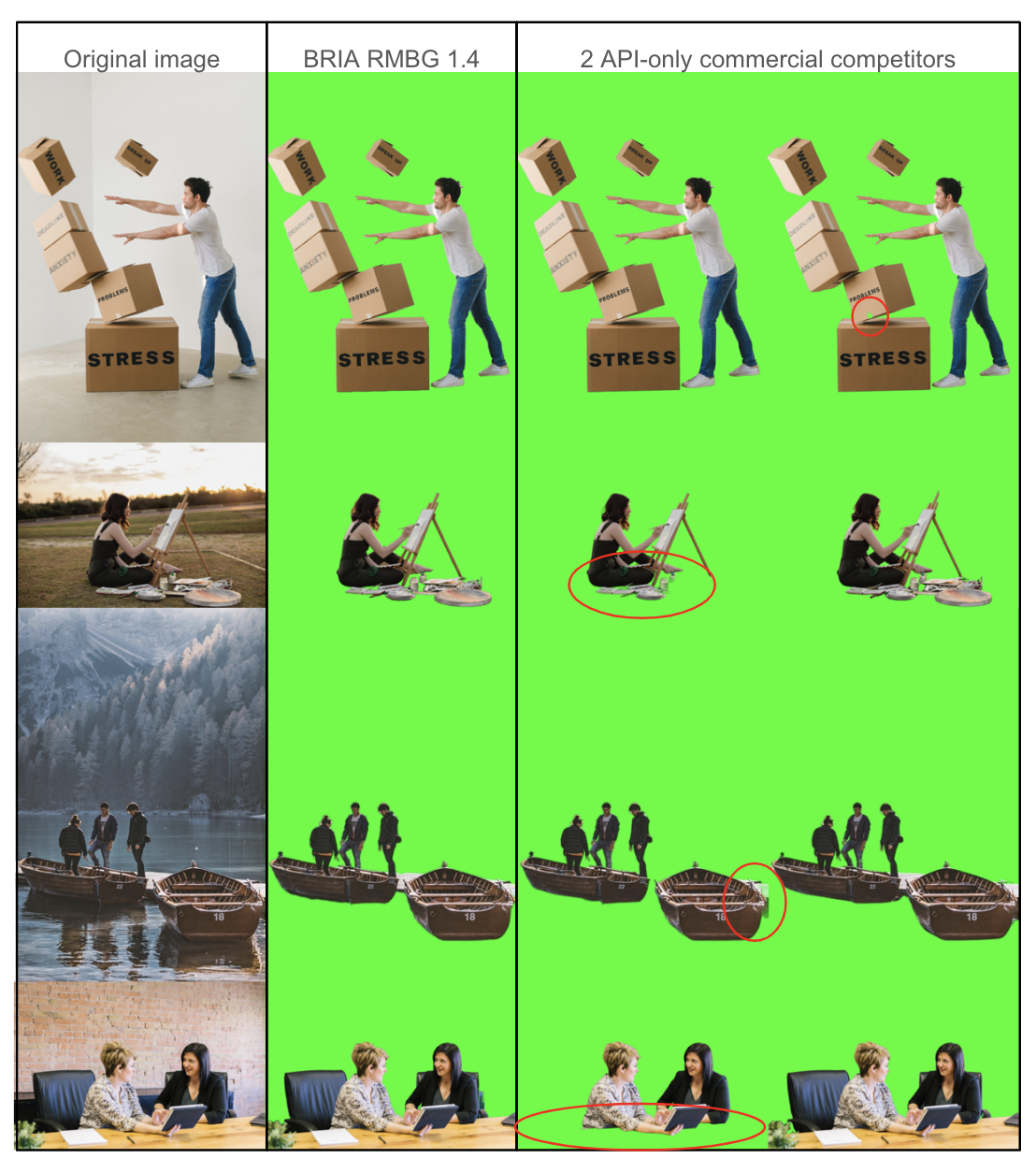

Qualitative Evaluation

Architecture

RMBG v1.4 is built on the IS-Net architecture, enhanced with our unique training scheme and proprietary dataset. These modifications significantly improve the model’s accuracy and effectiveness in diverse image-processing scenarios.