Readme

Show-1: Marrying Pixel and Latent Diffusion Models for Text-to-Video Generation

Abstract

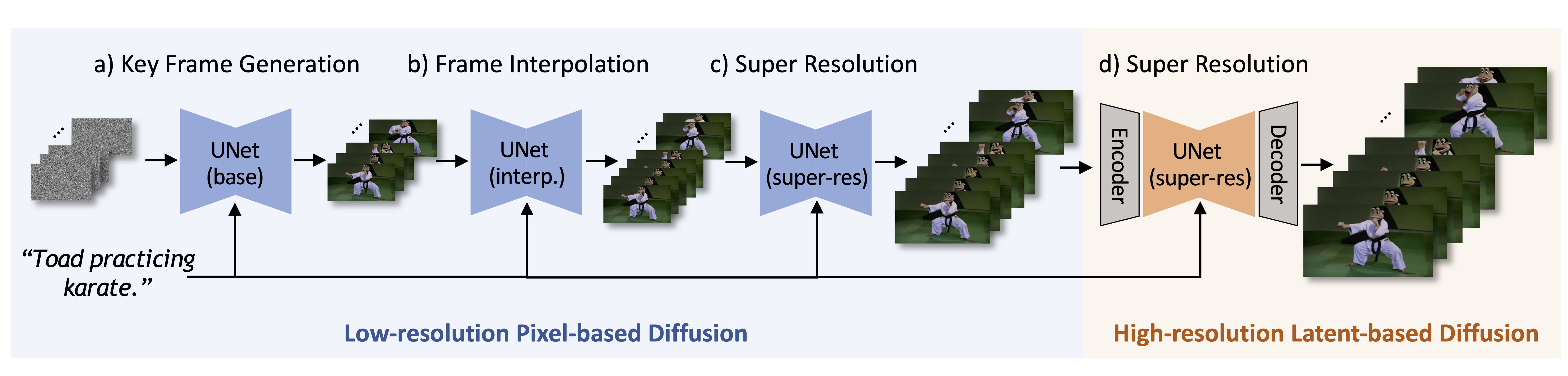

Significant advancements have been achieved in the realm of large-scale pre-trained text-to-video Diffusion Models (VDMs). However, previous methods rely either solely on pixel-based VDMs, which come with high computational costs, or on latent-based VDMs, which often struggle with precise text-video alignment. In this paper, we are the first to propose a hybrid model, dubbed Show-1, which marries pixel-based and latent-based VDMs for text-to-video generation. Our model first uses pixel-based VDMs to produce a low-resolution video with strong text-video correlation. After that, we propose a novel expert translation method that employs latent-based VDMs to further upsample the low-resolution video to high resolution. Compared to latent VDMs, Show-1 can produce high-quality videos with precise text-video alignment; compared to pixel VDMs, Show-1 is much more efficient (GPU memory usage during inference is 15 GB vs. 72 GB). We also validate our model on standard video generation benchmarks.

Method

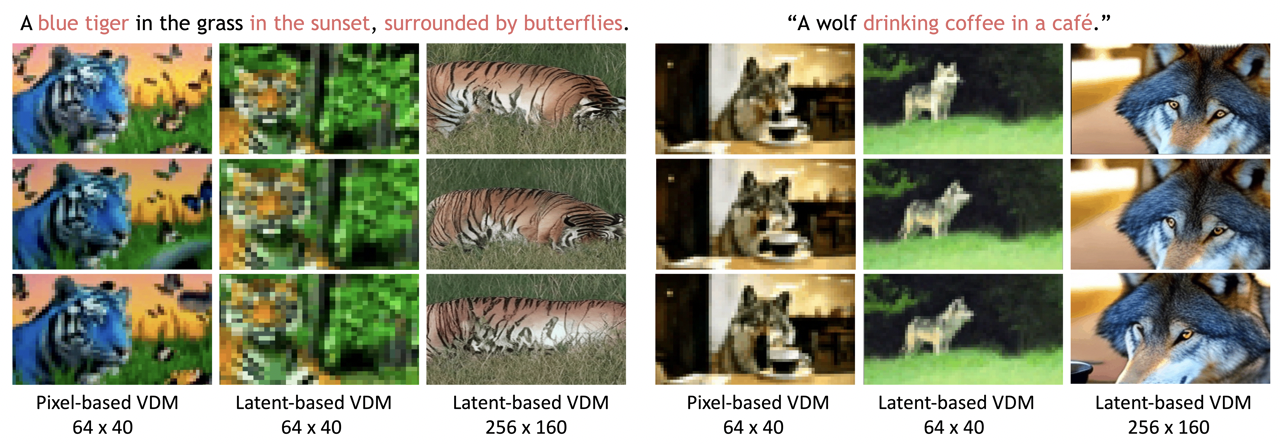

Pixel-based VDMs can generate motion accurately aligned with the textual prompt but typically incur high computational costs in terms of time and GPU memory, especially when generating high-resolution videos. Latent-based VDMs are more resource-efficient because they work in a reduced-dimension latent space.

However, it is challenging for such a small latent space (e.g., 64×40 for 256×160 videos) to cover the rich yet necessary visual semantic details described by the textual prompt. Therefore, as shown in the figure above, the generated videos are often not well aligned with the textual prompts. On the other hand, if the generated videos are of relatively high resolution (e.g., 256×160 videos), the latent model will focus more on spatial appearance but may also ignore the text-video alignment.
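To make the size gap concrete, the following minimal sketch counts spatial positions per frame using only the example resolutions quoted above. The function name `spatial_positions` is hypothetical and chosen for illustration; it is not part of the Show-1 code, and channel counts are deliberately left out because the text does not state them.

```python
def spatial_positions(width: int, height: int) -> int:
    """Number of spatial positions in one frame of the given size."""
    return width * height


# Example resolutions taken from the text above.
pixel_w, pixel_h = 256, 160
latent_w, latent_h = 64, 40

pixel_positions = spatial_positions(pixel_w, pixel_h)
latent_positions = spatial_positions(latent_w, latent_h)

# Per-axis downsampling factor implied by the two example sizes.
factor_w = pixel_w // latent_w
factor_h = pixel_h // latent_h

# How many pixel-space positions each latent position has to summarize.
compression = pixel_positions // latent_positions

print(f"pixel positions per frame:  {pixel_positions}")
print(f"latent positions per frame: {latent_positions}")
print(f"per-axis factor: {factor_w}x{factor_h}, overall: {compression}x")
```

Under these example sizes, every latent position stands in for a 4×4 patch of pixels, which is one way to see why fine details named in the prompt can be hard to represent.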

To marry the strengths and alleviate the weaknesses of pixel-based and latent-based VDMs, we introduce Show-1, an efficient text-to-video model that generates videos with not only decent video-text alignment but also high visual quality.
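The two-stage idea described above (a low-resolution video first, then upsampling) can be sketched as a simple control flow. This is only an illustration under stated assumptions: `two_stage_generate`, `toy_low_res_stage`, and `toy_upsample_stage` are hypothetical names, and the toy stages are placeholders (constant frames and nearest-neighbour repetition) that stand in for the pixel-based and latent-based VDMs. They do not reproduce the actual Show-1 models or its expert translation method.

```python
from typing import Callable, List

Frame = List[List[float]]  # one grayscale frame as rows of pixel values
Video = List[Frame]        # a video as a list of frames


def two_stage_generate(
    prompt: str,
    low_res_stage: Callable[[str], Video],
    upsample_stage: Callable[[Video, str], Video],
) -> Video:
    """Run a low-resolution stage, then an upsampling stage, on one prompt."""
    low_res_video = low_res_stage(prompt)
    return upsample_stage(low_res_video, prompt)


def toy_low_res_stage(prompt: str) -> Video:
    # Placeholder for a pixel-based model: 2 frames of 5 rows x 8 columns.
    return [[[0.5] * 8 for _ in range(5)] for _ in range(2)]


def toy_upsample_stage(video: Video, prompt: str, scale: int = 4) -> Video:
    # Placeholder for a latent-based upsampler: repeat every pixel
    # `scale` times along both axes (nearest-neighbour upsampling).
    upsampled = []
    for frame in video:
        new_frame = []
        for row in frame:
            wide_row = [value for value in row for _ in range(scale)]
            new_frame.extend(list(wide_row) for _ in range(scale))
        upsampled.append(new_frame)
    return upsampled


video = two_stage_generate(
    "a panda eating bamboo", toy_low_res_stage, toy_upsample_stage
)
print(len(video), len(video[0]), len(video[0][0]))
```

Passing the stages as callables keeps the control flow separate from the models, so either placeholder could be swapped for a real generator without changing `two_stage_generate`.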

Citation

If you make use of our work, please cite our paper.

@misc{zhang2023show1,
      title={Show-1: Marrying Pixel and Latent Diffusion Models for Text-to-Video Generation},
      author={David Junhao Zhang and Jay Zhangjie Wu and Jia-Wei Liu and Rui Zhao and Lingmin Ran and Yuchao Gu and Difei Gao and Mike Zheng Shou},
      year={2023},
      eprint={2309.15818},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}