Text2Video-Zero

Official code for

Text2Video-Zero: Text-to-Image Diffusion Models are Zero-Shot Video Generators*

Levon Khachatryan,

Andranik Movsisyan,

Vahram Tadevosyan,

Roberto Henschel,

Zhangyang Wang, Shant Navasardyan, Humphrey Shi

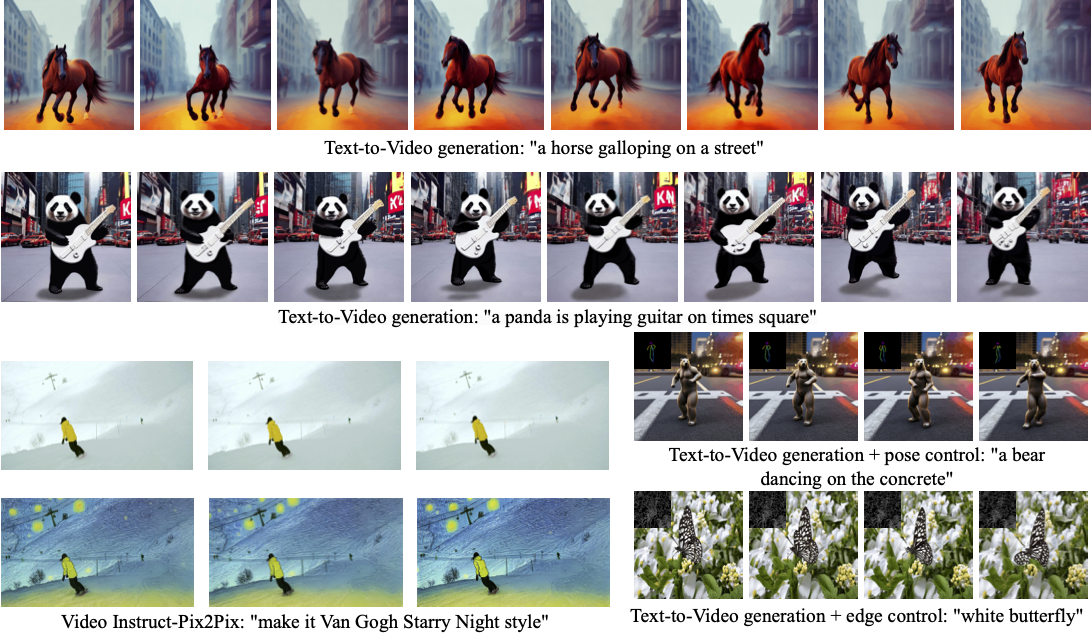

Our method Text2Video-Zero enables zero-shot video generation using (i) a textual prompt (see rows 1, 2), (ii) a prompt combined with guidance from poses or edges (see lower right), and (iii) Video Instruct-Pix2Pix, i.e., instruction-guided video editing (see lower left).

Results are temporally consistent and follow closely the guidance and textual prompts.

Related Links

- High-Resolution Image Synthesis with Latent Diffusion Models (a.k.a. LDM & Stable Diffusion)

- InstructPix2Pix: Learning to Follow Image Editing Instructions

- Adding Conditional Control to Text-to-Image Diffusion Models (a.k.a ControlNet)

- Diffusers

- Token Merging for Stable Diffusion

License

The code is published under the CreativeML Open RAIL-M license. The license provided in this repository applies to all additions and contributions we make upon the original stable diffusion code. The original stable diffusion code is under the CreativeML Open RAIL-M license, which can found here.

BibTeX

If you use our work in your research, please cite our publication:

@article{text2video-zero,

title={Text2Video-Zero: Text-to-Image Diffusion Models are Zero-Shot Video Generators},

author={Khachatryan, Levon and Movsisyan, Andranik and Tadevosyan, Vahram and Henschel, Roberto and Wang, Zhangyang and Navasardyan, Shant and Shi, Humphrey},

journal={arXiv preprint arXiv:2303.13439},

year={2023}

}