Readme

Non-Commercial use only!

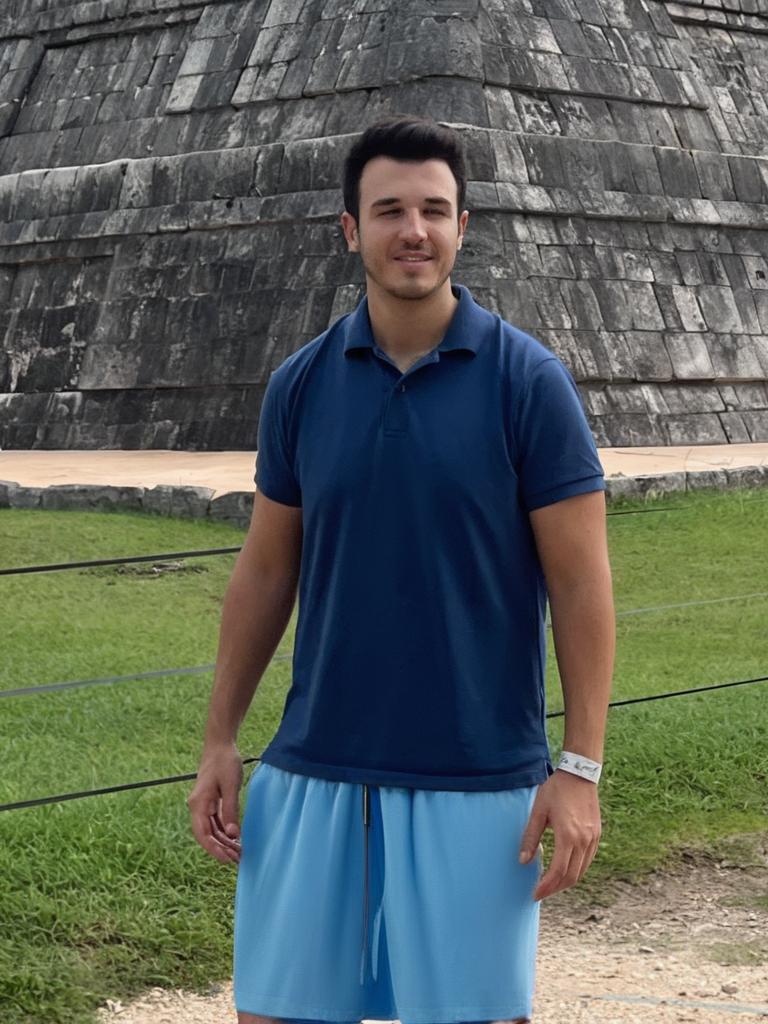

This is the current best-in-class virtual try-on model, created by the Korea Advanced Institute of Science & Technology (KAIST). It can perform virtual try-on “in the wild,” a setting that had been notoriously difficult for generative models to tackle until now.

IDM-VTON: Improving Diffusion Models for Authentic Virtual Try-on in the Wild

This is an official implementation of the paper ‘Improving Diffusion Models for Authentic Virtual Try-on in the Wild’ - paper - project page

TODO LIST

- [x] demo model
- [x] inference code
- [ ] training code

Acknowledgements

For the demo, the automatic mask generation code is based on OOTDiffusion and DCI-VTON.

Parts of the code are based on IP-Adapter.

Citation

@article{choi2024improving,
  title={Improving Diffusion Models for Virtual Try-on},
  author={Choi, Yisol and Kwak, Sangkyung and Lee, Kyungmin and Choi, Hyungwon and Shin, Jinwoo},
  journal={arXiv preprint arXiv:2403.05139},
  year={2024}
}

License

The code and checkpoints in this repository are licensed under CC BY-NC-SA 4.0.