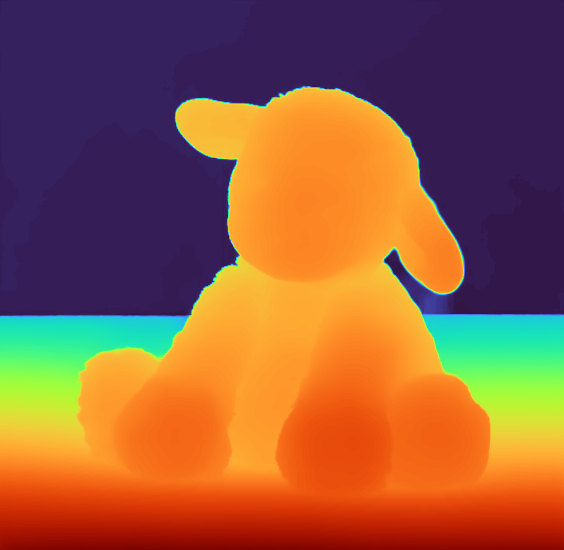

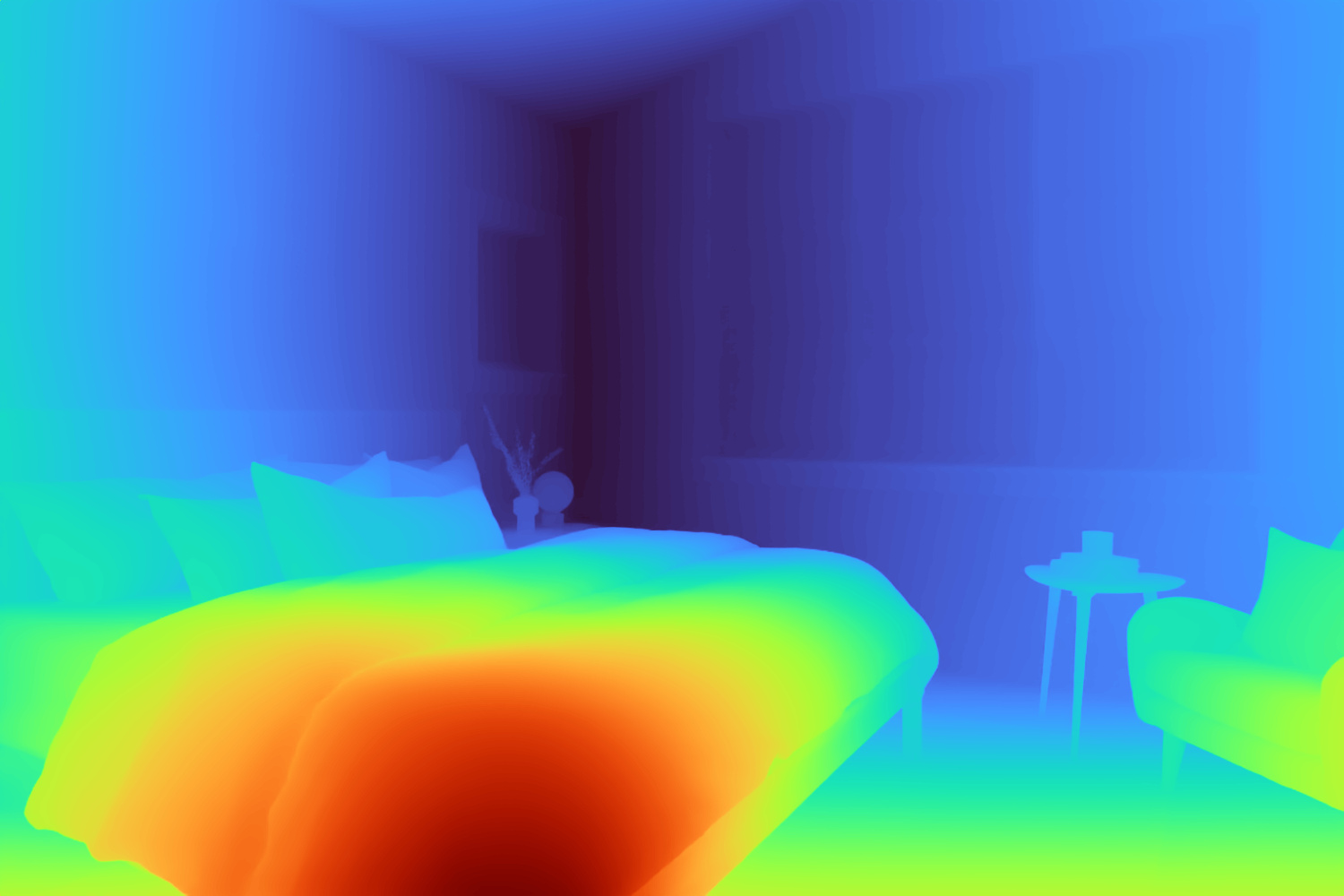

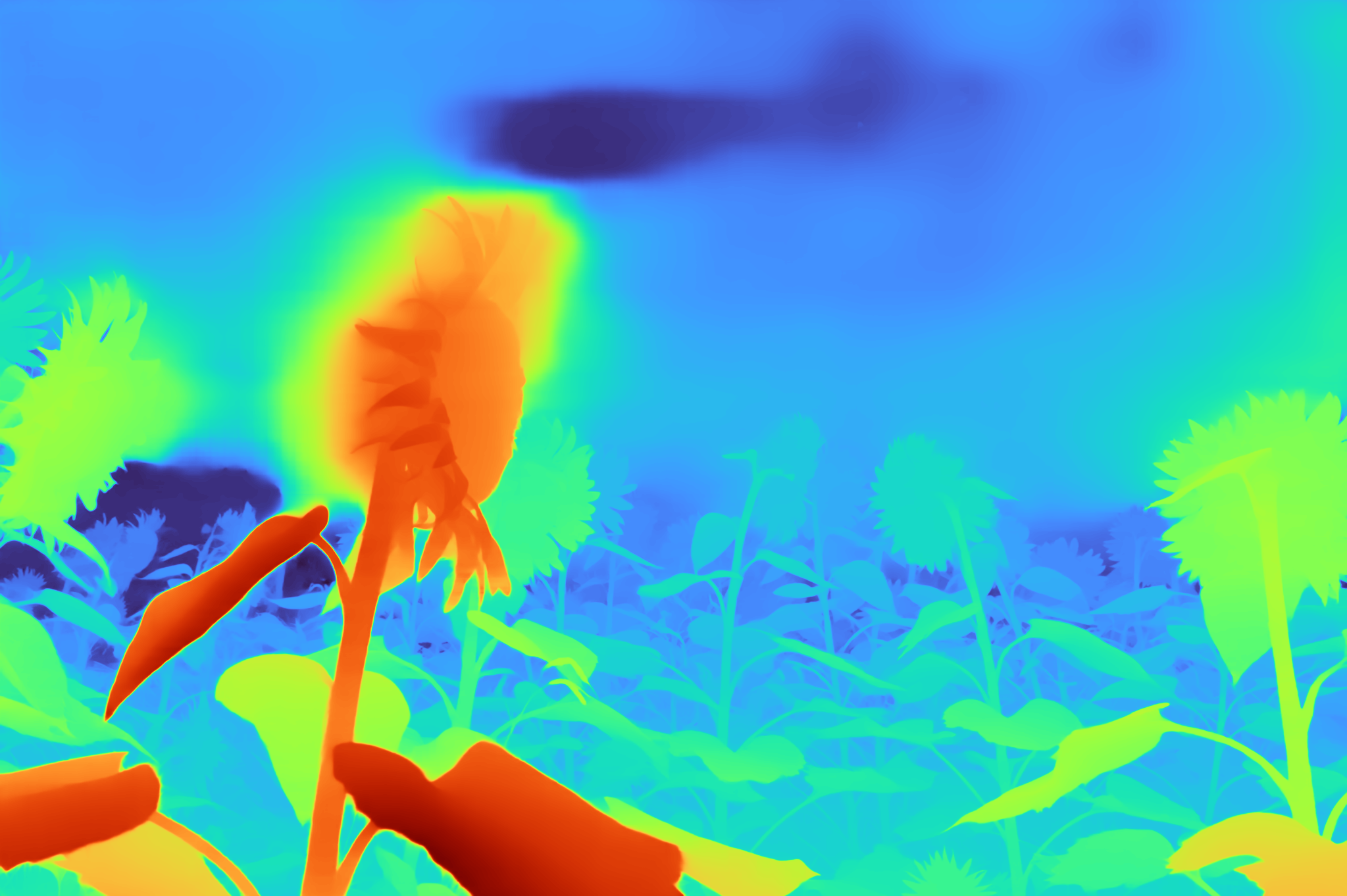

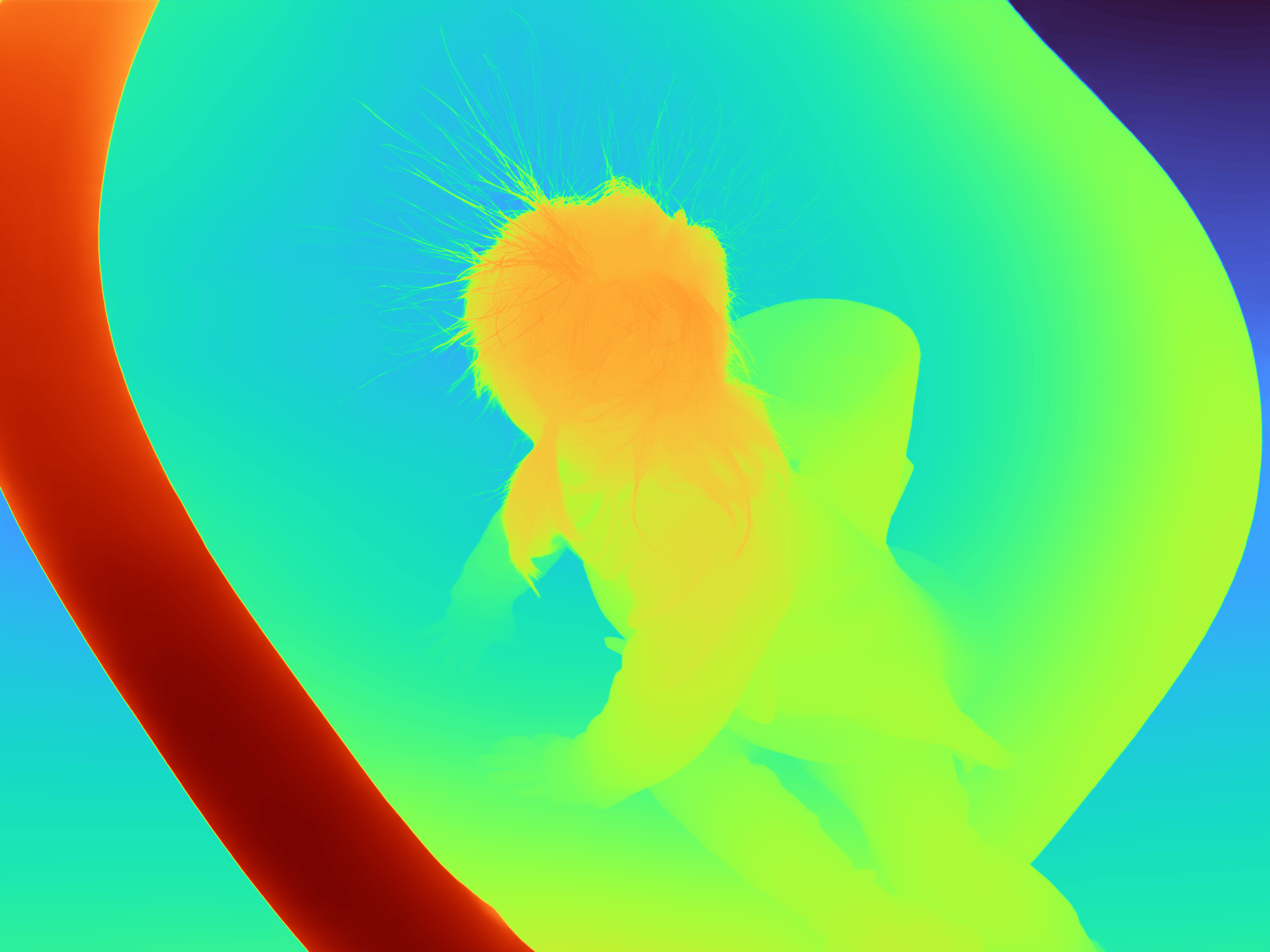

Depth Pro is a foundation model for zero-shot metric monocular depth estimation. It synthesizes high-resolution depth maps with unparalleled sharpness and high-frequency detail. Its predictions are metric, with absolute scale, and do not rely on metadata such as camera intrinsics. The model is also fast: it produces a 2.25-megapixel depth map in 0.3 seconds on a standard GPU. These characteristics are enabled by several technical contributions: an efficient multi-scale vision transformer for dense prediction; a training protocol that combines real and synthetic datasets to achieve high metric accuracy alongside fine boundary tracing; dedicated evaluation metrics for boundary accuracy in estimated depth maps; and state-of-the-art focal length estimation from a single image.
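Metric depth combined with an estimated focal length is enough to lift a single image into 3D. The NumPy code below is a minimal sketch of that step. It uses generic pinhole-camera geometry and is not code from Depth Pro. The function names `focal_length_px` and `backproject` are hypothetical. The sketch assumes square pixels, no lens distortion, a principal point at the image center, and a field of view measured horizontally.

```python
import numpy as np


def focal_length_px(image_width: int, hfov_deg: float) -> float:
    """Convert a horizontal field of view (degrees) to a focal length in pixels.

    Pinhole relation: f = (W / 2) / tan(hfov / 2).
    """
    return 0.5 * image_width / np.tan(0.5 * np.deg2rad(hfov_deg))


def backproject(depth_m: np.ndarray, f_px: float) -> np.ndarray:
    """Lift an (H, W) metric depth map to (H, W, 3) camera-space points in meters.

    Assumes square pixels and a principal point at the image center (hypothetical
    simplifications; real cameras may need full intrinsics).
    """
    h, w = depth_m.shape
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0
    # u holds column indices, v holds row indices; both have shape (H, W).
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    x = (u - cx) * depth_m / f_px
    y = (v - cy) * depth_m / f_px
    return np.stack([x, y, depth_m], axis=-1)


# Usage with a hypothetical depth map: a flat wall 2 m in front of a 640x480 camera.
depth = np.full((480, 640), 2.0)
f = focal_length_px(640, 90.0)
points = backproject(depth, f)
```

Because the depth is already in meters, the resulting point cloud is also in meters. A relative (scale-free) depth map would instead produce a point cloud with an unknown global scale.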

Note: the publicly released model and code are a reference implementation. The model's performance is close to that reported in the paper but does not match it exactly.

Please see the authors' repository and paper for more details: https://github.com/apple/ml-depth-pro