Marigold Normals v1.1 – Surface Normals Estimation (Replicate Wrapper)

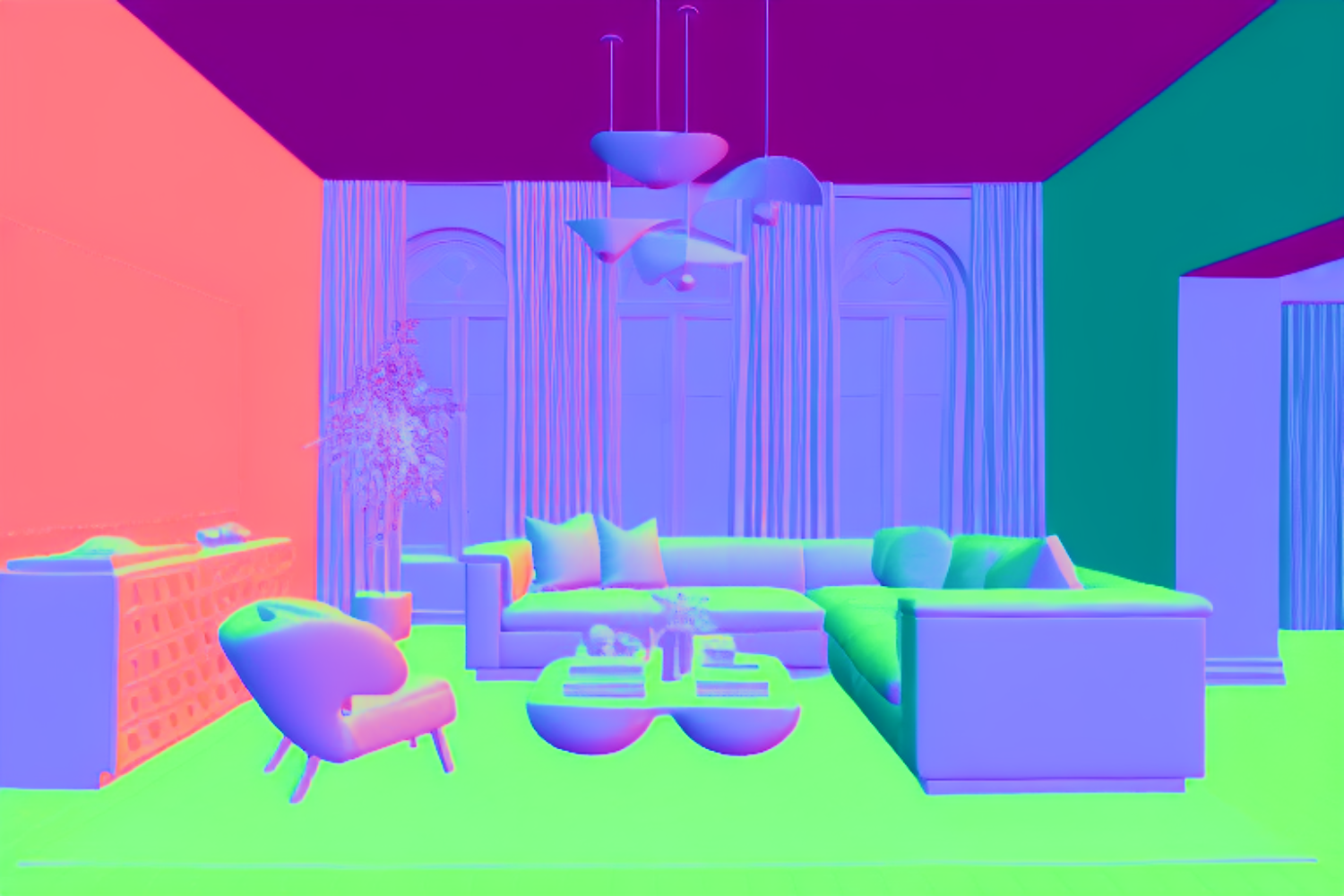

This model wraps prs-eth/marigold-normals-v1-1 and exposes it as a simple image-to-image API on Replicate for estimating per-pixel surface normals from a single RGB image.

Under the hood it uses the official MarigoldNormalsPipeline from 🤗 Diffusers and returns a visualized RGB normal map suitable for direct use in 3D / CV pipelines or for inspection in standard image tools.

What this model does

Takes one RGB image as input.

Runs it through Marigold Normals v1.1, a diffusion-based foundation model trained to predict dense surface normals from in-the-wild images.

Outputs a PNG image where each pixel encodes the estimated surface normal in camera space, mapped to an RGB visualization in [0, 255].

Typical use cases:

Room / object understanding for 3D reconstruction.

Relighting, material editing, and intrinsic pipelines where normals are a prerequisite.

Measuring wall/floor orientation, surface smoothness, or geometric consistency.

As a building block in AR/VR or robotics perception systems.

Inputs

image (required)

Type: image (file upload or URL on Replicate)

Description: RGB image of a single scene (interiors, exteriors, objects, etc.).

The predictor converts the image to RGB internally.

num_inference_steps (optional)

Type: integer

Default: 4

Description: Number of denoising steps for the diffusion process. Higher values can be marginally more accurate but are slower. The original authors recommend 1–4 steps for a good speed / quality trade-off.

Outputs

The model returns a single PNG file:

Filename: normals_output.png

Type: image

Description:

Visualized surface normals in camera space.

Internally, Marigold predicts normals as 3-D unit vectors with values in [-1, 1]; this wrapper:

Converts them into a NumPy / Torch array.

Normalizes the values robustly into [0, 1].

Maps to an 8-bit RGB image in [0, 255].

Ensures shape is H × W × 3 before saving as PNG.
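
The steps above can be sketched in plain Python as follows. This is a minimal illustration, not the wrapper's actual code: the function name `normals_to_rgb8` is hypothetical, the input is assumed to be an H × W grid of (x, y, z) tuples, and the min/max is assumed to be taken globally over all channels.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Rgb8 = Tuple[int, int, int]


def normals_to_rgb8(normals: Sequence[Sequence[Vec3]]) -> List[List[Rgb8]]:
    """Min/max-normalize an H x W grid of normal vectors into 8-bit RGB.

    Hypothetical helper: assumes a single global min/max across all
    channels, which may differ from what the wrapper really does.
    """
    values = [c for row in normals for vec in row for c in vec]
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        span = 1.0  # constant input: avoid dividing by zero

    def to_byte(c: float) -> int:
        unit = (c - lo) / span          # rescale into [0, 1]
        return int(round(unit * 255))   # then into [0, 255]

    return [[(to_byte(x), to_byte(y), to_byte(z)) for (x, y, z) in row]
            for row in normals]
```

With real images the same arithmetic would normally be done on a NumPy or Torch array rather than nested lists.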

You can treat the output as either:

A visual normal map to inspect in an image viewer, or

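A data carrier: load the PNG back into your code and remap RGB values from [0, 255] to [-1, 1] to recover approximate normal vectors. Because the wrapper normalizes by the observed min/max and quantizes to 8 bits, the remapped values are not exact; renormalize each vector to unit length before using it.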
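
As a rough sketch of that remapping, the helper below decodes one RGB pixel into a unit-length vector. The name `decode_normal` is hypothetical, and the linear mapping assumes the PNG spans the full [-1, 1] range, which the min/max normalization in this wrapper does not guarantee.

```python
import math
from typing import Tuple


def decode_normal(r: float, g: float, b: float) -> Tuple[float, float, float]:
    """Map one 8-bit RGB pixel back to an approximate unit normal.

    Assumes 0 encodes -1 and 255 encodes +1 on every channel.
    """
    x, y, z = (2.0 * c / 255.0 - 1.0 for c in (r, g, b))
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        return (0.0, 0.0, 0.0)  # degenerate pixel: no direction to recover
    return (x / length, y / length, z / length)
```

For example, `decode_normal(128, 128, 255)` yields a vector pointing almost exactly along the positive z axis.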

API usage examples

Using Replicate's Python client

    import replicate
    from pathlib import Path

    image_path = "input.jpg"

    output = replicate.run(
        "your-username/marigold-normals:latest",
        input={
            "image": open(image_path, "rb"),
            "num_inference_steps": 4,
        },
    )

    # output should be a URL or a file reference to normals_output.png
    print("Normals map:", output)
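
Since the result may arrive as a URL or as a file-like object depending on the client version, a small helper can save it either way. This is a sketch under assumptions: `save_output` is a hypothetical name, file-like results are assumed to expose `read()`, and anything else is treated as a URL string.

```python
import urllib.request
from pathlib import Path
from typing import Any


def save_output(output: Any, dest: str = "normals_output.png") -> Path:
    """Write a prediction result to disk, whatever form it arrives in."""
    if isinstance(output, (list, tuple)):
        output = output[0]  # assumption: a single file may be wrapped in a list
    path = Path(dest)
    if hasattr(output, "read"):
        # File-like result: copy its bytes directly.
        path.write_bytes(output.read())
    else:
        # Otherwise treat it as a URL and download it.
        with urllib.request.urlopen(str(output)) as response:
            path.write_bytes(response.read())
    return path
```

Calling `save_output(output)` after `replicate.run(...)` would then leave the normal map in the working directory.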

Using curl

    curl -s -X POST \
      -H "Authorization: Token $REPLICATE_API_TOKEN" \
      -H "Content-Type: application/json" \
      -d '{
        "version": "YOUR_VERSION_HASH",
        "input": {
          "image": "https://example.com/your_image.jpg",
          "num_inference_steps": 4
        }
      }' \
      https://api.replicate.com/v1/predictions

Replace YOUR_VERSION_HASH with the version ID from your Replicate model page.
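
The same request can be assembled from Python using only the standard library. The sketch below mirrors the curl call; `build_prediction_request` is a hypothetical helper, and it only builds the request so that it can be inspected before anything is sent.

```python
import json
import urllib.request

API_URL = "https://api.replicate.com/v1/predictions"


def build_prediction_request(
    token: str,
    version: str,
    image_url: str,
    num_inference_steps: int = 4,
) -> urllib.request.Request:
    """Build (but do not send) the POST request shown in the curl example."""
    payload = {
        "version": version,
        "input": {
            "image": image_url,
            "num_inference_steps": num_inference_steps,
        },
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Token {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would submit the prediction; the JSON response then describes its status.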

Implementation details (for power users)

Base model: prs-eth/marigold-normals-v1-1 loaded via MarigoldNormalsPipeline.from_pretrained.

Device & dtype:

Uses cuda if available, otherwise falls back to CPU.

Uses float16 on GPU and float32 on CPU to balance speed and memory.

Progress bar: Disabled in the pipeline to keep logs clean in production.

Output handling:

Tries out.prediction, then out.pred_normals, then out.images[0] to be robust to small upstream changes in Diffusers.

Normalizes to [0,1] based on observed min/max, handling both [-1,1] and [0,1] ranges.

Guarantees the result is a 3-channel RGB PIL.Image before saving as normals_output.png.
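
The loading and output-handling behavior described above might look roughly like the sketch below. It is an illustration, not the wrapper's source: the helper names are hypothetical, and `load_pipeline` imports `torch` and `diffusers` lazily so the other helpers can be used without them installed.

```python
from typing import Any, Tuple


def select_device_and_dtype(cuda_available: bool) -> Tuple[str, str]:
    """Pick float16 on GPU and float32 on CPU, as described above."""
    if cuda_available:
        return "cuda", "float16"
    return "cpu", "float32"


def extract_prediction(out: Any) -> Any:
    """Return the first populated field, following the fallback order above."""
    for name in ("prediction", "pred_normals"):
        value = getattr(out, name, None)
        if value is not None:
            return value
    images = getattr(out, "images", None)
    if images is not None and len(images) > 0:
        return images[0]
    raise AttributeError("pipeline output has no recognized normals field")


def load_pipeline() -> Any:
    """Load the upstream checkpoint with the progress bar disabled."""
    import torch  # imported lazily: only needed when actually loading
    from diffusers import MarigoldNormalsPipeline

    device, dtype_name = select_device_and_dtype(torch.cuda.is_available())
    pipe = MarigoldNormalsPipeline.from_pretrained(
        "prs-eth/marigold-normals-v1-1",
        torch_dtype=getattr(torch, dtype_name),
    ).to(device)
    pipe.set_progress_bar_config(disable=True)
    return pipe
```

Checking fields in a fixed order keeps the predictor working if an upstream release renames the output attribute, at the cost of silently accepting whichever field appears first.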

Limitations

Works best on natural images of real-world scenes; performance may degrade on cartoons, line drawings, or highly stylized content.

The normals are model estimates, not measurements: they may carry model bias, are not guaranteed to be geometrically exact, and can be noisy in weakly textured or heavily occluded regions.

Very low-resolution or extremely wide aspect ratio images can lead to less stable predictions.

Upstream model, license & credits

This Replicate model is a thin wrapper around the official Marigold Normals v1.1 checkpoint:

Upstream model: prs-eth/marigold-normals-v1-1 on Hugging Face

Original project: Marigold – Generative Computer Vision

License: The upstream weights are released under the CreativeML Open RAIL++-M License, as linked from the model card: https://huggingface.co/stabilityai/stable-diffusion-2/blob/main/LICENSE-MODEL

By using this Replicate model, you agree to comply with the original license terms, including any restrictions on commercial use, redistribution, and content/usage constraints. Please review the license carefully for your use case.

Citation

If you use this model in academic work, please cite the Marigold papers (see links in the model card):

Ke et al., “Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation”, CVPR 2024.

Ke et al., “Marigold: Affordable Adaptation of Diffusion-Based Image Generators for Image Analysis”, journal extension.