Readme

Model description

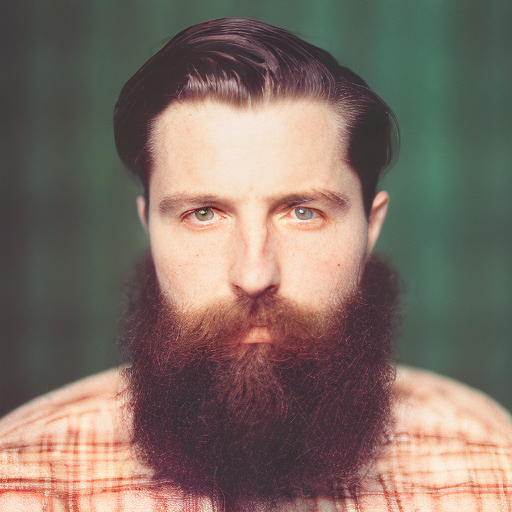

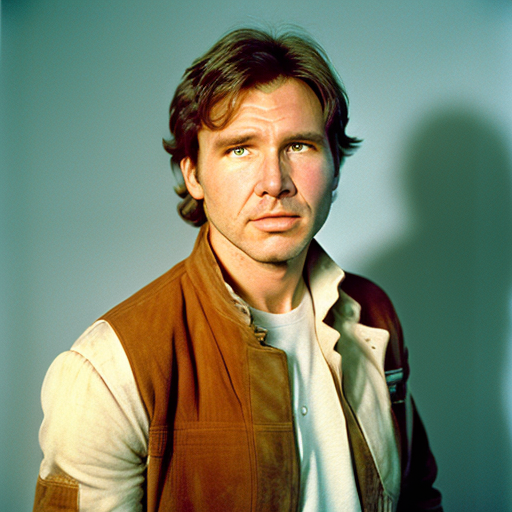

In your prompt, use the activation token: analog style
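A minimal sketch of making sure the activation token is present before a prompt is sent. The helper name `with_activation_token` is hypothetical and not part of this repo; it only illustrates the convention above.

```python
ACTIVATION_TOKEN = "analog style"


def with_activation_token(prompt: str, token: str = ACTIVATION_TOKEN) -> str:
    """Return the prompt with the activation token prepended if it is missing."""
    cleaned = prompt.strip()
    # Case-insensitive check so "Analog Style ..." is not prefixed a second time.
    if token.lower() in cleaned.lower():
        return cleaned
    return f"{token} {cleaned}".strip()


print(with_activation_token("portrait of a sailor, 35mm film"))
```

Prompts that already contain the token are returned unchanged apart from trimmed whitespace.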

Based on wavymulder/Analog-Diffusion. The Replicate deployment is adapted from the original lora-inference repo by cloneofsimo. I’m using this to run experiments easily without having to spin up a GPU server for the AUTOMATIC1111 web UI.

Main modifications are:

- Add RealESRGAN for upscaling

- Add CLIP Interrogator for auto-generating the initial img2img prompt

- Add rembg for background removal from the init image

- Automatically resize the adapter condition image to match the output image size

- Modify the Replicate output schema to return additional info, such as the seed and the CLIP Interrogator generated prompt (the API works, but this breaks the Replicate web UI explorer)

- Modify the download-weights, test and deploy scripts to download and cache large model files. The cache files are baked into the container image to avoid a long wait for model downloads every time the container warms up

…

Caveats and recommendations

- Set verbose_response: false in the Replicate web UI. Setting it to true breaks the web UI.

- To view the output images in the Replicate web UI, use version eff6035c

- To receive a verbose response with additional fields (CLIP Interrogator generated prompt, seed used, etc.), use version 1924c521
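The version choice above could be sketched as follows. This is an illustration under assumptions, not the deployment's documented API: `build_request` and `run_on_replicate` are hypothetical helpers, the `prompt` input name is assumed, and the version strings are only the truncated prefixes listed above, so the full hash and the model name must be supplied by the caller. Only `replicate.run` comes from the official Replicate Python client.

```python
# Version prefixes as listed above; the full hashes are not given in this README.
WEB_UI_VERSION = "eff6035c"
VERBOSE_VERSION = "1924c521"


def build_request(prompt: str, verbose: bool = False) -> dict:
    """Pick the version prefix and input payload for the desired response shape."""
    return {
        "version": VERBOSE_VERSION if verbose else WEB_UI_VERSION,
        # "prompt" is an assumed input name; "verbose_response" is from the caveats above.
        "input": {"prompt": prompt, "verbose_response": verbose},
    }


def run_on_replicate(model: str, full_version: str, request: dict):
    """Send the request with the `replicate` client (requires REPLICATE_API_TOKEN)."""
    import replicate  # imported lazily so build_request works without the client installed

    return replicate.run(f"{model}:{full_version}", input=request["input"])


print(build_request("analog style portrait of a sailor", verbose=True))
```

Keeping the payload construction separate from the network call makes it easy to check which version and flags will be used before spending GPU time.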

…