
Toward interpretable polyphonic sound event detection with attention maps based on local prototypes

Implementation of the model reported in:

Zinemanas, P.; Rocamora, M.; Fonseca, E.; Font, F.; Serra, X. Toward interpretable polyphonic sound event detection with attention maps based on local prototypes. Proceedings of the Detection and Classification of Acoustic Scenes and Events 2021 Workshop (DCASE2021). Barcelona, Spain.

Overview

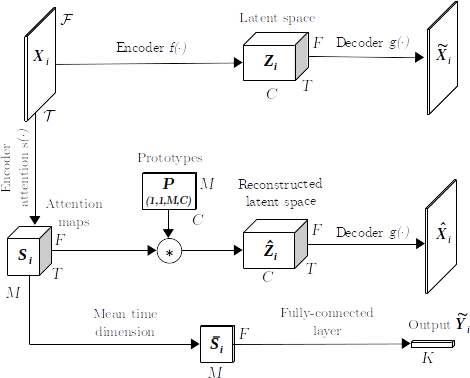

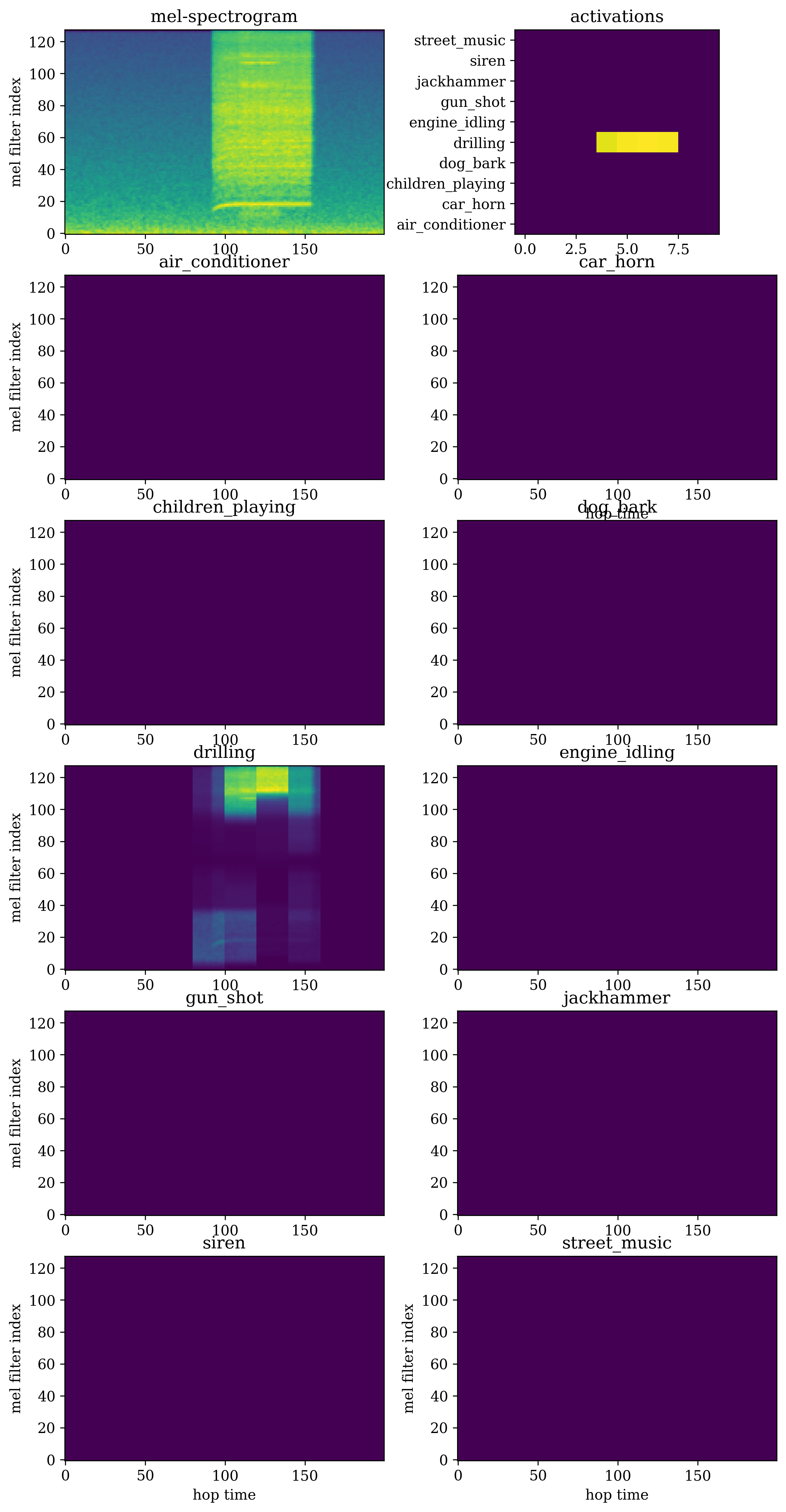

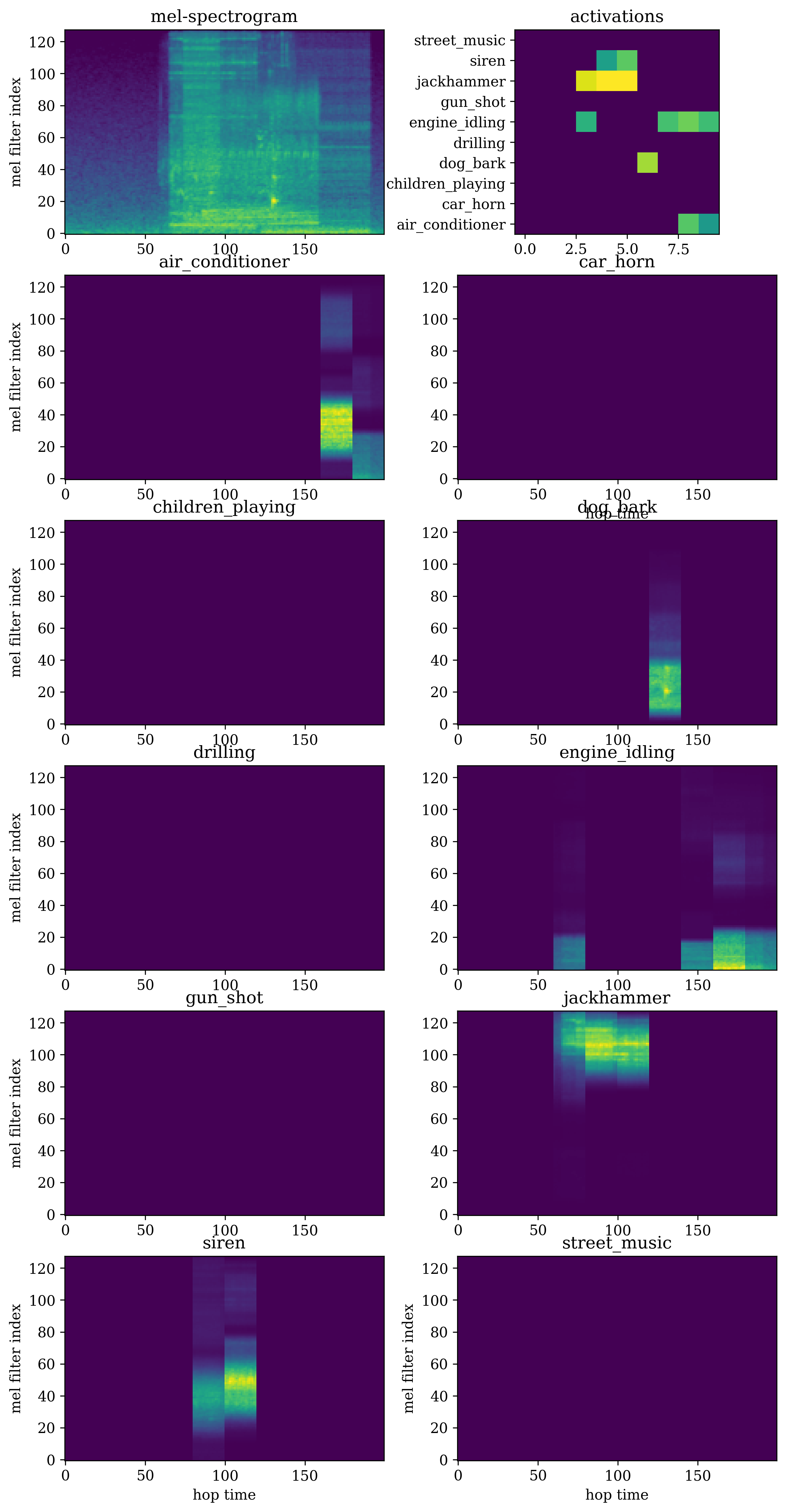

In this work, we present a novel interpretable model for polyphonic sound event detection. It addresses one of the limitations of our previous work, namely the difficulty of handling a multi-label setting properly. The proposed architecture incorporates a prototype layer and an attention mechanism. The network learns a set of local prototypes in the latent space, each representing a patch of the input representation. In addition, it learns attention maps that position the local prototypes and are used to reconstruct the latent space. The predictions are based solely on the attention maps, so the explanations the model provides are the attention maps and the corresponding local prototypes. Moreover, the prototypes can be reconstructed to the audio domain for inspection. The results obtained in urban sound event detection are comparable to those of two opaque baselines, with fewer parameters and the added benefit of interpretability.
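
The data flow described above (local prototypes, attention maps, latent reconstruction, predictions from the attention maps alone) can be illustrated with a small NumPy sketch. This is not the code of this repository or the exact formulation in the paper. The function names, the tensor shapes, the distance-based softmax used to obtain the attention maps, the mean pooling over frequency, and the linear output layer are all assumptions made for illustration, and the inputs are random rather than learned.

```python
import numpy as np


def attention_maps(z, prototypes):
    """Hypothetical attention: softmax over prototypes of negative squared distance.

    z: latent representation, shape (T, F, D).
    prototypes: local prototypes, shape (M, D).
    Returns attention maps of shape (M, T, F) that sum to 1 over the M axis.
    """
    diff = z[None, :, :, :] - prototypes[:, None, None, :]  # (M, T, F, D)
    logits = -np.sum(diff ** 2, axis=-1)                    # (M, T, F)
    logits = logits - logits.max(axis=0, keepdims=True)     # numerical stability
    weights = np.exp(logits)
    return weights / weights.sum(axis=0, keepdims=True)


def reconstruct_latent(attention, prototypes):
    """Rebuild the latent space as an attention-weighted sum of prototypes."""
    return np.einsum("mtf,md->tfd", attention, prototypes)  # (T, F, D)


def predict_events(attention, class_weights, bias):
    """Multi-label predictions computed from the attention maps only.

    Assumed design: average each map over frequency, then apply a linear
    layer and a sigmoid per time frame.
    """
    pooled = attention.mean(axis=2)               # (M, T)
    logits = pooled.T @ class_weights + bias      # (T, C)
    return 1.0 / (1.0 + np.exp(-logits))


# Toy sizes (illustrative): time frames, frequency bins, latent channels,
# number of prototypes, number of event classes.
T, F, D, M, C = 8, 4, 16, 5, 3
rng = np.random.default_rng(0)

z = rng.normal(size=(T, F, D))           # stand-in for an encoder output
prototypes = rng.normal(size=(M, D))     # stand-in for learned prototypes

attention = attention_maps(z, prototypes)
z_hat = reconstruct_latent(attention, prototypes)
probs = predict_events(attention, rng.normal(size=(M, C)), np.zeros(C))

print(attention.shape, z_hat.shape, probs.shape)
```

In this sketch the classifier never sees `z` directly, only `attention`, which mirrors the idea that each prediction can be explained by where each prototype is placed in the time-frequency plane.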