[CVPR 2020] 3D Photography using Context-aware Layered Depth Inpainting

[Paper] [Project Website] [Google Colab]

Usage

Input an image to generate a 3D photo; you can select from a dropdown of special effects to apply to the output video.

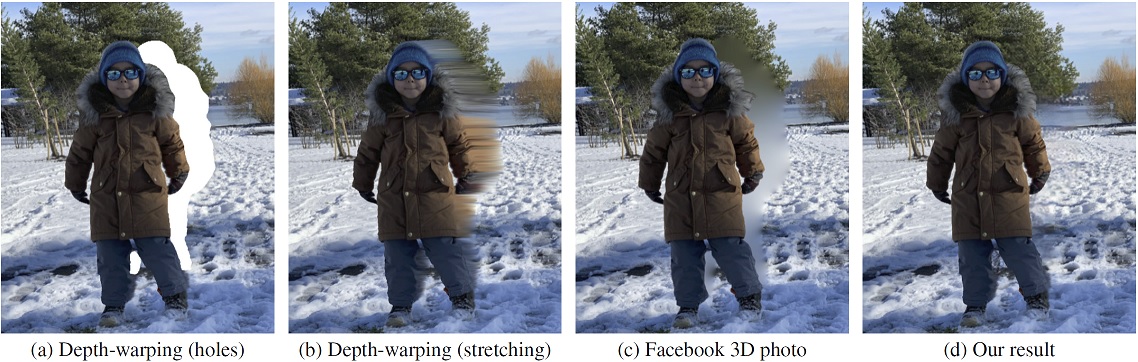

We propose a method for converting a single RGB-D input image into a 3D photo, i.e., a multi-layer representation for novel view synthesis that contains hallucinated color and depth structures in regions occluded in the original view. We use a Layered Depth Image with explicit pixel connectivity as underlying representation, and present a learning-based inpainting model that iteratively synthesizes new local color-and-depth content into the occluded region in a spatial context-aware manner. The resulting 3D photos can be efficiently rendered with motion parallax using standard graphics engines. We validate the effectiveness of our method on a wide range of challenging everyday scenes and show fewer artifacts when compared with the state-of-the-arts.

3D Photography using Context-aware Layered Depth Inpainting

Meng-Li Shih,

Shih-Yang Su,

Johannes Kopf, and

Jia-Bin Huang

In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

License

This work is licensed under MIT License. See LICENSE for details.

If you find our code/models useful, please consider citing our paper:

@inproceedings{Shih3DP20,

author = {Shih, Meng-Li and Su, Shih-Yang and Kopf, Johannes and Huang, Jia-Bin},

title = {3D Photography using Context-aware Layered Depth Inpainting},

booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2020}

}

Acknowledgments

- We thank Pratul Srinivasan for providing clarification of the method Srinivasan et al. CVPR 2019.

- We thank the author of Zhou et al. 2018, Choi et al. 2019, Mildenhall et al. 2019, Srinivasan et al. 2019, Wiles et al. 2020, Niklaus et al. 2019 for providing their implementations online.

- Our code builds upon EdgeConnect, MiDaS and pytorch-inpainting-with-partial-conv