Readme

This is a Cog implementation of HAT: https://github.com/XPixelGroup/HAT

HAT [Paper Link]

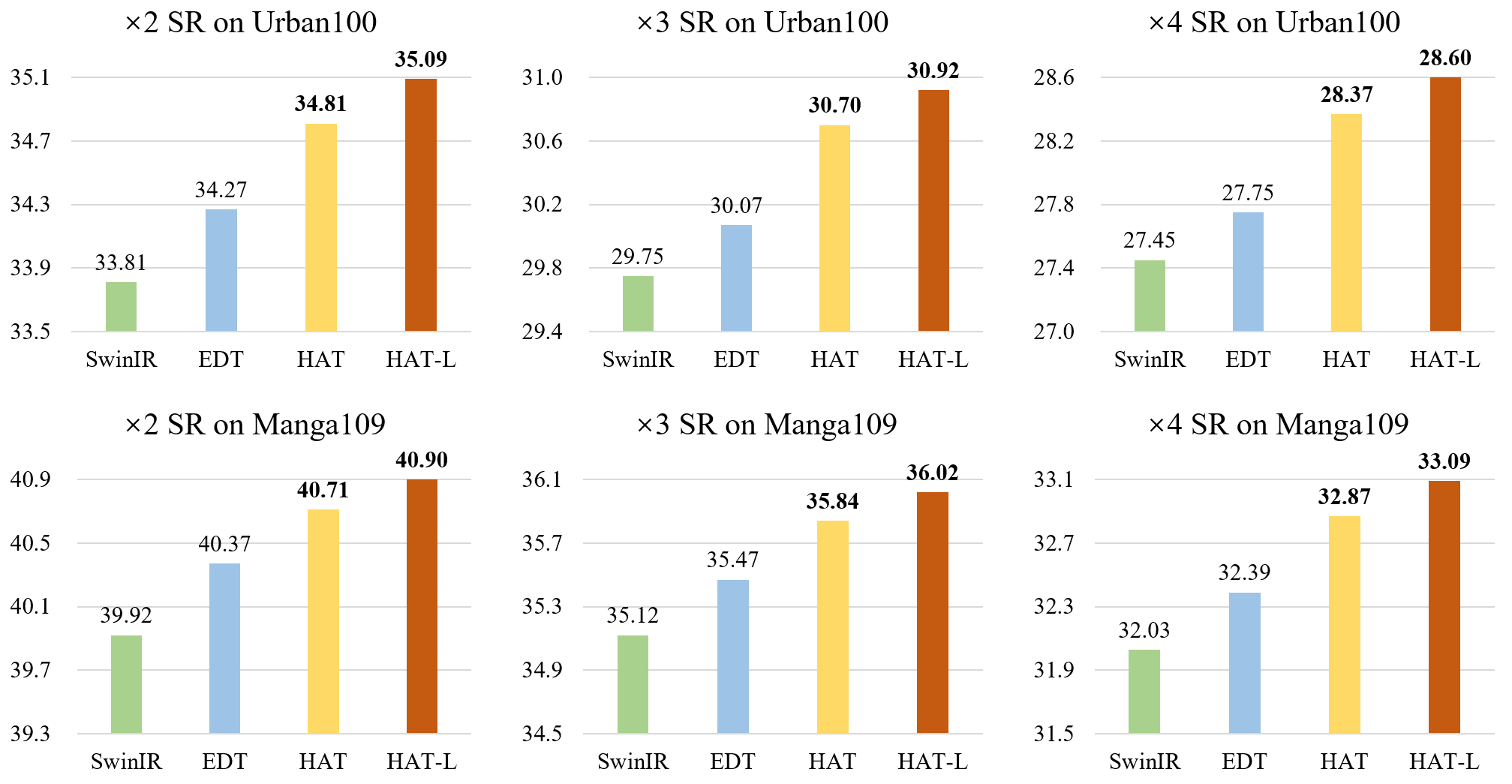

Activating More Pixels in Image Super-Resolution Transformer

Xiangyu Chen, Xintao Wang, Jiantao Zhou and Chao Dong

BibTeX

    @article{chen2022activating,
      title={Activating More Pixels in Image Super-Resolution Transformer},
      author={Chen, Xiangyu and Wang, Xintao and Zhou, Jiantao and Dong, Chao},
      journal={arXiv preprint arXiv:2205.04437},
      year={2022}
    }

Contact

If you have any questions, please email chxy95@gmail.com or join the BasicSR WeChat group to discuss them with the authors.