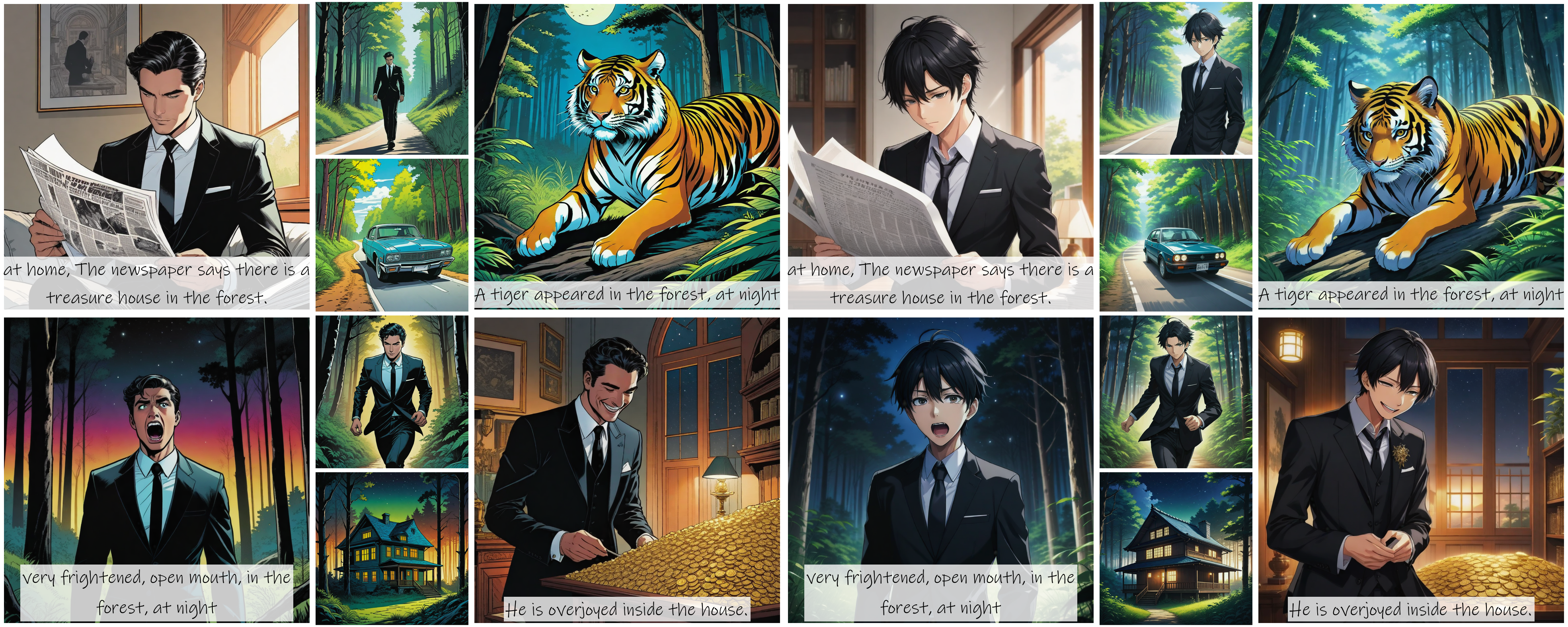

camenduru/story-diffusion

StoryDiffusion: Consistent Self-Attention for Long-Range Image and Video Generation

Public

1.3K runs