Demo for deepseek-math-7b-instruct: https://replicate.com/cjwbw/deepseek-math-7b-instruct

Introduction

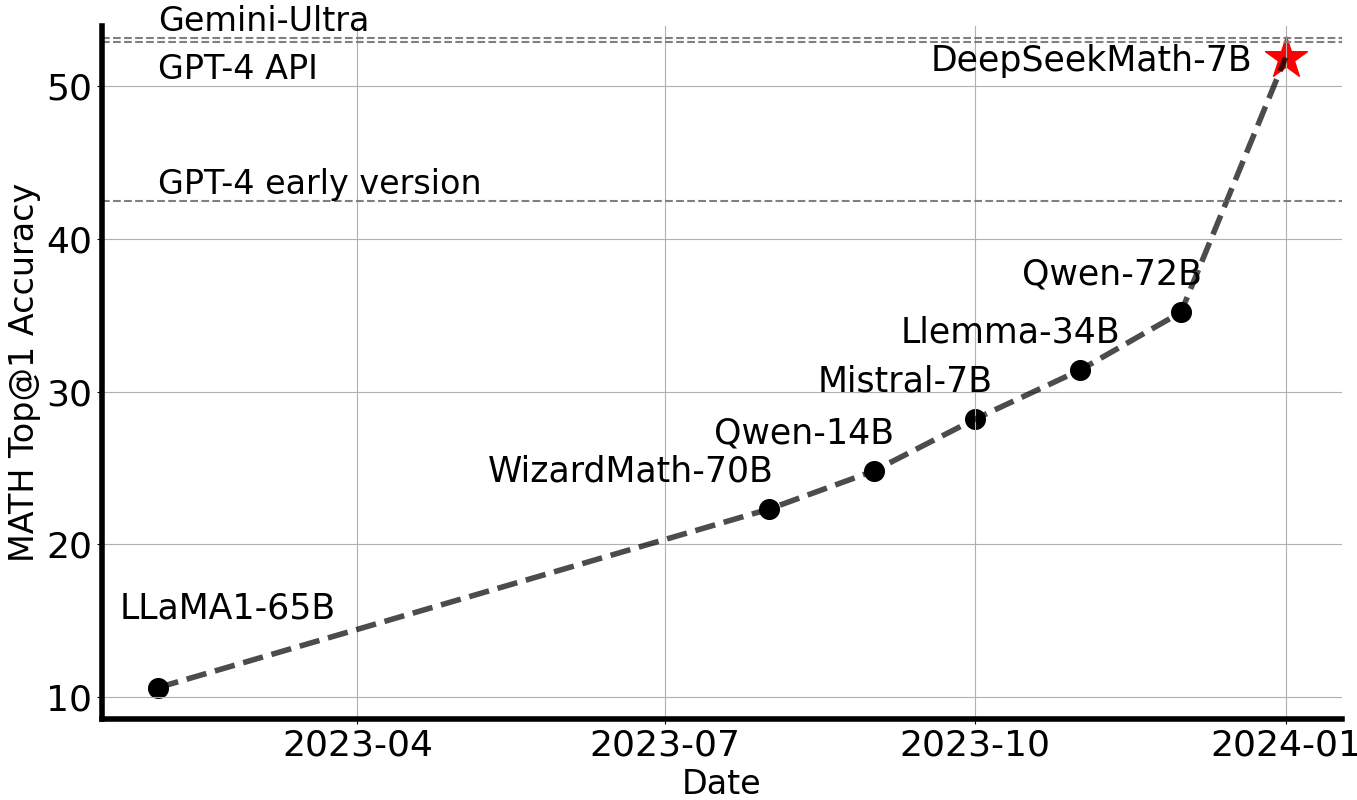

DeepSeekMath is initialized with DeepSeek-Coder-v1.5 7B and continues pre-training for 500B tokens on math-related data sourced from Common Crawl, together with natural language and code data. DeepSeekMath 7B scores 51.7% on the competition-level MATH benchmark without relying on external toolkits or voting techniques, approaching the performance of Gemini-Ultra and GPT-4. For research purposes, we release the base, instruct, and RL model checkpoints to the public.

License

This code repository is licensed under the MIT License. The use of DeepSeekMath models is subject to the Model License. DeepSeekMath supports commercial use.

See the LICENSE-CODE and LICENSE-MODEL files for more details.

Citation

@misc{deepseek-math,
  author = {Zhihong Shao and Peiyi Wang and Qihao Zhu and Runxin Xu and Junxiao Song and Mingchuan Zhang and Y.K. Li and Y. Wu and Daya Guo},
  title = {DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models},
  journal = {CoRR},
  volume = {abs/2402.03300},
  year = {2024},
  url = {https://arxiv.org/abs/2402.03300},
}

Contact

If you have any questions, please raise an issue or contact us at service@deepseek.com.