Readme

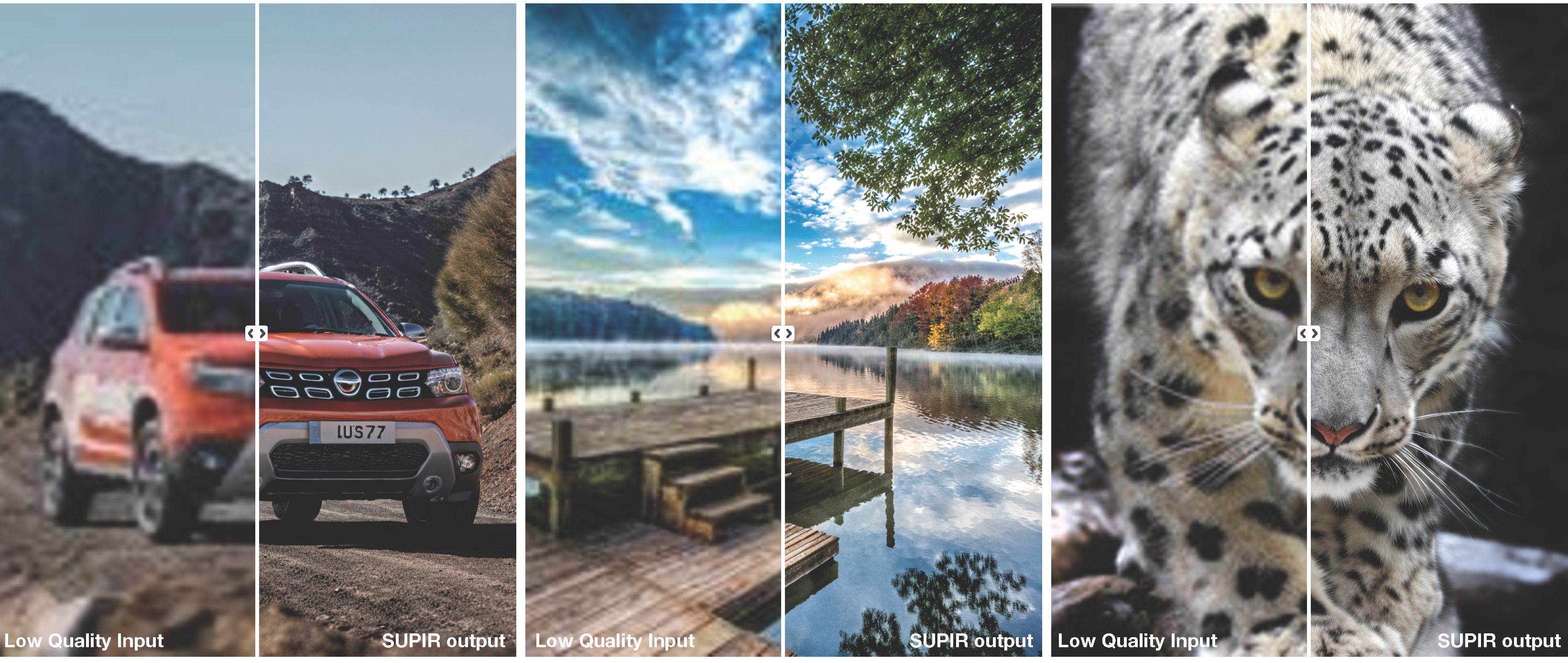

Scaling Up to Excellence: Practicing Model Scaling for Photo-Realistic Image Restoration In the Wild

NOTE: Due to the memory requirement for LLaVA-13b and both SUPIR-v0F and SUPIR-v0Q, this model is posted on 80G A100. To run on 40G A40, try models https://replicate.com/cjwbw/supir-v0q or https://replicate.com/cjwbw/supir-v0f that do not include LLaVA-13b.

SUPIR-v0Q: Default training settings with paper. High generalization and high image quality in most cases.SUPIR-v0F: Training with light degradation settings. Stage1 encoder of SUPIR-v0F remains more details when facing light degradations.

BibTeX

@misc{yu2024scaling,

title={Scaling Up to Excellence: Practicing Model Scaling for Photo-Realistic Image Restoration In the Wild},

author={Fanghua Yu and Jinjin Gu and Zheyuan Li and Jinfan Hu and Xiangtao Kong and Xintao Wang and Jingwen He and Yu Qiao and Chao Dong},

year={2024},

eprint={2401.13627},

archivePrefix={arXiv},

primaryClass={cs.CV}

}