Readme

Model by Álvaro Barbero Jiménez.

Model Information

Current image generation methods, such as Stable Diffusion, struggle to position objects at specific locations. While the content of the generated image (somewhat) reflects the objects present in the prompt, it is difficult to frame the prompt in a way that creates an specific composition. For instance, take a prompt expressing a complex composition such as:

A charming house in the countryside on the left, in the center a dirt road in the countryside crossing pastures, on the right an old and rusty giant robot lying on a dirt road, by jakub rozalski, sunset lighting on the left and center, dark sunset lighting on the right elegant, highly detailed, smooth, sharp focus, artstation, stunning masterpiece

Out of a sample of 20 Stable Diffusion generations with different seeds, the generated images that align best with the prompt are the following:

|

|

|

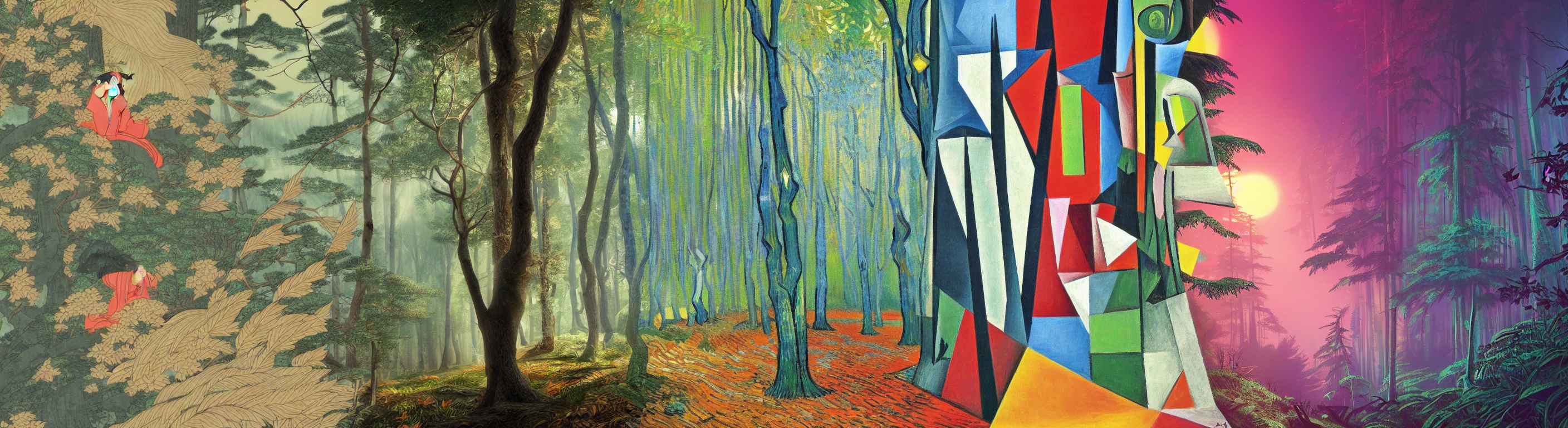

The method proposed here strives to provide a better tool for image composition by using several diffusion processes in parallel, each configured with a specific prompt and settings, and focused on a particular region of the image. For example, the following are three outputs from this method, using the following prompts from left to right:

- “A charming house in the countryside, by jakub rozalski, sunset lighting, elegant, highly detailed, smooth, sharp focus, artstation, stunning masterpiece”

- “A dirt road in the countryside crossing pastures, by jakub rozalski, sunset lighting, elegant, highly detailed, smooth, sharp focus, artstation, stunning masterpiece”

- “An old and rusty giant robot lying on a dirt road, by jakub rozalski, dark sunset lighting, elegant, highly detailed, smooth, sharp focus, artstation, stunning masterpiece”

The mixture of diffusion processes is done in a way that harmonizes the generation process, preventing “seam” effects in the generated image.

Using several diffusion processes in parallel has also practical advantages when generating very large images, as the GPU memory requirements are similar to that of generating an image of the size of a single tile.

Usage

First, specify a height and width in pixels for your canvas. Then, divide the canvas into multiple square regions. Each region is a box defined by the upper left hand corner (y0, x0) (or (row0, col0), if you prefer) and lower right hand corner (y1, x1). Regions can overlap to blend images between regions.

For example, the demo image above specifies three vertical regions which are as tall as the canvas and overlap horizontally, defined as follows: region_1: (0,0), (640, 640) region_2: (0, 384), (640, 1024) region_3: (0, 768), (640, 1408)

These regions are passed into the model like so:

y0_values = 0;0;0,

x0_values = 0;384;768,

y1_values = 640;640;640,

x1_values = 640;1024;1408

Finally, pass in a series of prompts, one for each region, again separated by semicolons. Keep in mind that canvas regions not covered by prompts generate noise, so please cover them all.

A Quick Example

The inputs below will create a 50x100 image with a dog in the region specified by the box (0,0,50,50) and a cat in the region specified by the box - (0,50,50,100).

canvas_height=50

canvas_width=100

prompts='dog;cat;'

y0_values='0;0'

x0_values='0;50'

y1_values='50;50'

x1_values='50;100'

Citation

@misc{https://doi.org/10.48550/arxiv.2302.02412,

doi = {10.48550/ARXIV.2302.02412},

url = {https://arxiv.org/abs/2302.02412},

author = {Jiménez, Álvaro Barbero},

keywords = {Computer Vision and Pattern Recognition (cs.CV), Artificial Intelligence (cs.AI), Machine Learning (cs.LG), FOS: Computer and information sciences, FOS: Computer and information sciences, I.2.6},

title = {Mixture of Diffusers for scene composition and high resolution image generation},

publisher = {arXiv},

year = {2023},

copyright = {Creative Commons Attribution 4.0 International}

}