Readme

You’ve heard of Stable Diffusion, the open-source AI model that generates images from text?

photograph of an astronaut riding a horse

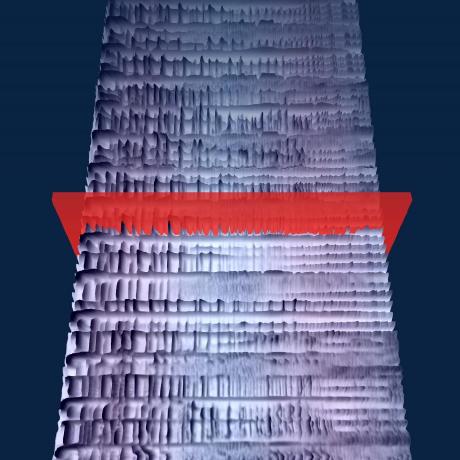

Well, we fine-tuned the model to generate images of spectrograms, like this:

funk bassline with a jazzy saxophone solo

The magic is that this spectrogram can then be converted to an audio clip. (See demo above.)

This is the Stable Diffusion v1.5 model with no architectural modifications, only fine-tuned on images of spectrograms paired with text. Audio processing happens downstream of the model.
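To give a feel for what "downstream audio processing" can look like, here is a minimal sketch of turning a magnitude spectrogram back into a waveform. It uses the Griffin-Lim algorithm, which is one common way to estimate the missing phase. This README does not describe the project's actual conversion code, so treat the helper names (`hann`, `stft`, `istft`, `griffin_lim`), the FFT size, the hop length, and the sample rate as illustrative assumptions. The sketch also skips the step of mapping image pixels to magnitudes and starts from a spectrogram computed from a test tone.

```python
import numpy as np

def hann(n_fft):
    # Periodic Hann window, the usual choice for overlap-add STFT.
    return np.hanning(n_fft + 1)[:-1]

def stft(signal, n_fft, hop, window):
    # Slice the signal into overlapping windowed frames and FFT each one.
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack(
        [signal[i * hop : i * hop + n_fft] * window for i in range(n_frames)]
    )
    return np.fft.rfft(frames, axis=1)  # shape: (n_frames, n_fft // 2 + 1)

def istft(spec, n_fft, hop, window):
    # Inverse FFT each frame, then overlap-add with window normalization.
    n_frames = spec.shape[0]
    length = n_fft + hop * (n_frames - 1)
    out = np.zeros(length)
    norm = np.zeros(length)
    frames = np.fft.irfft(spec, n=n_fft, axis=1)
    for i in range(n_frames):
        start = i * hop
        out[start : start + n_fft] += frames[i] * window
        norm[start : start + n_fft] += window ** 2
    # Floor the normalizer so barely covered edge samples are not blown up.
    return out / np.maximum(norm, 1e-3)

def griffin_lim(magnitude, n_fft=512, hop=128, n_iter=32, seed=0):
    # Start from random phase, then alternate between the time domain and
    # the STFT domain, keeping the known magnitude and the estimated phase.
    rng = np.random.default_rng(seed)
    window = hann(n_fft)
    phase = np.exp(2j * np.pi * rng.random(magnitude.shape))
    for _ in range(n_iter):
        audio = istft(magnitude * phase, n_fft, hop, window)
        rebuilt = stft(audio, n_fft, hop, window)
        phase = np.exp(1j * np.angle(rebuilt))
    return istft(magnitude * phase, n_fft, hop, window)

# Demo with assumed settings: discard the phase of a 440 Hz tone, then
# reconstruct audio from the magnitude alone.
sample_rate = 8000
n_fft, hop = 512, 128
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 440.0 * t)
magnitude = np.abs(stft(tone, n_fft, hop, hann(n_fft)))
audio = griffin_lim(magnitude, n_fft, hop)
```

The key point is that the model only has to produce the magnitude image. Phase is estimated afterwards, which is why the conversion can live entirely outside the diffusion model.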

It can generate endless variations of a prompt by varying the seed. The same web UIs and techniques that work with Stable Diffusion, such as img2img, inpainting, negative prompts, and interpolation, work out of the box.
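As a rough illustration of varying the seed, the sketch below loads the checkpoint with the Hugging Face `diffusers` library and generates one spectrogram image per seed. The function name `generate_variations`, the model id `riffusion/riffusion-model-v1`, and the default `cuda` device are assumptions made for this example and are not stated in this README. Adjust them to match where the weights actually live.

```python
def generate_variations(prompt, seeds,
                        model_id="riffusion/riffusion-model-v1",  # assumed id
                        device="cuda"):
    """Return a dict mapping each seed to one generated spectrogram image."""
    # Imported lazily so this file can be loaded without the heavy
    # dependencies installed.
    import torch
    from diffusers import StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(model_id).to(device)

    images = {}
    for seed in seeds:
        # A seeded generator makes each variation reproducible.
        generator = torch.Generator(device=device).manual_seed(seed)
        result = pipe(prompt, generator=generator)
        images[seed] = result.images[0]  # PIL image of the spectrogram
    return images

# Example call (downloads the weights on first use):
# images = generate_variations(
#     "funk bassline with a jazzy saxophone solo", seeds=[0, 1, 2]
# )
```

Reusing a seed with the same prompt and settings should give the same spectrogram again, which makes it easy to return to a variation you liked.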

Read more about it here: https://www.riffusion.com/about

Citation:

@software{Forsgren_Martiros_2022,
  author = {Forsgren, Seth* and Martiros, Hayk*},
  title = {{Riffusion - Stable diffusion for real-time music generation}},
  url = {https://riffusion.com/about},
  year = {2022}
}