Readme

DeOldify

The easiest way to colorize images: DeOldify Image Colorization on DeepAI.

The most advanced version of DeOldify image colorization is available exclusively at MyHeritage In Color. Try a few images for free!

Get more updates on Twitter.

About DeOldify

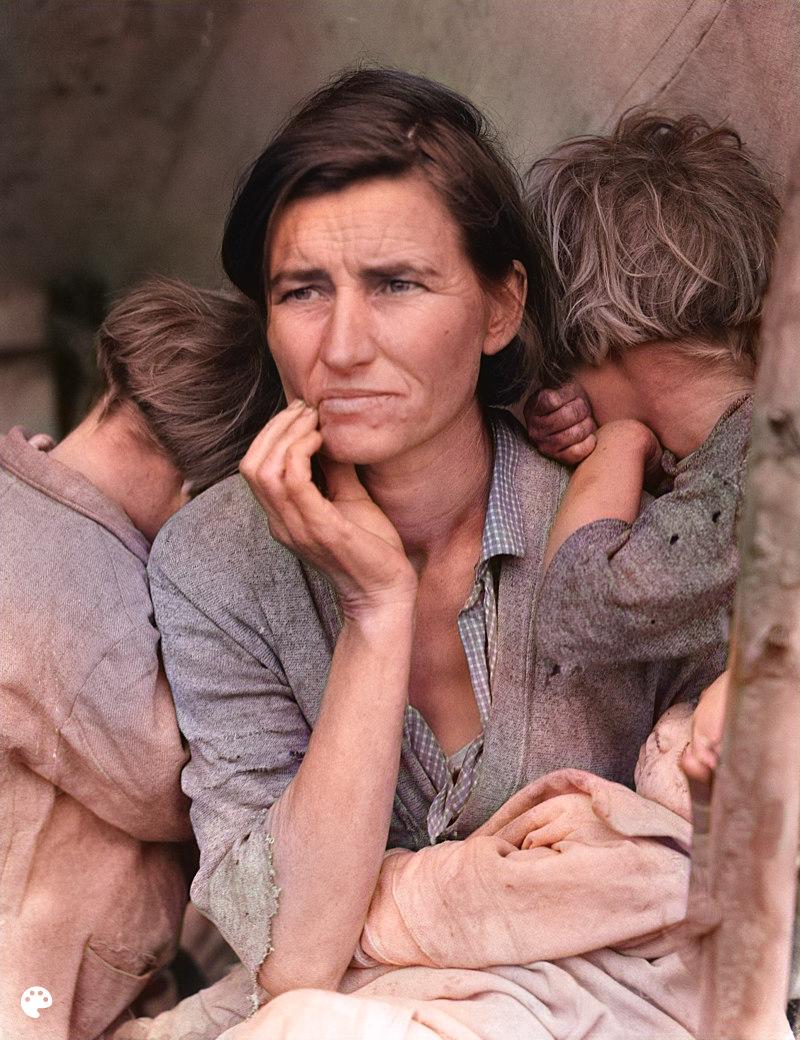

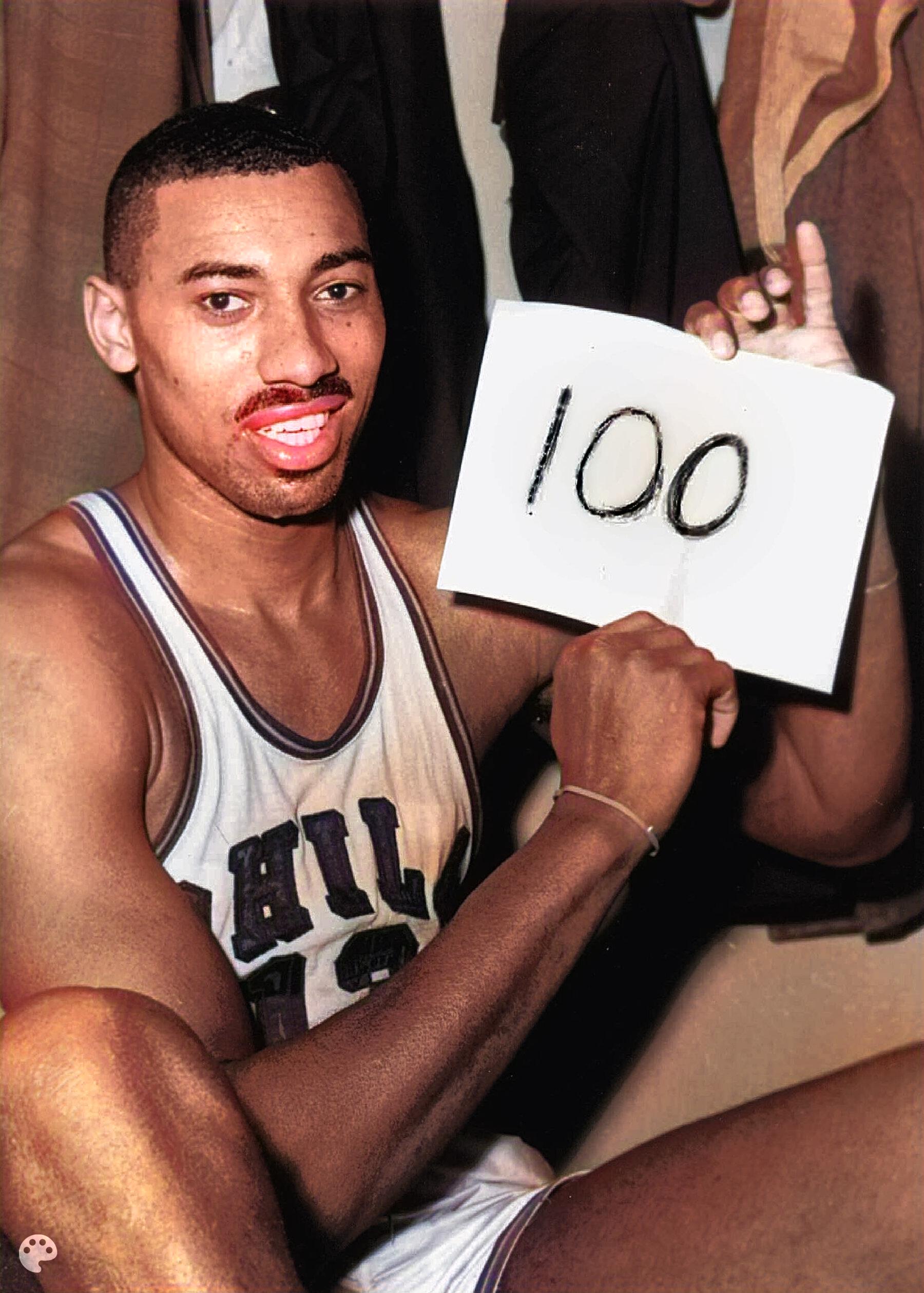

Simply put, the mission of this project is to colorize and restore old images and film footage. We’ll get into the details in a bit, but first let’s see some pretty pictures and videos!

Something to keep in mind: historical accuracy remains a huge challenge!

About the demo

The demo has two available models:

- Artistic: This model achieves the highest-quality results in image coloration, in terms of interesting details and vibrance. Its most notable drawback is that it takes some fiddling to get the best results: you have to adjust the rendering resolution (render_factor). Additionally, it does not do as well as Stable in two common scenarios: nature scenes and portraits. The model uses a resnet34 backbone on a UNet with an emphasis on depth of layers on the decoder side. It was trained with 5 critic pretrain/GAN cycle repeats via NoGAN, in addition to the initial generator/critic pretrain/GAN NoGAN training, at 192px. This adds up to a total of 32% of Imagenet data trained once (12.5 hours of direct GAN training).

- Stable: This model achieves the best results with landscapes and portraits. Notably, it produces fewer “zombies”, where faces or limbs stay gray rather than being colored in properly. It generally has fewer weird miscolorations than Artistic, but it is also less colorful in general. The model uses a resnet101 backbone on a UNet with an emphasis on width of layers on the decoder side. It was trained with 3 critic pretrain/GAN cycle repeats via NoGAN, in addition to the initial generator/critic pretrain/GAN NoGAN training, at 192px. This adds up to a total of 7% of Imagenet data trained once (3 hours of direct GAN training).
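The trade-offs between the two models can be summarized in a small Python sketch. It is an illustration only and not part of DeOldify: `ModelSpec`, `MODELS`, `pick_model`, and `render_factor_sweep` are hypothetical names, the scene labels are assumptions, and the sweep range is arbitrary. The sketch records the facts stated above for each model, picks Stable for the scenes where it is said to do better, and lists candidate render_factor values to try when tuning Artistic.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelSpec:
    """Facts about one demo model, copied from the descriptions above."""
    name: str
    backbone: str
    decoder_emphasis: str      # "depth" or "width" of decoder layers
    nogan_cycle_repeats: int   # critic pretrain/GAN cycle repeats
    imagenet_fraction: float   # share of Imagenet data trained once
    gan_hours: float           # hours of direct GAN training


MODELS = {
    "artistic": ModelSpec("artistic", "resnet34", "depth", 5, 0.32, 12.5),
    "stable": ModelSpec("stable", "resnet101", "width", 3, 0.07, 3.0),
}

# Hypothetical scene labels: the README says Stable does better on these.
STABLE_SCENES = {"portrait", "landscape", "nature"}


def pick_model(scene: str) -> ModelSpec:
    """Return Stable for portraits and nature/landscape scenes, else Artistic."""
    key = "stable" if scene.lower() in STABLE_SCENES else "artistic"
    return MODELS[key]


def render_factor_sweep(low: int, high: int, step: int) -> List[int]:
    """Candidate render_factor values to try, inclusive of both ends."""
    if step <= 0 or low > high:
        raise ValueError("need step > 0 and low <= high")
    return list(range(low, high + 1, step))


if __name__ == "__main__":
    print(pick_model("portrait").name)
    print(render_factor_sweep(10, 40, 10))
```

Each value from the sweep would be passed to whatever colorization call you use as its render_factor setting, and you would keep the result that looks best.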

License

All code in this repository is under the MIT license as specified by the LICENSE file.