Extract text from documents and images with Datalab Marker and OCR

Datalab’s state-of-the-art document parsing and text extraction models are now on Replicate.

Marker turns PDF, DOCX, PPTX, images (and more!) into markdown or JSON. It formats tables, math, and code, extracts images, and can pull specific fields when you pass a JSON Schema.

OCR detects text in ninety languages from images and documents, and returns reading order and table grids.

The Marker model is based on the popular open-source Marker project (29k GitHub stars), and OCR is based on Surya (19k GitHub stars).

Run Marker and OCR on Replicate:

Run Marker

```python
import replicate

output = replicate.run(
    "datalab-to/marker",
    input={
        "file": open("report.pdf", "rb"),
        "mode": "balanced",  # fast / balanced / accurate
        "include_metadata": True,  # return page-level JSON metadata
    },
)

print(output["markdown"][:400])
```

Run OCR

import replicate

output = replicate.run(

"datalab-to/ocr",

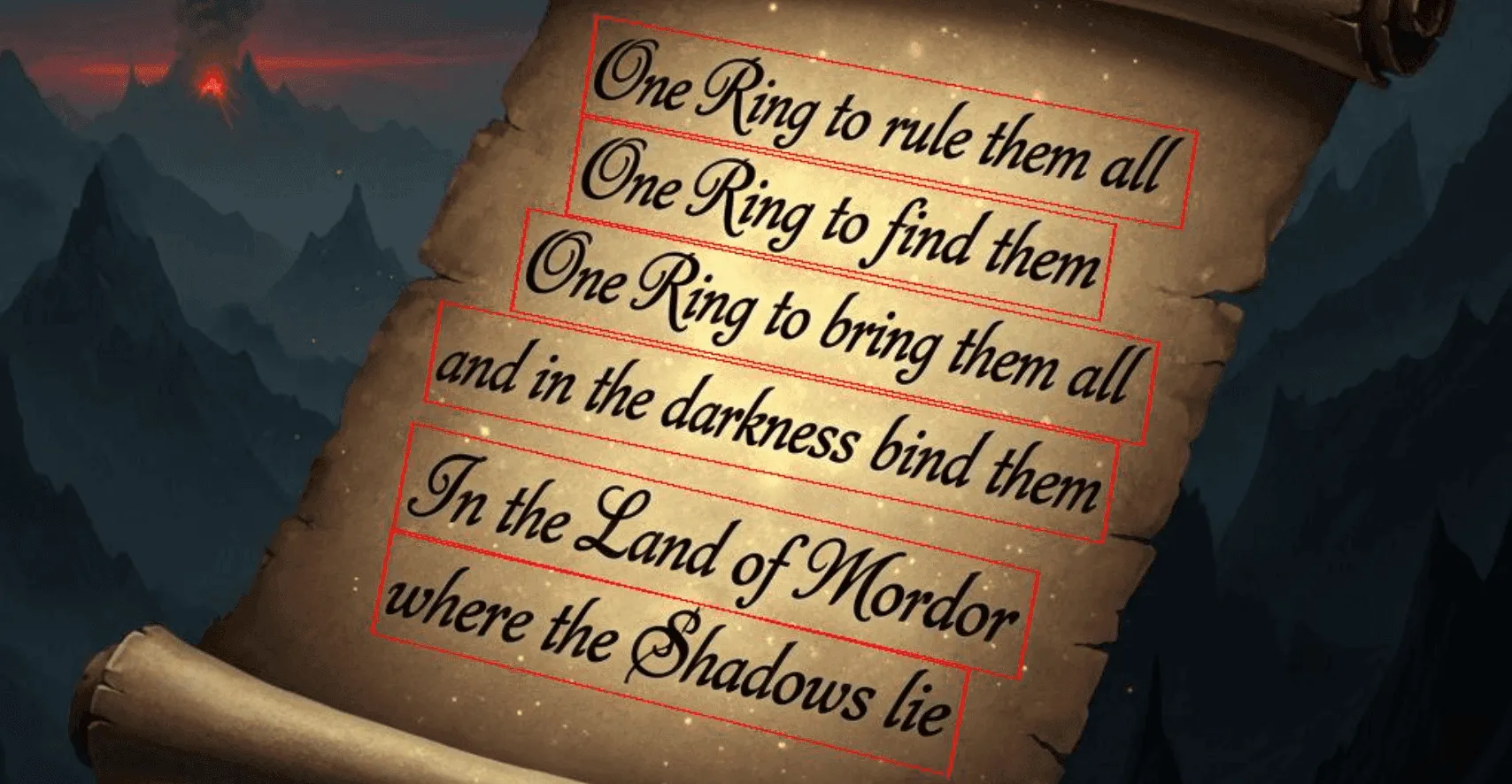

input={

"file": open("receipt.jpg", "rb"),

"visualize": True, # return the input image with red polygons around detected text

"return_pages": True, # return layout data

},

)

print(output["text"][:200])Visit the models on Replicate for code snippets in other languages.
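The single-file Marker call shown earlier can be wrapped in a loop to convert a whole folder. This is a minimal sketch. `convert_folder` is a hypothetical helper, not part of the Replicate client. It reuses only what the Marker example shows: the `replicate.run` call, the `mode` values, and the `markdown` output key. It also accepts an optional `run` callable so it can be tried offline with a stub. Each file is sent as a separate request, so this loop is not the same thing as batched processing.

```python
from pathlib import Path
from typing import Callable, List, Optional

# Mode names taken from the Marker example; anything else is rejected early.
MODES = {"fast", "balanced", "accurate"}


def convert_folder(
    paths: List[Path],
    out_dir: Path,
    mode: str = "balanced",
    run: Optional[Callable] = None,
) -> List[Path]:
    """Hypothetical helper: run Marker on each file, save <stem>.md in out_dir."""
    if mode not in MODES:
        raise ValueError(f"unknown mode {mode!r}; expected one of {sorted(MODES)}")
    if run is None:
        # Imported lazily so the helper can be exercised offline with a stub runner.
        import replicate

        run = replicate.run
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for path in paths:
        # One request per file; the file handle is closed after each call.
        with open(path, "rb") as f:
            output = run("datalab-to/marker", input={"file": f, "mode": mode})
        target = out_dir / (Path(path).stem + ".md")
        target.write_text(output["markdown"], encoding="utf-8")
        written.append(target)
    return written
```

For example, `convert_folder(sorted(Path("docs").glob("*.pdf")), Path("markdown"))` would write one `.md` file per PDF into a `markdown` folder.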

These models are both fast and accurate: they outperform established tools like Tesseract while keeping processing times short. Marker processes a page in about 0.18 seconds and can reach 120 pages per second when batched.
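To put the two speed figures in perspective, here is a back-of-the-envelope estimate. It assumes the quoted 0.18 s per page and 120 pages per second hold uniformly across a job, and it ignores upload time and queueing. `estimated_seconds` is an illustrative helper, not a Replicate or Datalab API.

```python
# Figures quoted in the post; treated as constants for a rough estimate only.
SECONDS_PER_PAGE = 0.18  # single page, unbatched
BATCHED_PAGES_PER_SECOND = 120  # peak throughput when batched


def estimated_seconds(pages: int, batched: bool = False) -> float:
    """Rough processing time in seconds for `pages` pages with Marker."""
    if pages < 0:
        raise ValueError("pages must be non-negative")
    if batched:
        return pages / BATCHED_PAGES_PER_SECOND
    return pages * SECONDS_PER_PAGE


if __name__ == "__main__":
    for batched in (False, True):
        secs = estimated_seconds(10_000, batched=batched)
        label = "batched" if batched else "one page at a time"
        print(f"10,000 pages, {label}: ~{secs / 60:.1f} min")
```

On these numbers, 10,000 pages take about 1,800 seconds (30 minutes) one page at a time, versus roughly 83 seconds batched. That is a speedup of about 21x (120 × 0.18).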

Structured extraction

One particularly powerful feature of Marker is structured extraction. For example, you can extract specific fields from an invoice:

```python
import json

import replicate

schema = {
    "type": "object",
    "properties": {
        "vendor": {"type": "string"},
        "invoice_number": {"type": "string"},
        "date": {"type": "string"},
        "total": {"type": "number"},
    },
}

output = replicate.run(
    "datalab-to/marker",
    input={
        "file": "https://multimedia-example-files.replicate.dev/replicator-invoice.1page.pdf",
        "page_schema": json.dumps(schema),
    },
)

structured_data = json.loads(output["extraction_schema_json"])
print(structured_data)
```

Performance

Marker was evaluated on olmOCR-Bench, a dataset of 1,403 PDF files with 7,010 unit test cases that measure how accurately OCR systems convert PDF documents to markdown while preserving critical textual and structural information.

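In balanced mode, Marker has the highest overall score of all models tested, including GPT-4o, Deepseek OCR, Mistral OCR, and olmOCR, although other models lead in individual categories such as headers and footers.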

| Model | ArXiv | Old Scans Math | Tables | Old Scans | Headers and Footers | Multi column | Long tiny text | Base | Overall |
|---|---|---|---|---|---|---|---|---|---|
| Datalab Marker (Balanced mode) | 81.4 | 80.3 | 89.4 | 50.0 | 88.3 | 81.0 | 91.6 | 99.9 | 82.7 ± 0.9 |
| Datalab Marker (Fast mode) | 83.8 | 69.7 | 74.8 | 32.3 | 86.6 | 79.4 | 85.7 | 99.6 | 76.5 ± 1.0 |
| Mistral OCR API | 77.2 | 67.5 | 60.6 | 29.3 | 93.6 | 71.3 | 77.1 | 99.4 | 72.0 ± 1.1 |
| Deepseek OCR | 75.2 | 67.9 | 79.1 | 32.9 | 96.1 | 66.3 | 78.5 | 97.7 | 74.2 ± 1.0 |
| Nanonets OCR | 67.0 | 68.6 | 77.7 | 39.5 | 40.7 | 69.9 | 53.4 | 99.3 | 64.5 ± 1.1 |
| GPT-4o (Anchored) | 53.5 | 74.5 | 70.0 | 40.7 | 93.8 | 69.3 | 60.6 | 96.8 | 69.9 ± 1.1 |
| Gemini Flash 2 (Anchored) | 54.5 | 56.1 | 72.1 | 34.2 | 64.7 | 61.5 | 71.5 | 95.6 | 63.8 ± 1.2 |
| Qwen 2.5 VL (No Anchor) | 63.1 | 65.7 | 67.3 | 38.6 | 73.6 | 68.3 | 49.1 | 98.3 | 65.5 ± 1.2 |
| olmOCR v0.3.0 | 78.6 | 79.9 | 72.9 | 43.9 | 95.1 | 77.3 | 81.2 | 98.9 | 78.5 ± 1.1 |
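A quick way to sanity-check the table: in every row, the Overall column matches the unweighted mean of the eight category scores to within rounding. The sketch below recomputes that mean with Python's standard `statistics` module and ranks models by it. The scores are copied from the table. Reading Overall as a simple mean is an observation from these numbers, not a documented definition of the benchmark, and the ± intervals are not reproduced.

```python
from statistics import mean

# Category scores copied from the table, in column order:
# ArXiv, Old Scans Math, Tables, Old Scans, Headers and Footers,
# Multi column, Long tiny text, Base. The second item is the reported Overall.
RESULTS = {
    "Datalab Marker (Balanced mode)": ([81.4, 80.3, 89.4, 50.0, 88.3, 81.0, 91.6, 99.9], 82.7),
    "Datalab Marker (Fast mode)": ([83.8, 69.7, 74.8, 32.3, 86.6, 79.4, 85.7, 99.6], 76.5),
    "Mistral OCR API": ([77.2, 67.5, 60.6, 29.3, 93.6, 71.3, 77.1, 99.4], 72.0),
    "Deepseek OCR": ([75.2, 67.9, 79.1, 32.9, 96.1, 66.3, 78.5, 97.7], 74.2),
    "Nanonets OCR": ([67.0, 68.6, 77.7, 39.5, 40.7, 69.9, 53.4, 99.3], 64.5),
    "GPT-4o (Anchored)": ([53.5, 74.5, 70.0, 40.7, 93.8, 69.3, 60.6, 96.8], 69.9),
    "Gemini Flash 2 (Anchored)": ([54.5, 56.1, 72.1, 34.2, 64.7, 61.5, 71.5, 95.6], 63.8),
    "Qwen 2.5 VL (No Anchor)": ([63.1, 65.7, 67.3, 38.6, 73.6, 68.3, 49.1, 98.3], 65.5),
    "olmOCR v0.3.0": ([78.6, 79.9, 72.9, 43.9, 95.1, 77.3, 81.2, 98.9], 78.5),
}


def recomputed_overall(name: str) -> float:
    """Unweighted mean of the eight category scores for one model."""
    categories, _reported = RESULTS[name]
    return mean(categories)


def ranking() -> list:
    """Model names sorted by recomputed overall score, best first."""
    return sorted(RESULTS, key=recomputed_overall, reverse=True)


if __name__ == "__main__":
    for name in ranking():
        _, reported = RESULTS[name]
        print(f"{name:32} {recomputed_overall(name):6.2f}  (reported {reported})")
```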

Pricing

Marker costs:

- $4 per 1000 pages without `page_schema` in fast and balanced modes.
- $6 per 1000 pages when doing structured extraction with `page_schema`.
- $6 per 1000 pages in `accurate` mode.

OCR costs $2 per 1000 pages.
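The price list translates into a simple per-page estimate. This is a minimal sketch: `marker_cost` and `ocr_cost` are illustrative helpers. The estimate is linear, ignoring any rounding or minimum charges. It also assumes that accurate mode combined with `page_schema` is billed at the single $6 rate, a case the list does not spell out.

```python
# Per-1000-page prices (USD) as listed in the Pricing section.
MARKER_BASE_PER_1000 = 4.0  # fast or balanced mode, no page_schema
MARKER_PREMIUM_PER_1000 = 6.0  # page_schema extraction, or accurate mode
OCR_PER_1000 = 2.0


def marker_cost(pages: int, mode: str = "balanced", structured: bool = False) -> float:
    """Estimated Marker cost in USD for `pages` pages."""
    if mode not in {"fast", "balanced", "accurate"}:
        raise ValueError(f"unknown mode {mode!r}")
    # Assumption: accurate mode with page_schema is still billed at $6 per 1000,
    # not a combined rate; the price list does not cover this case explicitly.
    premium = structured or mode == "accurate"
    rate = MARKER_PREMIUM_PER_1000 if premium else MARKER_BASE_PER_1000
    return pages / 1000 * rate


def ocr_cost(pages: int) -> float:
    """Estimated OCR cost in USD for `pages` pages."""
    return pages / 1000 * OCR_PER_1000
```

For 2,500 pages, this gives $10 for Marker in balanced mode, $15 with `page_schema`, and $5 for OCR alone.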