-

How to prompt Seedream 5.0

Seedream 5.0 brings multi-step reasoning, example-based editing, and deep domain knowledge to image generation. Here's what you should know.

February 24, 2026 -

Recraft V4: image generation with design taste

Recraft V4 generates art-directed images — and actual editable SVGs — with strong composition, accurate text rendering, and what the Recraft team calls "design taste." Four models are available on Replicate now.

February 18, 2026 -

Run Isaac 0.1 on Replicate

Isaac 0.1 is a lightweight, grounded vision-language model built for real-world perception.

November 26, 2025 -

Run FLUX.2 on Replicate

FLUX.2 brings professional-grade image generation and editing with unprecedented detail, multi-reference support, and enterprise efficiency.

November 25, 2025 -

How to prompt Nano Banana Pro

Nano Banana Pro brings powerful new capabilities in image generation and editing. Here are the main prompt tricks you should know.

November 20, 2025 -

Retro Diffusion's pixel art models are now on Replicate

Generate game assets, sprites, tiles, and pixel art with Retro Diffusion's suite of carefully crafted models.

November 19, 2025 -

Replicate is joining Cloudflare

November 17, 2025 -

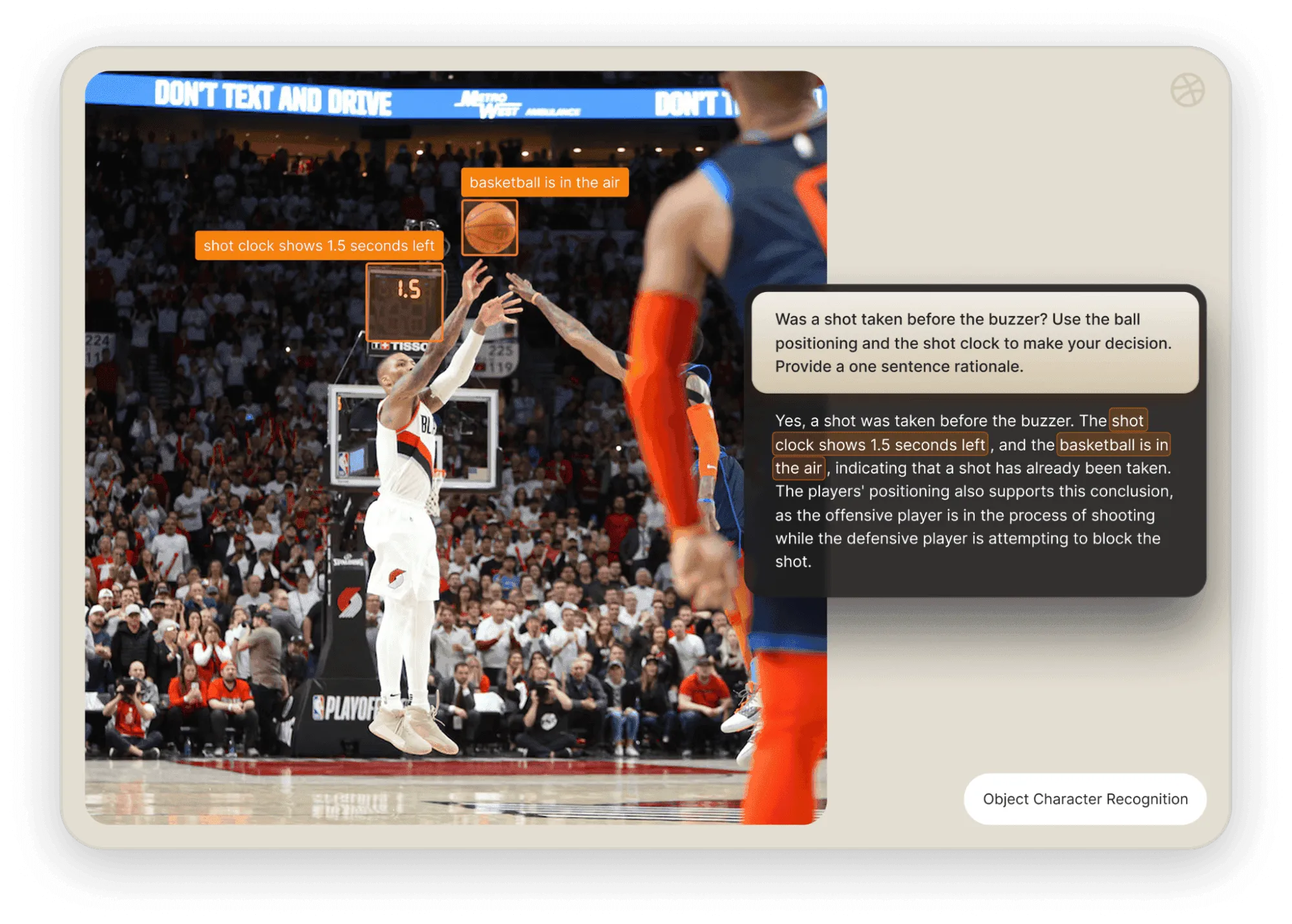

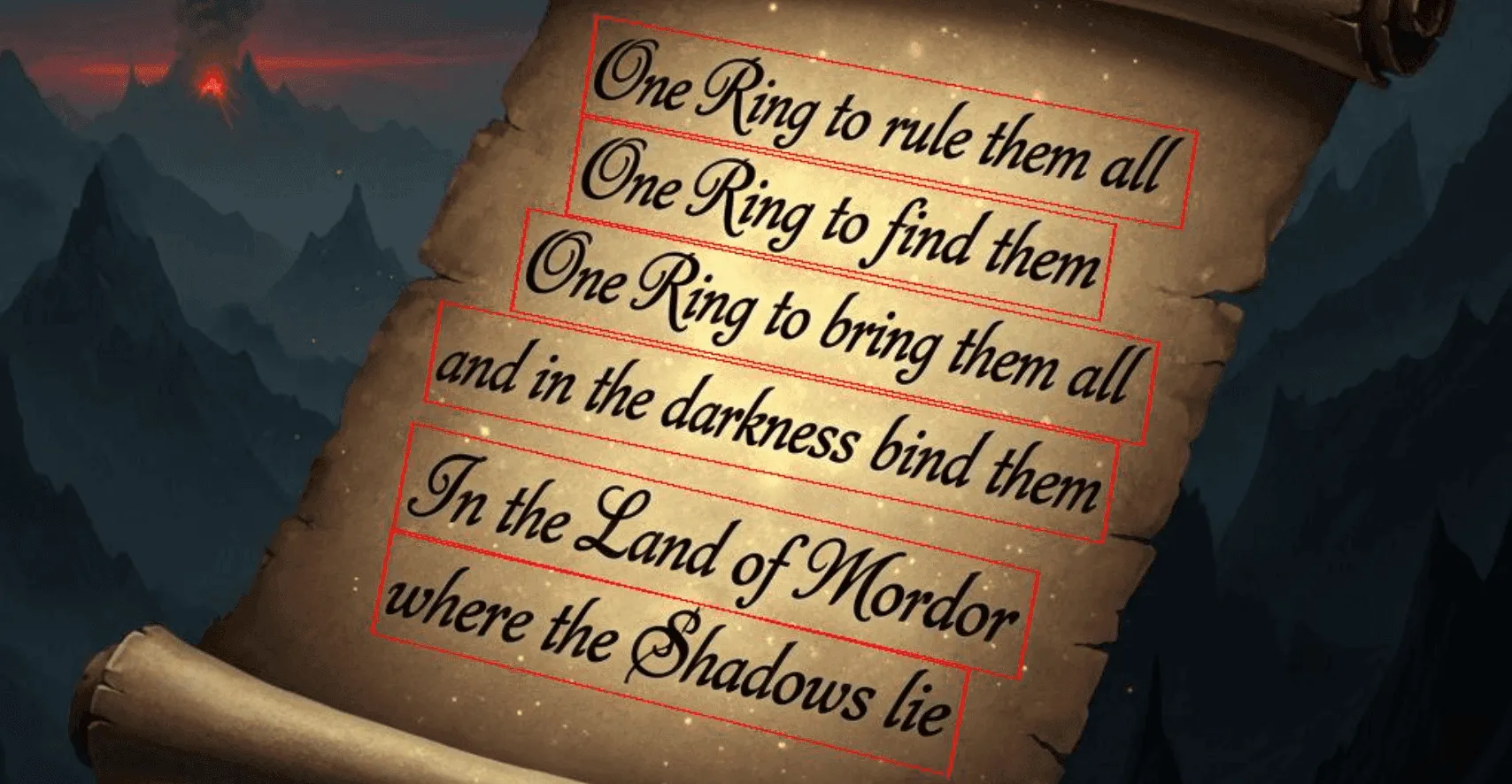

Extract text from documents and images with Datalab Marker and OCR

Turn whole documents into markdown or grab line-level polygons with two new models from Datalab.

October 21, 2025 -

How to prompt Veo 3.1

Google's Veo 3.1 brings powerful new video generation capabilities including reference images, first/last frame control, and enhanced image-to-video. Here's everything you need to know.

October 16, 2025 -

IBM's Granite 4.0 is now on Replicate

October 2, 2025 -

Which image editing model should I use?

Here is the ultimate comparison post on all the latest image editing models.

September 23, 2025 -

Introducing our new search API

Find the best models and collections with a single API call.

September 16, 2025 -

Torch compile caching for inference speed

Cache your compiled models for faster boot and inference times

September 8, 2025 -

Announcing Replicate's remote MCP server

Use our MCP to discover, compare, and run models from apps like Claude, Cursor, and VS Code.

August 10, 2025 -

How to prompt Veo 3 with images

You'll be surprised what you can do with AI video now.

August 1, 2025 -

Open source video is back

Wan 2.2 is our fastest, cheapest video model.

July 31, 2025 -

Generate consistent characters

We compare the best image models for generating consistent characters from a single reference image.

July 21, 2025 -

Bria is now on Replicate

We've partnered with Bria to bring a suite of commercial-grade image generation and editing models to Replicate. Built entirely on licensed data, Bria’s tools are designed for enterprises and developers building safely with visual AI.

July 17, 2025 -

![How we optimized FLUX.1 Kontext [dev]](/_content/assets/cover.F5B0oqug_Z1wYLhI.webp)

How we optimized FLUX.1 Kontext [dev]

A deep dive into the Taylor Seer optimization technique

July 15, 2025 -

Compare AI video models

It's hard keeping up with every new video model. In this post we'll help you pick the best one for your needs.

July 7, 2025 -

The FLUX.1 Kontext hackathon

We hosted a hackathon with BFL for FLUX.1 Kontext. Here are the winners.

July 1, 2025 -

How to prompt Veo 3 for the best results

Learn expert prompting techniques to create stunning videos with Google's Veo 3.

June 10, 2025 -

Get the most from Google Veo 3

We're sharing our experiments and tips on Google's new Veo 3 model.

June 5, 2025 -

FLUX.1 Kontext from the community

FLUX.1 Kontext is everywhere - see what folks are cooking.

June 2, 2025 -

Use FLUX.1 Kontext to edit images with words

This is how to get the most from Black Forest Labs' new image editing model.

May 29, 2025 -

Generate incredible images with Google's Imagen 4

Google's flagship image generation model, Imagen 4, is now available for you to try on Replicate. Create images with fine detail, versatile styles, and improved typography.

May 22, 2025 -

Run OpenAI’s latest models on Replicate

OpenAI's latest models are now available on Replicate, including GPT-4.1, GPT-4o, and the o-series.

May 22, 2025 -

NVIDIA H100 GPUs are here

NVIDIA H100 GPUs are here, with better performance and lower cost.

May 16, 2025 -

Run 30,000+ LoRAs on Hugging Face with Replicate

We've partnered with Hugging Face to bring Replicate inference to their platform.

May 15, 2025 -

Ideogram 3.0 on Replicate

Ideogram 3.0 is packed with powerful design, style transfer, and realism capabilities.

May 7, 2025 -

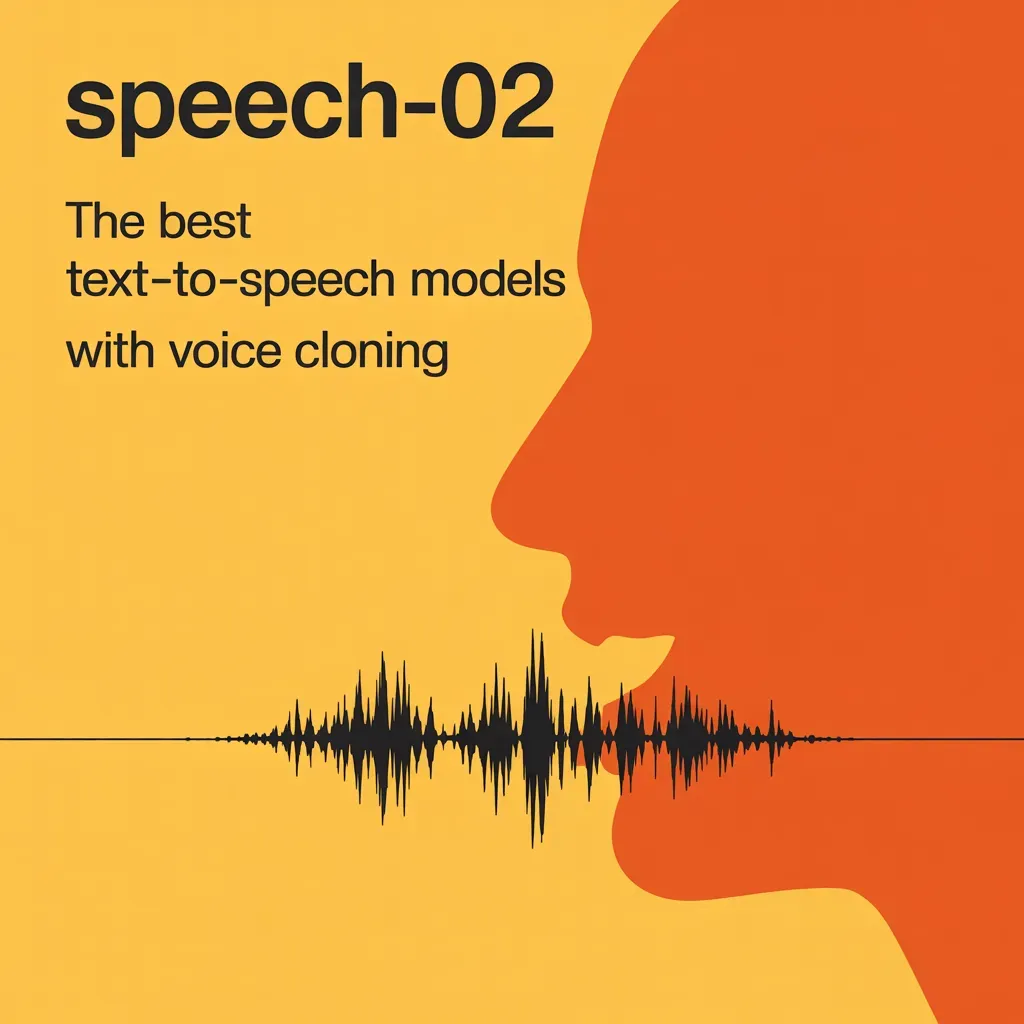

Run MiniMax Speech-02 models with an API

MiniMax's Speech-02 models give you high-quality text-to-speech with voice cloning, emotional expression, and multilingual support.

May 6, 2025 -

Easel AI is now on Replicate

Advanced face swap and AI avatars from Easel AI are now on Replicate.

April 16, 2025 -

Stylized video with Wan2.1

One of the most fun ways to use Wan2.1 is video style transfer. Learn how here.

April 1, 2025 -

Creative roundup: avatars, lightsabers, and LoRA tricks

We take a quick look at the latest creative models, experiments, and community projects.

March 28, 2025 -

Wan2.1: generate videos with an API

Wan2.1 is the most capable open-source video generation model, producing coherent and high-quality outputs. Learn how to run it in the cloud with a single line of code.

March 5, 2025 -

Wan2.1 parameter sweep

We've been playing with Alibaba's Wan2.1 text-to-video model lately. What happens when you tweak those mysterious parameters? Let's find out.

March 5, 2025 -

You can now fine-tune open-source video models

Train your own versions of Tencent's HunyuanVideo for style, motion, and characters on Replicate.

January 24, 2025 -

Generate short videos with the Replicate playground

Create AI videos with a convenient workflow.

January 17, 2025 -

AI video is having its Stable Diffusion moment

There are lots of models that are as good as OpenAI's Sora now.

December 16, 2024 -

FLUX fine-tunes are now fast

We've made running fine-tunes on Replicate much faster, and the optimizations are open-source.

November 26, 2024 -

FLUX.1 Tools – Control and steerability for FLUX

A new set of image generation capabilities for FLUX models, including inpainting, outpainting, canny edge detection, and depth maps.

November 21, 2024 -

NVIDIA L40S GPUs are here

NVIDIA L40S GPUs are here, with better performance and lower cost.

November 15, 2024 -

Ideogram v2 is an outstanding new inpainting model

We've partnered with Ideogram to bring their inpainting model to Replicate's API.

October 22, 2024 -

Stable Diffusion 3.5 is here

Stability AI's latest text-to-image model is now available on Replicate and you can run it with an API.

October 22, 2024 -

FLUX is fast and it's open source

FLUX is now much faster on Replicate, and we’ve made our optimizations open-source so you can see exactly how they work and build upon them.

October 10, 2024 -

![FLUX1.1 [pro] is here](/_content/assets/cover.KlUZSq-3_Z1hn3EN.webp)

FLUX1.1 [pro] is here

Black Forest Labs continue to push boundaries with the latest release of their FLUX.1 image generation model.

October 3, 2024 -

Using synthetic training data to improve Flux finetunes

It's easy to fine-tune Flux, but sometimes you need to do a little more work to get the best results. This post covers techniques you can use to improve your fine-tuned Flux models.

September 20, 2024 -

Fine-tune FLUX.1 with an API

Create and run your own fine-tuned Flux models programmatically using Replicate's HTTP API.

September 9, 2024 -

Fine-tune FLUX.1 to create images of yourself

Create your own fine-tuned Flux model to generate new images of yourself.

August 30, 2024 -

Replicate Intelligence #12

Flux LoRAs, Hot Zuck, and Replicate on Lex Fridman

August 23, 2024 -

Replicate Intelligence #11

Fine-tune FLUX.1, generative video games, a vision for the metaverse

August 16, 2024 -

Fine-tune FLUX.1 with your own images

We've added fine-tuning (LoRA) support to FLUX.1 image generation models. You can train FLUX.1 on your own images with one line of code using Replicate's API.

August 15, 2024 -

Replicate Intelligence #10

Flux developments, Minecraft bot, Streamlit cookbook with Zeke

August 9, 2024 -

FLUX.1: First Impressions

We explore FLUX.1's unique strengths and aesthetics to see what we can generate.

August 2, 2024 -

Replicate Intelligence #9

Open source frontier image model, cut objects from videos, new Python web framework from Jeremy Howard

August 2, 2024 -

Run FLUX with an API

FLUX.1 is a new text-to-image model from Black Forest Labs, the creators of Stable Diffusion. It exceeds the capabilities of previous open-source models.

August 1, 2024 -

Replicate Intelligence #8

A top-tier open-ish language model, new safety classifiers, model search API

July 26, 2024 -

Run Meta Llama 3.1 405B with an API

Llama 3.1 405B is the most powerful open-source language model from Meta. Learn how to run it in the cloud with one line of code.

July 23, 2024 -

Replicate Intelligence #7

Data curation, data generation, data data data

July 12, 2024 -

Replicate Intelligence #6

Google's Gemma 2 models, language model leaderboard, tips for Stable Diffusion 3

June 28, 2024 -

Replicate Intelligence #5

Really good coding model, AI search breakthroughs, Discord support bot

June 21, 2024 -

How to get the best results from Stable Diffusion 3

We show you how to use Stable Diffusion 3 to get the best images, including new techniques for prompting.

June 18, 2024 -

Run Stable Diffusion 3 on your Apple Silicon Mac

A step-by-step guide to generating images with Stable Diffusion 3 on your M-series Mac using MPS acceleration.

June 18, 2024 -

Push a custom version of Stable Diffusion 3

Create your own custom version of Stability's latest image generation model and run it on Replicate via the web or API.

June 14, 2024 -

Replicate Intelligence #4

Find concepts in GPT models, real-time speech to text in the browser, H100s are coming

June 14, 2024 -

Run Stable Diffusion 3 on your own machine with ComfyUI

Copy and paste a few commands into your terminal to play with Stable Diffusion 3 on your own GPU-powered machine.

June 14, 2024 -

H100s are coming to Replicate

We'll soon support NVIDIA's H100 GPUs for predictions and training. Let us know if you want early access.

June 12, 2024 -

Run Stable Diffusion 3 with an API

Stable Diffusion 3 is the latest text-to-image model from Stability, with improved image quality, typography, prompt understanding, and resource efficiency. Learn how to run it in the cloud with one line of code.

June 12, 2024 -

Replicate Intelligence #3

Garden State Llama, applied LLMs guide, real-time image generation

June 7, 2024 -

Replicate Intelligence #2

Faster image generation, AI-powered world simulator, insights on AI dataset complexity

May 31, 2024 -

Replicate Intelligence #1

DIY Llama 3 implementation, open-source smart glasses, steering language models with dictionary learning

May 24, 2024 -

Shared network vulnerability disclosure

May 23, 2024 -

Run Snowflake Arctic with an API

Arctic is a new open-source language model from Snowflake. Learn how to run it in the cloud with one line of code.

April 23, 2024 -

Run Meta Llama 3 with an API

Llama 3 is the latest language model from Meta. Learn how to run it in the cloud with one line of code.

April 18, 2024 -

Run Code Llama 70B with an API

Code Llama 70B is one of the most powerful open-source code generation models. Learn how to run it in the cloud with one line of code.

January 30, 2024 -

How to create an AI narrator for your life

Or, how I met a virtual David Attenborough.

December 6, 2023 -

Clone your voice using open-source models

We’ve added fine-tuning for realistic voice cloning with RVC (Retrieval-based Voice Conversion). You can train RVC on your own dataset from a YouTube video with a few lines of code using Replicate's API.

December 6, 2023 -

Businesses are building on open-source AI

We've raised a $40 million Series B led by a16z.

December 5, 2023 -

How to run Yi chat models with an API

The Yi series models are large language models trained from scratch by developers at 01.AI. Learn how to run them in the cloud with one line of code.

November 23, 2023 -

Scaffold Replicate apps with one command

We've added a CLI command that makes it easy to get started with Replicate.

November 22, 2023 -

Using open-source models for faster and cheaper text embeddings

An interactive example showing how to embed text using a state-of-the-art embedding model that beats OpenAI's embeddings API on price and performance.

November 10, 2023 -

Generate music from chord progressions and text prompts with MusicGen-Chord

We’ve added chord conditioning to Meta’s MusicGen model, so you can create automatic backing tracks in any style using text prompts and chord progressions.

November 8, 2023 -

Generate images in one second on your Mac using a latent consistency model

How to run a latent consistency model on your M1 or M2 Mac

October 25, 2023 -

How to use retrieval augmented generation with ChromaDB and Mistral

In this post we'll explore the basics of retrieval augmented generation by creating an example app that uses bge-large-en for embeddings, ChromaDB as the vector store, and mistral-7b-instruct for language model generation.

October 17, 2023 -

Fine-tune MusicGen to generate music in any style

We’ve added fine-tuning support to MusicGen. You can train the small, medium and melody models on your own audio files using Replicate.

October 13, 2023 -

Jet-setting with Llama 2 + Grammars

How to use Llama 2 models with grammars for information extraction tasks.

October 9, 2023 -

How to run Mistral 7B with an API

Mistral 7B is an open-source large language model. Learn what it's good at and how to run it in the cloud with one line of code.

October 6, 2023 -

Make smooth AI generated videos with AnimateDiff and an interpolator

Combine AnimateDiff and the ST-MFNet frame interpolator to create smooth and realistic videos from a text prompt

October 4, 2023 -

Fine-tuned models now boot in less than one second

We've made some dramatic improvements to cold boots for fine-tuned models.

September 6, 2023 -

Painting with words: a history of text-to-image AI

With the recent release of Stable Diffusion XL fine-tuning on Replicate, and today being the 1-year anniversary of Stable Diffusion, now feels like the perfect opportunity to take a step back and reflect on how text-to-image AI has improved over the last few years.

August 22, 2023 -

We're cutting our prices in half

The price of public models is being cut in half, and soon we'll start charging new users for setup and idle time on private models.

August 16, 2023 -

A guide to prompting Llama 2

Learn the art of the Llama prompt.

August 14, 2023 -

Streaming output for language models

Our API now supports server-sent event streams for language models. Learn how to use them to make your apps more responsive.

August 14, 2023 -

Fine-tune SDXL with your own images

We’ve added fine-tuning (DreamBooth, Textual Inversion and LoRA) support to SDXL 1.0. You can train SDXL on your own images with one line of code using the Replicate API.

August 8, 2023 -

Run Llama 2 with an API

Llama 2 is the first open-source language model of the same caliber as OpenAI’s models. Learn how to run it in the cloud with one line of code.

July 27, 2023 -

Run SDXL with an API

How to run Stable Diffusion XL 1.0 using the Replicate API

July 26, 2023 -

A comprehensive guide to running Llama 2 locally

How to run Llama 2 on Mac, Linux, Windows, and your phone.

July 22, 2023 -

Fine-tune Llama 2 on Replicate

So you want to train a llama...

July 20, 2023 -

What happened with Llama 2 in the last 24 hours? 🦙

A roundup of recent developments from the llamaverse following the second major release of Meta's open-source large language model.

July 19, 2023 -

Make any large language model a better poet

Prompt engineering and training are often the first solutions we reach for to improve language model behavior, but they're not the only way.

May 26, 2023 -

Status page

We've added a status page to provide real-time updates on the health of Replicate.

May 18, 2023 -

Language model roundup, April 2023

A roundup of recent developments from the world of open-source language models.

April 21, 2023 -

AutoCog — Generate Cog configuration with GPT-4

Give it a machine learning directory and AutoCog will keep generating predict.py and cog.yaml until it successfully runs a prediction.

April 19, 2023 -

Language models are on Replicate

April 5, 2023 -

How to use Alpaca-LoRA to fine-tune a model like ChatGPT

March 23, 2023 -

Week 3 of LLaMA 🦙

A roundup of recent developments from the llamaverse.

March 18, 2023 -

Fine-tune LLaMA to speak like Homer Simpson

With a small amount of data and an hour of training you can make LLaMA output text in the voice of the dataset.

March 17, 2023 -

Train and run Stanford Alpaca on your own machine

We'll show you how to train Alpaca, a fine-tuned version of LLaMA that can respond to instructions like ChatGPT.

March 16, 2023 -

Machine learning needs better tools

Lots of people want to build things with machine learning, but they don't have the expertise to use it.

February 21, 2023 -

Introducing LoRA: A faster way to fine-tune Stable Diffusion

It's like DreamBooth, but much faster. And you can run it in the cloud on Replicate.

February 7, 2023 -

Train and deploy a DreamBooth model on Replicate

With just a handful of images and a single API call, you can train a model, publish it to Replicate, and run predictions on it in the cloud.

November 21, 2022 -

Run Stable Diffusion on your M1 Mac’s GPU

How to run Stable Diffusion locally so you can hack on it

August 31, 2022 -

Run Stable Diffusion with an API

How to use Replicate to integrate Stable Diffusion into hacks, apps, and projects

August 29, 2022 -

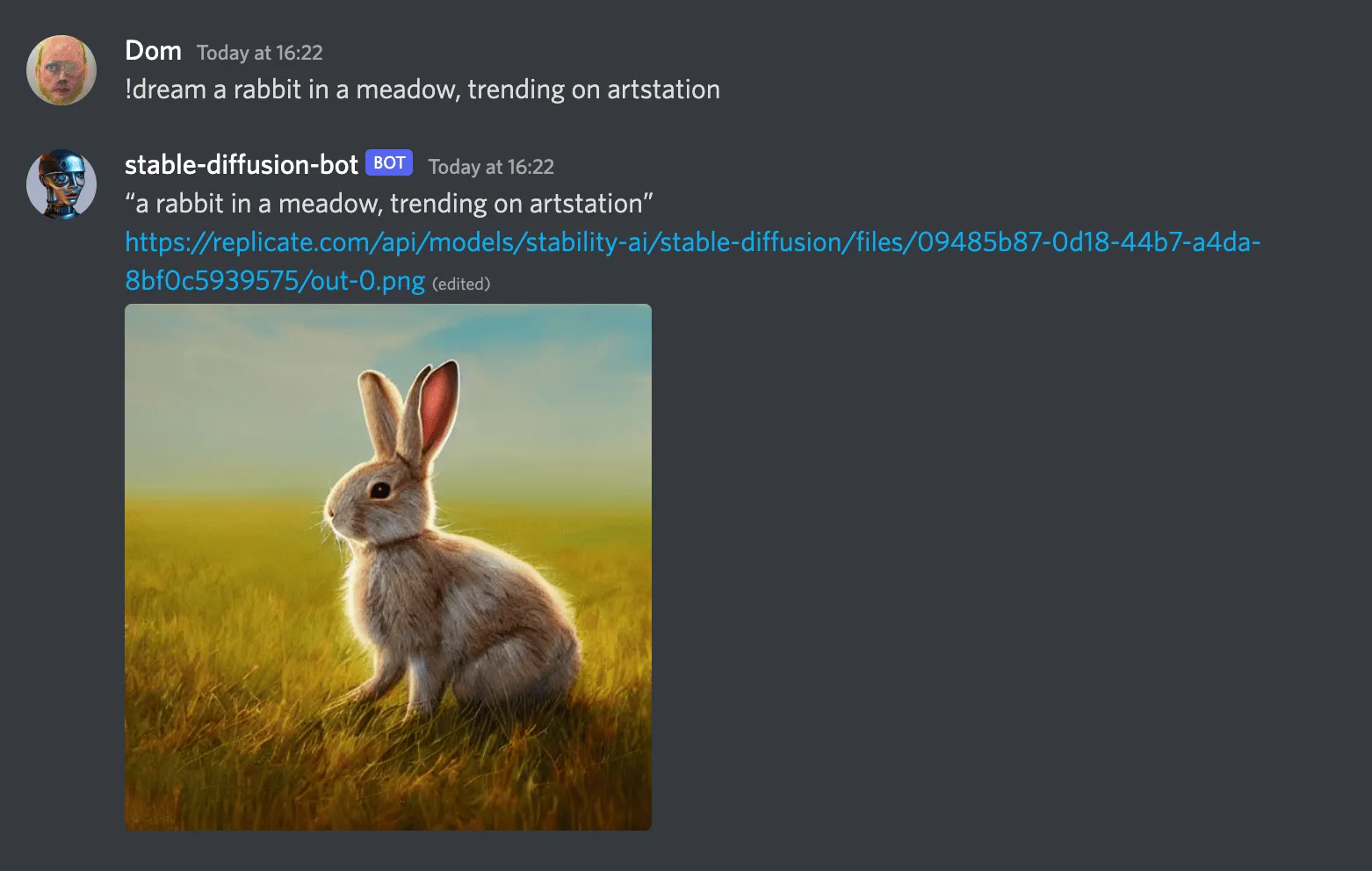

Build a robot artist for your Discord server with Stable Diffusion, Replicate, and Fly.io

A tutorial for building a chat bot that replies to prompts with the output of a text-to-image model.

August 25, 2022 -

Join us at Uncanny Spaces

We're bringing people together to explore what's being created with machine learning.

August 11, 2022 -

Automating image collection

Using CLIP and LAION-5B to collect thousands of captioned images.

August 5, 2022 -

Exploring text to image models

The basics of using the API to create your own images from text.

July 18, 2022 -

A new template for model READMEs

Inspired by model cards, we've created templates for documenting models on Replicate.

July 5, 2022 -

Constraining CLIPDraw

An introduction to differentiable programming and the process of refining generative art models.

May 27, 2022 -

Hello, world!

We're a small team of engineers and machine learning enthusiasts working to make machine learning more accessible.

May 16, 2022