How to use retrieval augmented generation with ChromaDB and Mistral

Over the last few months, Retrieval Augmented Generation (RAG) has emerged as a popular technique for getting the most out of Large Language Models (LLMs) like Llama-2-70b-chat.

In this post, we’ll explore the creation of an example RAG “app” which helps you generate click-worthy titles for Hacker News submissions. All you need to do is provide a working title, idea, or phrase, and even the most boring of words will be transformed into a title destined for the front page of Hacker News.

Admittedly, this is a basic toy idea. It’s not revolutionary, and it may not land your post on the front page of Hacker News. That’s okay, because that’s not the point: the point is to provide you with a practical hands-on feel for how RAG works, and give you the understanding you need to use this technique in your own projects and systems.

Okay, so what is retrieval augmented generation?

Retrieval augmented generation is a technique for enriching your language model outputs by retrieving contextual information from an external data source and including that information as part of your language model prompts. The idea is that when you augment a language model prompt with meaningful external data, the language model is able to respond with deeper understanding and relevance.

This sort of pattern effectively extends the functional context length of a given language model, because instead of being limited to 4,096 tokens (5-ish pages of text), you can query an entire 1,000 page book for meaningful passages, and pull in just the handful of sentences needed to create high-quality responses.
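Here is a minimal sketch of that "pull in just the handful of passages you need" step. It assumes the passages are already ranked by relevance (for example, by a similarity search like the one built later in this post). It also uses a rough 4-characters-per-token heuristic, which is an assumption: real token counts depend on the model's tokenizer. The `estimate_tokens` and `pack_context` names are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token for English text.
    return max(1, len(text) // 4)


def pack_context(ranked_passages: list[str], budget_tokens: int) -> list[str]:
    # Greedily keep the highest-ranked passages that still fit in the token budget.
    selected = []
    used = 0
    for passage in ranked_passages:
        cost = estimate_tokens(passage)
        if used + cost > budget_tokens:
            continue  # too big for what's left; a shorter, lower-ranked passage may still fit
        selected.append(passage)
        used += cost
    return selected


# Made-up passages of different lengths, already sorted from most to least relevant.
passages = ["a" * 4000, "b" * 800, "c" * 2000, "d" * 400]
print([p[0] for p in pack_context(passages, budget_tokens=800)])  # ['b', 'c', 'd']
```

In a real application, the budget would be the model's context length minus the tokens used by your instructions and the expected response.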

RAG is very flexible in that you can use the same data sources across multiple language models or upgrade to the latest language models without needing to re-train or fine-tune any specific models.

Other techniques such as fine-tuning can provide great results for specific use-cases like setting the style/tone of a language model, or teaching a language model how to perform a task or skill that is difficult or too large to explain in every prompt. But if your goal is to integrate an LLM with data, RAG is the way.

The basics of retrieval augmented generation

You may have heard that you need to use embeddings and a vector store to build a RAG application, but that is not entirely true. Those components can be helpful, and we will cover them in this post, but they are not strictly required.

At its most basic level, RAG is really just pasting additional context into the text of your language model prompt. It can be as simple as retrieving the current weather for San Francisco from a realtime weather API, and then passing the JSON response forward in the text of the prompt. Nothing fancy.

In the example prompt below, we instruct the mistral-7b-instruct model to read the input JSON data and respond with a weather report, as if it were a human meteorologist:

[INST]

Given the following JSON weather data, write a weather report:

{
  "location": "San Francisco, CA",
  "temperature": "65ºF",
  "forecast_high": "81ºF",
  "forecast_low": "63ºF",
  "time": "2023-10-09 10:00"
}

You must only report on the data that is provided in the JSON. Do not mention any weather details that are outside the scope of the provided JSON. Do not mention the JSON context data.

All responses must sound like a human meteorologist.

[/INST]

The model responds with:

Good morning! Today in San Francisco, CA, the temperature is currently 65 degrees Fahrenheit.

The forecast for the day calls for a high of 81 degrees and a low of 63 degrees.

It's a beautiful day to explore the city and enjoy the outdoors.

Make sure to stay hydrated and wear sunscreen if you plan on spending time in the sun.

Have a great day!

The ability to pass arbitrary textual information into an LLM prompt is part of what makes LLMs so interesting and exciting. You could include a small CSV table, passages from a book, code, quotes from inspiring philosophers, or even application state. Anything, really, as long as the whole prompt fits within the language model's maximum context length, which is 4,096 tokens for Llama 2.
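The weather prompt above can be assembled in code by serializing the retrieved data and pasting it into the prompt string. In this sketch, `fetch_weather` is hypothetical: it returns canned data instead of calling a real weather API, so the example runs offline.

```python
import json


def fetch_weather(city: str) -> dict:
    # Hypothetical stand-in for a real weather API call: returns canned data.
    return {
        "location": city,
        "temperature": "65ºF",
        "forecast_high": "81ºF",
        "forecast_low": "63ºF",
        "time": "2023-10-09 10:00",
    }


def build_weather_prompt(weather: dict) -> str:
    # Serialize the retrieved data and paste it verbatim into the prompt text.
    context = json.dumps(weather, indent=2, ensure_ascii=False)
    return (
        "[INST]\n"
        "Given the following JSON weather data, write a weather report:\n\n"
        f"{context}\n\n"
        "You must only report on the data that is provided in the JSON.\n"
        "All responses must sound like a human meteorologist.\n"
        "[/INST]"
    )


prompt = build_weather_prompt(fetch_weather("San Francisco, CA"))
print(prompt)
```

That's all "retrieval" means at this level: fetch some data, turn it into text, and put it in the prompt.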

Let’s outline what we’re building

Now that we’re on the same page and understand that RAG will solve all of your problems 🤭, let’s explore how to actually use it with a hands-on example.

As mentioned above, we’re going to build an example that converts titles, ideas, or phrases into Hacker News titles. To make this example a reality, we’ll tap into the wisdom of the crowd by indexing titles of top stories from Hacker News into a vector store and making them queryable via embedding similarity search.

The idea is that inspiration from titles with a proven track record will help to create a better title suggestion.

The general flow goes something like this:

- Construct a dataset that can be indexed and queried.

- Load the dataset into ChromaDB (a vector store).

- Run some test queries against ChromaDB and visualize what is in the database.

- Bring it all together. Query ChromaDB for 10 related popular titles, then prompt mistral-7b-instruct on Replicate to suggest new titles, inspired by the related popular titles.

1. Construct a dataset that can be indexed and queried

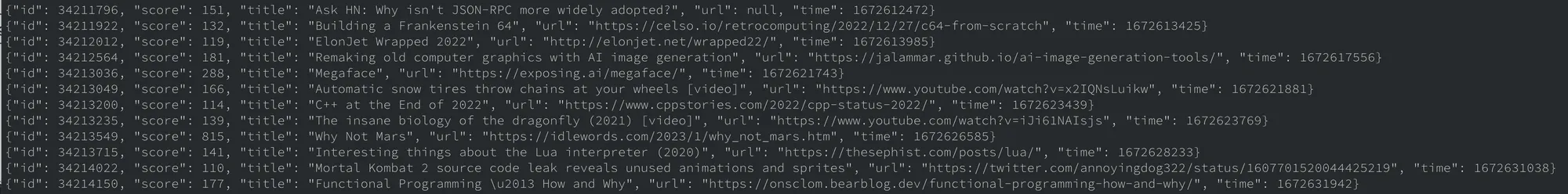

The first thing we need to do is create a dataset of Hacker News titles. Creating this sort of dataset from scratch is kind of annoying and surprisingly complicated, so I’ve done the heavy lifting of scraping the Hacker News API and distilling stories down to a dataset of 13,509 top stories from January 2023 to early October 2023, each of which received 100+ upvotes.

The dataset consists of id, score, title, url, and time fields.

You can download the data from the git repo for this blog post, here: 13509-hn-topstories-2023.jsonl

I won’t bore you with all of the details, but I have included the two scripts I hacked together to build this nice jsonl file. The first script scrapes the Hacker News API by story ID and saves all of the stories from a specific ID range to a SQLite database. The second script queries the SQLite database for stories with 100 or more upvotes and writes them to a .jsonl file.

For our purposes, we really only care about the title field, since that’s the field we’ll use for embeddings. We don’t need to filter on score, because every entry is already “popular”, with 100 or more upvotes.
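The second script isn't reproduced here, but the filtering step it performs can be sketched with Python's built-in `sqlite3` and `json` modules. The `stories` table, its columns, and the Unix-timestamp `time` values are assumptions modeled on the dataset fields above, `export_top_stories` is a hypothetical name, and the sample rows are made up so the example runs; the real scripts live in the git repo.

```python
import json
import sqlite3


def export_top_stories(conn: sqlite3.Connection, out_path: str, min_score: int = 100) -> int:
    # Select stories with at least `min_score` upvotes and write one JSON object per line.
    rows = conn.execute(
        "SELECT id, score, title, url, time FROM stories WHERE score >= ? ORDER BY id",
        (min_score,),
    ).fetchall()
    with open(out_path, "w", encoding="utf-8") as f:
        for id_, score, title, url, time_ in rows:
            record = {"id": id_, "score": score, "title": title, "url": url, "time": time_}
            f.write(json.dumps(record) + "\n")
    return len(rows)


# Hypothetical in-memory database with made-up rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE stories (id INTEGER PRIMARY KEY, score INTEGER, title TEXT, url TEXT, time INTEGER)"
)
conn.executemany(
    "INSERT INTO stories VALUES (?, ?, ?, ?, ?)",
    [
        (1, 250, "Example popular story", "https://example.com/a", 1672531200),
        (2, 42, "Example quiet story", "https://example.com/b", 1672617600),
    ],
)
count = export_top_stories(conn, "top-stories-sample.jsonl")
print(count)
```

Writing JSON Lines (one object per line) keeps the file easy to stream back in line by line, which is exactly what the indexing script below does.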

2. Load the dataset into ChromaDB

Great, now that we have a JSONL dataset, we need to generate embeddings for each of the 13,509 titles and then load everything into a vector store.

But let’s break that down, because the whole idea behind embeddings and vector stores can be confusing.

What are embeddings?

Embedding vectors are numerical representations of content, formatted as a large list of floating point numbers. I like to think of embeddings as a mind-boggling coordinate system, sort of like how GPS maps use two coordinates, latitude and longitude. Embeddings are the same idea, except instead of 2 dimensions, the bge-large-en-v1.5 model we use below produces 1,024 dimensions 🤯.

When embedding vectors begin to cluster together, the documents they represent tend to be similar in semantic meaning, syntax, or style.

Don’t worry if this doesn’t make sense yet. We’ll try to help you visualize things later on in the post.
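"Clustering together" needs a way to measure closeness, and cosine similarity is a common choice for embeddings. This sketch uses tiny, made-up 3-dimensional vectors purely for illustration; real bge-large-en-v1.5 embeddings have 1,024 dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    # Dot product divided by the product of the vector lengths.
    # 1.0 means the vectors point in the same direction; values near 0 mean unrelated.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up "embeddings" for three titles.
sqlite_ext = [0.9, 0.1, 0.0]   # e.g. "sqlean: A set of SQLite extensions"
sqlite_tool = [0.8, 0.2, 0.1]  # e.g. "Making SQLite extensions pip install-able"
toddler = [0.0, 0.2, 0.9]      # e.g. "Teaching Elixir to my toddler"

print(cosine_similarity(sqlite_ext, sqlite_tool))  # high: similar topics
print(cosine_similarity(sqlite_ext, toddler))      # low: different topics
```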

There are many embedding models to choose from. For this post, we will generate embeddings using the bge-large-en-v1.5 model on Replicate. This model was released in September 2023, and at the time of this writing it’s the top-performing embedding model on Hugging Face’s embedding model leaderboard.

We’ve put together a collection of embedding models for you to try on Replicate. These include other text embedding models such as all-mpnet-base-v2, image embedding models such as clip-features, and even multimodal models like imagebind, which can generate embeddings for text, images, and audio in the same space.

What is a vector store?

Vector stores are a special type of database where we can store and query embeddings, and their associated documents. Vector stores really shine when you are searching for things that are “semantically similar”. For example, if you wanted to search for documents similar to “cat with a hat,” you might find results related to cats, hats, or other animals wearing hats.

For this post, we will be using ChromaDB, an open-source vector database. Our usage of Chroma is very basic, so you could easily swap in a variety of other vector stores instead.

Some popular alternatives are Pinecone, Weaviate, and pgvector.
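To demystify what a vector store does, here is a toy sketch. `TinyVectorStore` is hypothetical and is not how ChromaDB or the alternatives above are implemented: real vector stores persist data and typically use approximate nearest-neighbor indexes to stay fast at scale, while this one compares the query against every stored vector. The method names loosely echo ChromaDB's `upsert` and `query`, but the signatures are not ChromaDB's, and the embeddings are made-up 3-dimensional vectors.

```python
import math


class TinyVectorStore:
    """A toy in-memory vector store: brute-force cosine search, no index, no persistence."""

    def __init__(self) -> None:
        self.ids: list[str] = []
        self.documents: list[str] = []
        self.vectors: list[list[float]] = []

    @staticmethod
    def _normalize(v: list[float]) -> list[float]:
        norm = math.sqrt(sum(x * x for x in v))
        return [x / norm for x in v]

    def upsert(self, id_: str, document: str, embedding: list[float]) -> None:
        # Insert a new entry, or overwrite the existing entry with the same id.
        vec = self._normalize(embedding)
        if id_ in self.ids:
            i = self.ids.index(id_)
            self.documents[i] = document
            self.vectors[i] = vec
        else:
            self.ids.append(id_)
            self.documents.append(document)
            self.vectors.append(vec)

    def query(self, embedding: list[float], n_results: int = 3) -> list[str]:
        q = self._normalize(embedding)
        # With unit-length vectors, the dot product equals cosine similarity.
        scores = [sum(a * b for a, b in zip(q, v)) for v in self.vectors]
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        return [self.documents[i] for i in ranked[:n_results]]


store = TinyVectorStore()
store.upsert("1", "sqlean: A set of SQLite extensions", [0.9, 0.1, 0.0])
store.upsert("2", "Unpacking Elixir: Concurrency", [0.1, 0.9, 0.1])
store.upsert("3", "Making SQLite extensions pip install-able", [0.8, 0.2, 0.1])
print(store.query([1.0, 0.0, 0.0], n_results=2))
```

Brute force is fine for a few thousand vectors; indexes matter once you have millions.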

Let’s write some code

Before running any code, you’ll need to install a few Python dependencies.

pip install replicate chromadb tqdm

You’ll also need a Replicate API token, which the replicate client reads from the REPLICATE_API_TOKEN environment variable.

In the following script, we perform a handful of operations:

- Initialize ChromaDB on disk, in the ./chromadb directory. This is where the database files will live.

- Load all of the JSONL entries into a list of dictionaries.

- In batches of 250 entries:

  - Generate 250 embedding vectors with a single Replicate prediction.

  - Construct ChromaDB-friendly lists of inputs for ids, titles, metadata, and embeddings.

  - Upsert 250 entries to ChromaDB, along with all of their embeddings and metadata.

import json

import replicate

import chromadb

from tqdm.auto import tqdm

# Initialize the chromadb directory, and client.

client = chromadb.PersistentClient(path="./chromadb")

collection = client.get_or_create_collection(

name=f"hackernews-topstories-2023"

)

# Create an empty list which we will populate with jsonl entries.

hn_dataset = []

# Load the 13509-hn-topstories-2023.jsonl into a list of dictionaries

with open("13509-hn-topstories-2023.jsonl", "r") as f:

for line in f:

hn_dataset.append(json.loads(line))

# Generate embeddings, and index titles in batches of 250.

batch_size = 250

# Use tqdm to show a friendly progress bar.

for i in tqdm(range(0, len(hn_dataset), batch_size)):

# set end position of batch

i_end = min(i + batch_size, len(hn_dataset))

# Get next batch of 250 lines

batch = hn_dataset[i : i + batch_size]

# When storing data in Chromadb, we construct a list of titles, ids, and

# metadata.

# NOTE: It is important that each of these lists is the same size, and that

# each list index position corresponds with the others.

batch_titles = [story["title"] for story in batch]

batch_ids = [str(story["id"]) for story in batch]

batch_metadata = [dict(score=story["score"], time=story['time']) for story in batch]

# Generate embeddings, 250 titles at a time.

batch_embeddings = replicate.run(

"nateraw/bge-large-en-v1.5:9cf9f015a9cb9c61d1a2610659cdac4a4ca222f2d3707a68517b18c198a9add1",

input={"texts": json.dumps(batch_titles)},

)

# Upsert all of the embeddings, ids, metadata, and title strings into Chromadb.

collection.upsert(

ids=batch_ids,

metadatas=batch_metadata,

documents=batch_titles,

embeddings=batch_embeddings,

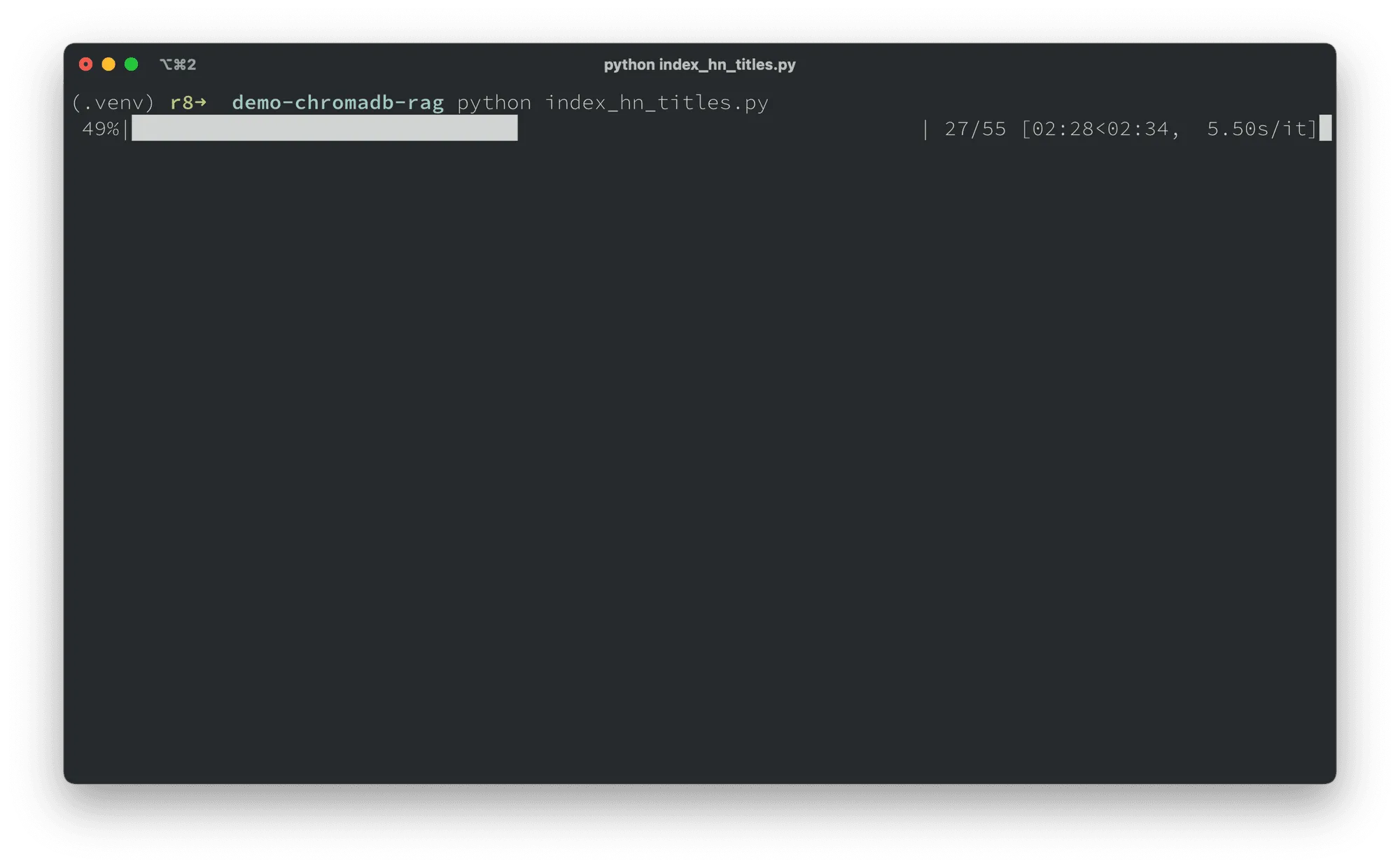

)The script uses tqdm to display a progress bar, along with a time estimate. It takes ~5.5s to generate embeddings for and insert all of the metadata for 250 titles. The whole process should take about 5 minutes.

When you run the script with python index_hn_titles.py, you’ll find that the ChromaDB data is persisted to the ./chromadb directory.

3. Running example queries with ChromaDB

Awesome. Now that we have a populated vector store database, how can we verify that everything worked as expected? There are two ways I like to test out indexed embeddings.

The first, and easiest, is to just make a query with an example string and see if the results look correct. The second is to visualize the embeddings in 2D or 3D space, to see if they are clustering together in a meaningful way.

In this script, we perform the following operations:

- Initialize the chromadb client

- Query the chromadb with a test string

- Print out the results

import json

import chromadb
import replicate
from chromadb import Documents

# This function will be used to convert the query string to embeddings, so we can
# perform a similarity search against the embedding space.
#
# This is configured to use the bge-large-en-v1.5 embeddings model.
def generate_embeddings(texts: Documents):
    return replicate.run(
        "nateraw/bge-large-en-v1.5:9cf9f015a9cb9c61d1a2610659cdac4a4ca222f2d3707a68517b18c198a9add1",
        input={"texts": json.dumps(texts)},
    )

# Initialize the ChromaDB client.
client = chromadb.PersistentClient(path="./chromadb")

# Note the `embedding_function` keyword argument. When supplied like this,
# ChromaDB will seamlessly convert a query string to embedding vectors, which get
# used for similarity search.
collection = client.get_or_create_collection(
    name="hackernews-topstories-2023", embedding_function=generate_embeddings
)

# We will be searching for results that are similar to this string.
query_string = "how to create a sqlite extension"

# Perform the ChromaDB query.
results = collection.query(
    query_texts=[query_string],
    n_results=10,
)

# Create a string from all of the results.
results = '\n'.join(results['documents'][0])

# Print the results.
print(results)

This code prints the following titles, which all seem roughly similar to the test query string "how to create a sqlite extension":

Making SQLite extensions pip install-able

SQLite-Utils

sqlean: A set of SQLite extensions

Lightweight SQLite Editor for Windows

Libgsqlite: A SQLite extension which loads a Google Sheet as a virtual table

SQLite 3.43

Code Generator for SQLite

SQLite 3.42.0

Mycelite: SQLite extension to synchronize changes across SQLite instances

Cloud Backed SQLite

🤓 Sidebar tangent: visualizing embeddings

As a sidebar, I like to build intuition about complex subjects by thinking about them visually.

The easiest way to visualize what is happening inside embedding space is to plot embedding vectors as points on a 2D or 3D chart. To do this, we first need to project our embedding vectors from 1,024 dimensions down to 3 dimensions. We can use dimensionality reduction techniques like t-SNE (t-distributed stochastic neighbor embedding) or UMAP (uniform manifold approximation and projection) to perform this reduction, and then display the resulting 3D points on screen.
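t-SNE and UMAP need extra libraries. As a simpler stand-in, here's a sketch that projects embeddings down to 3D with principal component analysis (PCA), using only NumPy. Note that PCA is a different, linear technique and won't reproduce the clusters t-SNE or UMAP would show, but it's a quick first look. The random input is a placeholder for real 1,024-dimensional bge-large-en-v1.5 embeddings, and `pca_project` is a hypothetical name.

```python
import numpy as np


def pca_project(embeddings: np.ndarray, n_components: int = 3) -> np.ndarray:
    # Center each dimension, then project onto the top principal directions,
    # which are the first rows of V^T from the singular value decomposition.
    centered = embeddings - embeddings.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


# Placeholder data: 500 random 1,024-dimensional vectors instead of real embeddings.
rng = np.random.default_rng(0)
embeddings = rng.normal(size=(500, 1024))
points_3d = pca_project(embeddings)
print(points_3d.shape)  # (500, 3)
```

If scikit-learn is installed, `sklearn.manifold.TSNE(n_components=3).fit_transform(embeddings)` gives the t-SNE version of the same projection.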

My favorite way to display this sort of data is to use the TensorFlow Projector tool to visualize and interact with embedding vectors in 3D space.

Even at first glance, the video below shows the points clumping together. Clustering like this indicates that the clustered points are semantically similar to each other.

If there were no visible clumping, just a massive mess of dots with no discernible pattern, that would suggest something is not quite right with your embeddings.

The video below contains a visualization of the t-SNE dimension reduction process evolving into clusters over the course of a few hundred iterations. Towards the end of the video, you can see that when I click on a point that is about sqlite, all of the nearest neighbors are also about sqlite.

This always blows my mind, and feels like magic.

To use TensorFlow Projector, you need to convert your metadata and embeddings to a specific TSV format. I won’t cover how to do this step by step, but I’ve included a script in the git repo which translates an entire ChromaDB collection into two files (embeddings.tsv and metadata.tsv), which can be loaded into TensorFlow Projector and visualized interactively.

4. Bring it all together

Now we’ll bring it all together by passing in a working title, querying ChromaDB for 10 related popular titles, then prompting mistral-7b-instruct to suggest new titles that have been inspired by the 10 related popular titles.

import json
import sys

import chromadb
import replicate
from chromadb import Documents

# Use bge-large-en-v1.5 on Replicate to generate embeddings.
def generate_embeddings(texts: Documents):
    return replicate.run(
        "nateraw/bge-large-en-v1.5:9cf9f015a9cb9c61d1a2610659cdac4a4ca222f2d3707a68517b18c198a9add1",
        input={"texts": json.dumps(texts)},
    )

# Instantiate the chromadb client, with embedding function.
client = chromadb.PersistentClient(path="./chromadb")
collection = client.get_or_create_collection(
    name="hackernews-topstories-2023", embedding_function=generate_embeddings
)

# Accept a user prompt from the first command line argument.
user_prompt = sys.argv[1]

# Query ChromaDB for the 10 most similar titles to the user prompt.
results = collection.query(
    query_texts=[user_prompt],
    n_results=10,
)

# Concatenate the results into a single string, which we will shove into the prompt.
successful_titles = '\n'.join(results['documents'][0])

# LLM prompt template.
# NOTE: The [INST] and [/INST] tags are required for mistral-7b-instruct to leverage instruction fine-tuning.
PROMPT_TEMPLATE = f'''[INST]
You are an expert in all things hackernews. Your goal is to help me write the most click worthy hackernews title that will get the most upvotes. You will be given a USER_PROMPT, and a series of SUCCESSFUL_TITLES. You will respond with 5 suggestions for better hackernews titles.

All of your suggestions should be structured in the same format and tone as the previously successful SUCCESSFUL_TITLES. Make sure you do not include specific versions from the SUCCESSFUL_TITLES in your suggestions.

USER_PROMPT: {user_prompt}

SUCCESSFUL_TITLES: {successful_titles}

SUGGESTIONS:
[/INST]
'''

# Prompt the mistral-7b-instruct LLM.
mistral_response = replicate.run(
    "a16z-infra/mistral-7b-instruct-v0.1:83b6a56e7c828e667f21fd596c338fd4f0039b46bcfa18d973e8e70e455fda70",
    input={
        "prompt": PROMPT_TEMPLATE,
        "temperature": 0.75,
        "max_new_tokens": 2048,
    },
)

# Concatenate the streamed response into a single string.
suggestions = ''.join([str(s) for s in mistral_response])

# Print the suggestions.
print(suggestions)
print('====')
print('PROMPT_TEMPLATE', PROMPT_TEMPLATE)

I named this script hnify.py, so I’m able to run it with the following command: python hnify.py "teaching Elixir to my toddler". The script will print out 5 suggested titles, followed by the entire text prompt that was sent to mistral-7b-instruct.

When experimenting with new RAG prompts like this, it is always beneficial to inspect the fully populated prompt template. Reading the entire prompt will help you understand what the vector store is returning, and give you a feel for how your language model of choice is responding to the retrieval-sourced data.

Replicate makes iteratively tweaking prompts very easy. For example, after I ran this script, I was able to find the single prediction in the Replicate dashboard where the language model prompt was run. Here’s my link: https://replicate.com/p/aosuuqlb43zvxbsptfylyewknq

On that link you can click the “Replicate” button, and quickly test prompt changes in the text field on the page. This workflow makes it very fast to iterate on language model prompts while using realistic vector store context.

Here is what my entire prompt looks like:

# Prompt template that is sent to mistral-7b-instruct

[INST]

You are an expert in all things hackernews. Your goal is to help me write the most click worthy hackernews title that will get the most upvotes. You will be given a USER_PROMPT, and a series of SUCCESSFUL_TITLES. You will respond with 5 suggestions for better hackernews titles.

All of your suggestions should be structured in the same format and tone as the previously successful SUCCESSFUL_TITLES. Make sure you do not include specific versions from the SUCCESSFUL_TITLES in your suggestions.

USER_PROMPT: teaching Elixir to my toddler

SUCCESSFUL_TITLES: Elixir for Humans Who Know Python

Scripting with Elixir

Teaching ChatGPT to speak my son’s invented language

Physical Knobs and Elixir

Unpacking Elixir: Syntax

The Comprehensive Guide to Elixir's List Comprehension (2022)

From Python to Elixir Machine Learning

How to sell Elixir again

Elixir is still safe

Unpacking Elixir: Real-Time and Latency

Elixir for Ruby developers: the three most important differences

Show HN: Learn Python with Minecraft

Single File Elixir Scripts

Unpacking Elixir: Resilience

Unpacking Elixir: Concurrency

Elixir – Why the dot when calling anonymous functions?

Elixir and Rust is a good mix

Owl: A toolkit for writing command-line user interfaces in Elixir

A Breakdown of HTTP Clients in Elixir

Visual programming with Elixir: Learning to write binary parsers (2019)

SUGGESTIONS:

[/INST]

Finally, the script prints out the following Hacker News-ified titles:

python hnify.py "teaching Elixir to my toddler"

1. Elixir for Toddlers: Teaching the Basics of Functional Programming

2. A Beginner's Guide to Elixir: Teaching Your Toddler to Code

3. Elixir for Kids: A Step-by-Step Guide to Learning Functional Programming

4. Teaching Elixir to Your Toddler: A Fun and Easy Approach

5. Elixir for Toddlers: An Introduction to Functional Programming for Beginners

These are pretty good! In general, though, I’ve found that it suggests words like “Unleashing” and “Revolutionizing”, and other marketing buzzwords, too frequently. We’ll leave this as an exercise for the reader: what changes would you make to the prompt to make it less marketing-y?
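One possible starting point, offered as a sketch rather than a recommended fix, is to parse the numbered suggestions out of the model's response and flag any that contain buzzwords you want to avoid. `parse_suggestions`, `flag_buzzwords`, and the `BUZZWORDS` set are hypothetical, and the second suggestion in the sample response is invented for illustration. You'd likely pair this with an instruction in the prompt itself, such as asking the model to avoid marketing language.

```python
import re

# Hypothetical list of words to discourage; tune it to taste.
BUZZWORDS = {"unleashing", "revolutionizing", "game-changing", "supercharge"}


def parse_suggestions(response: str) -> list[str]:
    # Pull out lines that look like "1. Some title" and drop the numbering.
    pattern = r"^\s*\d+\.\s+(.+)$"
    return [m.group(1).strip() for m in re.finditer(pattern, response, re.MULTILINE)]


def flag_buzzwords(title: str) -> set[str]:
    # Lowercase every word (hyphenated words stay whole) and intersect with the buzzword set.
    words = {w.lower() for w in re.findall(r"[A-Za-z][A-Za-z-]*", title)}
    return words & BUZZWORDS


# Made-up model response; the second line is invented to show a flagged title.
response = """1. Elixir for Toddlers: Teaching the Basics of Functional Programming
2. Unleashing Elixir: Revolutionizing How Toddlers Learn to Code"""

for title in parse_suggestions(response):
    print(title, "->", sorted(flag_buzzwords(title)) or "ok")
```

Flagged titles could be dropped, or the prompt could be re-run with a stronger instruction.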

That’s a wrap! It is now time to post to Hacker News and see if our new retrieval augmented generation overlords have helped me get internet famous.

Next steps

- Try mistralai/mistral-7b-v0.1 and mistralai/mistral-7b-instruct-v0.1 on Replicate. It is shocking how well this model works, given that it has only 7B parameters.

- The Mistral team released a paper in October 2023 describing how they built the model in a way that performs better than larger models.

- Test out some of the interesting new mistral fine-tunes on Replicate. For example, nateraw/mistral-7b-openorca was fine-tuned on the Open Orca dataset for chat, and could make for an ideal large language model for retrieval augmented generation.

- Check out the associated git repo on GitHub, which contains all of the code from the post, as well as some additional scripts for creating the Hacker News dataset and exporting ChromaDB embeddings to TensorFlow Projector TSV files.

- If you found this post helpful, let us know on X at @replicate, or join our community Discord.