FLUX.1 Tools – Control and steerability for FLUX

The team at Black Forest Labs is back with FLUX.1 Tools, a new set of models that add control and steerability to their FLUX text-to-image model.

The FLUX.1 Tools lineup includes four new features:

- Fill: Inpainting and outpainting, like a magic AI paintbrush for precise edits.

- Canny: Use edge detection to generate images with precise structure.

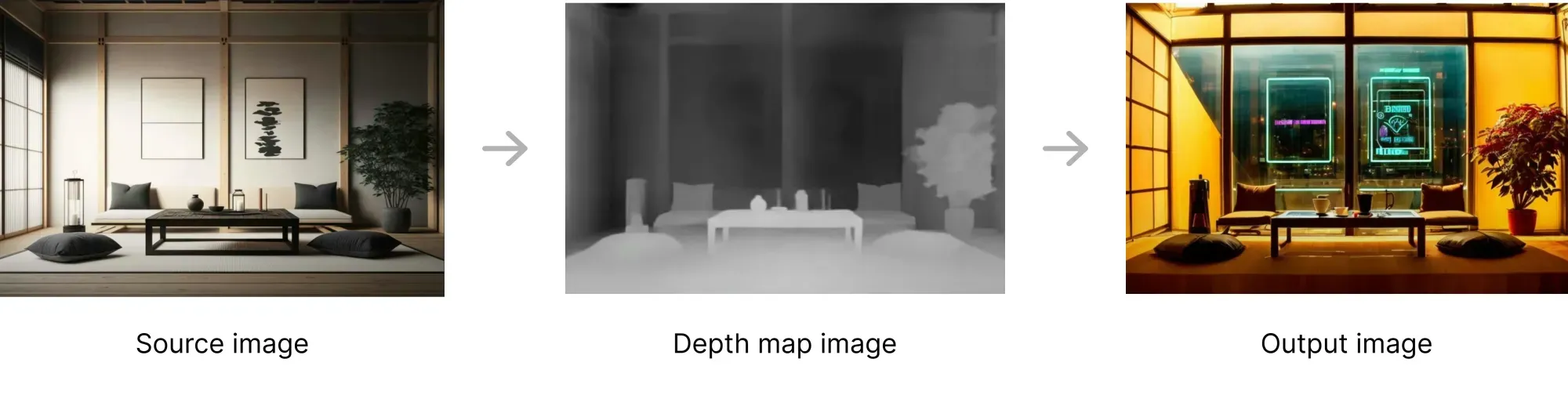

- Depth: Use depth maps to generate images with realistic perspective.

- Redux: An adapter for the FLUX.1 base models that you can use to create variations of images.

Fill, Canny, and Depth are available for both the FLUX.1 [dev] and FLUX.1 [pro] models, and Redux is available for FLUX.1 [dev] and FLUX.1 [schnell]. All of these models are now on Replicate.

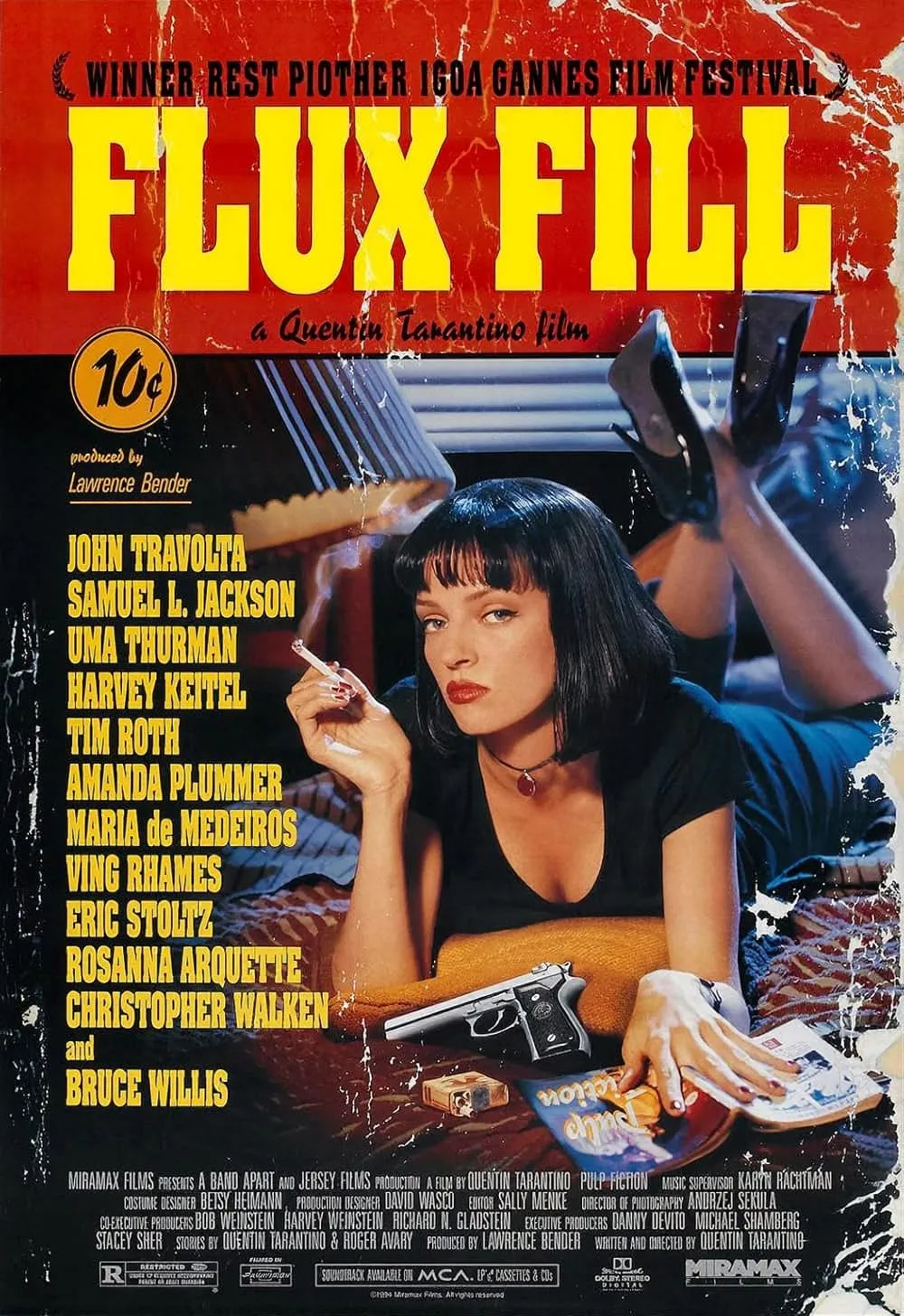

FLUX Fill is great at text inpainting

One of the most exciting new features is FLUX Fill’s ability to inpaint text. Simply mask out the text you want to change and prompt for new words. FLUX Fill will inpaint that text while also matching the style of the original.

In this example we used FLUX Fill to quickly change the text “FLUX Dev” to “Fill Dev”. Our mask covered the word “FLUX”, and our prompt was:

a photo of misty woods with the text “FILL DEV”

It just works.
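To make the mask-and-prompt workflow concrete, here is a minimal, stdlib-only Python sketch that builds a rectangular inpainting mask and encodes it as a binary PGM image. It assumes the common inpainting convention that white (255) marks pixels to repaint and black (0) marks pixels to keep; check the model's input documentation before relying on that. The box coordinates and the `build_fill_input` field names (`image`, `mask`, `prompt`) are hypothetical placeholders, not a confirmed request schema.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom); right/bottom are exclusive


def rect_mask(width: int, height: int, box: Box) -> List[List[int]]:
    """Return a height x width mask: 255 inside `box` (repaint), 0 elsewhere (keep).

    Assumes the white-means-repaint convention described in the lead-in.
    """
    left, top, right, bottom = box
    return [
        [255 if left <= x < right and top <= y < bottom else 0 for x in range(width)]
        for y in range(height)
    ]


def to_pgm(mask: List[List[int]]) -> bytes:
    """Encode the mask as a binary PGM (P5) file: ASCII header, then one byte per pixel."""
    height, width = len(mask), len(mask[0])
    header = f"P5\n{width} {height}\n255\n".encode("ascii")
    return header + bytes(value for row in mask for value in row)


def build_fill_input(image_path: str, mask_path: str, prompt: str) -> dict:
    """Assemble a request payload. Hypothetical key names; match them to the model's schema."""
    return {"image": image_path, "mask": mask_path, "prompt": prompt}
```

For the misty-woods example, you would size the mask to the photo, set the box around the word "FLUX", write `to_pgm(...)` to a file (converting it to PNG if the service requires that), and pair it with the prompt shown above.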

All the new models

FLUX.1 [dev] models:

- Fill

- Canny

- Depth

- Redux

FLUX.1 [pro] models:

- Fill

- Canny

- Depth

FLUX.1 [schnell] models:

- Redux

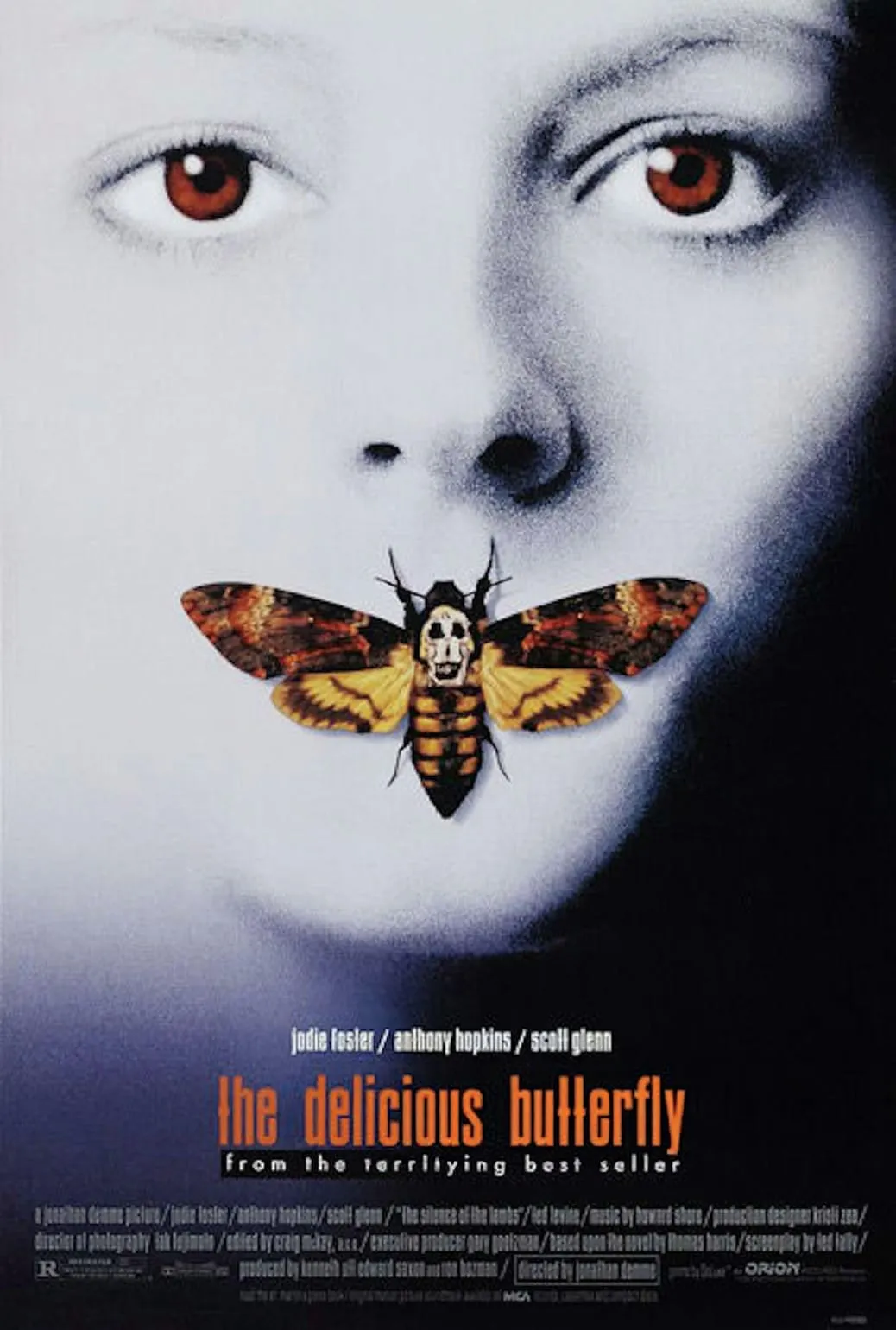

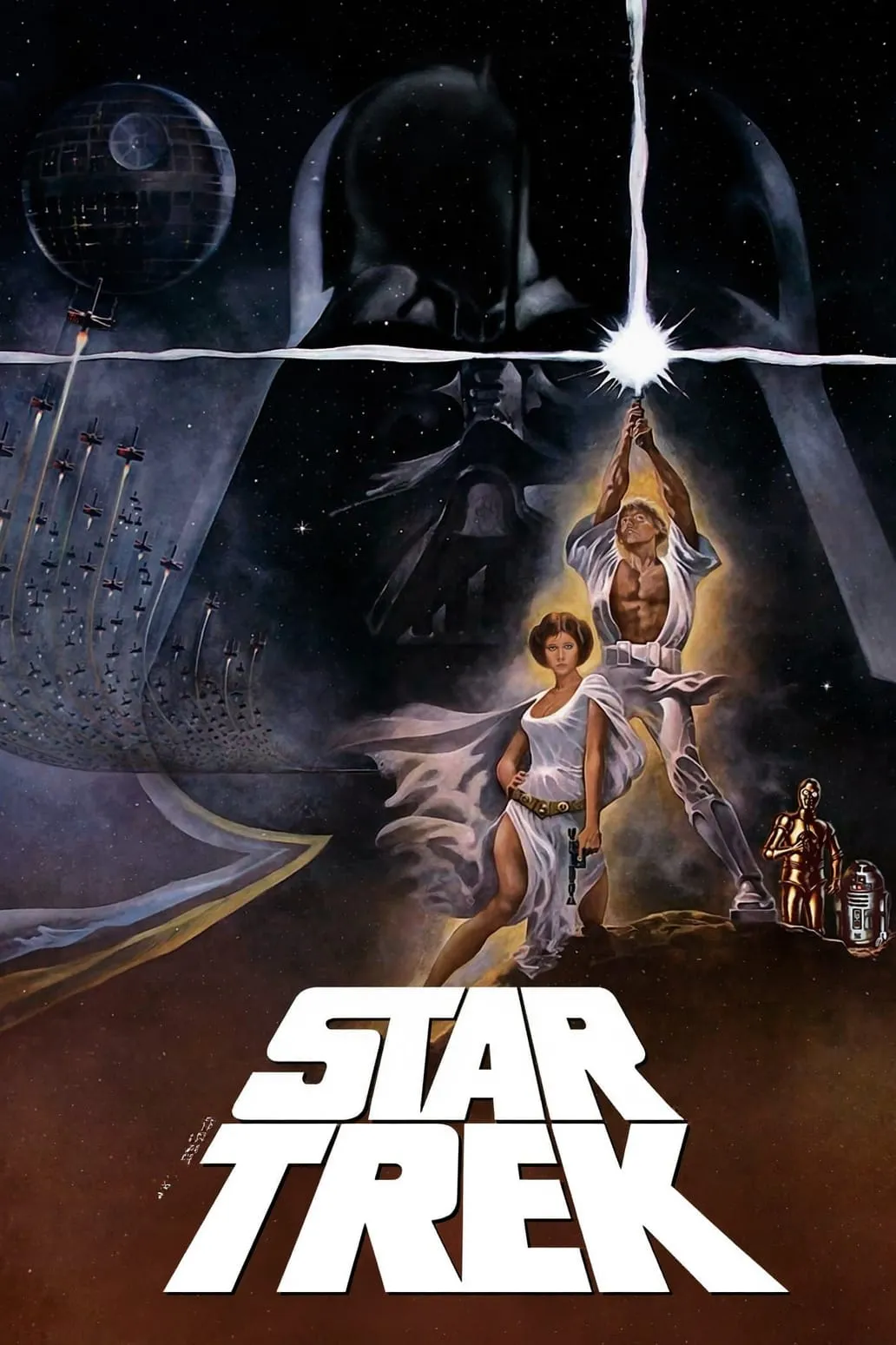

FLUX Redux

You can use FLUX Redux to generate variations of images. When you give it an input image, it will generate a new version with subtle differences, while maintaining the core elements of the original.

You can also customize the output by adding a text prompt, allowing for creative restyling of existing images.

Redux is available as its own model for FLUX.1 [dev] and FLUX.1 [schnell], and will soon be available as a new feature on the FLUX.1 [pro], FLUX1.1 [pro], and FLUX1.1 [pro] Ultra models.

What is ControlNet?

ControlNet is an open-source neural network architecture that lets you use “conditioning images” to guide image generation models.

Combined with a text prompt, conditioning images steer the model toward producing specific results, like replicating the structure of an outline or the depth of a scene, while still allowing for creativity in the generated details.
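To illustrate how a conditioning image can be added without disrupting the base model, here is a toy Python sketch of the original ControlNet design by Zhang et al.: a trainable copy of a block receives the input plus the conditioning signal, and its output is added back through "zero convolutions", layers whose weights start at zero. Real ControlNets use convolutional layers inside a diffusion network; here each "layer" is a hypothetical elementwise affine function on lists of floats. This is not necessarily how the FLUX.1 Canny and Depth models are built internally.

```python
from dataclasses import dataclass
from typing import List

Vector = List[float]


@dataclass
class Affine:
    """Elementwise y = weight * x + bias; a toy stand-in for a neural network layer."""
    weight: float
    bias: float

    def __call__(self, v: Vector) -> Vector:
        return [self.weight * x + self.bias for x in v]


def zero_conv() -> Affine:
    """Toy 'zero convolution': a layer whose weight and bias start at exactly zero."""
    return Affine(0.0, 0.0)


def add(a: Vector, b: Vector) -> Vector:
    return [x + y for x, y in zip(a, b)]


@dataclass
class ToyControlNetBlock:
    frozen: Affine     # F(.; theta): the original block, kept locked
    trainable: Affine  # F(.; theta_c): trainable copy of that block
    zero_in: Affine    # Z(.; theta_z1): injects the conditioning signal
    zero_out: Affine   # Z(.; theta_z2): feeds the copy's output back

    def __call__(self, x: Vector, condition: Vector) -> Vector:
        # y_c = F(x) + Z2(F_c(x + Z1(c)))
        copy_out = self.trainable(add(x, self.zero_in(condition)))
        return add(self.frozen(x), self.zero_out(copy_out))


def make_block(base: Affine) -> ToyControlNetBlock:
    # The trainable copy starts with the same parameters as the frozen block.
    return ToyControlNetBlock(
        frozen=base,
        trainable=Affine(base.weight, base.bias),
        zero_in=zero_conv(),
        zero_out=zero_conv(),
    )
```

With both zero layers at their initial values, the block reproduces the frozen model's output exactly, whatever the conditioning input is. Only once training moves those weights away from zero does the conditioning image begin to steer the result.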

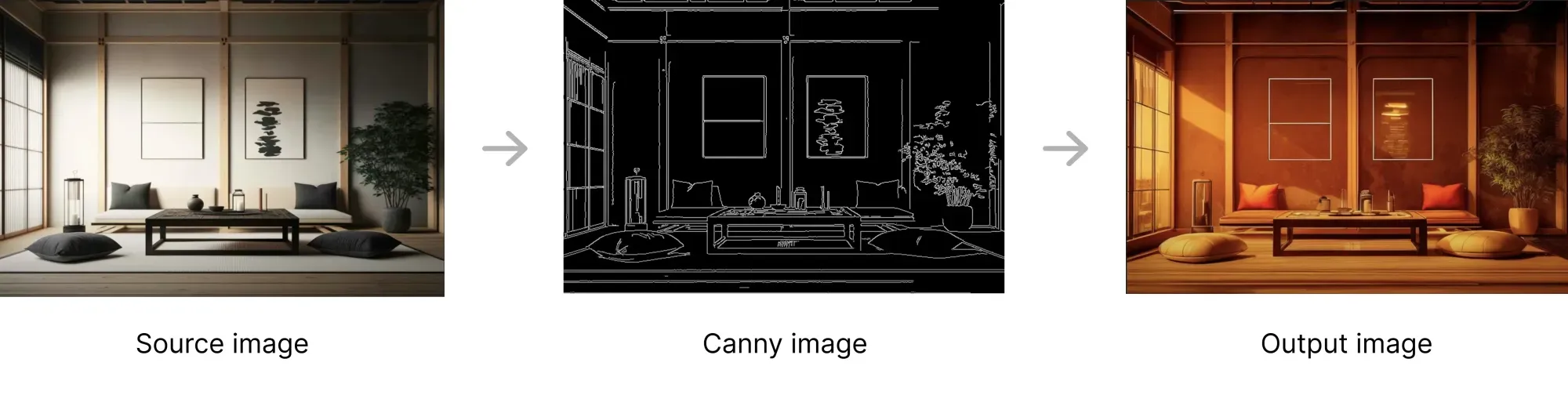

What is ControlNet Canny?

Canny edge detection is a classic method for finding edges in an image. It simplifies a picture into lines and boundaries that outline the shapes of objects. ControlNet can use these edges as a conditioning image so that the generated output follows the structure or outline provided. Canny edge detection is great for turning sketches or outlines into fleshed-out, detailed images. You can also use it to preserve the edges of an existing image while generating a new variation of that image.
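The steps behind Canny edge detection can be sketched in plain Python. This is a minimal, unoptimized illustration of the classic pipeline (Gaussian smoothing, Sobel gradients, non-maximum suppression, then double thresholding with hysteresis) on a small grayscale image stored as a list of rows. The thresholds are arbitrary assumptions, border pixels are skipped for simplicity, and in practice you would use a library routine such as OpenCV's `cv2.Canny`.

```python
import math
from collections import deque
from typing import List

Image = List[List[float]]

GAUSSIAN = [[1 / 16, 2 / 16, 1 / 16], [2 / 16, 4 / 16, 2 / 16], [1 / 16, 2 / 16, 1 / 16]]
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def filter3x3(img: Image, kernel: List[List[float]]) -> Image:
    """Apply a 3x3 filter (cross-correlation), replicating pixels at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for ky in range(3):
                for kx in range(3):
                    yy = min(max(y + ky - 1, 0), h - 1)
                    xx = min(max(x + kx - 1, 0), w - 1)
                    acc += kernel[ky][kx] * img[yy][xx]
            out[y][x] = acc
    return out


def canny(img: Image, low: float, high: float) -> List[List[int]]:
    """Return a binary edge map (1 = edge) using the classic Canny steps."""
    h, w = len(img), len(img[0])
    smooth = filter3x3(img, GAUSSIAN)  # 1. reduce noise
    gx = filter3x3(smooth, SOBEL_X)    # 2. horizontal and vertical gradients
    gy = filter3x3(smooth, SOBEL_Y)
    mag = [[math.hypot(gx[y][x], gy[y][x]) for x in range(w)] for y in range(h)]

    # 3. Non-maximum suppression: keep only local maxima along the gradient direction.
    thin = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            angle = math.degrees(math.atan2(gy[y][x], gx[y][x])) % 180
            if angle < 22.5 or angle >= 157.5:
                dx, dy = 1, 0   # gradient roughly horizontal
            elif angle < 67.5:
                dx, dy = 1, 1   # gradient points down-right (y grows downward)
            elif angle < 112.5:
                dx, dy = 0, 1   # gradient roughly vertical
            else:
                dx, dy = -1, 1  # gradient points down-left
            m = mag[y][x]
            if m >= mag[y + dy][x + dx] and m >= mag[y - dy][x - dx]:
                thin[y][x] = m

    # 4. Hysteresis: strong pixels seed edges; weak pixels survive only if connected to one.
    edges = [[0] * w for _ in range(h)]
    queue = deque((y, x) for y in range(h) for x in range(w) if thin[y][x] >= high)
    for y, x in queue:
        edges[y][x] = 1
    while queue:
        y, x = queue.popleft()
        for ny in range(y - 1, y + 2):
            for nx in range(x - 1, x + 2):
                if 0 <= ny < h and 0 <= nx < w and not edges[ny][nx] and thin[ny][nx] >= low:
                    edges[ny][nx] = 1
                    queue.append((ny, nx))
    return edges
```

On an image whose left half is black and right half is white, this marks the two columns at the boundary and nothing else. Rendered as white lines on black, an edge map like this is the kind of conditioning image described in the paragraph on ControlNet Canny.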

What is ControlNet Depth?

A depth map is a grayscale image in which each pixel's brightness represents how far that part of the scene is from the camera; in the common convention, lighter areas are closer and darker areas are farther away. ControlNet can use depth maps to guide a model toward images with realistic perspective, so that objects are arranged and sized correctly in the output.
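The light-and-dark encoding of a depth map can be shown with a short Python sketch that turns per-pixel distances into 8-bit gray levels. It assumes the convention where nearer points are lighter and farther points are darker; some tools use the opposite, so check what a given model expects. The function name and the sample distances are illustrative only.

```python
from typing import List


def depth_to_grayscale(depth: List[List[float]]) -> List[List[int]]:
    """Map distances to 0-255 so the nearest point is white (255) and the farthest is black (0)."""
    values = [d for row in depth for d in row]
    near, far = min(values), max(values)
    span = far - near
    if span == 0:
        # A flat scene has no depth variation; render it as mid-gray.
        return [[128 for _ in row] for row in depth]
    # Linear rescale: closer (smaller distance) -> brighter pixel.
    return [[round(255 * (far - d) / span) for d in row] for row in depth]


# A 2x2 scene with distances from 1 to 5 units.
print(depth_to_grayscale([[1.0, 2.0], [4.0, 5.0]]))
```

A linear rescale keeps the sketch simple; real depth estimators often output relative or inverse depth, which changes how gray levels map back to physical distance.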

Further reading

To learn more, check out our ControlNet guide.