Introducing our new search API

We’ve added a new search API to help you find the best models. This API is currently in beta, but it’s already available to all users in our TypeScript and Python SDKs, and our MCP servers.

Here’s an example of how to use it with cURL:

curl -s \

-H "Authorization: Bearer $REPLICATE_API_TOKEN" \

"https://api.replicate.com/v1/search?query=lip+sync"Here’s a video of the search API in action using our MCP server with Claude Desktop:

More metadata

The new search API returns results for models, collections, and documentation pages that match your query.

{

query: "lip sync",

models: [

{model: { url, run_count, etc }, metadata: { tags, score, etc }},

{model: { url, run_count, etc }, metadata: { tags, score, etc }},

{model: { url, run_count, etc }, metadata: { tags, score, etc }},

],

collections: [

{name, slug, description},

{name, slug, description},

],

pages: [

{name, href},

{name, href},

],

}For model results, the API returns a model object with all the data you would normally expect like url, description, and run_count, but it also returns a new metadata object for each model that includes properties like a longer and more detailed generated_description, tags, and more.

Here’s an example using cURL and jq to get specific data for Google’s 🍌 Nano Banana 🍌 model:

curl -s \

-H "Authorization: Bearer $REPLICATE_API_TOKEN" \

"https://api.replicate.com/v1/search?query=nano+banana" \

| jq ".models[0] | {url: .model.url, description: .model.description, run_count: .model.run_count, tags: .metadata.tags, generated_description: .metadata.generated_description}"And here’s the response:

{

"url": "https://replicate.com/google/nano-banana",

"description": "Google's latest image editing model in Gemini 2.5",

"run_count": 5426257,

"tags": [

"text-to-image",

"image-editing",

"image-to-image"

],

"generated_description": "Generate and edit images from a text prompt and one or more reference images. Combine multiple inputs (multi-image fusion) to integrate objects into new scenes, transfer styles, and perform targeted edits—object removal, background blur, pose changes, and colorization—using natural language. Maintain character, object, and style consistency across a series for branding and storytelling. Follow complex, multi-step instructions for fast, conversational workflows. Outputs a single image with SynthID watermarking."

}Search with MCP

The new search API is already supported in our remote and local MCP servers, so you can discover and explore the best models and collections using your favorite tools like Claude Desktop, Claude Code, VS Code, Cursor, OpenAI Codex CLI, and Google’s Gemini CLI.

Our MCP server does sophisticated API response filtering to keep your LLM’s context window from getting overloaded by large response objects. It dynamically constructs a jq filter query based on each API operation’s response schema, and only returns the most relevant parts of the response. Here’s an example of the kind of filter query it uses for the search API:

{

`query`: `lip sync`,

`jq_filter`: `.models[] | {name: .model.name, owner: .model.owner, description: .model.description, run_count: .model.run_count, tags: .metadata.tags}`

}To get started using Replicate’s MCP server, read our MCP announcement blog post or head over to mcp.replicate.com.

TypeScript SDK support

The new search API is available in the latest alpha release of our TypeScript SDK as replicate.search().

To use it, install the latest alpha from npm:

npm install replicate@alphaThen use it like this:

import Replicate from "replicate"

const replicate = new Replicate()

const query = "lip sync"

const { models } = await replicate.search({ query })

for (const { model, metadata } of models) {

console.log(model.url)

console.log(metadata.tags)

console.log(metadata.generated_description)

console.log("\n\n--------------------------------\n\n")

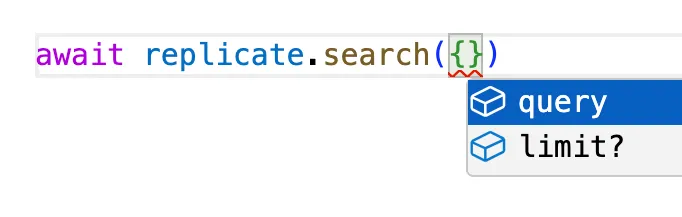

}The TypeScript SDK also includes type hints for the search API, so your editor can guide you through the writing the method signature and handling the response schema:

Python SDK support

The new search API is available in the latest alpha release of our Python SDK as replicate.search().

To use it, install the latest pre-release from PyPI:

pip install --pre replicateThen, you can use it like this:

import replicate

query = "lip sync"

results = replicate.search(query=query)

for result in results.models:

print(result.model)

print(result.metadata)

print("\n---------\n")🐍 Check out the Google Colab notebook for a Python example you can run right in your browser.

API documentation

You can find docs for the new search API on our HTTP API reference page at replicate.com/docs/reference/http#search

If you prefer structured reference documentation, you can also find the docs in our OpenAPI schema at api.replicate.com/openapi.json

Backwards compatibility

The old QUERY /v1/models search endpoint is still active and will continue to work, but we recommend using the new GET /v1/search endpoint instead for better search results.

You can still use the old search endpoint via the HTTP API directly, or with our Python or TypeScript SDKs.

The old search endpoint is now disabled in our MCP server, in favor of the new search endpoint.

Feedback

This new search API is still in beta, so we’d love to hear your feedback.

If you encounter any issues or unexpected search results, please let us know.