Generate music from chord progressions and text prompts with MusicGen-Chord

MusicGen-Chord is a model that generates music in any style based on a text prompt, a chord progression, and a tempo. It is based on Meta’s MusicGen model, where we have changed the melody input to accept chords as text or audio.

For example, here are three different generations, each with the repeating chord progression “F:maj7 G E:min A:min”:

“90s euro trance, uplifting, ibiza” in 140 bpm:

“british jangle pop, the smiths, 1980s” in 113 bpm:

“sacred chamber choir, choral”, based on a fine-tuned model:

How does it work?

MusicGen-Chord is built on top of Meta’s MusicGen-Melody model. MusicGen-Melody conditions the generation on both a text prompt and an audio file.

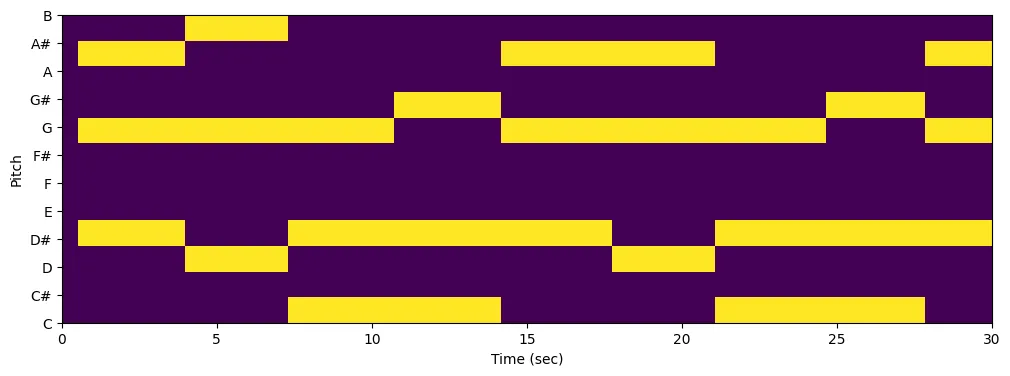

In MusicGen-Melody, the audio file is source separated to remove drums and bass, and the melody is extracted by picking the most prominent pitch in the chromagram for each time step. This results in a matrix of one-hot encoded chroma vectors (i.e. vectors where each element is 0 except a single 1).

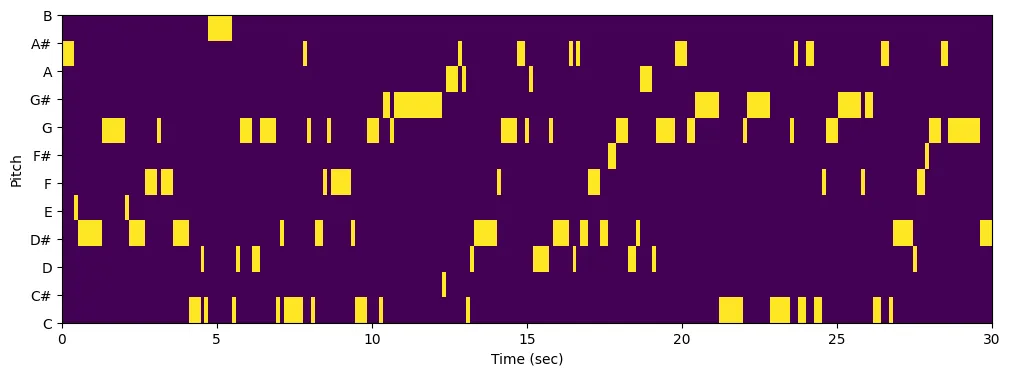
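The one-hot step described above can be illustrated with a minimal sketch. It is not MusicGen-Melody's actual code: the function name `one_hot_melody` and the toy chromagram are made up for this example, and the source separation step is skipped. It assumes the chromagram is a list of frames with 12 energy values each, ordered C, C#, D, ..., B.

```python
def one_hot_melody(chromagram):
    """Keep only the most prominent pitch class in each time step.

    chromagram: list of frames, each a list of 12 non-negative energies
    (assumed order: C, C#, D, D#, E, F, F#, G, G#, A, A#, B).
    Returns a list of frames of the same shape with a single 1 per frame.
    """
    one_hot = []
    for frame in chromagram:
        strongest = frame.index(max(frame))  # on a tie, the first maximum wins
        one_hot.append([1 if i == strongest else 0 for i in range(len(frame))])
    return one_hot


# Hypothetical toy chromagram with three time steps.
toy = [
    [0.9, 0.0, 0.1, 0.0, 0.3, 0.0, 0.0, 0.2, 0.0, 0.0, 0.0, 0.0],  # C strongest
    [0.1, 0.0, 0.0, 0.0, 0.8, 0.0, 0.0, 0.3, 0.0, 0.0, 0.0, 0.0],  # E strongest
    [0.2, 0.0, 0.0, 0.0, 0.1, 0.0, 0.0, 0.7, 0.0, 0.0, 0.0, 0.0],  # G strongest
]

for frame in one_hot_melody(toy):
    print(frame)
```

Each printed frame contains exactly one 1, at the position of the strongest pitch class.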

MusicGen-Chord repurposes the melody input to pass in “multi-hot” encoded chord vectors. At each time step, the pitches belonging to the desired chord are set to 1, and the remaining pitches are 0. For example, in the chromagram below there is an Eb chord (Eb, G, Bb) followed by a G chord (G, B, D), followed by a C minor chord (C, Eb, G), etc.
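To make the multi-hot encoding concrete, here is a small sketch that builds such vectors for the three chords mentioned above. The helper names (`multi_hot`, `chord_chromagram`), the pitch-class ordering, and the number of time steps per chord are assumptions for illustration, not MusicGen-Chord's internals.

```python
# Assumed pitch-class order for the 12 chroma bins.
PITCH_CLASSES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
# Map flat spellings onto the sharp names used above.
FLAT_TO_SHARP = {"Db": "C#", "Eb": "D#", "Gb": "F#", "Ab": "G#", "Bb": "A#"}


def multi_hot(notes):
    """Return a 12-element vector with a 1 for every pitch class in the chord."""
    vector = [0] * 12
    for note in notes:
        name = FLAT_TO_SHARP.get(note, note)
        vector[PITCH_CLASSES.index(name)] = 1
    return vector


def chord_chromagram(chords, steps_per_chord):
    """Repeat each chord's multi-hot vector for a fixed number of time steps."""
    frames = []
    for notes in chords:
        vector = multi_hot(notes)
        frames.extend(list(vector) for _ in range(steps_per_chord))
    return frames


progression = [
    ["Eb", "G", "Bb"],  # Eb major
    ["G", "B", "D"],    # G major
    ["C", "Eb", "G"],   # C minor
]

# steps_per_chord=2 is an arbitrary value chosen to keep the output short.
for frame in chord_chromagram(progression, steps_per_chord=2):
    print(frame)
```

Unlike the one-hot melody frames, each frame here contains several 1s, one per chord tone.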

As in MusicGen-Melody, MusicGen-Chord passes the chromagram as additional conditioning along with the text prompt to MusicGen. Somewhat surprisingly, this “trick” works remarkably well — MusicGen is able to render chord progressions that are consistent with the style given by the prompt.

Chord input formats

You can input chords either as text (e.g. C D:min G:7 C) or audio. If you input chords as text you also have control over tempo and time signature.

The syntax for the text_chords input is a list of chords separated by spaces. Each chord lasts for a single bar, but you can put multiple chords in a bar by separating them with commas. A chord is written as ROOT:TYPE. Valid chord types are maj, min, dim, aug, min6, maj6, min7, minmaj7, maj7, 7, dim7, hdim7, sus2, and sus4. If you omit the chord type, it defaults to major. For example, C D:min,G:7 C describes three bars in which D minor and G7 share the second bar.
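The rules above are simple enough to check before sending a request. The following is a rough sketch of a validator, assuming only the syntax described in this post; `parse_text_chords` is a hypothetical helper, not part of the model or the Replicate client, and it does not validate root note names.

```python
# Chord types listed in this post.
VALID_TYPES = {
    "maj", "min", "dim", "aug", "min6", "maj6", "min7",
    "minmaj7", "maj7", "7", "dim7", "hdim7", "sus2", "sus4",
}


def parse_text_chords(text_chords):
    """Split a text_chords string into bars, each a list of (root, type) pairs."""
    bars = []
    for bar in text_chords.split():          # spaces separate bars
        chords = []
        for token in bar.split(","):         # commas separate chords within a bar
            root, _, chord_type = token.partition(":")
            chord_type = chord_type or "maj"  # a missing type defaults to major
            if not root:
                raise ValueError(f"missing root note in {bar!r}")
            if chord_type not in VALID_TYPES:
                raise ValueError(f"unknown chord type {chord_type!r} in {token!r}")
            chords.append((root, chord_type))
        bars.append(chords)
    return bars


print(parse_text_chords("F:maj7 G E:min A:min"))
print(parse_text_chords("C D:min,G:7 C"))
```

The first call returns four bars with one chord each; the second returns three bars, with two chords in the middle bar.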

When you pass an input audio file, chords are recognized using the model from “A Bi-Directional Transformer for Musical Chord Recognition”.

Run MusicGen-Chord

You can run MusicGen-Chord using Replicate’s API. Here’s an example using the replicate Python client:

```python
import replicate

output = replicate.run(
    "sakemin/musicgen-chord:c940ab4308578237484f90f010b2b3871bf64008e95f26f4d567529ad019a3d6",
    input={
        "prompt": "deep house",
        "text_chords": "A:min A:min E:min D:min",
        "bpm": 140,
        "time_sig": "4/4",
    }
)

print(output)  # outputs a URL to the generated audio
```

And of course you can run it on the web as well, at replicate.com/sakemin/musicgen-chord.

We’d love to hear what you create with MusicGen-Chord, whether you use it to generate new music, as a backing track for vocals, for remixes, or something completely different.