Run Meta Llama 3.1 405B with an API

Llama 3.1 is the latest language model from Meta. It features a massive 405 billion parameter model that rivals GPT-4 in quality, with a context window of 8000 tokens.

With Replicate, you can run Llama 3.1 in the cloud with one line of code.

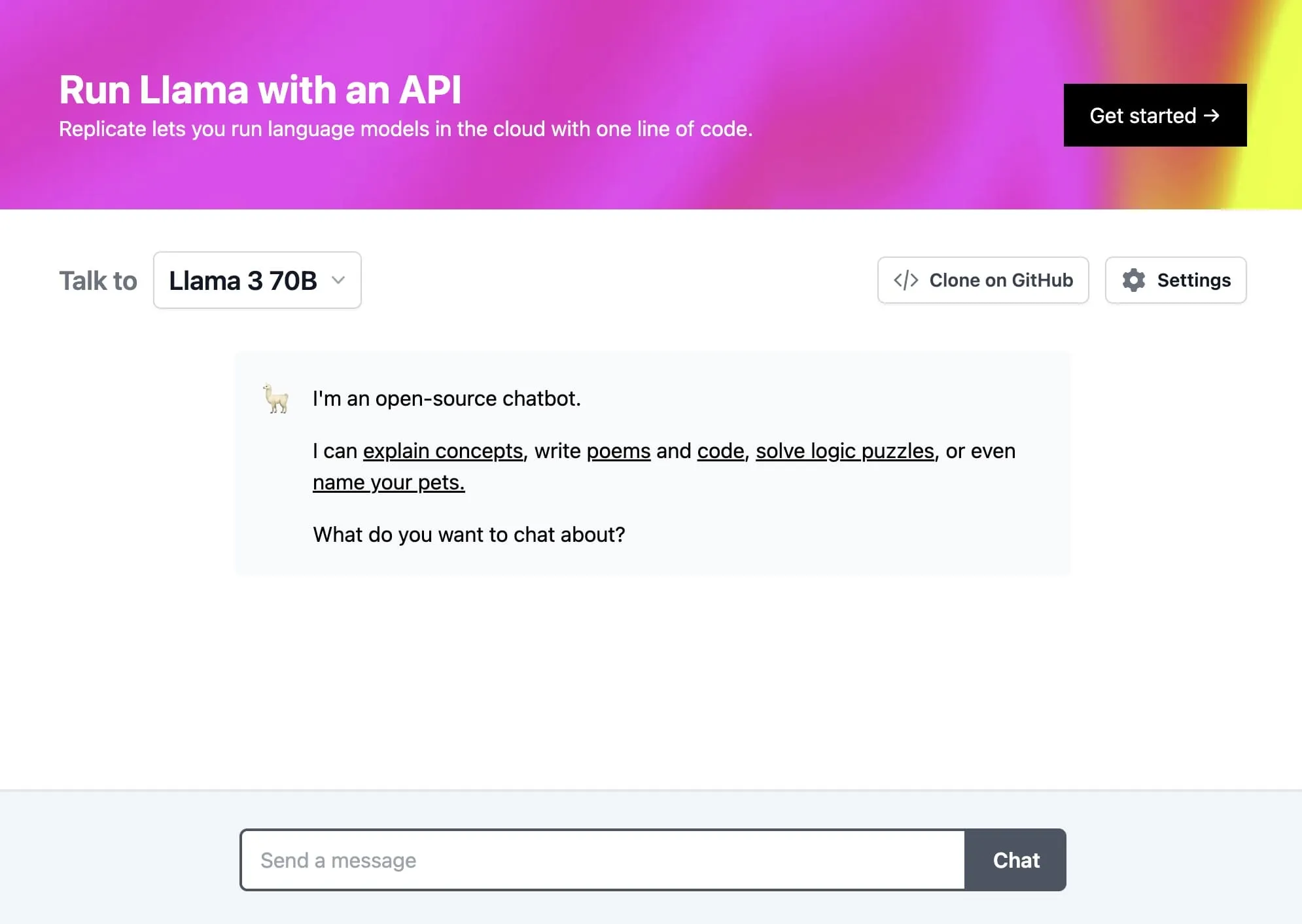

Try Llama 3.1 in our API playground

Before you dive in, try Llama 3.1 in our API playground.

Try tweaking the prompt and see how Llama 3.1 responds. Most models on Replicate have an interactive API playground like this, available on the model page: https://replicate.com/meta/meta-llama-3.1-405b-instruct

The API playground is a great way to get a feel for what a model can do, and provides copyable code snippets in a variety of languages to help you get started.

Running Llama 3.1 with JavaScript

You can run Llama 3.1 with our official JavaScript client:

Install Replicate’s Node.js client library

npm install replicate

Set the REPLICATE_API_TOKEN environment variable

export REPLICATE_API_TOKEN=r8_9wm**********************************

(You can generate an API token in your account. Keep it to yourself.)

Import and set up the client

import Replicate from "replicate";

const replicate = new Replicate({
  auth: process.env.REPLICATE_API_TOKEN,
});

Run meta/meta-llama-3.1-405b-instruct using Replicate’s API. Check out the model’s schema for an overview of inputs and outputs.

const input = {
  prompt: "Although you can hear and feel me but not see or smell me, everybody has a taste for me. I can be learned once, but only remembered after that. What exactly am I?"
};

for await (const event of replicate.stream("meta/meta-llama-3.1-405b-instruct", { input })) {
  process.stdout.write(event.toString());
}

To learn more, take a look at the guide on getting started with Node.js.

Running Llama 3.1 with Python

You can run Llama 3.1 with our official Python client:

Install Replicate’s Python client library

pip install replicate

Set the REPLICATE_API_TOKEN environment variable

export REPLICATE_API_TOKEN=r8_9wm**********************************

(You can generate an API token in your account. Keep it to yourself.)

Import the client

import replicate

Run meta/meta-llama-3.1-405b-instruct using Replicate’s API. Check out the model’s schema for an overview of inputs and outputs.

# The meta/meta-llama-3.1-405b-instruct model can stream output as it's running.
for event in replicate.stream(
    "meta/meta-llama-3.1-405b-instruct",
    input={
        "prompt": "Although you can hear and feel me but not see or smell me, everybody has a taste for me. I can be learned once, but only remembered after that. What exactly am I?"
    },
):
    print(str(event), end="")

To learn more, take a look at the guide on getting started with Python.
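If you would rather have the whole answer as one string than print it piece by piece, the streaming loop above can be wrapped in a small helper. This is a minimal sketch, not part of the Replicate client: `ask_llama`, `require_token`, and the `stream_fn` parameter are hypothetical names introduced here for illustration. The only client call it relies on is `replicate.stream`, used exactly as in the example above.

```python
import os
from typing import Callable, Iterable, Mapping, Optional

MODEL = "meta/meta-llama-3.1-405b-instruct"


def require_token(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the API token, or raise a clear error if it is not set.

    Hypothetical helper: it only inspects the REPLICATE_API_TOKEN variable.
    """
    env = os.environ if env is None else env
    token = env.get("REPLICATE_API_TOKEN", "")
    if not token:
        raise RuntimeError("Set the REPLICATE_API_TOKEN environment variable first.")
    return token


def ask_llama(prompt: str, stream_fn: Optional[Callable[..., Iterable]] = None) -> str:
    """Stream a completion and return the full text as a single string.

    stream_fn is an illustrative injection point; by default it is
    replicate.stream, imported lazily so the helper can be exercised
    with a stand-in function when the client is not installed.
    """
    if stream_fn is None:
        import replicate

        require_token()  # fail early with a readable message
        stream_fn = replicate.stream

    chunks = []
    for event in stream_fn(MODEL, input={"prompt": prompt}):
        chunks.append(str(event))  # each event stringifies to a piece of text
    return "".join(chunks)
```

With the client installed and the token exported, `print(ask_llama("What exactly am I?"))` would print the complete response once streaming has finished.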

Running Llama 3.1 with cURL

You can call the HTTP API directly with tools like cURL:

Set the REPLICATE_API_TOKEN environment variable

export REPLICATE_API_TOKEN=r8_9wm**********************************

(You can generate an API token in your account. Keep it to yourself.)

Run meta/meta-llama-3.1-405b-instruct using Replicate’s API. Check out the model’s schema for an overview of inputs and outputs.

curl -s -X POST \
  -H "Authorization: Bearer $REPLICATE_API_TOKEN" \
  -H "Content-Type: application/json" \
  -H "Prefer: wait" \
  -d $'{
    "input": {
      "prompt": "Although you can hear and feel me but not see or smell me, everybody has a taste for me. I can be learned once, but only remembered after that. What exactly am I?"
    }
  }' \
  https://api.replicate.com/v1/models/meta/meta-llama-3.1-405b-instruct/predictions

To learn more, take a look at Replicate’s HTTP API reference docs.
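The same HTTP request can be sketched without any client library, using only Python's standard library. The URL, headers, and JSON body below mirror the cURL command above. The function names `build_prediction_request` and `send` are hypothetical, and the sketch assumes the response body is JSON; check the HTTP API reference for the exact response fields.

```python
import json
import urllib.request

API_URL = "https://api.replicate.com/v1/models/meta/meta-llama-3.1-405b-instruct/predictions"


def build_prediction_request(prompt: str, token: str) -> urllib.request.Request:
    """Build the POST request that the cURL example sends."""
    body = json.dumps({"input": {"prompt": prompt}}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
            "Prefer": "wait",  # same header as the cURL example
        },
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the response body (assumed to be JSON)."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

A call would look like `send(build_prediction_request("What exactly am I?", os.environ["REPLICATE_API_TOKEN"]))`, after `import os`. Building the request separately from sending it makes it easy to inspect the headers and body before anything goes over the network.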

You can also run Llama using other Replicate client libraries for Go, Swift, and others.

About Llama 3.1 405B

Llama 3.1 405B is currently the only variant available on Replicate. This model represents the cutting edge of open-source language models:

- 405 billion parameters: This massive model size allows for unprecedented capabilities in an open-source model.

- Instruction-tuned: Optimized for chat and instruction-following tasks.

- GPT-4 level quality: In many benchmarks, Llama 3.1 405B approaches or matches the performance of GPT-4.

- Multilingual support: Covers 8 languages (English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai).

- Extensive training: Trained on over 15 trillion tokens of data.

Responsible AI and Safety

Llama 3.1 comes with a strong focus on responsible AI development. Meta has introduced several tools and resources to help developers use the model safely and ethically:

- Purple Llama: An open-source project that includes safety tools and evaluations for generative AI models.

- Llama Guard 3: An updated input/output safety model.

- Code Shield: A tool to help prevent unsafe code generation.

- Responsible Use Guide: Guidelines for ethical use of the model.

We recommend reviewing these resources when building applications with Llama 3.1. For more information, check out the Purple Llama GitHub repository.

Example chat app

If you want a place to start, we’ve built a demo chat app in Next.js that can be deployed on Vercel.

Try it out on llama3.replicate.dev. Take a look at the GitHub README to learn how to customize and deploy it.

Happy hacking! 🦙