Run Meta Llama 3 with an API

Llama 3 is the latest language model from Meta. It has state-of-the-art performance and a context window of 8,000 tokens, double that of Llama 2.

With Replicate, you can run Llama 3 in the cloud with one line of code.

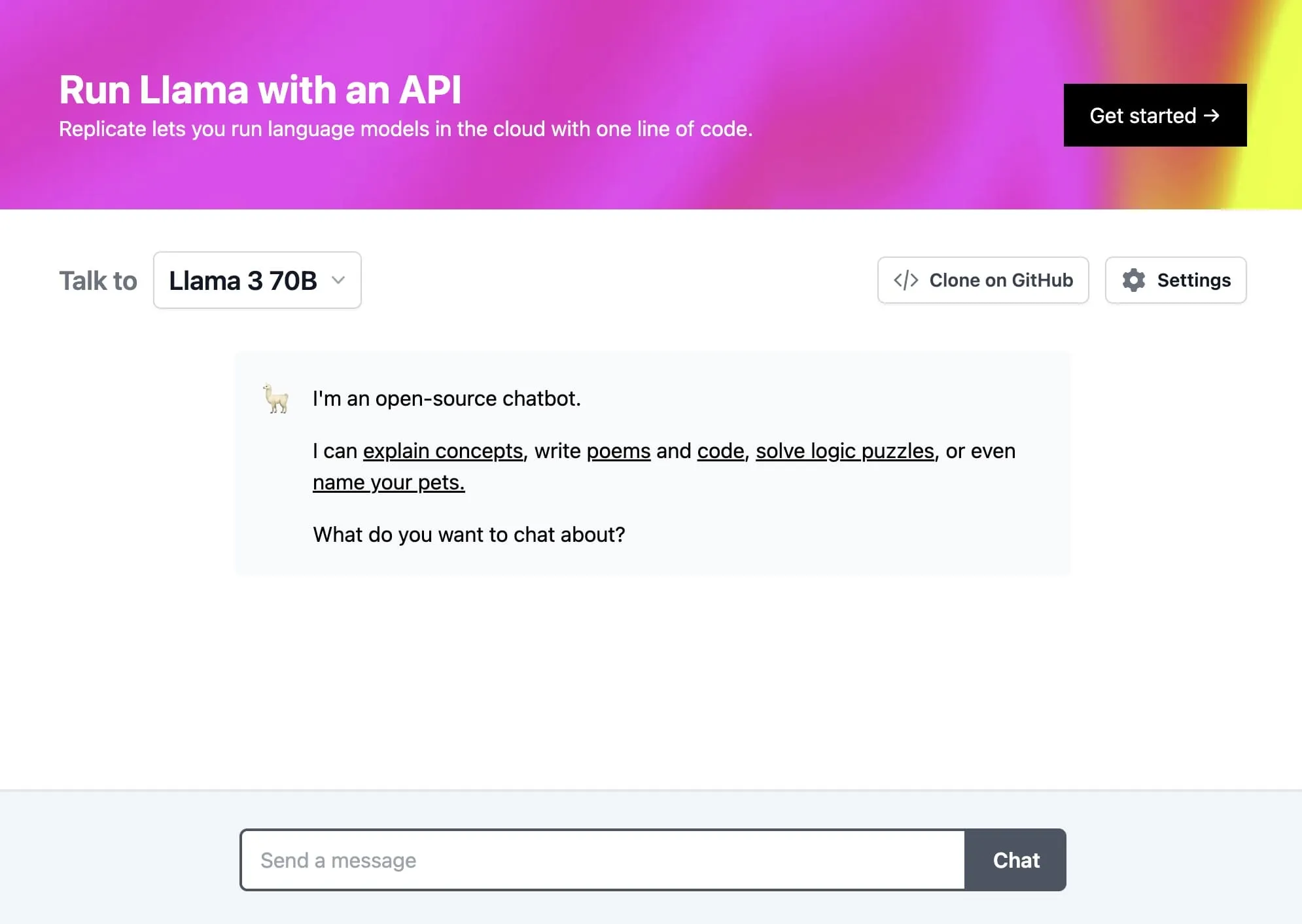

Try Llama 3 in our API playground

Before you dive in, try Llama 3 in our API playground.

Try tweaking the prompt and see how Llama 3 responds. Most models on Replicate have an interactive API playground like this, available on the model page: https://replicate.com/meta/meta-llama-3-70b-instruct

The API playground is a great way to get a feel for what a model can do, and provides copyable code snippets in a variety of languages to help you get started.

Running Llama 3 with JavaScript

You can run Llama 3 with our official JavaScript client:

Install Replicate’s Node.js client library

    npm install replicate

Set the REPLICATE_API_TOKEN environment variable

    export REPLICATE_API_TOKEN=r8_9wm**********************************

(You can generate an API token in your account. Keep it to yourself.)

Import and set up the client

    import Replicate from "replicate";

    const replicate = new Replicate({
      auth: process.env.REPLICATE_API_TOKEN,
    });

Run meta/meta-llama-3-70b-instruct using Replicate’s API. Check out the model’s schema for an overview of inputs and outputs.

    const input = {
      prompt: "Can you write a poem about open source machine learning?"
    };

    for await (const event of replicate.stream("meta/meta-llama-3-70b-instruct", { input })) {
      process.stdout.write(event.toString());
    }

To learn more, take a look at the guide on getting started with Node.js.

Running Llama 3 with Python

You can run Llama 3 with our official Python client:

Install Replicate’s Python client library

    pip install replicate

Set the REPLICATE_API_TOKEN environment variable

    export REPLICATE_API_TOKEN=r8_9wm**********************************

(You can generate an API token in your account. Keep it to yourself.)

Import the client

    import replicate

Run meta/meta-llama-3-70b-instruct using Replicate’s API. Check out the model’s schema for an overview of inputs and outputs.

    # The meta/meta-llama-3-70b-instruct model can stream output as it's running.
    for event in replicate.stream(
        "meta/meta-llama-3-70b-instruct",
        input={
            "prompt": "Can you write a poem about open source machine learning?"
        },
    ):
        print(str(event), end="")

To learn more, take a look at the guide on getting started with Python.
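If you would rather collect the streamed text into a single string than print it as it arrives, a minimal sketch might look like this. It relies only on the `replicate.stream` call shown above. The helper names `require_token` and `generate`, and the `stream_fn` parameter (there so the helper can be exercised without a network call), are assumptions made for illustration and are not part of Replicate's client.

```python
import os

MODEL = "meta/meta-llama-3-70b-instruct"


def require_token(env=None):
    """Return REPLICATE_API_TOKEN, or raise a clear error if it is unset."""
    env = os.environ if env is None else env
    token = env.get("REPLICATE_API_TOKEN")
    if not token:
        raise RuntimeError("Set the REPLICATE_API_TOKEN environment variable first.")
    return token


def generate(prompt, stream_fn=None):
    """Stream a completion and return it as one string.

    stream_fn is a hypothetical hook; when omitted, replicate.stream is used.
    """
    if stream_fn is None:
        require_token()  # fail early with a readable message
        import replicate  # imported lazily so the helper can be tried offline
        stream_fn = replicate.stream
    # Convert each streamed event to text, as in the example above, and join.
    return "".join(str(event) for event in stream_fn(MODEL, input={"prompt": prompt}))


# Usage (makes a real API call):
# print(generate("Can you write a poem about open source machine learning?"))
```

Checking for the token up front is a design choice: it turns a missing environment variable into an immediate, readable error rather than a failure partway through the request.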

Running Llama 3 with cURL

You can call the HTTP API directly with tools like cURL:

Set the REPLICATE_API_TOKEN environment variable

    export REPLICATE_API_TOKEN=r8_9wm**********************************

(You can generate an API token in your account. Keep it to yourself.)

Run meta/meta-llama-3-70b-instruct using Replicate’s API. Check out the model’s schema for an overview of inputs and outputs.

    curl -s -X POST \
      -H "Authorization: Bearer $REPLICATE_API_TOKEN" \
      -H "Content-Type: application/json" \
      -H "Prefer: wait" \
      -d $'{
        "input": {
          "prompt": "Can you write a poem about open source machine learning?"
        }
      }' \
      https://api.replicate.com/v1/models/meta/meta-llama-3-70b-instruct/predictions

To learn more, take a look at Replicate’s HTTP API reference docs.

You can also run Llama using other Replicate client libraries for Go, Swift, and others.
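If you want to call the HTTP API without a client library or cURL, the same request can be sketched with Python's standard library. The URL, headers, and JSON body are copied from the cURL command above. The function names `build_request` and `send` are hypothetical, and the sketch makes no assumption about what the response contains beyond it being JSON; see the HTTP API reference docs for that.

```python
import json
import urllib.request

API_URL = "https://api.replicate.com/v1/models/meta/meta-llama-3-70b-instruct/predictions"


def build_request(prompt, token):
    """Build (but do not send) the same POST request as the cURL command."""
    body = json.dumps({"input": {"prompt": prompt}}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
            "Prefer": "wait",  # same header as in the cURL example
        },
    )


def send(request):
    """Send the request and return the decoded JSON response (network call)."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Usage (makes a real API call):
# import os
# result = send(build_request("Hello!", os.environ["REPLICATE_API_TOKEN"]))
```

Separating request construction from sending keeps the part that needs a network connection small, so the headers and body can be inspected before anything is sent.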

Choosing which model to use

There are four Llama 3 variants on Replicate, each with its own strengths. Llama 3 comes in two parameter sizes, 70 billion and 8 billion, and each size is available as a base model and a chat-tuned model.

- meta/meta-llama-3-70b-instruct: 70 billion parameter model fine-tuned on chat completions. If you want to build a chat bot with the best accuracy, this is the one to use.

- meta/meta-llama-3-8b-instruct: 8 billion parameter model fine-tuned on chat completions. Use this if you’re building a chat bot and would prefer it to be faster and cheaper at the expense of accuracy.

- meta/meta-llama-3-70b: 70 billion parameter base model. This is the 70 billion parameter model before the instruction tuning on chat completions.

- meta/meta-llama-3-8b: 8 billion parameter base model. This is the 8 billion parameter model before the instruction tuning on chat completions.
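The choice among the four variants comes down to two questions: do you need a chat-tuned model, and do you prefer speed and cost over accuracy? As a small sketch, that decision can be written as a function. The name `choose_model` and its parameters are hypothetical; only the model IDs come from the list above.

```python
def choose_model(chat=True, prefer_speed=False):
    """Pick one of the four Llama 3 model IDs listed above.

    chat: True for the instruction-tuned (chat) models, False for base models.
    prefer_speed: True to trade accuracy for a faster, cheaper 8B model.
    """
    size = "8b" if prefer_speed else "70b"
    suffix = "-instruct" if chat else ""
    return f"meta/meta-llama-3-{size}{suffix}"


# A chat bot where accuracy matters most:
model_id = choose_model(chat=True, prefer_speed=False)
```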

Example chat app

If you want a place to start, we’ve built a demo chat app in Next.js that can be deployed on Vercel.

Try it out on llama3.replicate.dev. Take a look at the GitHub README to learn how to customize and deploy it.


Happy hacking! 🦙