Hard Prompts Made Easy: Discrete Prompt Tuning for Language Models

This repository contains the official implementation of Hard Prompts Made Easy.

If you have any questions, feel free to email Yuxin (ywen@umd.edu).

About

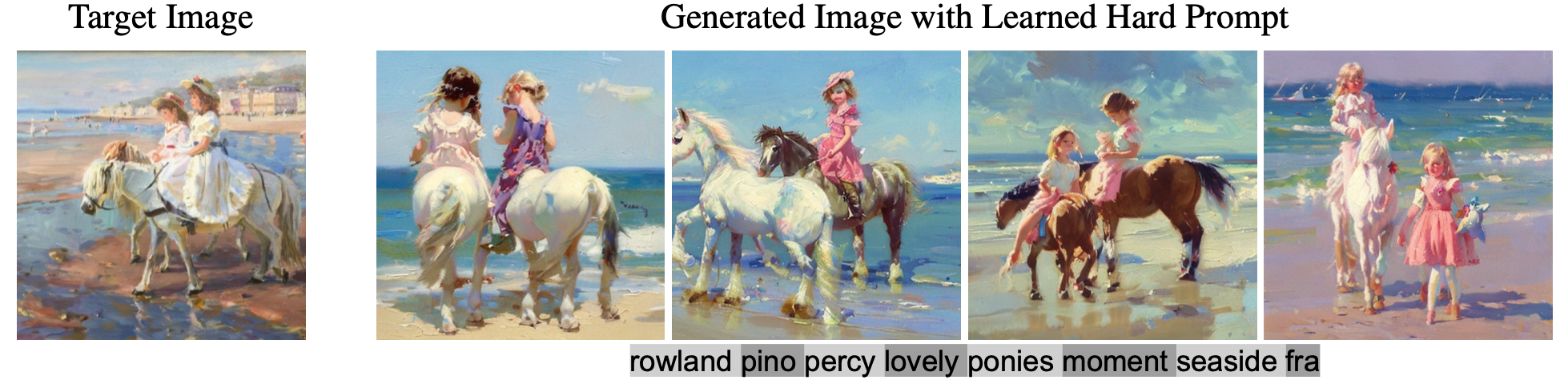

Given an image, we first optimize a hard prompt using the PEZ algorithm and CLIP encoders. We then feed the optimized prompt into Stable Diffusion to generate new images. The name PEZ (hard Prompts made EaZy) was inspired by the PEZ candy dispenser.
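To give a rough feel for the optimization loop, here is a minimal toy sketch of a PEZ-style update in pure Python. It is not this repository's code. It assumes the scheme as we understand it: keep a continuous "soft" prompt, project it onto the nearest vocabulary embeddings, take the gradient at the projected (hard) prompt, and apply that gradient to the soft prompt. Everything else is a stand-in chosen for illustration: `pez_sketch`, `nearest_token`, and `loss_and_grad` are hypothetical names, the "encoder" is just the mean of the token embeddings rather than CLIP, the projection uses Euclidean distance, and the optimizer is plain gradient descent.

```python
import random

def nearest_token(vec, vocab):
    """Index of the vocabulary embedding closest to vec (squared Euclidean distance)."""
    return min(range(len(vocab)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(vec, vocab[i])))

def loss_and_grad(rows, target):
    """Toy loss: squared distance between the mean of rows and target.

    Stand-in for an image-text similarity loss (assumption, not CLIP).
    Returns the loss and its gradient w.r.t. one row (identical for every row).
    """
    n, dim = len(rows), len(target)
    mean = [sum(r[d] for r in rows) / n for d in range(dim)]
    diff = [m - t for m, t in zip(mean, target)]
    loss = sum(x * x for x in diff)
    grad_row = [2.0 * x / n for x in diff]
    return loss, grad_row

def pez_sketch(vocab, target, prompt_len=4, steps=200, lr=0.5, seed=0):
    """Return (token_ids, loss) of the best hard prompt seen during optimization."""
    rng = random.Random(seed)
    # Continuous "soft" prompt, initialized from random vocabulary entries.
    soft = [list(vocab[rng.randrange(len(vocab))]) for _ in range(prompt_len)]
    best_ids, best_loss = None, float("inf")
    for _ in range(steps):
        # Project each soft embedding onto its nearest vocabulary entry.
        ids = [nearest_token(row, vocab) for row in soft]
        hard = [vocab[i] for i in ids]
        # Evaluate loss and gradient at the projected (hard) prompt.
        loss, grad_row = loss_and_grad(hard, target)
        if loss < best_loss:
            best_ids, best_loss = ids, loss
        # Apply that gradient to the continuous prompt, not the projected one.
        soft = [[p - lr * g for p, g in zip(row, grad_row)] for row in soft]
    return best_ids, best_loss

if __name__ == "__main__":
    rng = random.Random(1)
    vocab = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(20)]
    target = [0.2, -0.1, 0.3]  # stand-in for an image feature vector
    ids, loss = pez_sketch(vocab, target, prompt_len=3, steps=50)
    print("token ids:", ids, "loss:", loss)
```

The returned token ids always index real vocabulary entries, which is what makes the result a hard prompt that can be decoded to text and passed to a text-to-image model. Tracking the best hard prompt seen so far is a design choice in this sketch, since the loss at the projected point is not guaranteed to decrease at every step.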