Readme

Model card: https://huggingface.co/kandinsky-community/kandinsky-2-2-controlnet-depth

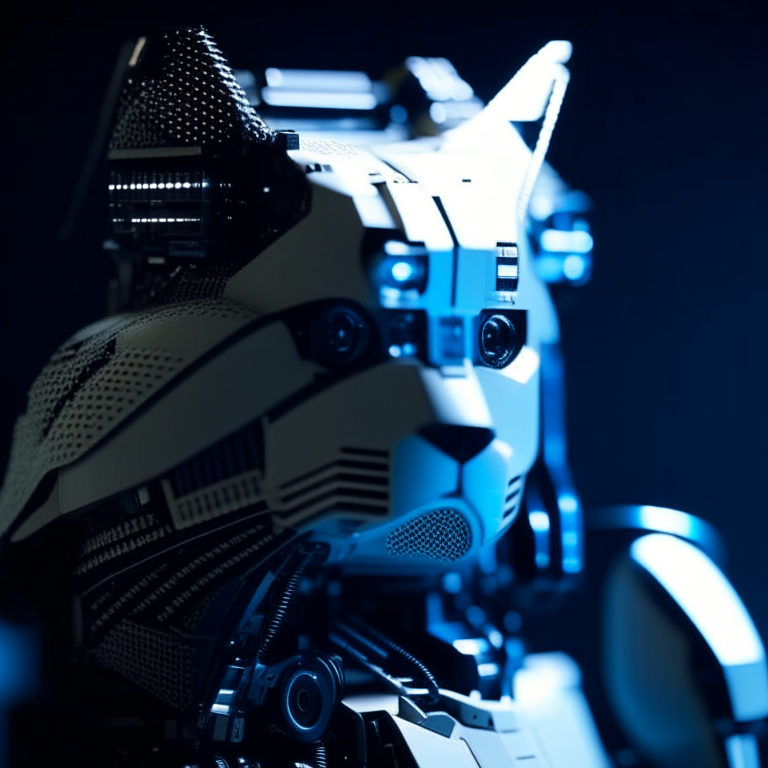

The img2img ControlNet pipeline preserves considerably more detail from the original image than the text2img ControlNet pipeline does.

The height and width of the output should be set close to those of the input image.
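A minimal sketch of how the output size could be derived from the input image. It assumes three things. First, the dimensions are snapped to multiples of 64, on the understanding that the diffusers Kandinsky 2.2 pipelines effectively round sizes up to that granularity. Second, the long side is capped at 768. Third, the aspect ratio is preserved. The helper name `match_output_size` and the defaults `max_side=768` and `multiple=64` are hypothetical choices, not part of the model card.

```python
from typing import Tuple


def match_output_size(width: int, height: int,
                      max_side: int = 768, multiple: int = 64) -> Tuple[int, int]:
    """Hypothetical helper: pick an output (width, height) close to the input image.

    Keeps the input aspect ratio, scales down (never up) so the long side is at
    most `max_side`, then rounds each side to the nearest multiple of `multiple`
    (never below `multiple` itself).
    """
    # Only shrink large images; small images keep their original scale.
    scale = min(1.0, max_side / max(width, height))

    def snap(x: float) -> int:
        # Round to the nearest multiple, with a floor of one multiple.
        return max(multiple, int(round(x / multiple)) * multiple)

    return snap(width * scale), snap(height * scale)


if __name__ == "__main__":
    print(match_output_size(1024, 683))  # a 3:2 landscape photo
    print(match_output_size(500, 375))   # a small 4:3 image
```

The resulting values would then be passed as `width=` and `height=` to the ControlNet img2img pipeline call. Presumably the input image and the depth hint should be resized to the same dimensions beforehand, so that all three agree.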