Run Stable Diffusion 3 on your Apple Silicon Mac

Stable Diffusion 3 (SD3) is the newest version of the open-source AI model that turns text into images. You can run it locally on your Apple Silicon Mac and start making stunning pictures in minutes.

Prerequisites

- A Mac with an M-series Apple Silicon chip

- Git
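If you want to confirm the prerequisites before cloning anything, a small check like the one below can help. It is only a sketch: the function names are made up for illustration, and it assumes Python is running natively (a Python running under Rosetta reports `x86_64` even on an M-series chip).

```python
import platform
import shutil


def is_apple_silicon() -> bool:
    """Return True when running natively on an Apple Silicon Mac."""
    # macOS reports "Darwin"; a native Apple Silicon Python reports "arm64".
    return platform.system() == "Darwin" and platform.machine() == "arm64"


def missing_tools(tools=("git", "python3")) -> list:
    """Return the names of required command-line tools not found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    print("Apple Silicon:", is_apple_silicon())
    print("Missing tools:", missing_tools() or "none")
```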

Clone the repository and set up the environment

Run this to clone the SD3 code repository:

git clone https://github.com/zsxkib/sd3-on-apple-silicon.git

cd sd3-on-apple-silicon

Then, create a new virtual environment with the packages SD3 needs:

python3 -m venv sd3-env

source sd3-env/bin/activate

pip install -r requirements.txt

Run it!

Now, you can generate your first SD3 image:

python sd3-on-mps.py

The first run will download the SD3 model and weights, which are around 15.54 GB. Subsequent runs will use the downloaded files.
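Since the first run pulls down roughly 15.54 GB, it can be worth checking free disk space beforehand. The sketch below uses only the standard library. The `path` argument and the 2 GB safety margin are assumptions for illustration; the weights may land in a cache directory on a different volume than your working directory.

```python
import shutil

MODEL_SIZE_GB = 15.54  # download size quoted in this guide


def has_room_for_download(path=".", required_gb=MODEL_SIZE_GB, margin_gb=2.0) -> bool:
    """Return True if the volume holding `path` has enough free space."""
    # Treats 1 GB as 10**9 bytes; the guide does not say which unit it uses.
    free_gb = shutil.disk_usage(path).free / 1e9
    return free_gb >= required_gb + margin_gb


if __name__ == "__main__":
    print("Enough free space:", has_room_for_download())
```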

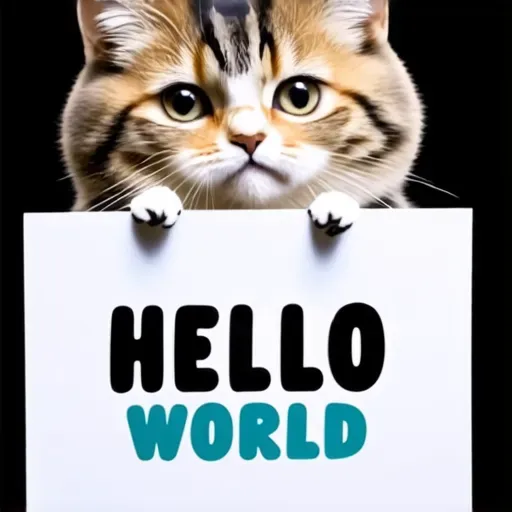

In under a minute, you’ll have a new image called sd3-output-mps.png in your directory. It’ll look something like this:

That’s it!

Customize your images

You can tweak various settings in sd3-on-mps.py to generate different images:

- seed: Set a specific number to recreate the same image, or leave it as None for unique images each time.

- prompt: Modify this text to generate different images (e.g., “A sunset over the mountains”).

- height and width: Adjust these values to change the image size (e.g., 1024 for larger images).

- num_inference_steps: Increase this for more detailed images. More steps mean better quality but slower generation.

- guidance_scale: Change this to control how closely the image matches the prompt. Higher values make the image more accurate but may look less natural.

Play around with these options to create a variety of cool images!
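To make the settings above concrete, here is a rough sketch of how they might map onto a Hugging Face `diffusers` call on the MPS backend. It is not the contents of sd3-on-mps.py: the `Settings` class, the helper names, the default values, and the model ID are all assumptions for illustration, so check the script in the repository for what it actually does.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Settings:
    # Illustrative defaults, not necessarily the ones in sd3-on-mps.py.
    prompt: str = "A sunset over the mountains"
    seed: Optional[int] = None  # None -> a different image on every run
    height: int = 512
    width: int = 512
    num_inference_steps: int = 28
    guidance_scale: float = 7.0


def pipeline_kwargs(s: Settings) -> dict:
    """Keyword arguments for the pipeline call (the seed is handled separately)."""
    return {
        "prompt": s.prompt,
        "height": s.height,
        "width": s.width,
        "num_inference_steps": s.num_inference_steps,
        "guidance_scale": s.guidance_scale,
    }


def generate(s: Settings, out_path: str = "sd3-output-mps.png") -> None:
    # Heavy imports live here so the settings code works without them installed.
    import torch
    from diffusers import StableDiffusion3Pipeline

    # Assumed model ID; the repository's script may load a different one.
    pipe = StableDiffusion3Pipeline.from_pretrained(
        "stabilityai/stable-diffusion-3-medium-diffusers",
        torch_dtype=torch.float16,
    ).to("mps")

    # A seeded generator makes the output repeatable; None leaves it random.
    generator = None if s.seed is None else torch.Generator().manual_seed(s.seed)
    image = pipe(**pipeline_kwargs(s), generator=generator).images[0]
    image.save(out_path)
```

Calling `generate(Settings(seed=42, height=1024, width=1024))` would then request a larger, repeatable image, at the cost of more memory and time.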

Another option: DiffusionKit

DiffusionKit is a CLI tool that lets you run SD3 using Python MLX. It’s another way to run SD3, like we did above, but without cloning the SD3 repository and setting up the environment manually. Here’s how to get it working:

1. Set up a new virtual environment and install DiffusionKit:

python3 -m venv diffusionkit-env

source diffusionkit-env/bin/activate

cd /path/to/diffusionkit/repo

pip install -e .

2. Log in to Hugging Face Hub with your READ token:

huggingface-cli login --token YOUR_HF_HUB_TOKEN

3. Run DiffusionKit with your desired settings:

diffusionkit-cli \
  --prompt "A cat holding a sign that says hello world" \
  --height 512 \
  --width 512 \
  --steps 28 \
  --cfg 7.0 \
  --output-path "/path/to/output/sd3-output-diffusionkit.png" \
  --a16 \
  --w16

Here’s what some of these flags do:

- --a16: Uses less memory by running the model in float16.

- --w16: Saves more memory by loading models in float16.

- --t5: Improves text understanding and allows longer prompts (>77 tokens), but uses more memory.

Check out the DiffusionKit repo for more details on all the available options.
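If you plan to run DiffusionKit repeatedly with different settings, you could assemble the command in Python instead of retyping it. The helper below is a hypothetical convenience wrapper, not part of DiffusionKit. It uses only the flags shown in this guide, and the default values simply mirror the example command.

```python
import shlex


def diffusionkit_command(prompt, output_path, height=512, width=512,
                         steps=28, cfg=7.0, a16=True, w16=True, t5=False):
    """Build the diffusionkit-cli argument list from the flags shown in this guide."""
    args = [
        "diffusionkit-cli",
        "--prompt", prompt,
        "--height", str(height),
        "--width", str(width),
        "--steps", str(steps),
        "--cfg", str(cfg),
        "--output-path", output_path,
    ]
    # Boolean switches are appended only when enabled.
    if a16:
        args.append("--a16")
    if w16:
        args.append("--w16")
    if t5:
        args.append("--t5")
    return args


cmd = diffusionkit_command(
    "A cat holding a sign that says hello world",
    "sd3-output-diffusionkit.png",
)
# shlex.join quotes the prompt so the line can be pasted into a shell.
print(shlex.join(cmd))
```

To run the command directly, pass the list to `subprocess.run(cmd, check=True)` from inside the activated virtual environment.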

Speed comparison

Here’s how long it takes on average to generate a 512x512 image with 28 steps on my M3 Max MacBook Pro with 128GB RAM:

| Method | Average Time |
|---|---|
| DiffusionKit (Python MLX) | ~16.73s |
| Torch MPS Backend | ~14.14s |

And here are some timings with different DiffusionKit settings:

| Settings | Time |
|---|---|
| Standard | 18.091s |
| --a16 | 18.078s |
| --a16 --t5 | 20.600s |
| --a16 --w16 | 15.997s |
| --a16 --w16 --t5 | 19.040s |

Using --a16 and --w16 together can save memory and make generation faster, while --t5 allows longer prompts but uses more memory and slows down generation.
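To put the DiffusionKit timings in relative terms, the short calculation below compares each configuration against the Standard run. The numbers are copied from the table above. Treat the percentages as rough, since the table does not say how many runs each timing covers.

```python
# DiffusionKit timings in seconds, copied from the table above.
timings = {
    "Standard": 18.091,
    "--a16": 18.078,
    "--a16 --t5": 20.600,
    "--a16 --w16": 15.997,
    "--a16 --w16 --t5": 19.040,
}


def percent_change(seconds, baseline):
    """Signed change relative to the baseline; negative means faster."""
    return (seconds - baseline) / baseline * 100.0


baseline = timings["Standard"]
changes = {name: round(percent_change(t, baseline), 1) for name, t in timings.items()}

for name, change in changes.items():
    print(f"{name:<18} {change:+.1f}%")
```

On these numbers, --a16 --w16 comes out about 11.6% faster than Standard, while adding --t5 to --a16 makes generation about 13.9% slower.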

Next steps

Stable Diffusion 3 (SD3)

- Run SD3 with an API in the cloud

- Push a custom version of Stable Diffusion 3

- Run Stable Diffusion 3 on your own machine with ComfyUI

- Run Stable Diffusion 3 with an API

Stable Diffusion XL (SDXL)

Community and Social

- Hop in our Discord and show us what you made!

- Follow us on X

- Or follow me on Twitter @zsakib_

Happy generating!