How to prompt Nano Banana Pro


…so Nano Banana Pro was released yesterday, as we’re sure you are aware.

The AI community has already created an insane number of generations with this model. Yes, it can handle the basics of any image model: style transfer, object removal, text rendering, realistic images. But these are the least shocking of its capabilities.

In this post, we really wanted to highlight some of the crazy images the AI community has been able to extract from Nano Banana Pro. Strap in.

Logic

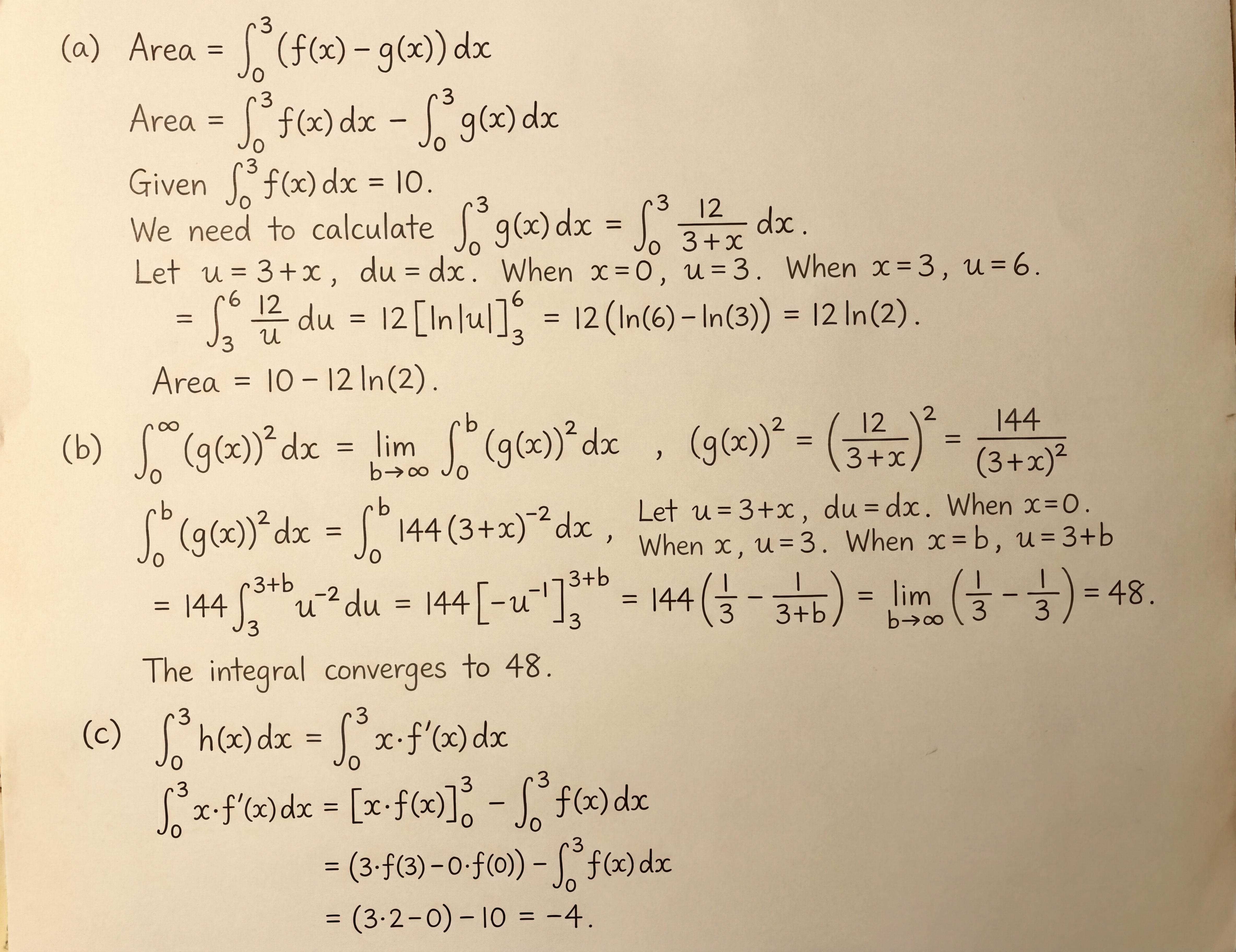

One of the most impressive facets of Nano Banana Pro is its baked-in logic. Typically, image models are good at constructing new photos from the spatial information found in the input image. Until now, however, no image model has reliably been able to read, interpret, and answer text found in an input image. The closest might have been GPT-image-1, which came out earlier this year, thanks to its autoregressive architecture. With Nano Banana Pro, there appear to be intermediary prompting layers that help the model draw logical conclusions. These layers seem to act as a reasoning bridge between the input image and the final output, allowing the model not just to see text in an image but to understand and respond to it in context.

(Side note: someone might have discovered how to uncover said system prompt.)

Very clever trick by @minimaxir to get Nano Banana to reveal its system prompt by drawing it as refrigerator magnets. pic.twitter.com/dRIVqfkUXh

— Gene Kogan (@genekogan) November 20, 2025

For instance, you can feed Nano Banana your homework and get correct answers with work shown.

write the answers to the questions in pencil. show your work

We loved seeing creators take long pieces of information, like papers or websites, and create summary images from them.

Nano Banana Pro is wild.

— Pietro Schirano (@skirano) November 20, 2025

Here’s my favorite use case so far: take papers or really long articles and turn them into a detailed whiteboard photo.

It’s basically the greatest compression algorithm in human history. pic.twitter.com/9TEa5xnZzW

Nano Banana Pro just took in the entire Nvidia Q3 earnings PDF and generated this beautiful infographic.

— Deedy (@deedydas) November 20, 2025

This is the world's best compression engine. pic.twitter.com/2OTf0bitKD

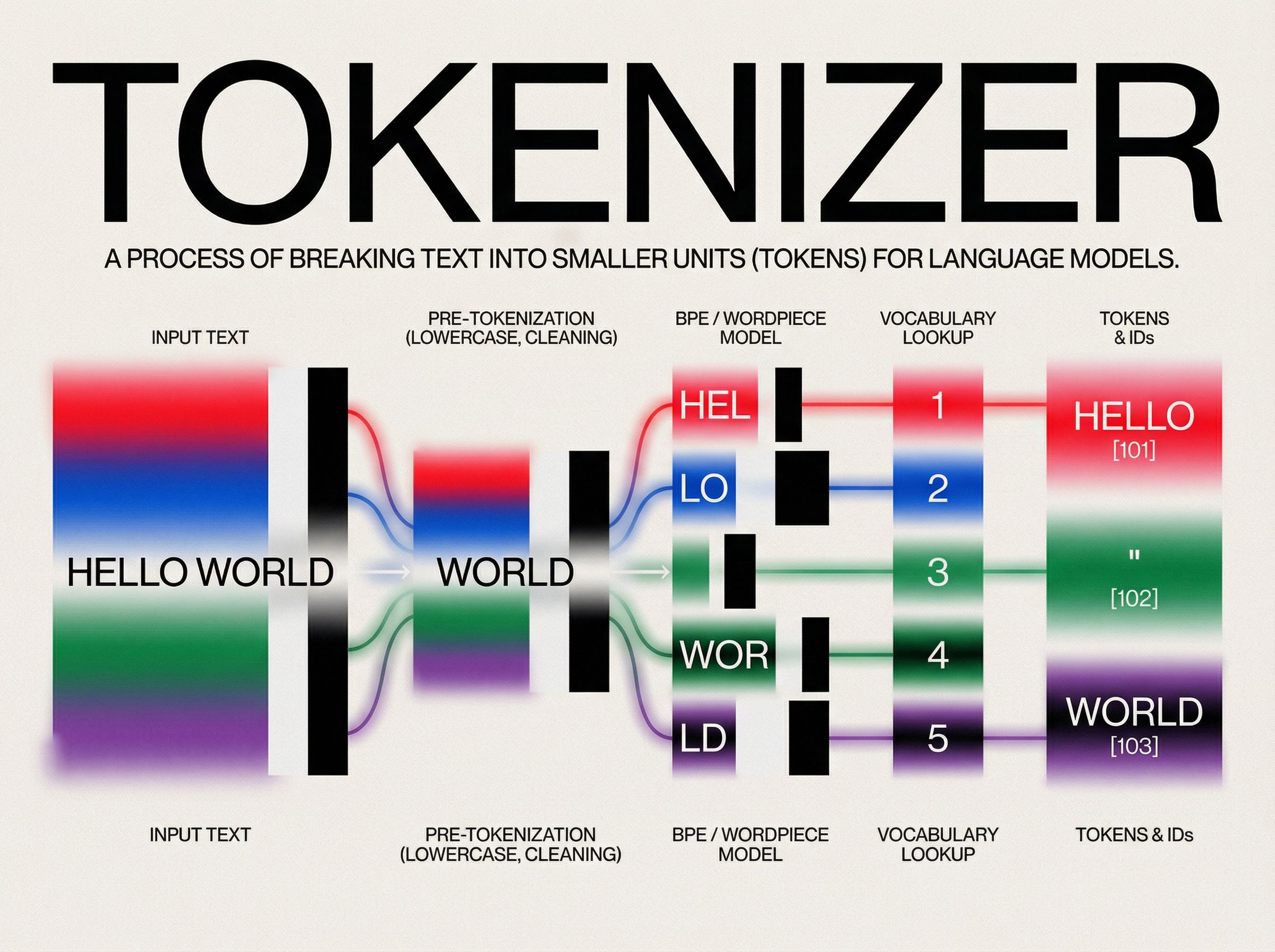

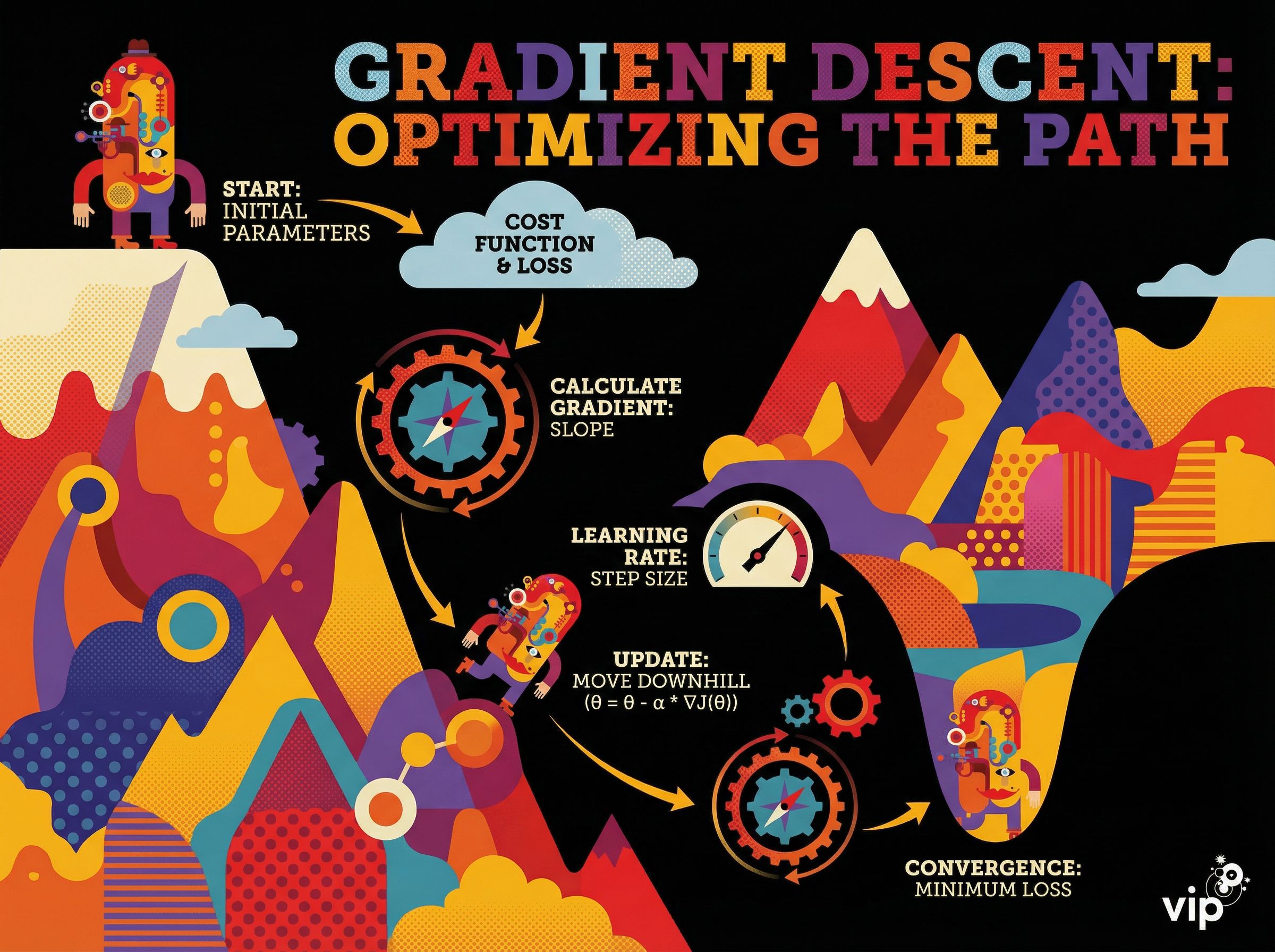

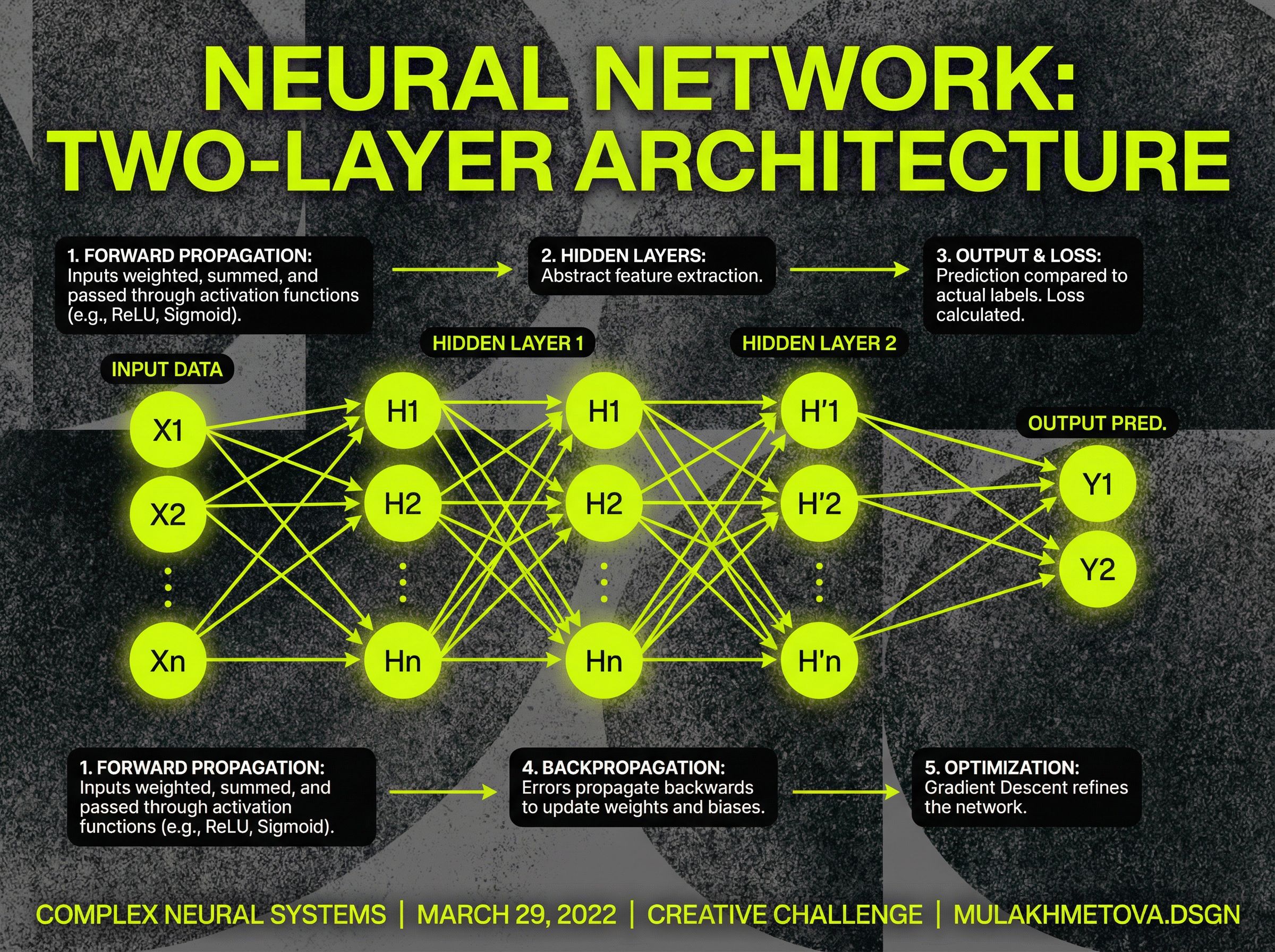

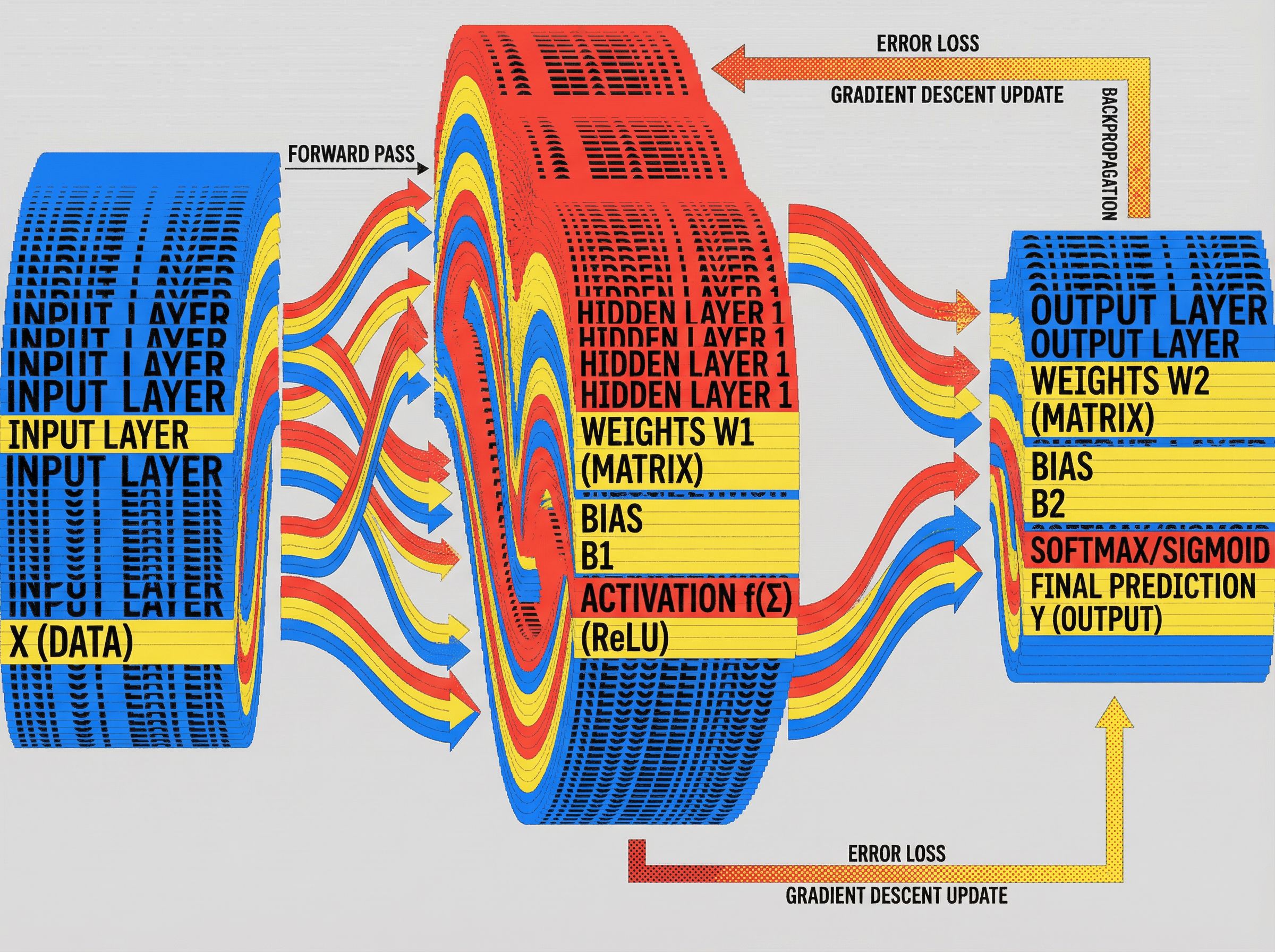

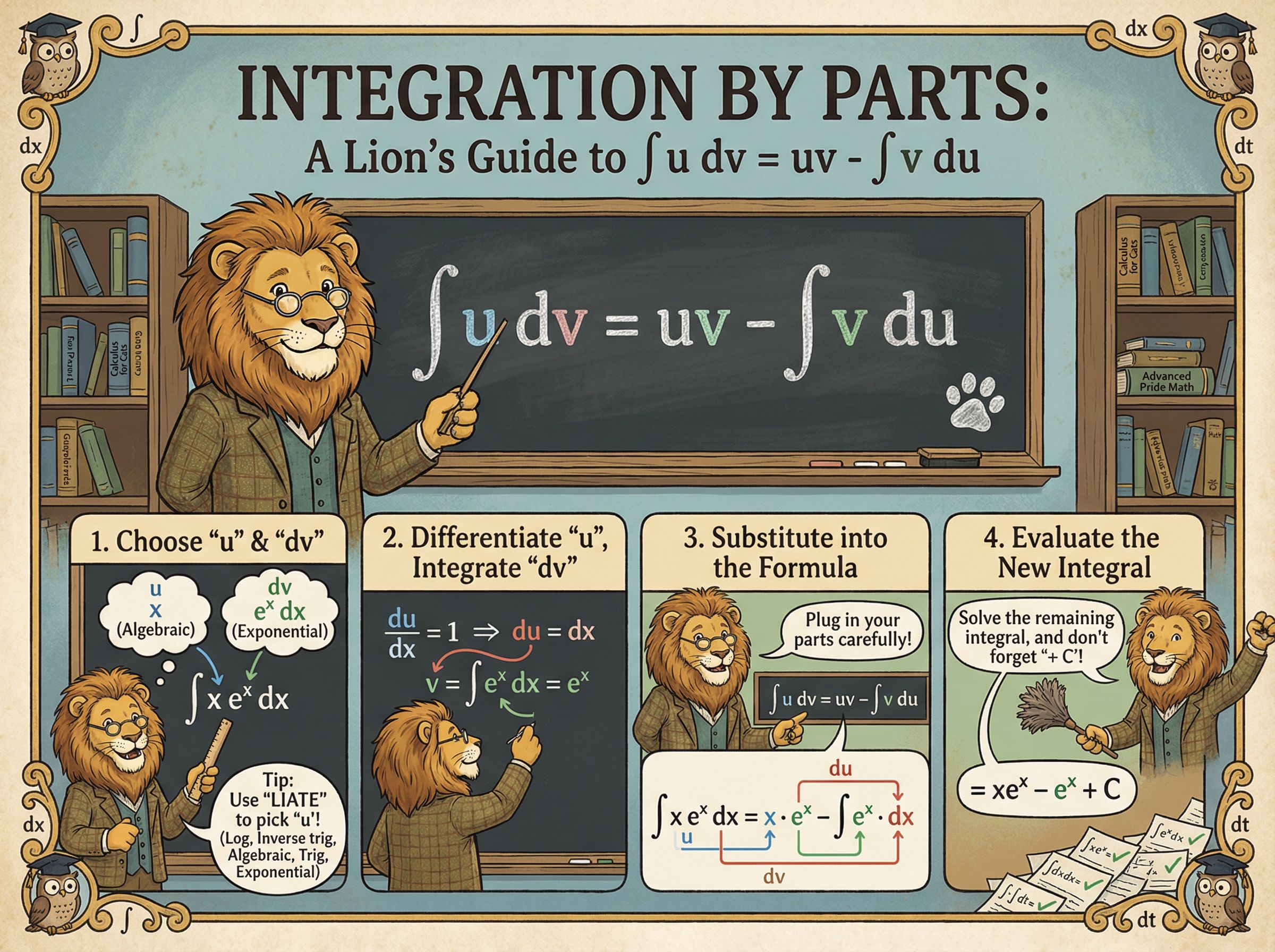

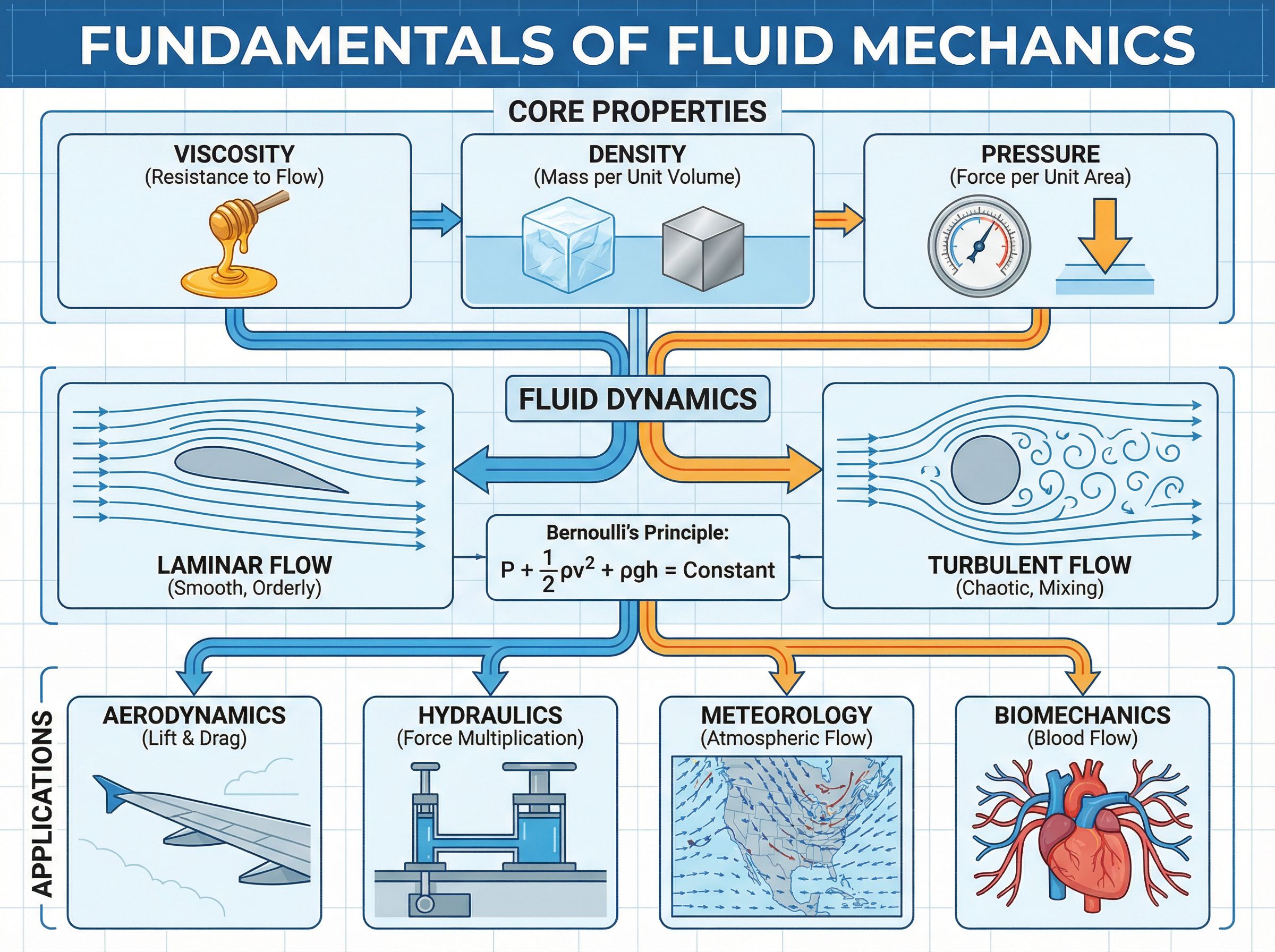

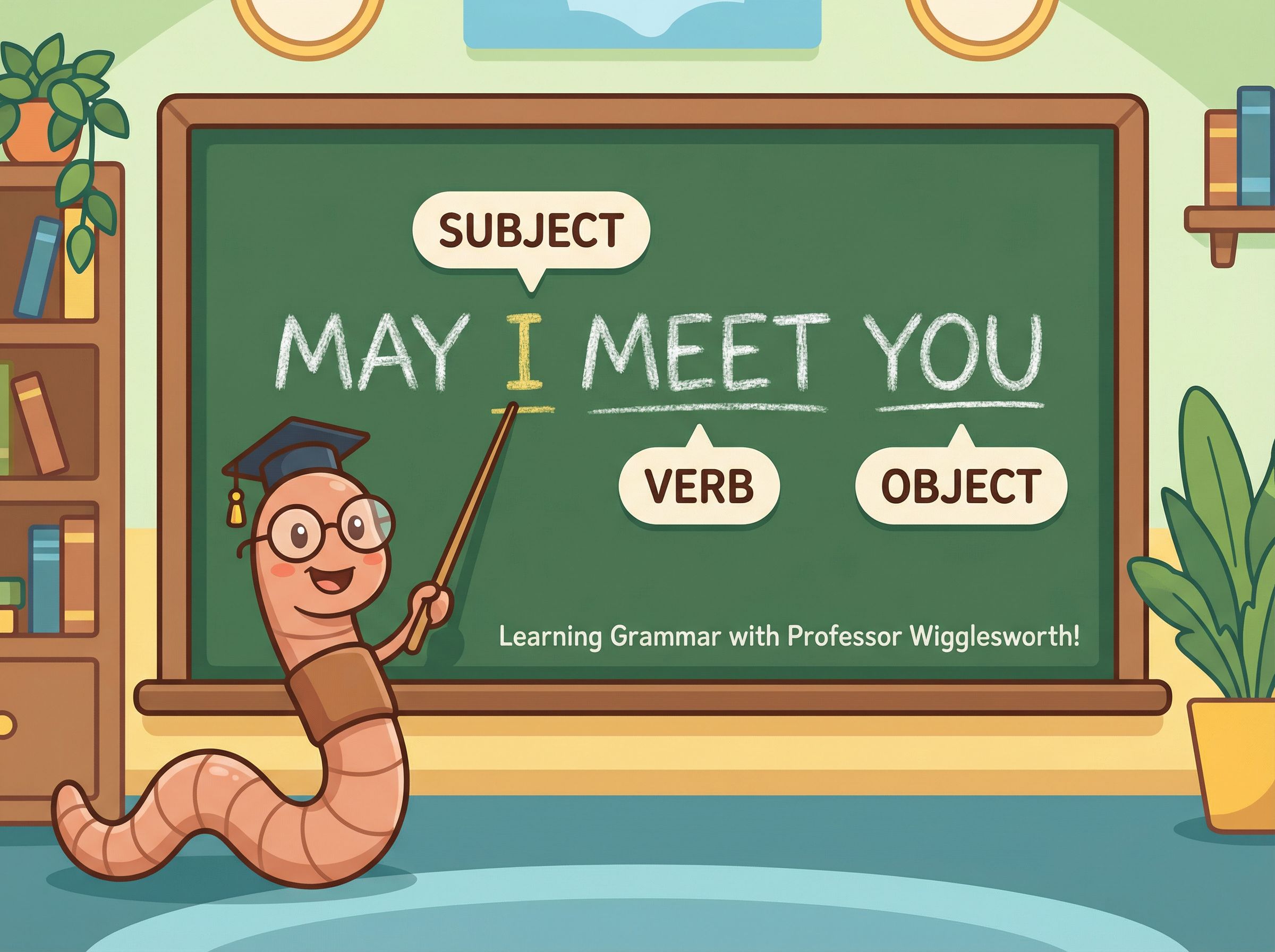

A picture is worth a thousand words. We could definitely see this model being a powerful tool for educators to create visuals. Check out these infographics:

This also means Nano Banana Pro is really good at rendering code. Other image models tend to hallucinate on this task, but because this model is built on the larger Gemini 3 Pro language model, it interprets code much better than other SOTA models.

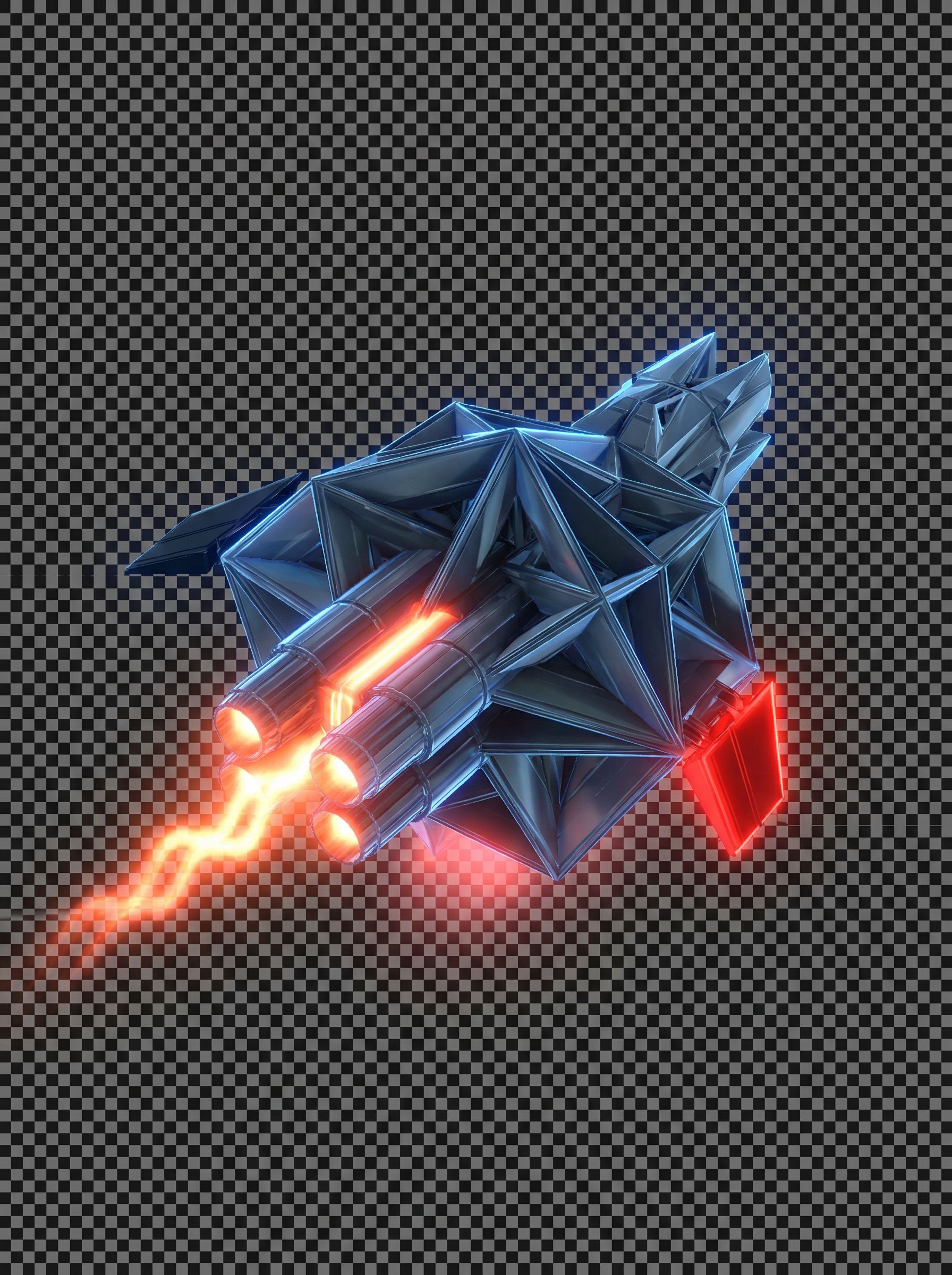

Check out how the model was able to render this ship written in React and WebGL shader code (click on the image to see the entire code snippet):

render this: /** * @license * SPDX-License-Identifier: Apache-2.0 */ import React, useRef, useEffect from ‘react’; import useAppContext from ’../context/AppContext’; // A simple full-screen quad vertex shader const VERTEX_SHADER = #version 300 es in vec2 a_position; out vec2 v_uv; void main() v_uv = a_position; gl_Position = vec4(a_position, 0.0, 1.0); const FRAGMENT_SHADER = version 300 es precision highp float; in vec2 v_uv; out vec4 outColor…

Text and Design

This model arguably has some of the best text adherence — you should be able to input any piece of text and Nano Banana Pro will get it word for word. Look at what fofr has to say:

To say Nano Banana Pro is good at text is an understatement. Here's the Gemini 3 blog post, as a glossy magazine article.

— fofr (@fofrAI) November 20, 2025

> Put this whole text, verbatim, into a photo of a glossy magazine article on a desk, with photos, beautiful typography design, pull quotes and brave… pic.twitter.com/NVm1r4UEHY

What’s really cool is that text adherence is still maintained even when you are trying out various styles or designs. Nano Banana Pro does not sacrifice one for the other. This means we can take an infographic with a lot of dense information and actually apply some interesting styles to it. Take a look at these machine learning posters we created, for instance:

We’ve also been seeing some cool design spreads that might have taken folks hours to lay out using other tools. We see this as a great model for designers to rapidly design and iterate on mockups and potentially create assets that could be used in production.

Cover magazine editors are done.

— DΞV (@junwatu) November 21, 2025

Let me show you how good Nano Banana Pro is at creating magazine covers. I intentionally used Indonesian magazine prompts to see whether the model would misspell non-English text.

It didn’t. 🤯

Nano Banana Pro follows long prompts precisely.… pic.twitter.com/0JCRJ7gkjK

Nano Banana Pro is here!

— Connor (@Jchammond_) November 20, 2025

I used it to generate an app design mock up for a tower defense game

This will be so great for getting app design inspiration pic.twitter.com/HXxoFBodmA

With nano banana, getting an image this accurate in a few seconds, on the first try and from text alone, is reason enough, I think, for AI to be usable in manufacturing.… pic.twitter.com/6joHXAcFbw

— ぶたまる@生技 (@pokamaru3) September 28, 2025

Bottom line? With Nano Banana Pro, you no longer have to compromise between accurate text rendering and creative design freedom. The model maintains pixel-perfect text accuracy while fully embracing your stylistic input.

Characters

One of the standout features of Nano Banana Pro is its ability to handle character consistency across multiple reference images. The model can process up to 14 reference images simultaneously, allowing you to maintain consistent character appearances, poses, and styles across different scenes and contexts. This makes it incredibly powerful for storytelling, brand consistency, and creating cohesive visual narratives where the same characters need to appear in various situations.
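To make the 14-image limit concrete, here is a minimal sketch of a helper that assembles a multi-reference input and rejects oversized batches before you spend a generation on them. The helper name `buildReferenceInput` is ours, and `image_input` is an assumed field name for reference images; check the model's input schema before relying on it.

```javascript
// Upper bound on reference images, taken from the limit described above.
const MAX_REFERENCE_IMAGES = 14;

// Builds an input object for a multi-reference generation.
// ASSUMPTION: `image_input` is the field that carries reference image URLs.
function buildReferenceInput(prompt, imageUrls) {
  if (typeof prompt !== "string" || prompt.trim() === "") {
    throw new TypeError("prompt must be a non-empty string");
  }
  if (!Array.isArray(imageUrls)) {
    throw new TypeError("imageUrls must be an array of URLs");
  }
  if (imageUrls.length > MAX_REFERENCE_IMAGES) {
    throw new RangeError(
      `got ${imageUrls.length} reference images, limit is ${MAX_REFERENCE_IMAGES}`
    );
  }
  // Copy the array so later changes by the caller don't alter the input.
  return { prompt, image_input: [...imageUrls] };
}
```

The returned object could then be passed as the `input` of a model call. Failing fast on the count is a design choice: it keeps the error close to the code that gathered the images.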

NANO BANANA IS INSANE🤯

— Sebastien Jefferies (@SebJefferies) November 20, 2025

Prompt in ALT👇 pic.twitter.com/eqVSYLw0Hn

Multiple reference images also mean that we can combine multiple objects into a new image. This is great for virtual try-on (a little too great):

Turned these photos into one cinematic visual in seconds in Nano Banana Pro👇 pic.twitter.com/3OX5MWmKyJ

— Sebastien Jefferies (@SebJefferies) November 20, 2025

New record? 25 items combined into one image using the collage method with Nano Banana Pro. The previous record for Nano Banana 1 that I tried was 13 items from a collage. Overall accuracy of items is still much better with less items but I wanted to push it. I'm sure It can go… pic.twitter.com/Ob9C8QcVA9

— Travis Davids (@MrDavids1) November 20, 2025

We can even take characters and put them out of context (this was one of our favorite effects).

WOWWWWWWWWW pic.twitter.com/z1ueShTvF4

— nic (@nicdunz) November 20, 2025

Make him [insert scenario here]. Keep his whiteboard style, but make the surroundings realistic.

Yup, that means character consistency is a given with this model. Even with minimal prompting, characters are able to persist after multiple iterative generations. For example, take a look at Google’s example of exploring camera zooms with the same character:

Change aspect ratio to 1:1 by reducing background. The character remains exactly locked in its current position.

Source: Google

We see a world where Nano Banana Pro can be used for tasks like storyboarding where character consistency is important, regardless of the environments characters are placed in. The model is clearly able to persist the features of characters as we put them through the model wringer.
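For storyboarding, one way to lean on that consistency is to feed each result back in as the reference for the next edit. The sketch below is only an illustration of that loop: `chainEdits` and the `runModel` callback are hypothetical names, `runModel` is assumed to resolve to an image URL, and `image_input` is an assumed field name for reference images.

```javascript
// Applies edit prompts one after another, using each result as the
// reference image for the next step.
// ASSUMPTION: `runModel` is a caller-supplied function (input) => Promise<string>
// that runs the model and resolves to the URL of the generated image.
async function chainEdits(runModel, startImageUrl, editPrompts) {
  const frames = [startImageUrl];
  for (const prompt of editPrompts) {
    const previous = frames[frames.length - 1];
    // ASSUMPTION: `image_input` carries the reference image(s).
    const next = await runModel({ prompt, image_input: [previous] });
    frames.push(next);
  }
  // frames[0] is the starting image; frames[i] is the result of edit i.
  return frames;
}
```

Keeping the model call behind `runModel` means the loop can be tried with a stub before any real generations are made.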

World Knowledge (experiments)

Is Nano Banana Pro actually connected to the internet?

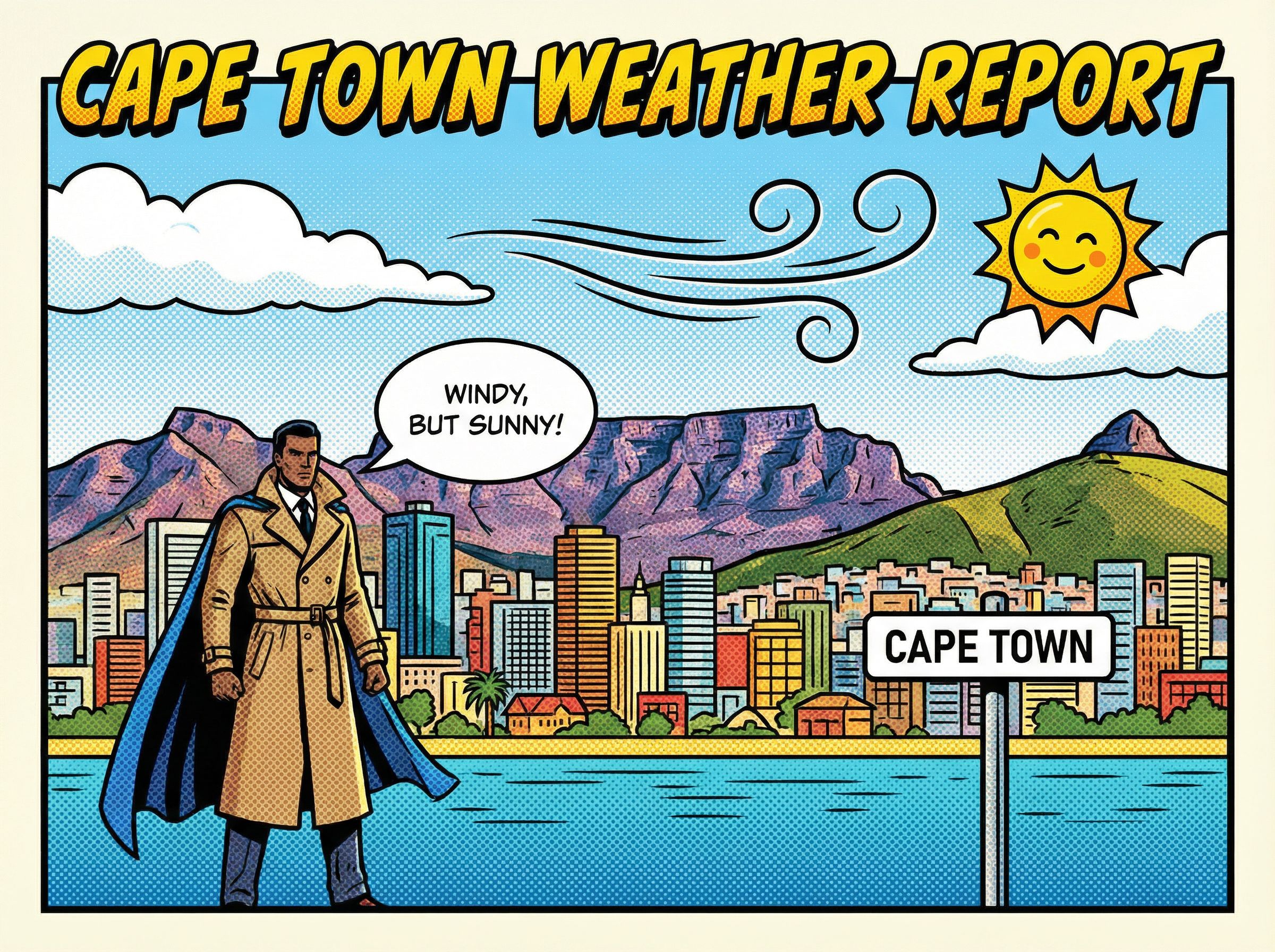

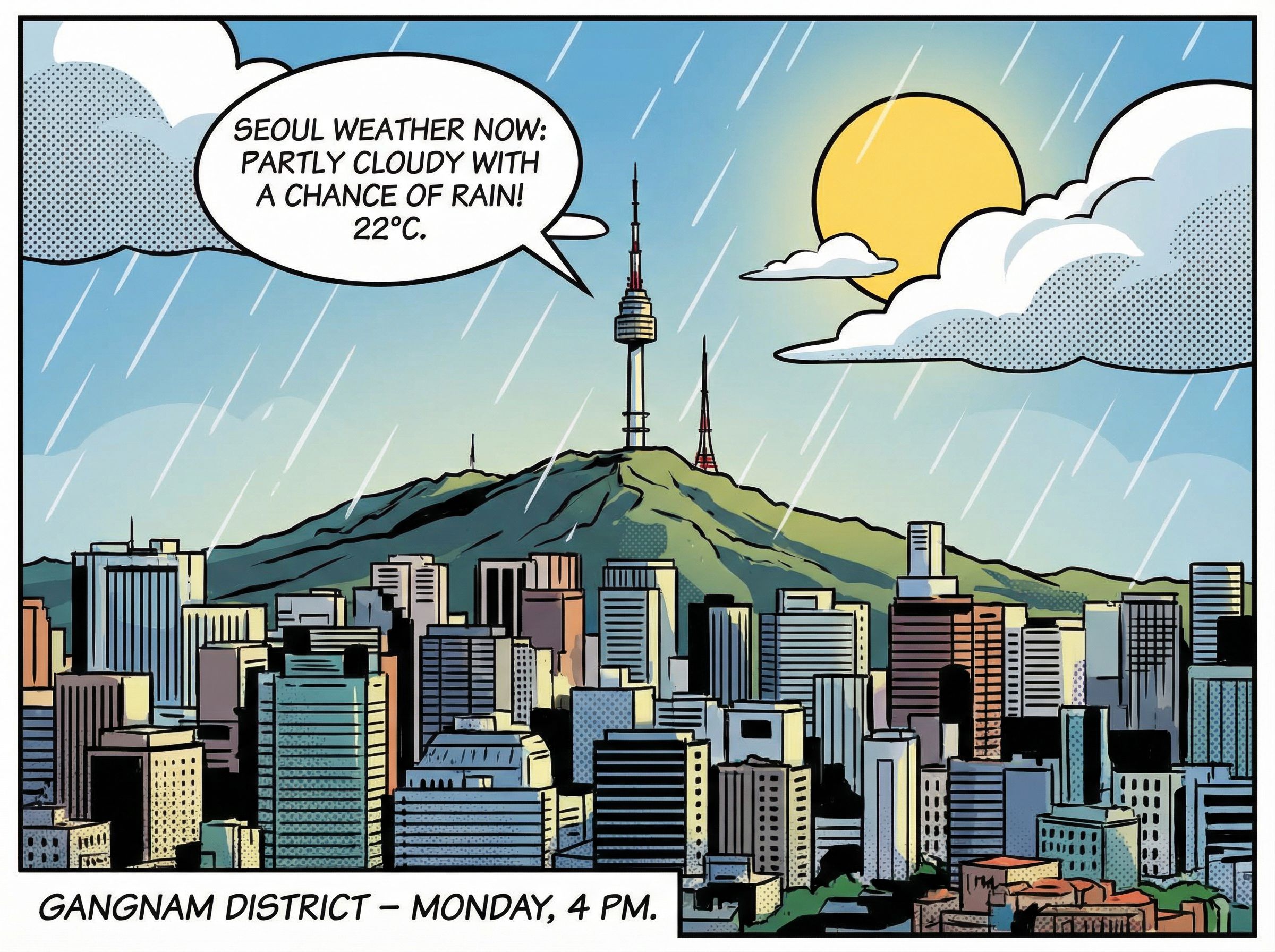

We tried making comics of the weather in different places around the world.

These were good guesses, but the model wasn’t able to get the exact weather of these places at the time of our generations. You’ll need to integrate Search as a tool to provide real-time information to Nano Banana (structured tool calling coming soon!).
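Until then, one workaround is to fetch the data yourself and spell it out in the prompt. Here is a rough sketch of that idea. The function name and the `reading` shape (`city`, `condition`, `tempC`, `localTime`) are hypothetical, and where the reading comes from (a weather API, a search tool) is left to you.

```javascript
// Turns a weather reading fetched elsewhere into an explicit prompt,
// so the model draws the given conditions instead of guessing them.
// ASSUMPTION: the `reading` fields below are a made-up shape for illustration.
function buildWeatherComicPrompt(reading) {
  const { city, condition, tempC, localTime } = reading;
  return [
    `Draw a single-panel comic of the current weather in ${city}.`,
    `Conditions: ${condition}, ${tempC}°C, local time ${localTime}.`,
    "Show only these conditions; do not guess the weather.",
  ].join(" ");
}
```

The resulting string would go in the `prompt` field of the model input.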

We thought we might be able to get real-time snapshots of places (for example, a morning shot of San Francisco and at the same time, a night shot in New Delhi). Interestingly, both of these images were marked with the same date in 2023 🤷‍♂️

However, the amount of real-world knowledge baked into the model itself is quite impressive. Just look at how it is able to deduce world landmarks just given their coordinates.

turn GPS coords into visuals like this Tokyo Tower https://t.co/qBUyU3YrTP pic.twitter.com/HU0eEDGuYg

— Luis Catacora (@lucatac0) November 20, 2025

The amount of world knowledge in Nano Banana Pro could power a multitude of apps. Adding a few simple prompting layers or integrated tools could make this model even more capable than it already is.

Getting Started with the API

Okay, we just bombarded you with a ton of cool Nano Banana Pro examples. What’s even cooler is that you can use this model in your own apps.

Here’s how to call Nano Banana Pro using JavaScript and the Replicate API:

    import Replicate from "replicate";

    const replicate = new Replicate({
      auth: process.env.REPLICATE_API_TOKEN,
    });

    const output = await replicate.run(
      "google/nano-banana-pro",
      {
        input: {
          prompt: "Your prompt here",
          // Additional parameters
        }
      }
    );

    console.log(output);

Yup. It’s that simple.
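What `output` looks like can depend on the client version: it might be a plain URL string, an array, or a file-like object exposing a `url()` method. Treat that as an assumption to verify against your installed client. The hypothetical helper below, `outputToUrl`, is a small sketch that normalizes those shapes into a single URL string.

```javascript
// Normalizes a model output into one URL string.
// ASSUMPTION: output is a string, an object with a url() method,
// or an array of either. Other shapes are rejected.
function outputToUrl(output) {
  // If the model returned several items, use the first one.
  const first = Array.isArray(output) ? output[0] : output;
  if (typeof first === "string") {
    return first;
  }
  if (first && typeof first.url === "function") {
    // url() may return a URL object, so coerce it to a string.
    return String(first.url());
  }
  throw new TypeError("unrecognized output shape");
}
```

With this in place, `console.log(outputToUrl(output))` would print a link you can open or store, whichever shape the client hands back.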

That’s it with Nano Banana Pro! Give it a try — we want to see what you can create.

Start creating with Nano Banana Pro

…actually, we’re not done.

Community Round-up

The AI community has been absolutely crushing it with Nano Banana Pro. Here are some of our favorite creations:

Nano Banana Pro is crazy good at generating ads.

— Justine Moore (@venturetwins) November 21, 2025

It's SO much better than the last model at photorealistic humans / scenes around a product photo.

Uploaded the Graza bottle, prompt was "have a young white influencer holding it in her kitchen"

Head-to-head comparison 👇 pic.twitter.com/erO9snrcon

"Still from a Shopping channel VHS tape from 1986, where someone is selling the most useless trinket ever invented" pic.twitter.com/Ii5lzzRQbq

— Andreas Jansson (@allnoteson) November 20, 2025

Map to satellite view is insane https://t.co/Hu0v2LoAm5 pic.twitter.com/Oodavlps1N

— Shridhar (@shridharathi) November 20, 2025

92-page PDF paper to whiteboard 🤯 https://t.co/4tc3YXmoMF pic.twitter.com/DP5yVam28Z

— Crystal (@crystalsssup) November 21, 2025

Imagine one AI prompt making this entire infographic!!

— Bindu Reddy (@bindureddy) November 21, 2025

Nano Banana Pro is absolutely NEXT LEVEL 🤯 pic.twitter.com/173SH7cooM

More Tests with Nano Banana 2 🍌🍌🔥

— Marco (@ai_artworkgen) November 20, 2025

Prompt: "Create a grid of 4 editorial fashion images focused on [Nike], [2 x macro, 2 x dynamic action] that follow the same style and colour palette as [@]img1. Make each of the new shots unique."

More examples (and prompts) in the thread… pic.twitter.com/60oSMUwD5v

I can't stop pic.twitter.com/3UcVCFpCWy

— karim_yourself (@karim_yourself) November 20, 2025

Nano Banana Pro 🍌

— Ryan (@bisantian) November 20, 2025

From the original Manga panel, to Colorized version, to a faithful 3D recreation version. pic.twitter.com/dfYAhvA3UJ

I made a short ad concept for a brand of beer from New Zealand.

— RenderDave (@RenderDave_) November 4, 2025

I used Nano Banana and Seedance on @replicate to make this pic.twitter.com/Tpp4zqZ9kX

obsessed with these annotated diagrams.

— Sahil (@sahilypatel) November 20, 2025

made all of them with nano banana pro.

google cooked. pic.twitter.com/PU8ynAG9MI

Feel old yet?

— Gizem Akdag (@gizakdag) November 20, 2025

This is Nano Banana 2 now.

Fluffy logo prompt👇 https://t.co/LE0m7CBYMM pic.twitter.com/rdGeQ7V03P

Nano Banana Pro is wild pic.twitter.com/R5gg8wKqek

— @levelsio (@levelsio) November 21, 2025

Bringing cartoons to life with Nano Banana Pro 🍌 pic.twitter.com/wSi84kHCQ7

— CHRIS FIRST (@chrisfirst) November 20, 2025

Pretty cool. Ariel Noyman at MIT Media Lab adapted my Nano Banana Pro prompts to map "urban informality" -- complex settlements that are notoriously difficult to map manually.

— Bilawal Sidhu (@bilawalsidhu) November 21, 2025

"What used to take hours (days) of manual work can now be distilled into minutes, with results that are… https://t.co/Of3rPzSqee pic.twitter.com/iyCYJOIGrk

Nano-Banana is everywhere🍌

— Eyisha Zyer (@eyishazyer) September 17, 2025

Here’s the ultimate resource to master its creative editing power

60+ Nano-Banana use cases, each with the prompt, input, and output side by side: (Part 1) pic.twitter.com/tDuva7IRwP

Ruining great art with the nano banana pro command “Make this much more cheerful with as few changes as possible” pic.twitter.com/4OyAHkwiau

— Ethan Mollick (@emollick) November 21, 2025

Landmark Infographics by NANO BANANA PRO on @BasedLabsAI 🗽🍌

— TechieSA (@TechieBySA) November 21, 2025

Prompt👇🏻 https://t.co/g8ec3tDfe8 pic.twitter.com/vqLPzURAKA

Social media is about to get weird 🍌

— Shweta (@shweta_ai) November 20, 2025

I just built an entirely new Instagram profile with the newly launched Nano Banana Pro. Not one of these photos is real.

Prompt below ⬇️ pic.twitter.com/dKJumn9S4S

Congratulations to Google team on Nano banana Pro another SOTA model, I was testing the model in the last two weeks and absolutely love it 🫶 pic.twitter.com/MY8uLiV3L4

— Emily (@IamEmily2050) November 20, 2025

Nano Banana Pro / Gemini 3 Pro Image is crazy.

— AshutoshShrivastava (@ai_for_success) November 20, 2025

It turned this blueprint into a realistic 3D image.

It did not just create the image, it first read the blueprint properly and then created the final output with every small detail.

Blueprint source image added below 👇 https://t.co/ZLQSypUd4w pic.twitter.com/UKyzk5PV14

And some inspo for the interior designers:

Start creating with Nano Banana Pro