Readme

Omnivore: A Single Model for Many Visual Modalities

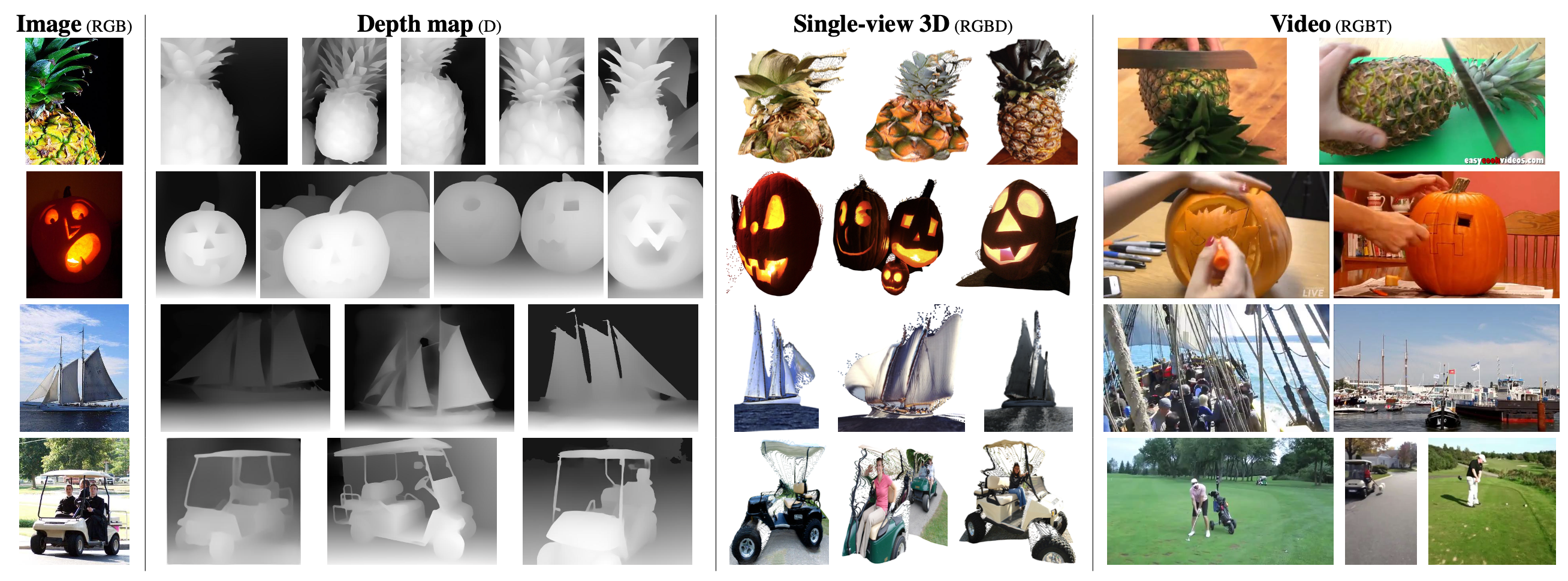

OMNIVORE is a single vision model for many different visual modalities. It learns to construct representations that are aligned across visual modalities, without requiring training data that specifies correspondences between those modalities. Using OMNIVORE’s shared visual representation, we successfully identify nearest neighbors of left: an image (ImageNet-1K validation set) in vision datasets that contain right: depth maps (ImageNet-1K training set), single-view 3D images (ImageNet-1K training set), and videos (Kinetics-400 validation set).

This repo contains the code to run inference with a pretrained model on an image, video or RGBD image.

Citation

If this work is helpful in your research, please consider starring :star: us and citing:

@article{girdhar2022omnivore,

title={{Omnivore: A Single Model for Many Visual Modalities}},

author={Girdhar, Rohit and Singh, Mannat and Ravi, Nikhila and van der Maaten, Laurens and Joulin, Armand and Misra, Ishan},

journal={arXiv preprint arXiv:2201.08377},

year={2022}

}

Contributing

We welcome your pull requests! Please see CONTRIBUTING and CODE_OF_CONDUCT for more information.

License

Omnivore is released under the CC-BY-NC 4.0 license. See LICENSE for additional details. However the Swin Transformer implementation is additionally licensed under the Apache 2.0 license (see NOTICE for additional details).