
MAT: Mask-Aware Transformer for Large Hole Image Inpainting (CVPR2022 Best Paper Finalists, Oral)

Wenbo Li, Zhe Lin, Kun Zhou, Lu Qi, Yi Wang, Jiaya Jia

[Paper]

News

[2022.06.21] We provide a SOTA Places-512 model (Places_512_FullData.pkl) trained with full Places data (8M images). It achieves significant improvements on all metrics.

Usage

To run the hole inpainting model, choose an image and the desired mask, then set the parameters below.

image: Reference image to inpaint. Images are automatically resized to 512x512.

mask: Black and white mask denoting the areas to inpaint. The black regions will be inpainted by the model. The mask should be 512x512, the same size as the resized image.

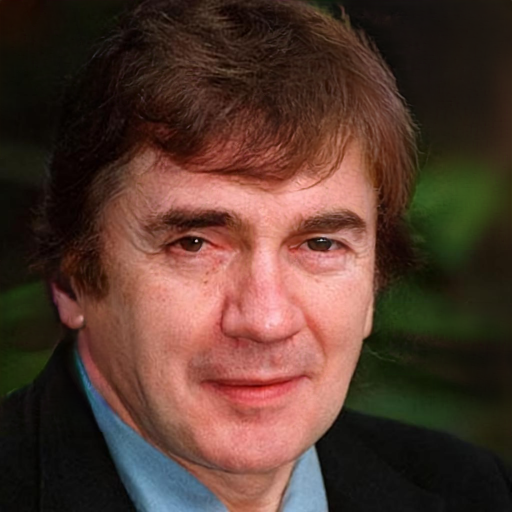
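The mask convention described above (black pixels are inpainted, white pixels are kept, 512x512 resolution) can be sketched with NumPy. This is only an illustration and not code from this repo: the helper names `make_rect_mask` and `hole_ratio` are hypothetical, and a single rectangular hole is an assumption made for simplicity.

```python
import numpy as np

SIZE = 512  # resolution stated in this README


def make_rect_mask(top, left, height, width, size=SIZE):
    """Return a (size, size) uint8 mask: 255 = keep, 0 = inpaint.

    Hypothetical helper for illustration; not part of the MAT code base.
    """
    inside = (
        top >= 0 and left >= 0
        and top + height <= size and left + width <= size
    )
    if not inside:
        raise ValueError("hole must lie inside the mask")
    mask = np.full((size, size), 255, dtype=np.uint8)  # start all white
    mask[top:top + height, left:left + width] = 0      # black = inpaint
    return mask


def hole_ratio(mask):
    """Fraction of pixels that are black, i.e. will be inpainted."""
    return float((mask == 0).mean())


# A centered 256x256 hole covers one quarter of a 512x512 image.
mask = make_rect_mask(top=128, left=128, height=256, width=256)
print(mask.shape, hole_ratio(mask))
```

If Pillow is installed, the array can be written to disk with `Image.fromarray(mask).save("mask.png")` and supplied as the mask input.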

model: Choose between the “Places” model, which is trained on buildings/scenes, and the “CelebA” model, which is trained on human faces.

truncation_psi: Usually between 0.5 and 1. Lowering this value improves image quality at the cost of output diversity/variation; ψ = 1 means no truncation, ψ = 0 means no diversity.

random_noise: Choose randomness of noise vector.

seed: Integer seed for the random number generator. Read more about it here.
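To make the roles of truncation_psi and seed more concrete, here is a minimal sketch of the truncation trick as used in StyleGAN2-ADA, on which this code is built. It assumes MAT applies truncation in the same way. The 512-dimensional latent, the zero average latent, and the function name `truncate` are illustrative assumptions, not values taken from the repo.

```python
import numpy as np


def truncate(w, w_avg, psi):
    """Interpolate a latent w toward the average latent w_avg.

    psi = 1 returns w unchanged (no truncation);
    psi = 0 returns w_avg for every input (no diversity).
    """
    return w_avg + psi * (w - w_avg)


seed = 240  # arbitrary example value
rng = np.random.default_rng(seed)

# Stand-ins for the real latents: in a trained model, w would come from
# the mapping network and w_avg from a running average kept in training.
w = rng.standard_normal(512)
w_avg = np.zeros(512)

w_half = truncate(w, w_avg, psi=0.5)

# The same seed always reproduces the same latent, hence the same output.
w_again = np.random.default_rng(seed).standard_normal(512)
print(np.array_equal(w, w_again))
```

With psi = 0.5, every latent sits halfway between its sampled value and the average, which is why lower values give more typical but less varied results.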

| Model | Data | Small Mask FID↓ | Small Mask P-IDS↑ | Small Mask U-IDS↑ | Large Mask FID↓ | Large Mask P-IDS↑ | Large Mask U-IDS↑ |
|---|---|---|---|---|---|---|---|
| MAT (Ours) | 8M | 0.78 | 31.72 | 43.71 | 1.96 | 23.42 | 38.34 |
| MAT (Ours) | 1.8M | 1.07 | 27.42 | 41.93 | 2.90 | 19.03 | 35.36 |
| CoModGAN | 8M | 1.10 | 26.95 | 41.88 | 2.92 | 19.64 | 35.78 |
| LaMa-Big | 4.5M | 0.99 | 22.79 | 40.58 | 2.97 | 13.09 | 32.39 |

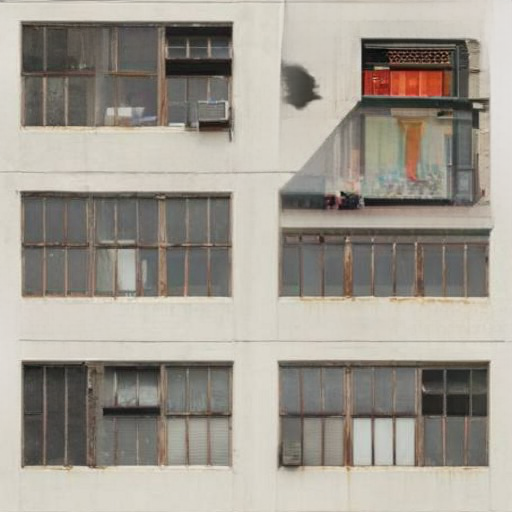

Visualization

We present a transformer-based model (MAT) for large hole inpainting with high fidelity and diversity. Compared to other methods, the proposed MAT restores more photo-realistic images with fewer artifacts.

Citation

@inproceedings{li2022mat,

title={MAT: Mask-Aware Transformer for Large Hole Image Inpainting},

author={Li, Wenbo and Lin, Zhe and Zhou, Kun and Qi, Lu and Wang, Yi and Jia, Jiaya},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

year={2022}

}

License and Acknowledgement

The code and models in this repo are for research purposes only. Our code is built upon StyleGAN2-ADA.