
Model Description

The Segmind-Vega Model is a distilled version of Stable Diffusion XL (SDXL). It is about 70% smaller and has a 100% speedup (roughly twice as fast), while retaining high-quality text-to-image generation. Because it was trained on diverse datasets, including Grit and scraped Midjourney data, it can create a wide range of visual content from text prompts.

Segmind-Vega is trained with knowledge distillation. It learns from several expert (teacher) models, including SDXL, ZavyChromaXL, and JuggernautXL, and combines their strengths to produce compelling images.
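The card does not specify the exact distillation objective. As an illustration only, one common pattern for distilling a diffusion UNet adds an output-matching term to the ordinary denoising loss. This term pulls the student's noise prediction toward each teacher's. The sketch below assumes that equal weight is given to each teacher and that a single weight `lambda_out` scales the matching term. The functions `mse` and `multi_teacher_distillation_loss` are hypothetical and are not Segmind's training code.

```python
from typing import Sequence


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equal-length, flattened tensors."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def multi_teacher_distillation_loss(
    student_pred: Sequence[float],
    true_noise: Sequence[float],
    teacher_preds: Sequence[Sequence[float]],
    lambda_out: float = 1.0,
) -> float:
    """Hypothetical objective: denoising loss + averaged teacher-matching loss.

    student_pred:  the student's predicted noise for one noisy latent
    true_noise:    the noise that was actually added to that latent
    teacher_preds: one noise prediction per teacher for the same input
    lambda_out:    assumed weight of the teacher-matching term
    """
    if not teacher_preds:
        raise ValueError("at least one teacher prediction is required")
    # Standard diffusion objective: predict the noise that was added.
    task_loss = mse(student_pred, true_noise)
    # Output-level distillation: match each teacher, weighted equally (assumption).
    kd_loss = sum(mse(student_pred, t) for t in teacher_preds) / len(teacher_preds)
    return task_loss + lambda_out * kd_loss
```

Averaging over teachers means no single teacher dominates. A real setup might weight teachers differently or also match intermediate feature maps.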

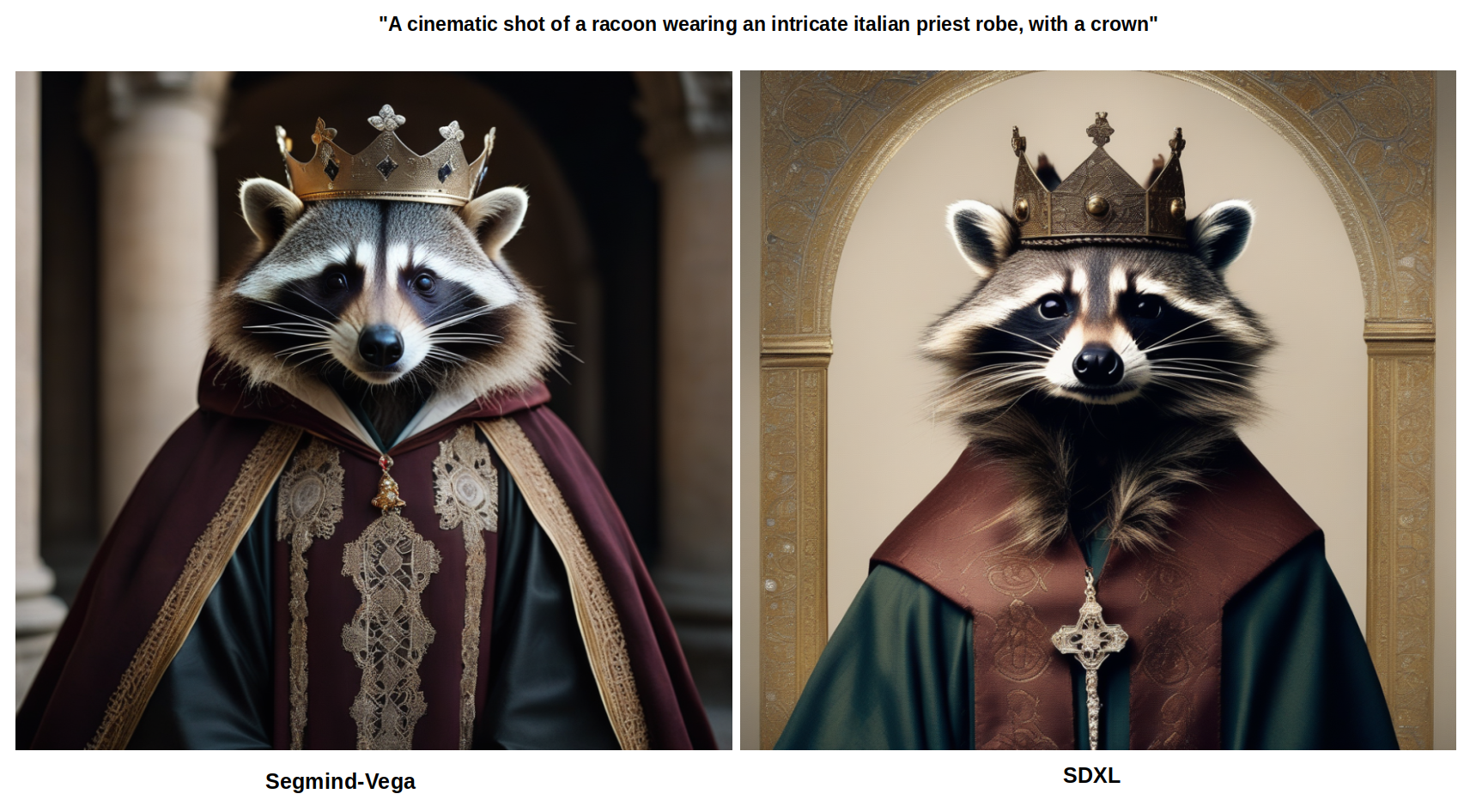

Image Comparison (Segmind-Vega vs SDXL)

Speed Comparison (Segmind-Vega vs SD-1.5 vs SDXL)

The tests were conducted on an A100 80GB GPU.

(Note: all times are measured with each model's respective tiny VAE.)
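The benchmark script is not included in this card. The following is a minimal sketch of how a similar measurement could be set up with the diffusers library. It assumes the checkpoint is available on the Hugging Face Hub as `segmind/Segmind-Vega` and uses the community tiny autoencoder `madebyollin/taesdxl` as the "tiny VAE". The card names neither, so both IDs are assumptions. The helper names `load_vega_with_tiny_vae` and `time_generation` are hypothetical.

```python
import time
from statistics import mean
from typing import Any, Callable


def load_vega_with_tiny_vae(device: str = "cuda") -> Any:
    """Load the pipeline with a tiny VAE swapped in (repo IDs are assumptions)."""
    import torch  # lazy imports: the timing helper below works without them
    from diffusers import AutoencoderTiny, StableDiffusionXLPipeline

    pipe = StableDiffusionXLPipeline.from_pretrained(
        "segmind/Segmind-Vega", torch_dtype=torch.float16, use_safetensors=True
    )
    # Replace the full SDXL VAE with a tiny decoder to cut decoding time.
    pipe.vae = AutoencoderTiny.from_pretrained(
        "madebyollin/taesdxl", torch_dtype=torch.float16
    )
    return pipe.to(device)


def time_generation(
    pipe: Callable[..., Any], prompt: str, runs: int = 3, warmup: int = 1, **kwargs: Any
) -> float:
    """Return the mean wall-clock seconds per pipeline call after warm-up."""
    for _ in range(warmup):
        pipe(prompt=prompt, **kwargs)  # exclude one-time setup costs from timing
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        pipe(prompt=prompt, **kwargs)
        timings.append(time.perf_counter() - start)
    return mean(timings)
```

Warm-up calls are excluded because the first run on a GPU typically includes kernel compilation and memory allocation. Those costs would inflate the reported latency.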

Parameters Comparison (Segmind-Vega vs SD-1.5 vs SDXL)

Key Features

- Text-to-Image Generation: The Segmind-Vega model generates images from text prompts, enabling a wide range of creative applications.
- Distilled for Speed: Designed for efficiency, the model has a 100% speedup over SDXL (roughly twice as fast). This makes it suitable for real-time applications and other scenarios where rapid image generation is essential.
- Diverse Training Data: Because it was trained on diverse datasets, the model handles a wide variety of text prompts and generates matching images effectively.
- Knowledge Distillation: By distilling knowledge from multiple expert models, the Segmind-Vega Model combines their strengths and mitigates their individual limitations, resulting in improved performance.

Model Architecture

The Segmind-Vega Model is a compact model, about 70% smaller than the base SDXL model.
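The card reports relative figures without defining them. Under the usual readings, a 70% size reduction means the model keeps about 30% of the baseline's parameters. A 100% speedup means twice the throughput, which is half the latency. The hypothetical helpers below make that arithmetic explicit. The inputs in the example are placeholders, not measurements from this card.

```python
def size_reduction_pct(baseline_params: float, model_params: float) -> float:
    """Percent by which a model is smaller than a baseline (70 means it keeps 30%)."""
    if baseline_params <= 0 or model_params <= 0:
        raise ValueError("parameter counts must be positive")
    return (1.0 - model_params / baseline_params) * 100.0


def speedup_pct(baseline_seconds: float, model_seconds: float) -> float:
    """Percent speedup from latencies (100 means twice as fast, half the latency)."""
    if baseline_seconds <= 0 or model_seconds <= 0:
        raise ValueError("latencies must be positive")
    return (baseline_seconds / model_seconds - 1.0) * 100.0


# Placeholder inputs, not figures reported for Segmind-Vega or SDXL.
print(round(size_reduction_pct(10.0, 3.0), 6))  # 70.0
print(speedup_pct(2.0, 1.0))  # 100.0
```

Note that the two percentages are not symmetric. A model that takes half the time has a 100% speedup, but its latency is only 50% lower.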

Out-of-Scope Use

The Segmind-Vega Model is not suitable for creating factual or accurate representations of people, events, or real-world information. It is not intended for tasks requiring high precision and accuracy.

Limitations and Bias

The Segmind-Vega Model struggles to achieve full photorealism, especially when depicting people. Because of its autoencoding approach, it may also have difficulty rendering legible text and preserving the fidelity of complex compositions. These are areas for future improvement. Training on a diverse dataset does not cure ingrained societal and digital biases, but it is a foundational step toward more equitable technology. Users are encouraged to use this tool with an understanding of its current limitations and to expect it to keep evolving.

For the best quality, use negative prompts and a CFG (classifier-free guidance) scale of around 9.0.
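Here is a minimal usage sketch with the diffusers `StableDiffusionXLPipeline`. It assumes the checkpoint is published on the Hugging Face Hub as `segmind/Segmind-Vega`. The helper names `build_generation_kwargs` and `generate` are hypothetical, and any prompts passed to them are placeholders. In diffusers, the CFG scale is the `guidance_scale` argument, set here to the recommended 9.0.

```python
from typing import Any, Dict

RECOMMENDED_CFG = 9.0  # CFG scale recommended in this card


def build_generation_kwargs(
    prompt: str, negative_prompt: str, guidance_scale: float = RECOMMENDED_CFG
) -> Dict[str, Any]:
    """Arguments for an SDXL pipeline call with negative prompting."""
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,  # steers sampling away from these traits
        "guidance_scale": guidance_scale,  # diffusers' name for the CFG scale
    }


def generate(prompt: str, negative_prompt: str, device: str = "cuda") -> Any:
    """Load Segmind-Vega (assumed Hub ID) and return one PIL image."""
    import torch  # lazy imports: only needed when actually generating
    from diffusers import StableDiffusionXLPipeline

    pipe = StableDiffusionXLPipeline.from_pretrained(
        "segmind/Segmind-Vega", torch_dtype=torch.float16, use_safetensors=True
    ).to(device)
    return pipe(**build_generation_kwargs(prompt, negative_prompt)).images[0]
```

For example, `generate("a cute cat eating a slice of pizza, illustration", "worst quality, low quality, blurry").save("vega.png")` runs on a CUDA GPU. Keeping the argument builder separate from model loading lets you check the recommended settings without downloading any weights.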