Generate music

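These models generate and modify music from text prompts and raw audio. Depending on the model, they use transformer language models, diffusion models, or both, trained on paired text and music so they can map descriptions to musical concepts such as genre, instrumentation, mood, and tempo.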

Featured models

minimax / music-01

Quickly generate up to 1 minute of music with lyrics and vocals in the style of a reference track

Updated 2 hours ago

meta / musicgen

Generate music from a prompt or melody

Updated 1 year, 5 months ago

lucataco / magnet

MAGNeT: Masked Audio Generation using a Single Non-Autoregressive Transformer

Updated 1 year, 7 months ago

Recommended models

lucataco / ace-step

ACE-Step: A Step Towards Music Generation Foundation Model (text-to-music)

Updated 3 months, 3 weeks ago

zsxkib / flux-music

🎼 FluxMusic: Text-to-Music Generation with Rectified Flow Transformer 🎶

Updated 11 months, 2 weeks ago

stackadoc / stable-audio-open-1.0

Stable Audio Open is an open-source model optimized for generating short audio samples, sound effects, and production elements using text prompts.

Updated 1 year, 2 months ago

charlesmccarthy / musicgen

MusicGen running on an NVIDIA A40 GPU, with a maximum duration of 60 seconds

Updated 1 year, 2 months ago

nateraw / musicgen-songstarter-v0.2

A large, stereo MusicGen that acts as a useful tool for music producers

Updated 1 year, 4 months ago

sakemin / musicgen-remixer

Remix music into other styles with MusicGen Chord

Updated 1 year, 7 months ago

sakemin / musicgen-stereo-chord

Generate music in stereo, restricted to chord sequences and tempo

Updated 1 year, 9 months ago

declare-lab / mustango

Controllable Text-to-Music Generation

Updated 1 year, 9 months ago

sakemin / musicgen-chord

Generate music restricted to chord sequences and tempo

Updated 1 year, 10 months ago

fofr / musicgen-choral

MusicGen fine-tuned on chamber choir music

Updated 1 year, 10 months ago

nateraw / audio-super-resolution

AudioSR: Versatile Audio Super-resolution at Scale

Updated 1 year, 11 months ago

andreasjansson / musicgen-looper

Generate fixed-bpm loops from text prompts

Updated 2 years, 2 months ago

haoheliu / audio-ldm

Text-to-audio generation with latent diffusion models

Updated 2 years, 7 months ago

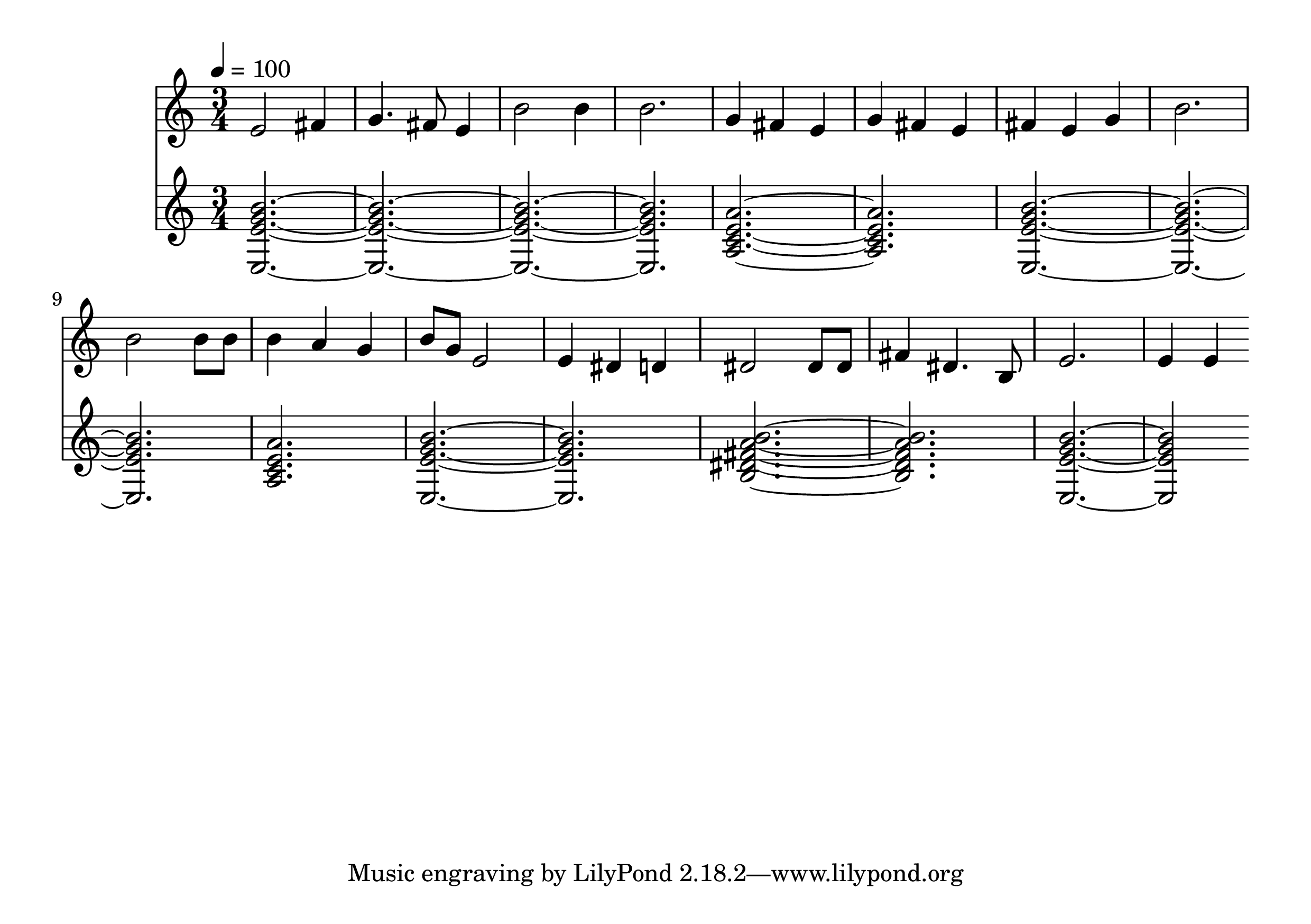

andreasjansson / cantable-diffuguesion

Bach chorale generation and harmonization

Updated 2 years, 7 months ago

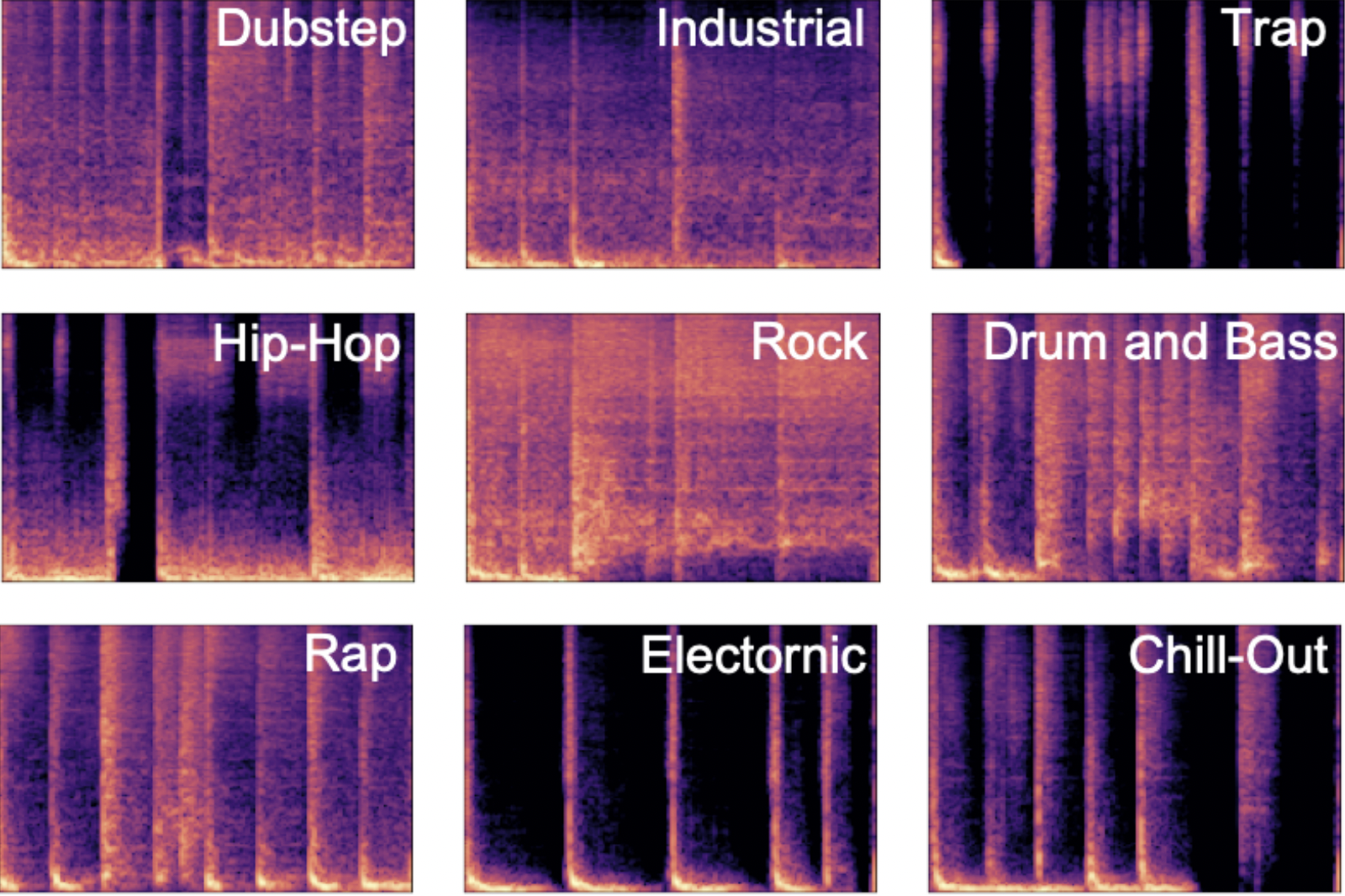

riffusion / riffusion

Stable Diffusion for real-time music generation

Updated 2 years, 8 months ago

annahung31 / emopia

Emotion-conditioned music generation using a transformer-based model.

Updated 2 years, 11 months ago

allenhung1025 / looptest

Four-bar drum loop generation

Updated 2 years, 11 months ago

harmonai / dance-diffusion

Tools to train a generative model on arbitrary audio samples

Updated 2 years, 11 months ago

andreasjansson / music-inpainting-bert

Music inpainting of melody and chords

Updated 3 years, 4 months ago