Large Language Models (LLMs)

These large language models understand and generate natural language. They power chatbots, search engines, writing aids, and more.

Use these for:

- Conversational AI: Chat and engage in natural dialogue. Get an AI assistant.

- Question answering: Provide informative answers to questions. Build a knowledge base.

- Text generation: Generate fluent continuations of text. Autocomplete your writing.

- Summarization: Summarize long passages of text. Get key points quickly.

- Translation: Translate between languages. Communicate across language barriers.

Language models keep getting bigger and better at these tasks. The largest models today exhibit impressive reasoning skills. But you can get great results from smaller, faster, cheaper models too.

Featured models

openai/gpt-5

OpenAI's new model excelling at coding, writing, and reasoning.

Updated 3 weeks, 3 days ago

1.2M runs

Google's most advanced reasoning Gemini model

Updated 2 months, 2 weeks ago

832.6K runs

Claude Sonnet 4.5 is the best coding model to date, with significant improvements across the entire development lifecycle

Updated 4 months, 2 weeks ago

590.2K runs

Frequently asked questions

Which models are the fastest?

If you want speed and low latency, google/gemini-2.5-flash and openai/gpt-5-nano are strong choices. Both are designed for fast responses and lower compute use while keeping good reasoning quality.

For conversational tasks at scale, anthropic/claude-4.5-haiku also offers quick turnarounds with solid performance.

Which models offer the best balance of cost and quality?

openai/gpt-5-mini and anthropic/claude-4.5-sonnet both deliver high-quality writing, summarization, and reasoning at a manageable cost.

If you want strong reasoning without high overhead, deepseek-ai/deepseek-r1 and meta/meta-llama-3.1-405b-instruct offer impressive results for their size.

What works best for chat and conversational AI?

For natural dialogue and chatbots, openai/gpt-5, anthropic/claude-4.5-sonnet, and google/gemini-2.5-flash are all reliable.

They handle multi-turn conversations, context retention, and friendly tone well. Smaller variants like openai/gpt-5-nano or anthropic/claude-4.5-haiku are ideal for lighter-weight chat assistants.

What works best for coding and reasoning tasks?

anthropic/claude-4.5-sonnet and deepseek-ai/deepseek-r1 are tuned for structured reasoning, code generation, and debugging support.

openai/gpt-5 also performs well for both natural language and code reasoning tasks, especially in multi-step logic or problem-solving scenarios.

What’s the difference between key subtypes or approaches in this collection?

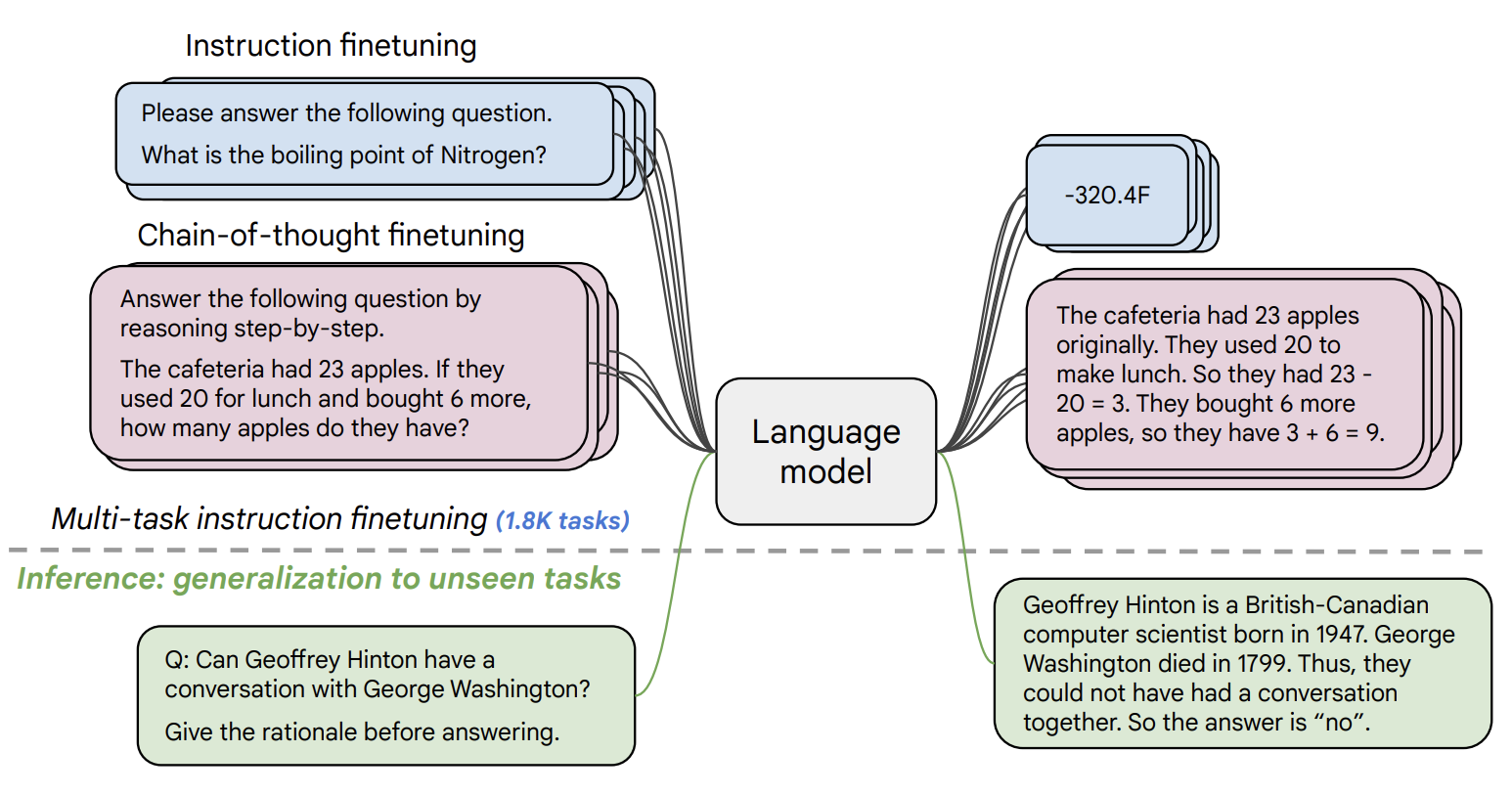

Large language models differ mainly by scale, tuning, and purpose:

- Flagship models (openai/gpt-5, anthropic/claude-4.5-sonnet, google/gemini-2.5-flash): Provide high reasoning and writing ability with broad general knowledge.

- Smaller or “nano” variants (openai/gpt-5-nano, anthropic/claude-4.5-haiku): Optimized for latency and lower compute.

- Reasoning models (deepseek-ai/deepseek-r1, xai/grok-4): Emphasize logical analysis and multi-step reasoning.

- Instruction-tuned models (meta/meta-llama-3.1-405b-instruct): Fine-tuned for chat, summarization, and structured tasks.

What kinds of outputs can I expect from these models?

These models output natural language text, often in conversational or structured formats.

They can generate, summarize, translate, or explain information, and some also handle light reasoning, analysis, or code generation.

How can I self-host or push a model to Replicate?

Several models in this collection are open-weight and can be self-hosted, such as meta/meta-llama-3.1-405b-instruct or openai/gpt-oss-120b.

To publish your own model on Replicate, package it with Cog: a cog.yaml file describes the environment, and a predict.py file defines the input and output fields. Then push it to your account to run on managed GPUs.

Can I use these models for commercial work?

Yes. Many of these models are available for commercial use, depending on their license. Always review the License section of each model page before deployment, as some require attribution or restrict redistribution.

How do I use or run these models?

You can run them directly on Replicate by providing a text prompt in the model’s playground or via API.

For example, type a question or instruction and receive a natural language response. Some models, like google/gemini-2.5-flash or openai/gpt-4o, may also accept image or multimodal inputs depending on version.
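As a rough sketch of what an API call can look like, the Python below builds a request against Replicate's HTTP API using only the standard library. It assumes the model accepts a `prompt` input field (each model defines its own input schema, so check the model page) and that your token is stored in a `REPLICATE_API_TOKEN` environment variable. The helper names `build_request` and `run_model` are illustrative, not part of any Replicate library.

```python
import json
import os
import urllib.request

API_BASE = "https://api.replicate.com/v1"  # Replicate's HTTP API root


def build_request(model: str, prompt: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a prediction request for a model like 'openai/gpt-5'."""
    owner, name = model.split("/", 1)
    # Assumption: the model takes its text input in a field called "prompt".
    body = json.dumps({"input": {"prompt": prompt}}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/models/{owner}/{name}/predictions",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
            # Ask the API to hold the connection open until the prediction finishes.
            "Prefer": "wait",
        },
    )


def run_model(model: str, prompt: str) -> dict:
    """Send the request and return the decoded prediction object."""
    token = os.environ["REPLICATE_API_TOKEN"]
    with urllib.request.urlopen(build_request(model, prompt, token)) as resp:
        return json.load(resp)
```

With a valid token set, `run_model("openai/gpt-5", "Explain recursion in one sentence.")` should return a prediction object, with the generated text typically found under its `output` key. Splitting request construction from sending makes the request easy to inspect before spending any compute.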

What should I know before running a job in this collection?

- Longer prompts mean more compute time, so keep requests concise if you need speed.

- Choose smaller models for low-latency chatbots and larger ones for reasoning or detailed writing.

- If your workflow involves structured output (like JSON or Markdown), look for models tagged with “instruct” or “reasoning.”

- Model behavior can vary between versions; test prompts before production use.
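If you ask a model for JSON, it helps to parse the reply defensively, since replies can include extra prose or a Markdown code fence around the payload. The sketch below is one possible approach, not a Replicate feature; `extract_json` is a hypothetical helper name.

```python
import json
import re

FENCE = "`" * 3  # a Markdown code fence, built here to avoid nesting fences


def extract_json(text: str):
    """Return the first JSON object found in a model reply, or None."""
    # If the reply wraps its payload in a Markdown fence, look inside it.
    fenced = re.search(FENCE + r"(?:json)?\s*(.*?)" + FENCE, text, re.DOTALL)
    candidate = fenced.group(1) if fenced else text
    start = candidate.find("{")
    if start == -1:
        return None
    try:
        # raw_decode parses one JSON value and ignores any trailing text.
        obj, _ = json.JSONDecoder().raw_decode(candidate[start:])
    except json.JSONDecodeError:
        return None
    return obj
```

Returning `None` instead of raising lets your application decide whether to retry the prompt, fall back to another model, or surface an error.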

Any other collection-specific tips or considerations?

- Flagship models like openai/gpt-5 and anthropic/claude-4.5-sonnet are best for complex multi-step reasoning or creative writing.

- Smaller models like openai/gpt-5-nano and anthropic/claude-4.5-haiku are ideal for embedding in real-time applications.

- Reasoning models (deepseek-ai/deepseek-r1, xai/grok-4) are tuned for analysis, logic puzzles, and research workflows.

- For the best mix of performance and reliability, start with google/gemini-2.5-flash—it’s fast, affordable, and general-purpose.
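The tips above can be encoded as a small lookup so your application picks a model per task. This is only a sketch: the model identifiers come from this page, while the task labels and the `pick_model` helper are hypothetical names chosen for illustration.

```python
# Model identifiers are taken from the tips above; the task labels are
# made-up categories for this sketch and can be renamed freely.
RECOMMENDED = {
    "complex": "openai/gpt-5",              # multi-step reasoning, creative writing
    "realtime": "openai/gpt-5-nano",        # latency-sensitive, embedded use
    "analysis": "deepseek-ai/deepseek-r1",  # logic puzzles, research workflows
}

# General-purpose starting point suggested in the last tip.
DEFAULT_MODEL = "google/gemini-2.5-flash"


def pick_model(task: str) -> str:
    """Return a model identifier for a task label, or the default if unknown."""
    return RECOMMENDED.get(task, DEFAULT_MODEL)
```

For example, `pick_model("realtime")` selects the smaller model, while an unrecognized label falls back to the general-purpose default.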