Vision models

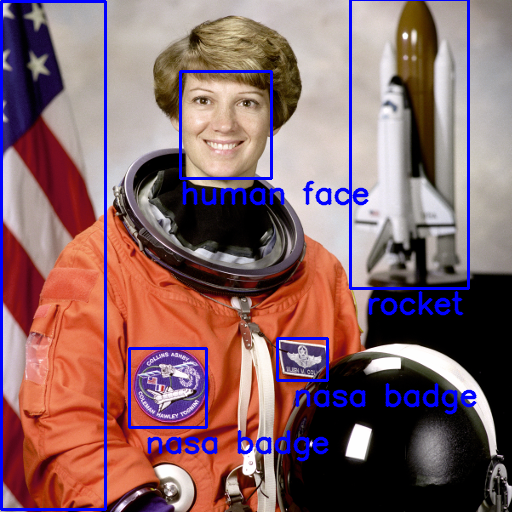

Vision models process and interpret visual information from images and videos. You can use them to answer questions about the content of an image, describe a scene, or identify and locate the objects in it.

For example, you can use the yorickvp/llava-13b vision model to generate recipe ideas from an image of your fridge.
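
As a minimal sketch of that fridge example, the snippet below builds a request for yorickvp/llava-13b and runs it with the `replicate` Python client. The input names `image` and `prompt` and the prompt wording are assumptions, so check the model's API schema for the actual fields. Community models may also need a `:<version-id>` suffix on the model reference. The client reads your API token from the `REPLICATE_API_TOKEN` environment variable.

```python
# May need a ":<version-id>" suffix; copy the exact reference from the model page.
MODEL_REF = "yorickvp/llava-13b"


def build_recipe_request(image_url: str) -> dict:
    """Build the input payload. Field names are assumptions; verify them against the model schema."""
    return {
        "image": image_url,
        "prompt": "List the ingredients you can see, then suggest three recipes that use them.",
    }


def suggest_recipes(image_url: str, model_ref: str = MODEL_REF) -> str:
    """Run the model and return its answer as a single string."""
    import replicate  # imported lazily so the payload helper works without the client installed

    output = replicate.run(model_ref, input=build_recipe_request(image_url))
    # Text models often return chunks of text; joining also works if a plain string comes back.
    return "".join(str(chunk) for chunk in output)
```

Calling `suggest_recipes("https://example.com/fridge.jpg")` (a placeholder URL) would send the request and return the model's text.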

If you don't need reasoning abilities and just want descriptions of images, check out our image captioning collection.

Featured models

Google’s hybrid “thinking” AI model optimized for speed and cost-efficiency

Updated 1 week, 1 day ago

1.8M runs

openai/gpt-4o-mini

Low-latency, low-cost version of OpenAI's GPT-4o model

Updated 6 months, 1 week ago

29.7M runs

Claude Sonnet 4 is a significant upgrade to 3.7, delivering superior coding and reasoning while responding more precisely to your instructions

Updated 8 months, 1 week ago

2.3M runs

Frequently asked questions

Which models are the fastest for vision tasks like “chatting with an image”?

If you want a low-latency model that can understand and talk about images, lighter options in the Vision Models collection—such as openai/gpt-4o-mini—are well-suited for quick interactions.

Faster models work well for straightforward scenes and short prompts. For more complex reasoning, you may prefer a larger model.

Which models offer the best balance of reasoning and visual understanding?

yorickvp/llava-13b is a strong all-around model in the Vision Models collection. It supports both image captioning and visual question answering (VQA), and its responses tend to be more descriptive and context-aware than those of lighter captioning models.

If you just need a quick caption, smaller models can be faster and cheaper to run.

What works best when you need to ask questions or “talk” about an image?

For interactive use—like asking “What’s happening in this photo?” or “How many people are in the image?”—pick a model that supports image + text prompting, such as yorickvp/llava-13b.

These models can interpret your image in context and give natural language answers.

What should I use if I only need a short caption or image summary?

If you just want a brief description (e.g., for accessibility, alt text, or indexing), lighter models like openai/gpt-4o-mini can provide fast, simple captions.

You don’t need a heavyweight model unless your task requires more complex reasoning.

How do the main types of models in this collection differ?

- Image-only captioning: Provide an image, get a descriptive caption or summary.

- Image + text prompt (VQA): Provide an image and a question or instruction, get a direct answer or explanation.

- Lightweight vs advanced models: Smaller models are faster and good for quick summaries. Larger ones (like yorickvp/llava-13b) support richer reasoning but may take longer to run.
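
The first two input styles differ only in whether a text prompt accompanies the image. A minimal sketch of the two payload shapes, assuming hypothetical input field names `image` and `prompt` (real models name their inputs differently, so check each model's schema):

```python
from typing import Optional


def build_input(image_url: str, question: Optional[str] = None) -> dict:
    """Return a captioning payload (image only) or a VQA payload (image + question)."""
    payload = {"image": image_url}
    if question is not None and question.strip():
        # Image + text prompt: the model answers the question about the image.
        payload["prompt"] = question.strip()
    return payload


# Image-only captioning request (placeholder URL).
caption_request = build_input("https://example.com/street.jpg")

# Visual question answering request for the same image.
vqa_request = build_input(
    "https://example.com/street.jpg",
    "How many people are in the image?",
)
```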

What kinds of outputs can I expect from vision models?

Most models in this collection return:

- A short caption describing the image.

- A natural language answer to a question about the image.

- Occasionally, tags or structured text, depending on the model.

The exact output depends on the model’s capabilities.
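
Because the return shape varies by model, client code often normalizes it before use. The helper below is a sketch and not part of any Replicate library: the name `normalize_output` is hypothetical, and it assumes a model returns either a plain string or an iterable of text chunks or tags.

```python
from collections.abc import Iterable
from typing import Any


def normalize_output(output: Any, sep: str = "") -> str:
    """Collapse a model's output into one string.

    Use sep="" for streamed text chunks and sep=", " for a list of tags.
    """
    if output is None:
        return ""
    if isinstance(output, str):
        return output.strip()
    if isinstance(output, Iterable):
        # Lists, tuples, and generators of chunks are joined in order.
        return sep.join(str(part) for part in output).strip()
    return str(output)
```

For example, `normalize_output(["dog", "beach"], sep=", ")` turns a tag list into a single comma-separated string, while the default separator reassembles streamed text.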

How can I self-host or publish my own vision model?

You can package your own multi-modal model with Cog and push it to Replicate.

Define your input schema (e.g., image + optional question) and output (caption or answer), then publish it to share or use commercially.
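
A Cog model is defined by a `cog.yaml` file plus a predictor class. The sketch below shows what a `predict.py` for an image + optional question model could look like. `BasePredictor`, `Input`, and `Path` come from Cog's Python API; the `_load_model` helper and its placeholder model are hypothetical stand-ins for your own loading and inference code. The `except ImportError` fallback is only there so the file can be imported for a quick check on a machine without Cog installed.

```python
try:
    from cog import BasePredictor, Input, Path
except ImportError:  # fallback stubs for local experimentation without Cog
    from pathlib import Path

    class BasePredictor:
        pass

    def Input(default=None, **_kwargs):
        return default


DEFAULT_PROMPT = "Describe this image in one sentence."


def _load_model():
    """Hypothetical placeholder: replace with code that loads your real weights."""

    def placeholder_model(image_path: str, prompt: str) -> str:
        return f"[no model loaded] prompt={prompt!r} image={image_path}"

    return placeholder_model


class Predictor(BasePredictor):
    def setup(self) -> None:
        # Runs once when the container starts; load weights here.
        self.model = _load_model()

    def predict(
        self,
        image: Path = Input(description="Image to analyze"),
        question: str = Input(description="Optional question about the image", default=""),
    ) -> str:
        # Fall back to a captioning prompt when no question is given.
        prompt = question.strip() or DEFAULT_PROMPT
        return self.model(str(image), prompt)
```

With a `cog.yaml` whose `predict` entry points at `predict.py:Predictor`, running `cog push` against your model's `r8.im/<owner>/<model-name>` address publishes it.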

Can I use vision models for commercial projects?

Many models in the Vision Models collection allow commercial use, but licenses vary. Always check the model card for attribution requirements or restrictions before using outputs commercially.

How do I use a vision model on Replicate?

- Choose a model from the Vision Models collection.

- Upload an image or provide a URL.

- (Optional) Add a text prompt or question.

- Run the model to get captions or answers.

- Use the output for tasks like accessibility, indexing, or interactive applications.
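
The steps above can be sketched with the `replicate` Python client. This assumes the client is installed, `REPLICATE_API_TOKEN` is set, and the chosen model takes inputs named `image` and `prompt` (an assumption; names vary by model). The helper names `is_url` and `ask_about_image` are hypothetical.

```python
from contextlib import ExitStack
from typing import Optional
from urllib.parse import urlparse


def is_url(source: str) -> bool:
    """True when the image source looks like an http(s) URL rather than a local path."""
    return urlparse(source).scheme in ("http", "https")


def ask_about_image(model_ref: str, source: str, prompt: Optional[str] = None) -> str:
    """Pick a model, supply an image, optionally add a prompt, then run."""
    import replicate  # lazy import: only needed when a prediction is actually run

    with ExitStack() as stack:
        # URLs are passed through as strings; local files are sent as open file handles.
        image = source if is_url(source) else stack.enter_context(open(source, "rb"))
        inputs = {"image": image}
        if prompt:
            inputs["prompt"] = prompt
        output = replicate.run(model_ref, input=inputs)
        # Join inside the block so a streamed result is consumed before the file closes.
        return "".join(str(chunk) for chunk in output)
```

The returned string can then be stored as alt text, indexed for search, or shown in a chat interface.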

What should I keep in mind when working with vision models?

- Clear, well-lit images produce better outputs.

- If using image + text, phrase your question clearly for better answers.

- Larger or more complex inputs may increase runtime.

- These models focus on visual reasoning and language, not dedicated object detection or segmentation.

- Always review outputs for accuracy, especially in sensitive or high-stakes applications.