Model Description

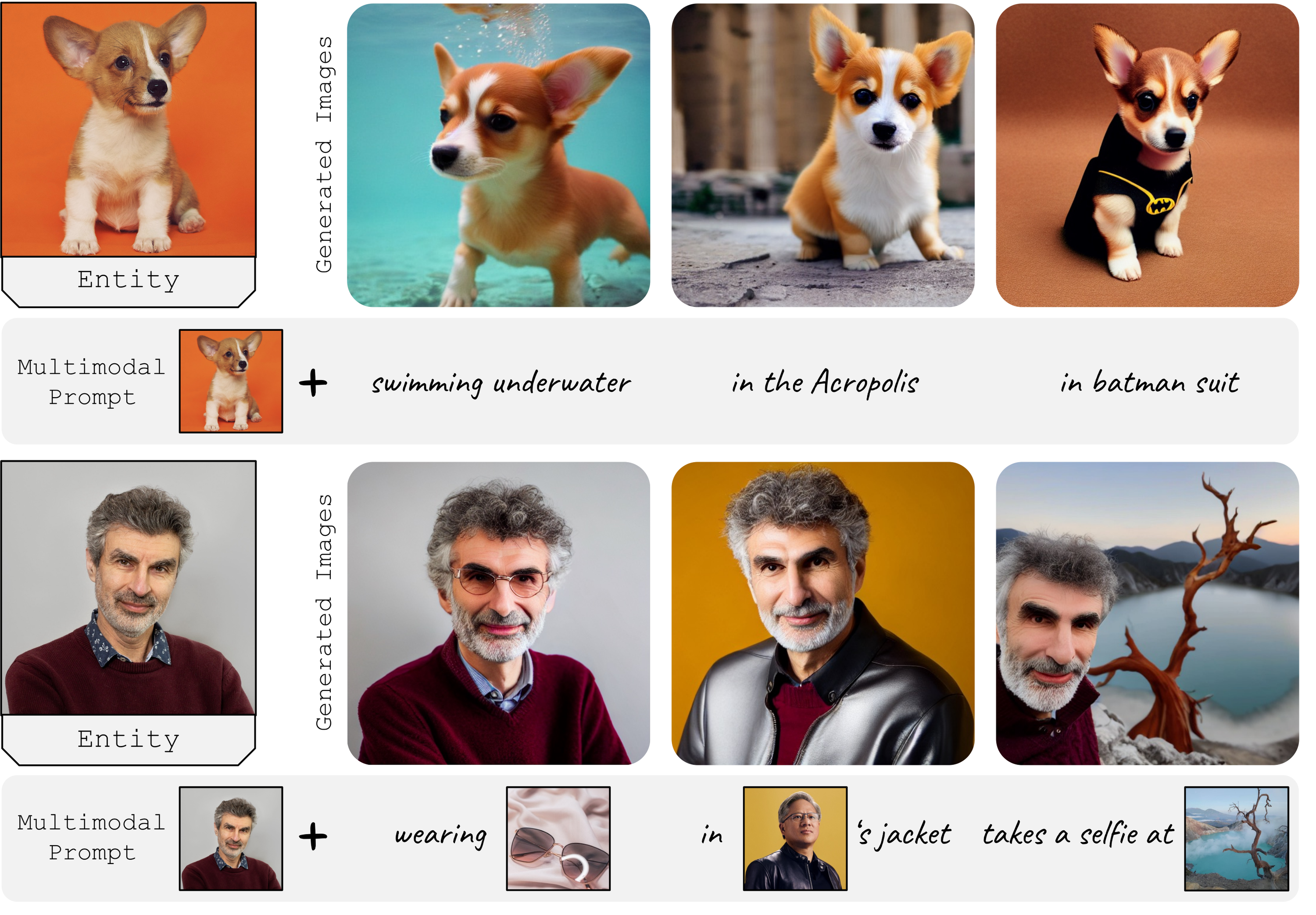

Kosmos-G, by Microsoft, is a multimodal model that generates images from multimodal prompts. In addition to text-driven generation and image personalization, it can produce image variations and mix images out of the box.

Abstract: Recent advancements in text-to-image (T2I) and vision-language-to-image (VL2I) generation have made significant strides. However, the generation from generalized vision-language inputs, especially involving multiple images, remains under-explored. This paper presents Kosmos-G, a model that leverages the advanced perception capabilities of Multimodal Large Language Models (MLLMs) to tackle the aforementioned challenge. Our approach aligns the output space of MLLM with CLIP using the textual modality as an anchor and performs compositional instruction tuning on curated data. Kosmos-G demonstrates a unique capability of zero-shot multi-entity subject-driven generation. Notably, the score distillation instruction tuning requires no modifications to the image decoder. This allows for a seamless substitution of CLIP and effortless integration with a myriad of U-Net techniques ranging from fine-grained controls to personalized image decoder variants. We posit Kosmos-G as an initial attempt towards the goal of “image as a foreign language in image generation.”

See the paper, official repository and project page for more information.

Usage

Kosmos-G expects multimodal input: one or more images plus a text prompt that guides generation. Each image is referenced in the prompt by an `<i>` placeholder, e.g. "`<i>` standing next to `<i>`". You can also pass a `negative_prompt` to steer the diffusion process away from unwanted content.
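A common mistake with placeholder-based prompts is a mismatch between the number of `<i>` markers and the number of images supplied. The sketch below is a hypothetical client-side helper, not part of Kosmos-G or any official API. It assumes that `<i>` markers are filled by the images in order. It also assumes a request payload with `prompt`, `images`, and optional `negative_prompt` fields. Check the actual input names of the deployment you are calling before relying on it.

```python
from typing import Optional, Sequence

# Placeholder token that marks where each input image goes in the prompt.
IMAGE_TOKEN = "<i>"


def build_request(
    prompt: str,
    images: Sequence[str],
    negative_prompt: Optional[str] = None,
) -> dict:
    """Hypothetical helper: verify there is one <i> per image and bundle a payload.

    Assumes images are matched to <i> markers left to right. The payload
    keys ("prompt", "images", "negative_prompt") are illustrative only.
    """
    n_slots = prompt.count(IMAGE_TOKEN)
    if n_slots != len(images):
        raise ValueError(
            f"prompt has {n_slots} {IMAGE_TOKEN} placeholder(s) "
            f"but {len(images)} image(s) were given"
        )
    payload = {"prompt": prompt, "images": list(images)}
    if negative_prompt:
        # Optional text describing content the diffusion process should avoid.
        payload["negative_prompt"] = negative_prompt
    return payload


if __name__ == "__main__":
    req = build_request("<i> standing next to <i>", ["dog.png", "cat.png"],
                        negative_prompt="blurry, low quality")
    print(req)
```

Failing fast on a count mismatch keeps a malformed prompt from being sent, which would otherwise waste a generation run.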

Citation

@article{kosmos-g,
  title={{Kosmos-G}: Generating Images in Context with Multimodal Large Language Models},
  author={Xichen Pan and Li Dong and Shaohan Huang and Zhiliang Peng and Wenhu Chen and Furu Wei},
  journal={ArXiv},
  year={2023},
  volume={abs/2310.02992}
}