
✨Stable Diffusion 3 w/ ⚡InstantX’s Canny, Pose, and Tile ControlNets🖼️

About

An implementation of Stable Diffusion 3 with the InstantX/SD3-Controlnet-Canny, InstantX/SD3-Controlnet-Pose, and InstantX/SD3-Controlnet-Tile ControlNets.

Changelog

- Added InstantX/SD3-Controlnet-Canny.

- PNGs with alpha (transparency) channels are now converted to RGB.

- Added InstantX/SD3-Controlnet-Pose.

- Added InstantX/SD3-Controlnet-Tile. ControlNets are now loaded lazily to stay within GPU memory limits, since loading all three at once is not feasible.
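
The lazy loading mentioned in the last changelog entry could look roughly like the sketch below. It is an illustration under assumptions, not the code this model runs: `LazyControlNetCache` and its `loader` argument are hypothetical names, and the sketch keeps only one ControlNet resident at a time. The repository IDs come from the About section.

```python
from typing import Callable, Dict, Optional

# Repository IDs taken from the About section of this README.
CONTROLNET_REPOS: Dict[str, str] = {
    "canny": "InstantX/SD3-Controlnet-Canny",
    "pose": "InstantX/SD3-Controlnet-Pose",
    "tile": "InstantX/SD3-Controlnet-Tile",
}


class LazyControlNetCache:
    """Keep at most one ControlNet loaded, fetching it on first use.

    Hypothetical helper for illustration only.
    """

    def __init__(self, loader: Callable[[str], object]) -> None:
        # `loader` maps a repository ID to a loaded model. How the model is
        # actually loaded is an assumption left to the caller.
        self._loader = loader
        self._name: Optional[str] = None
        self._model: Optional[object] = None

    def get(self, name: str) -> object:
        if name not in CONTROLNET_REPOS:
            raise ValueError(f"unknown controlnet: {name!r}")
        if name != self._name:
            # Drop the previous model first so its memory can be reclaimed
            # before the next one is loaded.
            self._name, self._model = None, None
            self._model = self._loader(CONTROLNET_REPOS[name])
            self._name = name
        return self._model
```

Requesting the same ControlNet twice in a row triggers only one load; switching to a different one releases the previous model before loading the next.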

Examples

Tile

Here are examples of outputs with different weights applied to the control image:

| Control Image | Weight=0.0 | Weight=0.3 | Weight=0.5 | Weight=0.7 | Weight=0.9 |
|---|---|---|---|---|---|
| | | | | | |

Pose

| Control Image | Weight=0.0 | Weight=0.3 | Weight=0.5 | Weight=0.7 | Weight=0.9 |
|---|---|---|---|---|---|
| | | | | | |

Canny

| Control Image | Weight=0.0 | Weight=0.3 | Weight=0.5 | Weight=0.7 | Weight=0.9 |
|---|---|---|---|---|---|
| | | | | | |
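
To give some intuition for the weight column in the tables above: in ControlNet-style conditioning, the weight generally scales the ControlNet's output before it is added to the base model's activations, so a weight of 0.0 leaves the base model unaffected by the control image. The toy sketch below shows only that arithmetic, with plain lists standing in for tensors. `apply_control` is a hypothetical name, and this is a simplification rather than this model's code.

```python
from typing import List, Sequence

# The weights shown in the example tables.
WEIGHTS = (0.0, 0.3, 0.5, 0.7, 0.9)


def apply_control(
    hidden: Sequence[float], residual: Sequence[float], weight: float
) -> List[float]:
    """Add a control residual to base activations, scaled by `weight`."""
    if len(hidden) != len(residual):
        raise ValueError("hidden and residual must have the same length")
    return [h + weight * r for h, r in zip(hidden, residual)]


# Toy values: the larger the weight, the further the result moves
# away from the unconditioned activations.
hidden = [1.0, 2.0]
residual = [10.0, -10.0]
for w in WEIGHTS:
    print(w, apply_control(hidden, residual, w))
```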

Limitations

Because training used only 1024x1024 images, inference performs best at that resolution; other sizes yield suboptimal results. We plan to start multi-resolution training in the future and will open-source the new weights when they are ready.
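
One way to work within this limitation is to center-crop a control image to a square and then resize it to 1024x1024 before inference. The sketch below computes only the crop box and scale factor. It is an assumption about a reasonable preprocessing step, not documented behavior of this model, and both function names are hypothetical.

```python
from typing import Tuple

TARGET_SIZE = 1024  # training resolution stated in the limitation above


def center_square_box(width: int, height: int) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of the largest centered square."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return left, top, left + side, top + side


def scale_to_target(width: int, height: int) -> float:
    """Factor that maps the cropped square's side to TARGET_SIZE."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    return TARGET_SIZE / min(width, height)


box = center_square_box(1920, 1080)
print(box, scale_to_target(1920, 1080))
```

With an image library such as Pillow, the box could be passed to `Image.crop` and the result to `Image.resize((1024, 1024))`.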

Stable Diffusion 3 Medium is a 2 billion parameter text-to-image model developed by Stability AI. It excels at photorealism, typography, and prompt following.

Stable Diffusion 3 on Replicate can be used for commercial work.

Core Model

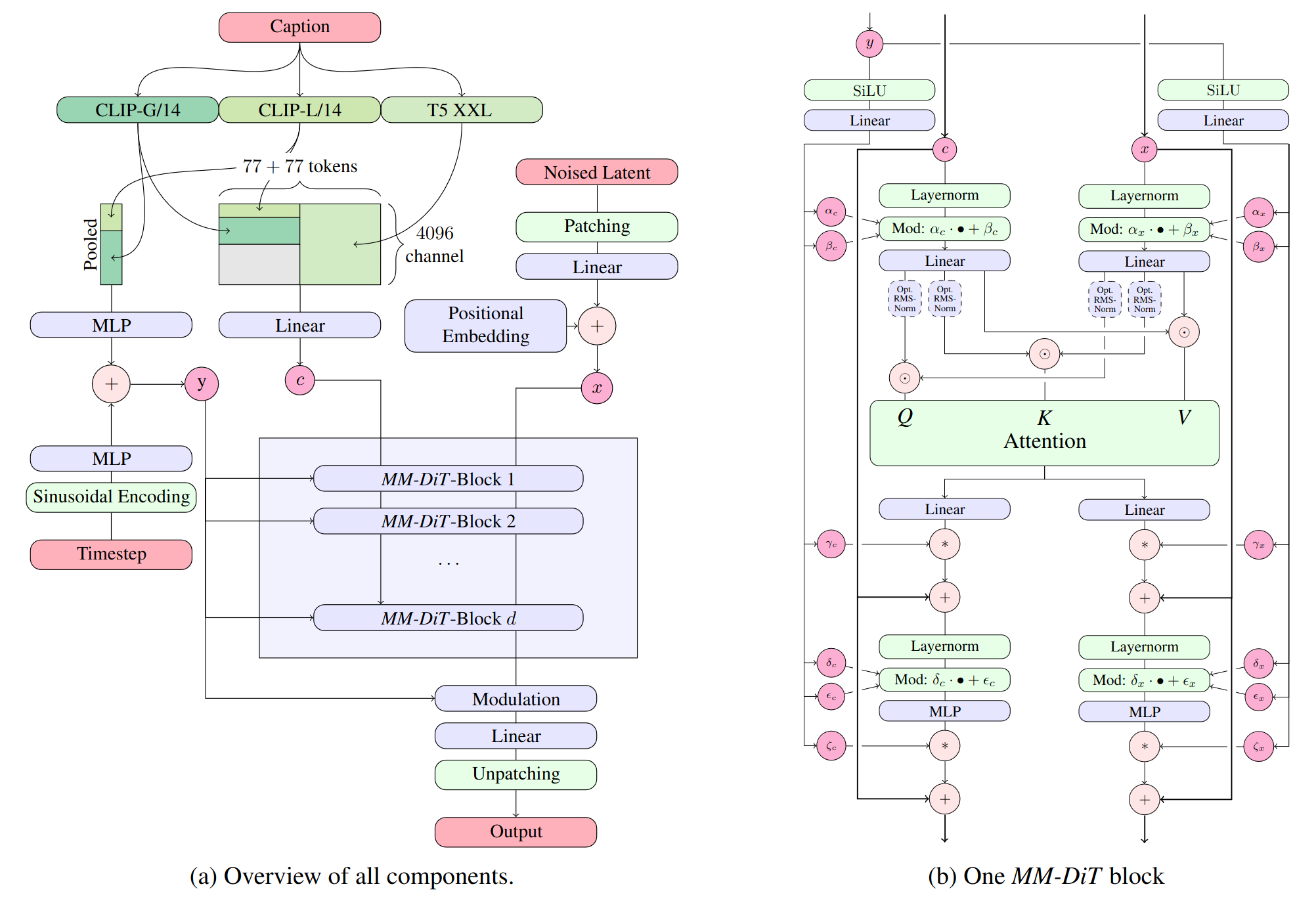

Stable Diffusion 3 Medium is a Multimodal Diffusion Transformer (MMDiT) text-to-image model that features greatly improved performance in image quality, typography, complex prompt understanding, and resource efficiency.

For more technical details, please refer to the research paper.

Safety

As part of our safety-by-design and responsible AI deployment approach, Stability AI implements safety measures throughout the development of our models, from the time we begin pre-training a model to the ongoing development, fine-tuning, and deployment of each model. We have implemented a number of safety mitigations that are intended to reduce the risk of severe harms; however, we recommend that developers conduct their own testing and apply additional mitigations based on their specific use cases.

For more about our approach to safety, please visit our Safety page.

Support

All credit goes to the InstantX team. Give me a follow on Twitter if you like my work! @zsakib_