Deforum x Kandinsky-2.2

Generates videos with Kandinsky-2.2 using one or multiple text prompts and specified animation type/s. Deforum is a community of AI image synthesis developers, enthusiasts, and artists that develop open-source methods for text-to-image and text-to-video generation.

The project description from the original repo is as follows:

The idea of animating a picture is quite simple: from the original 2D image we obtain a pseudo-3D scene and then simulate a camera flyby of this scene. The pseudo-3D effect arises from the way the human eye perceives motion through spatial transformations. Using various motion animation mechanics, we get a sequence of frames that look as if they were shot with a first-person camera. Creating such a sequence of frames is divided into two stages:

- Creating a pseudo-3D scene and simulating a camera flyby (obtaining successive frames)

- Applying an image-to-image pass to further correct the resulting frames
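The two stages can be pictured as a loop in which each new frame is the previous one moved by the virtual camera and then corrected by an image-to-image pass. The sketch below is only an illustration under stated assumptions: the project builds a pseudo-3D scene, whereas this sketch swaps in a plain 2D affine move (rotation and zoom about the image center) for stage 1, and treats stage 2 as an arbitrary callable. The names `center_affine` and `animate` are hypothetical and are not part of this project's code.

```python
import math
from typing import Any, Callable, List

Matrix2x3 = List[List[float]]


def center_affine(width: int, height: int, angle_deg: float, scale: float) -> Matrix2x3:
    """Hypothetical helper: 2x3 affine matrix that rotates by angle_deg and
    scales by `scale` about the image center (same layout as OpenCV's
    getRotationMatrix2D). A 2D stand-in for the pseudo-3D camera move."""
    cx, cy = width / 2.0, height / 2.0
    a = scale * math.cos(math.radians(angle_deg))
    b = scale * math.sin(math.radians(angle_deg))
    return [
        [a, b, (1 - a) * cx - b * cy],
        [-b, a, b * cx + (1 - a) * cy],
    ]


def animate(first_frame: Any, num_frames: int,
            warp: Callable[[Any, int], Any],
            refine: Callable[[Any, int], Any]) -> List[Any]:
    """Hypothetical two-stage loop: each new frame is the previous frame
    moved by the simulated camera (stage 1), then corrected (stage 2)."""
    frames = [first_frame]
    for i in range(1, num_frames):
        moved = warp(frames[-1], i)       # stage 1: simulated camera move
        frames.append(refine(moved, i))   # stage 2: image-to-image correction
    return frames
```

For example, a zoomin step could be approximated by converting `center_affine(w, h, 0.0, 1.02)` to a `numpy` float32 array and passing it to OpenCV's `cv2.warpAffine` inside `warp`, with a diffusion img2img call inside `refine`; both choices are illustrative, not what the project does.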

Using the API

You can use one or multiple text prompts to generate videos. If multiple prompts are entered, they are used in the given order to generate the final video. Note that the animation prompt/s determine the video content, whereas the animations argument determines the type of animation applied to the image generated by each prompt. The API has the following inputs:

- animation_prompts: Prompt/s to generate animation from. If using multiple prompts, separate different prompts with ‘|’.

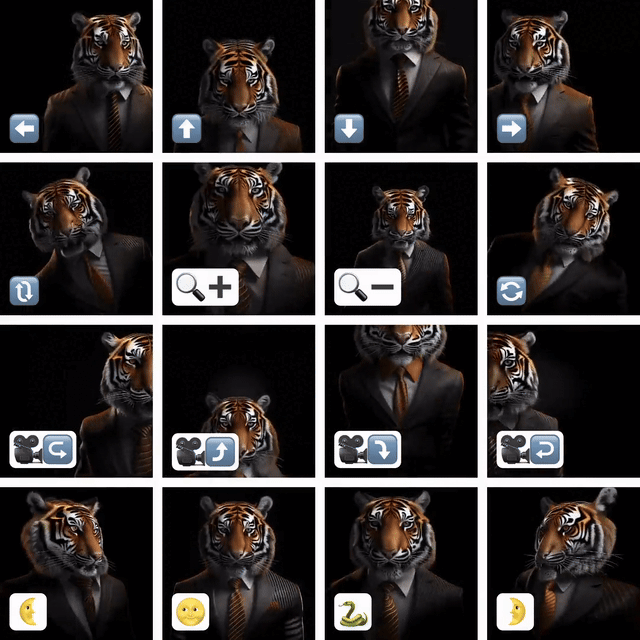

- animations: Animation action/s. Available options are [right, left, up, down, spin_clockwise, spin_counterclockwise, zoomin, zoomout, rotate_right, rotate_left, rotate_up, rotate_down, around_right, around_left, zoomin_sinus_x, zoomout_sinus_y, right_sinus_y, left_sinus_y, flipping_phi, live]. Enter one action per animation prompt and separate actions with ‘|’.

- prompt_durations: Optional. Duration in seconds of each animation prompt; enter int or float values separated by ‘|’. If no value is provided, each prompt gets an equal duration.

- max_frames: Number of frames to generate.

- fps: Video frame rate (frames per second).

- height: Video height in pixels.

- width: Video width in pixels.

- scheduler: Scheduler to use for the diffusion process. Select one of [euler_ancestral, klms, dpm2, dpm2_ancestral, heun, euler, plms, ddim].

- steps: Number of diffusion denoising steps.

- seed: Random seed for generation.
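To make the ‘|’-separated input format concrete, here is a minimal client-side sketch (not part of the API) that splits the inputs, checks that each prompt has exactly one animation from the list above, and assigns a frame count to each prompt. The helper names `split_pipe` and `plan_segments` are hypothetical, and the frame arithmetic is an assumption: a segment lasting d seconds is taken to span round(d * fps) frames, and when no durations are given, max_frames is split as evenly as possible across prompts. How the service itself reconciles max_frames with prompt_durations is not stated here.

```python
from typing import Dict, List, Optional

# Animation names copied from the `animations` input description above.
ALLOWED_ANIMATIONS = {
    "right", "left", "up", "down", "spin_clockwise", "spin_counterclockwise",
    "zoomin", "zoomout", "rotate_right", "rotate_left", "rotate_up",
    "rotate_down", "around_right", "around_left", "zoomin_sinus_x",
    "zoomout_sinus_y", "right_sinus_y", "left_sinus_y", "flipping_phi", "live",
}


def split_pipe(value: str) -> List[str]:
    """Split a '|'-separated input and strip whitespace around each item."""
    return [part.strip() for part in value.split("|")]


def plan_segments(animation_prompts: str, animations: str,
                  max_frames: int, fps: int,
                  prompt_durations: Optional[str] = None) -> List[Dict]:
    """Hypothetical helper: pair each prompt with its animation and a frame count."""
    prompts = split_pipe(animation_prompts)
    actions = split_pipe(animations)
    if len(actions) != len(prompts):
        raise ValueError("enter exactly one animation per prompt")
    unknown = [a for a in actions if a not in ALLOWED_ANIMATIONS]
    if unknown:
        raise ValueError(f"unknown animation(s): {unknown}")

    if prompt_durations:
        durations = [float(d) for d in split_pipe(prompt_durations)]
        if len(durations) != len(prompts):
            raise ValueError("enter one duration per prompt")
        # Assumption: a segment of d seconds spans round(d * fps) frames;
        # this sketch ignores max_frames when durations are given.
        frames = [round(d * fps) for d in durations]
    else:
        # Assumption: equal durations means max_frames split as evenly as possible.
        base, extra = divmod(max_frames, len(prompts))
        frames = [base + (1 if i < extra else 0) for i in range(len(prompts))]

    return [{"prompt": p, "animation": a, "frames": n}
            for p, a, n in zip(prompts, actions, frames)]


plan = plan_segments("a red fox | a snowy forest", "zoomin | live",
                     max_frames=72, fps=24)
# -> [{'prompt': 'a red fox', 'animation': 'zoomin', 'frames': 36},
#     {'prompt': 'a snowy forest', 'animation': 'live', 'frames': 36}]
```

Validating the inputs on the client side like this catches mismatched counts (for example, two prompts but one animation) before a generation run is started.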