Recommended Models

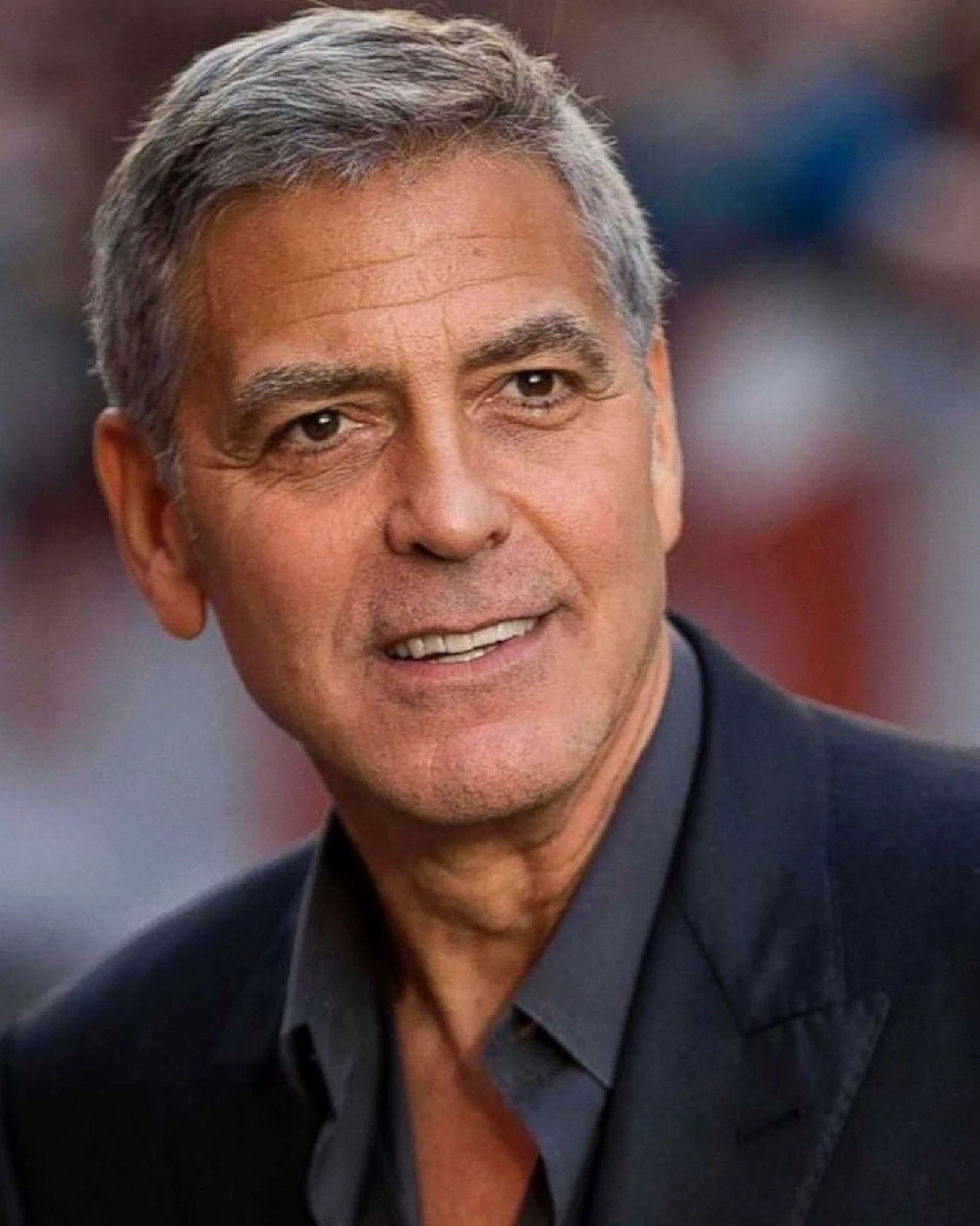

lucataco/flux-content-filter

Flux Content Filter - Check for public figures and copyright concerns

Updated 7 months, 3 weeks ago

165.7K runs

meta/llama-guard-4-12b

Updated 8 months ago

42.9K runs

meta/llama-guard-3-8b

A Llama-3.1-8B pretrained model, fine-tuned for content safety classification

Updated 1 year, 2 months ago

363.1K runs

meta/meta-llama-guard-2-8b

A llama-3 based moderation and safeguarding language model

Updated 1 year, 9 months ago

735K runs

falcons-ai/nsfw_image_detection

Fine-Tuned Vision Transformer (ViT) for NSFW Image Classification

Updated 2 years, 3 months ago

80.9M runs

m1guelpf/nsfw-filter

Run any image through the Stable Diffusion content filter

Updated 3 years, 3 months ago

12M runs